Answered step by step

Verified Expert Solution

Question

1 Approved Answer

Proposition 1.25. Let (N,F, P) be a probability space, H C G C F sub-o-algebras and X, Y, Z, (Yn)nEN random variables with existing

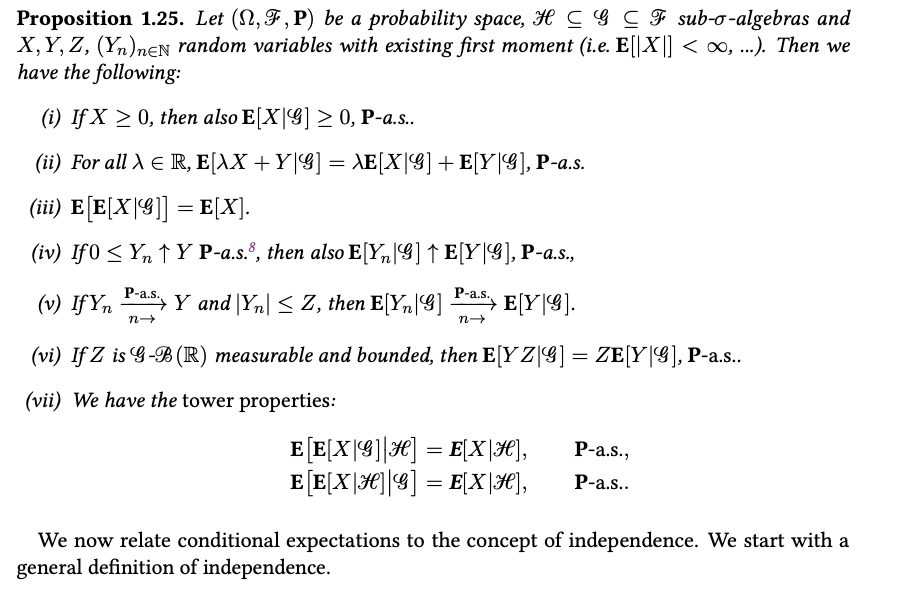

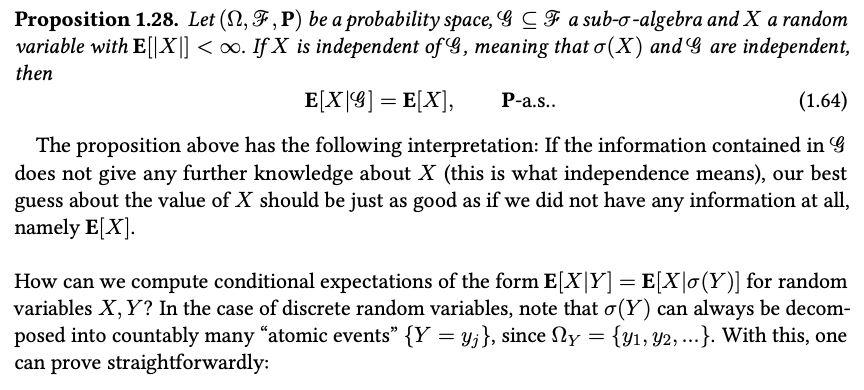

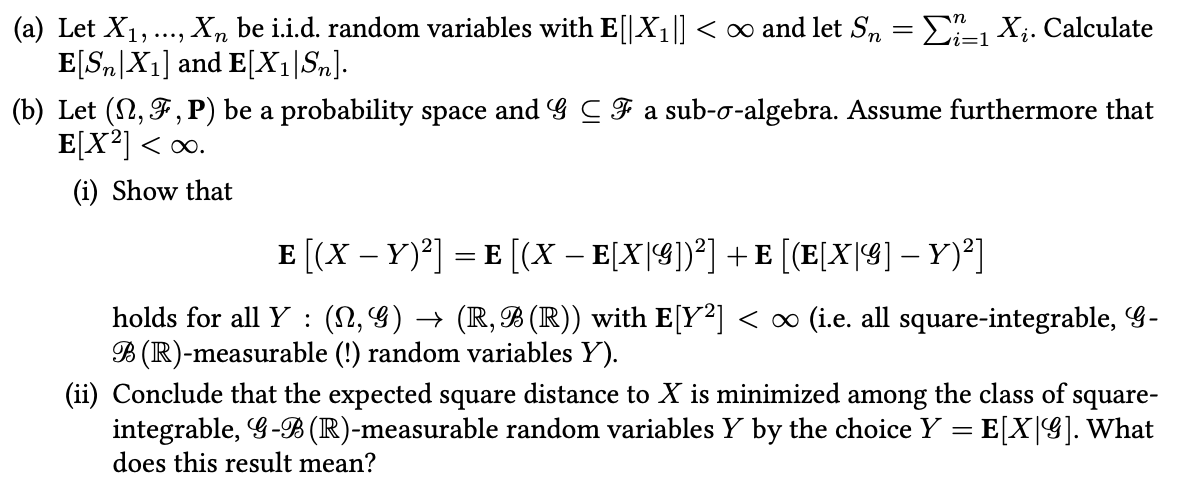

Proposition 1.25. Let (N,F, P) be a probability space, H C G C F sub-o-algebras and X, Y, Z, (Yn)nEN random variables with existing first moment (i.e. E[|X|] < , ...). Then we have the following: (i) If X 0, then also E[X|G] 0, P-a.s.. (ii) For all R, E[\X + Y|G] = \E[X]G] +E[Y|G], P-a.s. (iii) E[E[X|G]] = E[X]. (iv) If 0 Yn Y P-a.s., then also E[Y|G] E[Y|G], P-a.s., (v) If Yn Pas Y and |Yn| Z, then E[YnG] P-a.s., E[YG]. n n (vi) If Z is G-B (R) measurable and bounded, then E[YZ|G] = ZE[Y|G], P-a.s.. (vii) We have the tower properties: E[E[X|G]|H] = E[X|H], P-a.s., E[E[X|H]|G] = E[X|H], P-a.s.. We now relate conditional expectations to the concept of independence. We start with a general definition of independence. Proposition 1.28. Let (N, F, P) be a probability space, & C F a sub-o-algebra and X a random variable with E[|X|] < . If X is independent of G, meaning that (X) and G are independent, then E[X|G] = E[X], P-a.s.. (1.64) The proposition above has the following interpretation: If the information contained in G does not give any further knowledge about X (this is what independence means), our best guess about the value of X should be just as good as if we did not have any information at all, namely E[X]. How can we compute conditional expectations of the form E[X]Y] = E[X]o (Y)] for random variables X, Y? In the case of discrete random variables, note that (Y) can always be decom- posed into countably many "atomic events" {Y = y; }, since y = {1, 2, ...}. With this, one can prove straightforwardly: (a) Let X1, ..., Xn be i.i.d. random variables with E[|X1|] < and let Sn E[Sn|X1] and E[X1|Sn]. = =1 X. Calculate (b) Let (, F, P) be a probability space and G C F a sub--algebra. Assume furthermore that E[X2] < . (i) Show that E [(X Y)] = E [(X E[X|G])] + E [(E[X|G] Y)] holds for all Y (N,G) (R,B(R)) with E[Y] < (i.e. all square-integrable, G- B(R)-measurable (!) random variables Y). (ii) Conclude that the expected square distance to X is minimized among the class of square- integrable, G-B (R)-measurable random variables Y by the choice Y = E[X|G]. What does this result mean?

Step by Step Solution

There are 3 Steps involved in it

Step: 1

Get Instant Access to Expert-Tailored Solutions

See step-by-step solutions with expert insights and AI powered tools for academic success

Step: 2

Step: 3

Ace Your Homework with AI

Get the answers you need in no time with our AI-driven, step-by-step assistance

Get Started