Question: Python Basic Data Science (Machine Learning) PLEASE HELP! WILL UPVOTE AND LEAVE A GREAT REVIEW

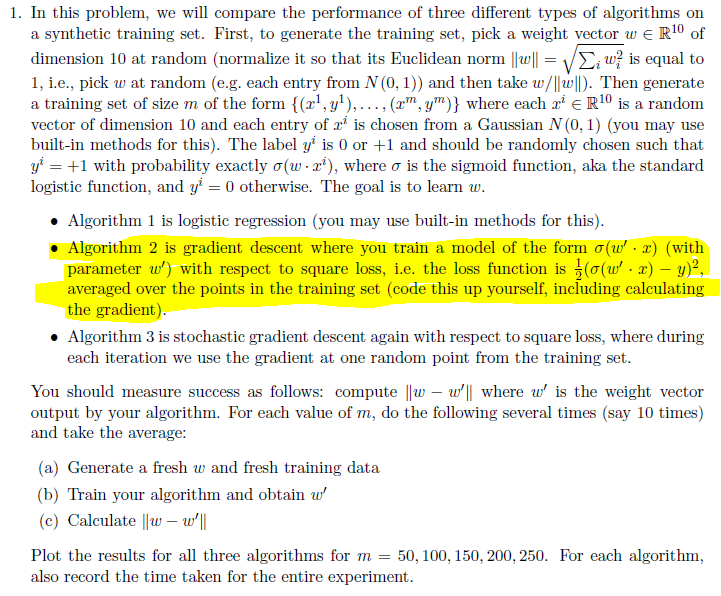

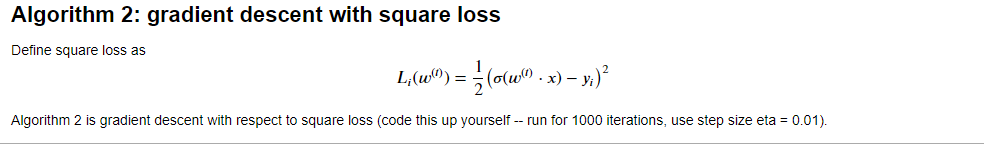

1. In this problem, we will compare the performance of three different types of algorithms on a synthetic training set.

First, to generate the training set, pick a weight vector $w \in \mathbb{R}^{10}$ of dimension 10 at random and normalize it so that its Euclidean norm $\|w\| = \sqrt{\sum_i w_i^2}$ is equal to 1, i.e., pick $w$ at random (e.g. each entry from $N(0,1)$) and then take $w / \|w\|$. Then generate a training set of size $m$ of the form $\{(x^1, y^1), \ldots, (x^m, y^m)\}$, where each $x^i \in \mathbb{R}^{10}$ is a random vector of dimension 10 and each entry of $x^i$ is chosen from a Gaussian $N(0,1)$ (you may use built-in methods for this). The label $y^i$ is 0 or +1 and should be randomly chosen such that $y^i = +1$ with probability exactly $\sigma(w \cdot x^i)$, where $\sigma$ is the sigmoid function, aka the standard logistic function, and $y^i = 0$ otherwise.

The goal is to learn $w$.

Algorithm 1 is logistic regression (you may use built-in methods for this).

Algorithm 2 is gradient descent where you train a model of the form $\sigma(w' \cdot x)$ (with parameter $w'$) with respect to square loss, i.e. the loss function is $\frac{1}{2}(\sigma(w' \cdot x) - y)^2$, averaged over the points in the training set (code this up yourself, including calculating the gradient).

Algorithm 3 is stochastic gradient descent, again with respect to square loss, where during each iteration we use the gradient at one random point from the training set.

You should measure success as follows: compute $\|w - w'\|$, where $w'$ is the weight vector output by your algorithm. For each value of $m$, do the following several times (say 10 times) and take the average:

(a) Generate a fresh $w$ and fresh training data
(b) Train your algorithm and obtain $w'$
(c) Calculate $\|w - w'\|$

Plot the results for all three algorithms for $m = 50, 100, 150, 200, 250$. For each algorithm, also record the time taken for the entire experiment.

Algorithm 2: gradient descent with square loss. Define the square loss on the $i$-th example as $L_i(w) = \frac{1}{2}(\sigma(w \cdot x^i) - y^i)^2$.
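The data-generation step in the problem statement could be sketched roughly as follows. This is only a minimal sketch using NumPy; the names `sigmoid` and `make_dataset` and the fixed seed are illustrative choices, not part of the assignment.

```python
import numpy as np


def sigmoid(z):
    """Standard logistic function."""
    return 1.0 / (1.0 + np.exp(-z))


def make_dataset(m, d=10, rng=None):
    """Draw a unit-norm weight vector w and m labeled points (hypothetical helper)."""
    rng = np.random.default_rng() if rng is None else rng

    # Each entry of w is N(0, 1); dividing by the norm gives ||w|| = 1.
    w = rng.standard_normal(d)
    w /= np.linalg.norm(w)

    # Each entry of every x^i is N(0, 1).
    X = rng.standard_normal((m, d))

    # y^i = 1 with probability sigmoid(w . x^i), otherwise 0.
    p = sigmoid(X @ w)
    y = (rng.random(m) < p).astype(float)
    return w, X, y


# Example: one training set of size m = 50 (the seed is arbitrary).
w_true, X, y = make_dataset(m=50, rng=np.random.default_rng(0))
```

In the full experiment this helper would be called afresh for each of the 10 repetitions at every value of m, so that each run sees a new `w_true` and new training data.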
Algorithm 2 is gradient descent with respect to square loss (code this up yourself -- run for 1000 iterations, use step size eta = 0.01)
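One possible way to code Algorithm 2 is sketched below. It assumes the square loss carries a factor of 1/2, so that the gradient on one example is $(\sigma(w \cdot x^i) - y^i)\,\sigma(w \cdot x^i)\,(1 - \sigma(w \cdot x^i))\,x^i$, and it assumes the weights start at zero; the question specifies neither of these. The function names are illustrative.

```python
import numpy as np


def sigmoid(z):
    """Standard logistic function."""
    return 1.0 / (1.0 + np.exp(-z))


def square_loss(w, X, y):
    """Average of 0.5 * (sigmoid(w . x) - y)^2 over the training set.

    The 0.5 factor is an assumption about the intended loss.
    """
    return 0.5 * np.mean((sigmoid(X @ w) - y) ** 2)


def square_loss_grad(w, X, y):
    """Gradient of square_loss with respect to w, derived by the chain rule."""
    s = sigmoid(X @ w)
    # d/dw 0.5 * (s - y)^2 = (s - y) * s * (1 - s) * x, averaged over points.
    return X.T @ ((s - y) * s * (1.0 - s)) / len(y)


def gradient_descent(X, y, eta=0.01, n_iters=1000):
    """Full-batch gradient descent with the step size and iteration count from the question."""
    w = np.zeros(X.shape[1])  # assumption: zero initialization
    for _ in range(n_iters):
        w -= eta * square_loss_grad(w, X, y)
    return w


# Small demo on synthetic data generated as the problem describes (arbitrary seed).
rng = np.random.default_rng(0)
w_true = rng.standard_normal(10)
w_true /= np.linalg.norm(w_true)
X = rng.standard_normal((200, 10))
y = (rng.random(200) < sigmoid(X @ w_true)).astype(float)

w_hat = gradient_descent(X, y)
error = np.linalg.norm(w_true - w_hat)  # the success measure ||w - w'||
```

For Algorithm 3, the same gradient function could be reused by passing a single randomly chosen row of `X` and its label at each iteration instead of the whole training set.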
