Answered step by step

Verified Expert Solution

Question

1 Approved Answer

Q2. Perceptrons Instead of using Naive Bayes, you decide to try applying Perceptron to the interrogation data. You generate features from the training data

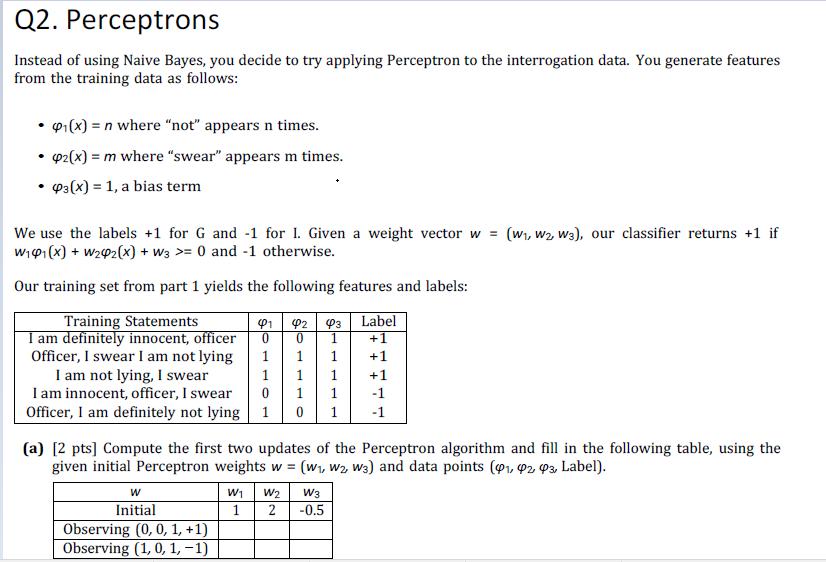

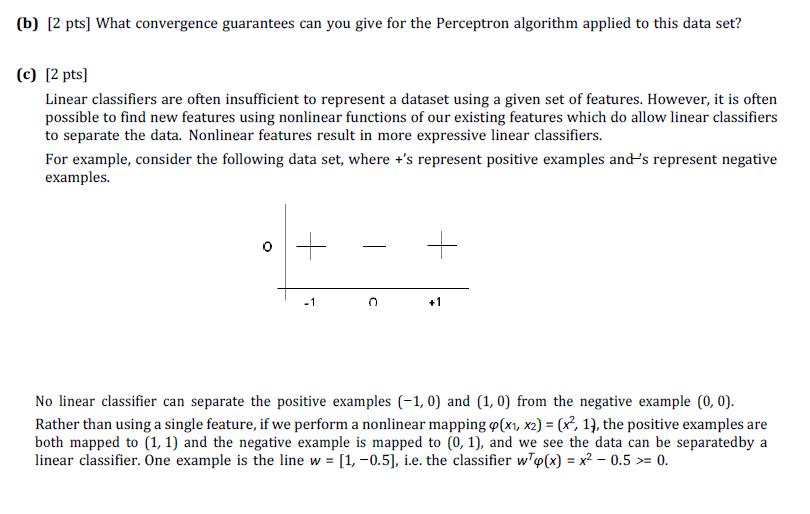

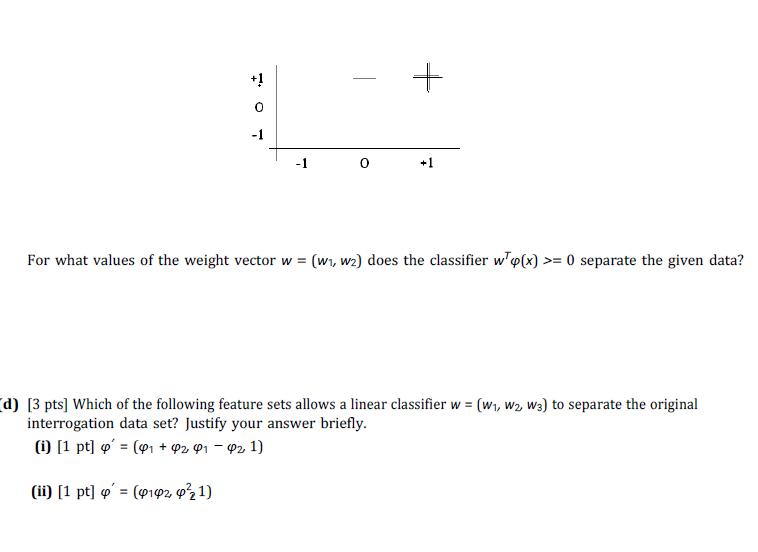

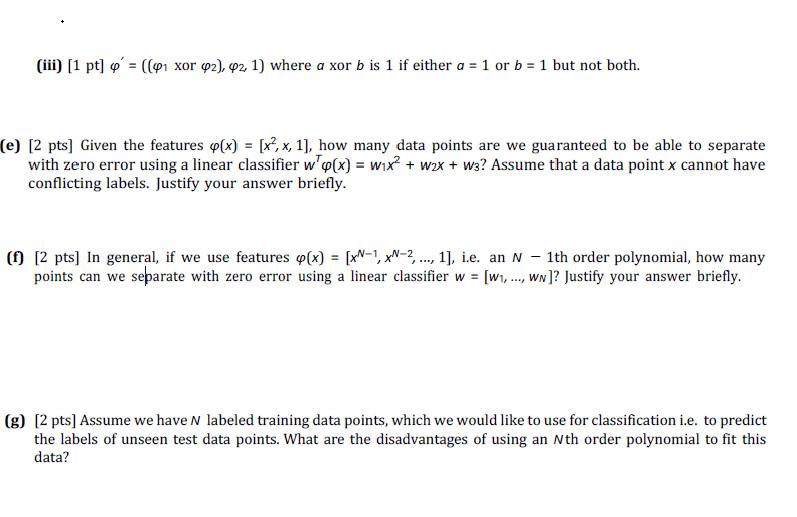

Q2. Perceptrons Instead of using Naive Bayes, you decide to try applying Perceptron to the interrogation data. You generate features from the training data as follows: 91(x) = n where "not" appears n times. v2(x) = m where "swear" appears m times. P3(x) = 1, a bias term We use the labels +1 for G and -1 for 1. Given a weight vector w = (wi, W2, w3), our classifier returns +1 if Wi91 (x) + W242(x) + w3 >= 0 and -1 otherwise. Our training set from part 1 yields the following features and labels: Training Statements I am definitely innocent, officer Officer, I swear I am not lying I am not lying, I swear I am innocent, officer, I swear Officer, I am definitely not lying 1 Label P3 +1 1 1 +1 1 +1 1 1 -1 -1 (a) [2 pts] Compute the first two updates of the Perceptron algorithm and fill in the following table, using the given initial Perceptron weights w = (w1, w2, w3) and data points (P1, 42 P3. Label). W1 W2 W3 Initial 2 -0.5 Observing (0, 0, 1, +1) Observing (1, 0, 1, -1) (b) [2 pts] What convergence guarantees can you give for the Perceptron algorithm applied to this data set? (c) [2 pts] Linear classifiers are often insufficient to represent a dataset using a given set of features. However, it is often possible to find new features using nonlinear functions of our existing features which do allow linear classifiers to separate the data. Nonlinear features result in more expressive linear classifiers. For example, consider the following data set, where +'s represent positive examples and's represent negative examples. -1 +1 No linear classifier can separate the positive examples (-1, 0) and (1, 0) from the negative example (0,0). Rather than using a single feature, if we perform a nonlinear mapping o(x1, x2) = (x, 1}, the positive examples are both mapped to (1, 1) and the negative example is mapped to (0, 1), and we see the data can be separatedby a linear classifier. One example is the line w [1, -0.5], i.e. the classifier w'o(x) = x2 - 0.5 >= 0. %3D + +! +1 For what values of the weight vector w (wi, w2) does the classifier w'o(x) >= 0 separate the given data? d) [3 pts] Which of the following feature sets allows a linear classifier w = (w1, w2 w3) to separate the original interrogation data set? Justify your answer briefly. %3! () [1 pt] p'= (1 + P2 P1 - P2 1) %3D (ii) [1 pt] o' = (p192 oz 1) %3D (ii) [1 pt] o' = (( xor o2), q2 1) where a xor b is 1 if either a = 1 or b = 1 but not both. (e) [2 pts] Given the features o(x) = [x?, x, 1], how many data points are we guaranteed to be able to separate with zero error using a linear classifier w'o(x) = wix? + w2x + w3? Assume that a data pointx cannot have conflicting labels. Justify your answer briefly. () [2 pts] In general, if we use features o(x) = [xN-1, xN-2 ., 1], ie. an N - 1th order polynomial, how many points can we separate with zero error using a linear classifier w = [w,., WN ]? Justify your answer briefly. (g) [2 pts] Assume we have N labeled training data points, which we would like to use for classification i.e. to predict the labels of unseen test data points. What are the disadvantages of using an Nth order polynomial to fit this data?

Step by Step Solution

★★★★★

3.32 Rating (155 Votes )

There are 3 Steps involved in it

Step: 1

Question 1 Nave Bayes Using the training data find the maximum likelihood estimate ofthe parameters they will be the classconditional relative frequen...

Get Instant Access to Expert-Tailored Solutions

See step-by-step solutions with expert insights and AI powered tools for academic success

Step: 2

Step: 3

Document Format ( 1 attachment)

626b9be7ee867_93951.docx

120 KBs Word File

Ace Your Homework with AI

Get the answers you need in no time with our AI-driven, step-by-step assistance

Get Started