Answered step by step

Verified Expert Solution

Question

1 Approved Answer

1. In this question, we consider the relative performance obtained by running a particular program with various caches installed between the memory and the

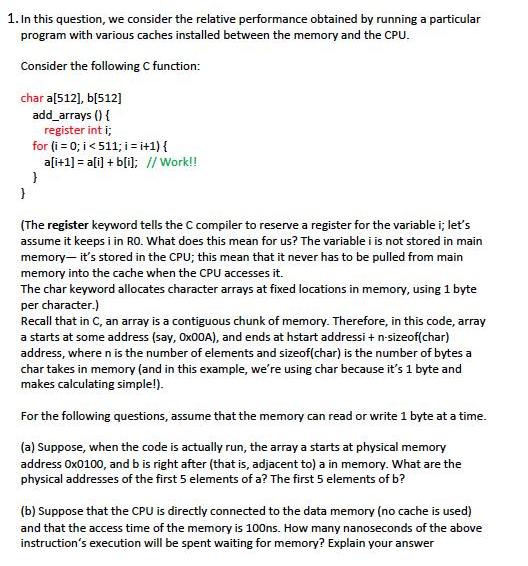

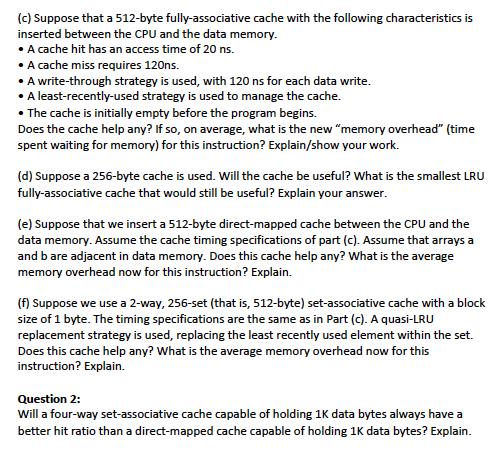

1. In this question, we consider the relative performance obtained by running a particular program with various caches installed between the memory and the CPU. Consider the following C function: char a[512], b[512] add_arrays () { register int i; } for (i = 0; i < 511; i =i+1) { a[i+1] = a[i] + b[i]; // Work!! } (The register keyword tells the C compiler to reserve a register for the variable i; let's assume it keeps i in RO. What does this mean for us? The variable i is not stored in main memory- it's stored in the CPU; this mean that it never has to be pulled from main memory into the cache when the CPU accesses it. The char keyword allocates character arrays at fixed locations in memory, using 1 byte per character.) Recall that in C, an array is a contiguous chunk of memory. Therefore, in this code, array a starts at some address (say, 0x00A), and ends at hstart addressi + n-sizeof(char) address, where n is the number of elements and sizeof(char) is the number of bytes a char takes in memory (and in this example, we're using char because it's 1 byte and makes calculating simple!). For the following questions, assume that the memory can read or write 1 byte at a time. (a) Suppose, when the code is actually run, the array a starts at physical memory address 0x0100, and b is right after (that is, adjacent to) a in memory. What are the physical addresses of the first 5 elements of a? The first 5 elements of b? (b) Suppose that the CPU is directly connected to the data memory (no cache is used) and that the access time of the memory is 100ns. How many nanoseconds of the above instruction's execution will be spent waiting for memory? Explain your answer (c) Suppose that a 512-byte fully-associative cache with the following characteristics is inserted between the CPU and the data memory. A cache hit has an access time of 20 ns. A cache miss requires 120ns. A write-through strategy is used, with 120 ns for each data write. A least-recently-used strategy is used to manage the cache. The cache is initially empty before the program begins. Does the cache help any? If so, on average, what is the new "memory overhead" (time spent waiting for memory) for this instruction? Explain/show your work. (d) Suppose a 256-byte cache is used. Will the cache be useful? What is the smallest LRU fully-associative cache that would still be useful? Explain your answer. (e) Suppose that we insert a 512-byte direct-mapped cache between the CPU and the data memory. Assume the cache timing specifications of part (c). Assume that arrays a and b are adjacent in data memory. Does this cache help any? What is the average memory overhead now for this instruction? Explain. (f) Suppose we use a 2-way, 256-set (that is, 512-byte) set-associative cache with a block size of 1 byte. The timing specifications are the same as in Part (c). A quasi-LRU replacement strategy is used, replacing the least recently used element within the set. Does this cache help any? What is the average memory overhead now for this instruction? Explain. Question 2: Will a four-way set-associative cache capable of holding 1K data bytes always have a better hit ratio than a direct-mapped cache capable of holding 1K data bytes? Explain.

Step by Step Solution

★★★★★

3.62 Rating (181 Votes )

There are 3 Steps involved in it

Step: 1

a Since array a starts at physical memory address 0x0100 the addresses of the first 5 elements of a are 0x0100 element a0 0x0101 element a1 0x0102 element a2 0x0103 element a3 0x0104 element a4 Since ...

Get Instant Access to Expert-Tailored Solutions

See step-by-step solutions with expert insights and AI powered tools for academic success

Step: 2

Step: 3

Ace Your Homework with AI

Get the answers you need in no time with our AI-driven, step-by-step assistance

Get Started