Answered step by step

Verified Expert Solution

Question

1 Approved Answer

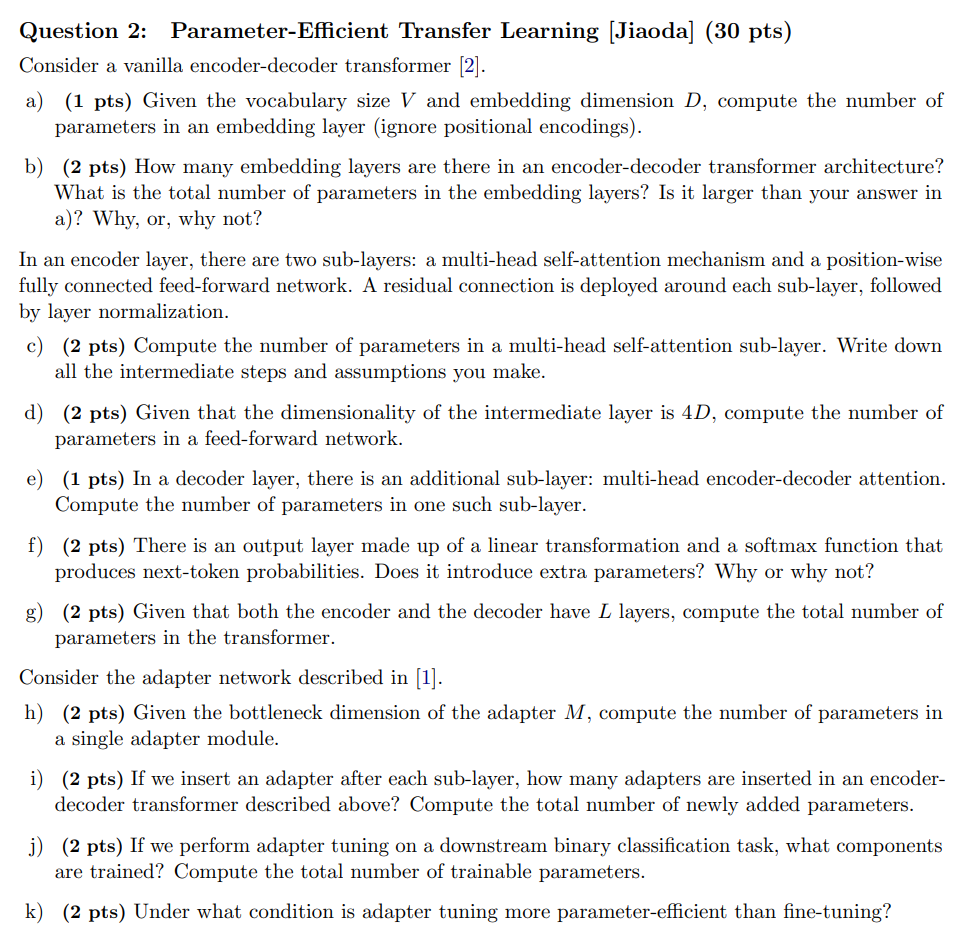

Question 2: Parameter-Efficient Transfer Learning [Jiaoda] (30 pts)

Consider a vanilla encoder-decoder transformer.

(a) (pts) Given the vocabulary size and embedding dimension, compute the number of parameters in an embedding layer (ignore positional encodings).

(b) (pts) How many embedding layers are there in an encoder-decoder transformer architecture? What is the total number of parameters in the embedding layers? Is it larger than your answer in (a)? Why, or why not?

In an encoder layer, there are two sublayers: a multi-head self-attention mechanism and a position-wise fully connected feed-forward network. A residual connection is deployed around each sublayer, followed by layer normalization.

(c) (pts) Compute the number of parameters in a multi-head self-attention sublayer. Write down all the intermediate steps and assumptions you make.

(d) (pts) Given the dimensionality of the intermediate layer, compute the number of parameters in a feed-forward network.

(e) (pts) In a decoder layer, there is an additional sublayer: multi-head encoder-decoder attention. Compute the number of parameters in one such sublayer.

(f) (pts) There is an output layer made up of a linear transformation and a softmax function that produces next-token probabilities. Does it introduce extra parameters? Why or why not?

(g) (pts) Given that the encoder and the decoder have the same number of layers, compute the total number of parameters in the transformer.
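As a way to check hand-derived counts for parts (a) through (g), here is a minimal Python sketch. It is not the official solution. The symbol names `V` (vocabulary size), `d` (embedding dimension), `d_ff` (intermediate feed-forward dimension), and `N` (layers per stack) are assumed here because the original symbols did not survive extraction. The sketch also assumes one layer normalization per sublayer with a gain and a bias, a head dimension of `d / h` so that the count does not depend on the number of heads, and optional weight tying between the two embedding layers and the output projection.

```python
def attention_params(d: int, bias: bool = True) -> int:
    """Query, key, value and output projections, each d x d (plus optional bias)."""
    per_projection = d * d + (d if bias else 0)
    return 4 * per_projection


def feed_forward_params(d: int, d_ff: int, bias: bool = True) -> int:
    """Two linear maps: d -> d_ff and d_ff -> d."""
    up = d * d_ff + (d_ff if bias else 0)
    down = d_ff * d + (d if bias else 0)
    return up + down


def layer_norm_params(d: int) -> int:
    """Assumption: one gain vector and one bias vector of size d."""
    return 2 * d


def transformer_params(V: int, d: int, d_ff: int, N: int,
                       tie_embeddings: bool = True, bias: bool = True) -> int:
    """Total parameters of an encoder-decoder transformer with N layers per stack."""
    attn = attention_params(d, bias)
    ffn = feed_forward_params(d, d_ff, bias)
    ln = layer_norm_params(d)

    encoder_layer = attn + ffn + 2 * ln      # self-attention + feed-forward
    decoder_layer = 2 * attn + ffn + 3 * ln  # extra encoder-decoder attention

    if tie_embeddings:
        # Assumption: one V x d matrix shared by the encoder input embedding,
        # the decoder input embedding and the output projection.
        embeddings_and_output = V * d
    else:
        # Two separate embedding tables plus a separate output projection.
        embeddings_and_output = 3 * V * d + (V if bias else 0)

    return embeddings_and_output + N * (encoder_layer + decoder_layer)
```

Calling `transformer_params(V, d, d_ff, N)` with concrete values gives a number to compare against a closed-form answer; setting `bias=False` or `tie_embeddings=False` shows how much each assumption changes the total.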

Consider the adapter network described in

(h) (pts) Given the bottleneck dimension of the adapter, compute the number of parameters in a single adapter module.

(i) (pts) If we insert an adapter after each sublayer, how many adapters are inserted in the encoder-decoder transformer described above? Compute the total number of newly added parameters.

(j) (pts) If we perform adapter tuning on a downstream binary classification task, what components are trained? Compute the total number of trainable parameters.

(k) (pts) Under what condition is adapter tuning more parameter-efficient than fine-tuning?
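For parts (h) through (j), the following Python sketch may help sanity-check the arithmetic. It is not the official solution and rests on several assumptions. An adapter is taken to be a bottleneck of two linear maps with biases (`d -> m`, then `m -> d`) around a parameter-free nonlinearity and skip connection. The names `d` (model dimension), `m` (bottleneck dimension), and `N` (layers per stack) are assumed here. The classification head is assumed to be a single linear layer from `d` to `num_classes`, and whether layer normalization parameters are also retrained is left as a flag.

```python
def adapter_params(d: int, m: int) -> int:
    """Down-projection (d*m + m) plus up-projection (m*d + d)."""
    return 2 * d * m + m + d


def num_adapters(N: int) -> int:
    """One adapter after each sublayer: 2 per encoder layer, 3 per decoder layer."""
    return 2 * N + 3 * N


def total_adapter_params(d: int, m: int, N: int) -> int:
    return num_adapters(N) * adapter_params(d, m)


def trainable_params(d: int, m: int, N: int, num_classes: int = 2,
                     train_layer_norm: bool = False) -> int:
    """Trainable parameters under adapter tuning (pretrained weights frozen)."""
    # Assumption: the task head is one linear layer d -> num_classes with bias.
    head = d * num_classes + num_classes
    total = total_adapter_params(d, m, N) + head
    if train_layer_norm:
        # Assumption: one layer norm (gain + bias) per sublayer, all retrained.
        total += num_adapters(N) * 2 * d
    return total
```

Comparing `trainable_params(d, m, N)` with the full parameter count of the transformer for a few values of `m` gives a concrete feel for part (k): the adapter cost grows linearly in `m`, so the comparison depends on how small the bottleneck is relative to the model's own layers.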
