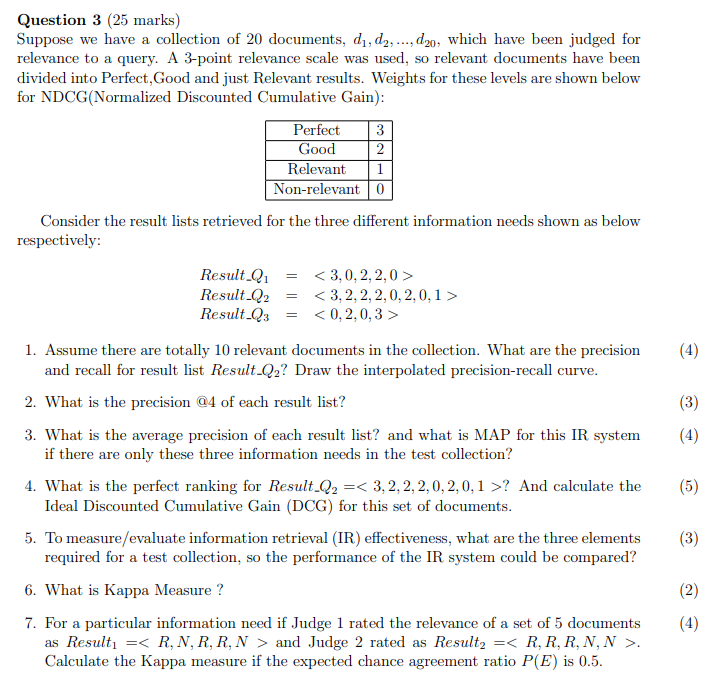

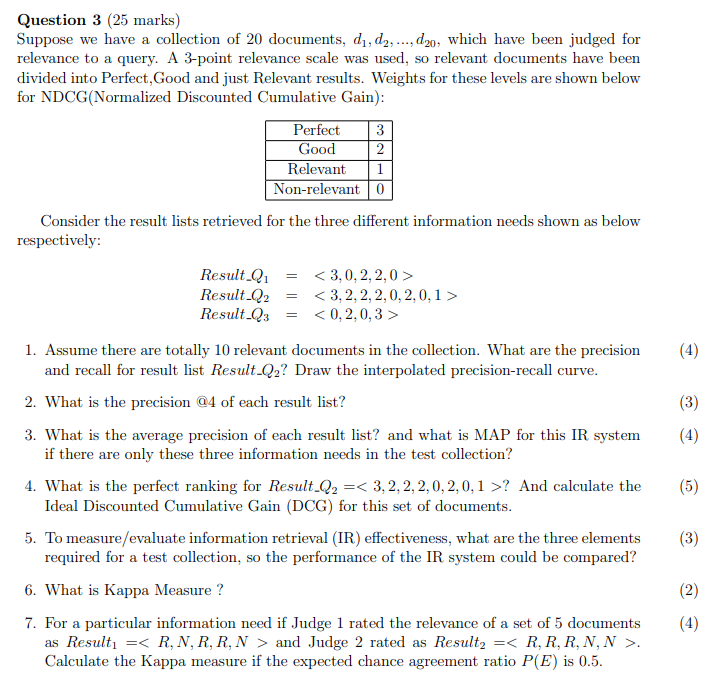

Question 3 (25 marks)

Suppose we have a collection of 20 documents, d1, d2, ..., d20, which have been judged for relevance to a query. A 3-point relevance scale was used, so relevant documents are divided into Perfect, Good, and just Relevant results. The weights of these levels for NDCG (Normalized Discounted Cumulative Gain) are shown below:

| Relevance level | Weight |
| --- | --- |
| Perfect | 3 |
| Good | 2 |
| Relevant | 1 |
| Non-relevant | 0 |

Consider the result lists retrieved for three different information needs, shown below respectively: Result-Q1, Result-Q2, Result-Q3.

1. Assume there are 10 relevant documents in total in the collection. What are the precision and recall for result list Result-Q2? Draw the interpolated precision-recall curve. (4 marks)
2. What is the precision@4 of each result list? (3 marks)
3. What is the average precision of each result list? What is the MAP for this IR system if these three information needs are the only ones in the test collection? (4 marks)
4. What is the perfect ranking for Result-Q2 = ? Also calculate the Ideal Discounted Cumulative Gain (IDCG) for this set of documents. (5 marks)
5. To evaluate information retrieval (IR) effectiveness, what are the three elements required of a test collection so that the performance of IR systems can be compared? (3 marks)
6. What is the Kappa measure? (2 marks)
7. For a particular information need, Judge 1 rated the relevance of a set of 5 documents as Result1 = and Judge 2 rated them as Result2 =. Calculate the Kappa measure if the expected chance agreement ratio P(E) is 0.5. (4 marks)
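The measures named in the question can be sketched in a few lines of Python. This is an illustrative sketch only, not the official marking scheme. The grade list and judge ratings below are hypothetical, because the actual result lists are not reproduced here. Several conventions are also assumptions and should be checked against the course notes: DCG is taken as the sum of rel_i / log2(i + 1), average precision is normalized by the total number of relevant documents in the collection, and Kappa is (P(A) - P(E)) / (1 - P(E)) with P(A) the observed agreement rate.

```python
from math import log2


def precision_recall_at_k(grades, k, total_relevant):
    """Precision and recall over the top-k results; any grade > 0 counts as relevant."""
    hits = sum(1 for g in grades[:k] if g > 0)
    return hits / k, hits / total_relevant


def interpolated_points(grades, total_relevant):
    """(recall, interpolated precision) per rank: the max precision at this or any later rank."""
    points, hits = [], 0
    for rank, g in enumerate(grades, start=1):
        hits += g > 0
        points.append((hits / total_relevant, hits / rank))
    best, out = 0.0, []
    for recall, precision in reversed(points):
        best = max(best, precision)
        out.append((recall, best))
    return out[::-1]


def average_precision(grades, total_relevant):
    """Sum of precision at each relevant rank, divided by total_relevant (assumed convention)."""
    hits, total = 0, 0.0
    for rank, g in enumerate(grades, start=1):
        if g > 0:
            hits += 1
            total += hits / rank
    return total / total_relevant


def mean_average_precision(grade_lists, total_relevant):
    """MAP: the mean of the per-query average precisions."""
    return sum(average_precision(g, total_relevant) for g in grade_lists) / len(grade_lists)


def dcg(grades):
    """Assumed DCG variant: sum of grade / log2(rank + 1)."""
    return sum(g / log2(rank + 1) for rank, g in enumerate(grades, start=1))


def ideal_dcg(grades):
    """DCG of the same documents sorted by descending grade (the perfect ranking)."""
    return dcg(sorted(grades, reverse=True))


def kappa(judge1, judge2, p_e):
    """Kappa from the observed agreement rate P(A) and a given chance agreement P(E)."""
    p_a = sum(a == b for a, b in zip(judge1, judge2)) / len(judge1)
    return (p_a - p_e) / (1 - p_e)


# Hypothetical data, NOT the lists from the exam paper.
example_grades = [3, 0, 2, 1, 0]   # Perfect, Non-relevant, Good, Relevant, Non-relevant
example_judge1 = [1, 1, 0, 1, 0]   # 1 = relevant, 0 = non-relevant
example_judge2 = [1, 0, 0, 1, 0]

print(precision_recall_at_k(example_grades, 4, total_relevant=10))
print(interpolated_points(example_grades, total_relevant=10))
print(average_precision(example_grades, total_relevant=10))
print(dcg(example_grades), ideal_dcg(example_grades))
print(kappa(example_judge1, example_judge2, p_e=0.5))
```

With the hypothetical ratings above, the judges agree on 4 of 5 documents, so P(A) = 0.8 and Kappa is (0.8 - 0.5) / (1 - 0.5) = 0.6. If the course defines DCG with a different discount (for example, no discount at rank 1, or a gain of 2^rel - 1), only the `dcg` function needs to change.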