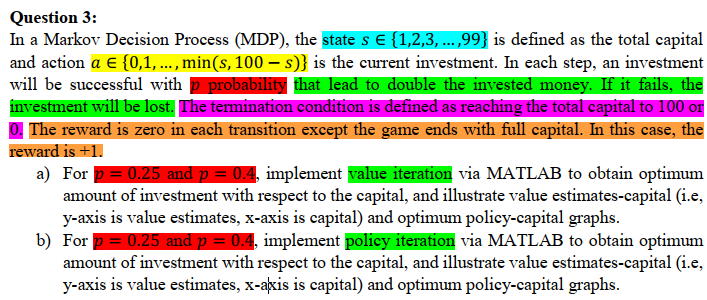

Question 3: In a Markov Decision Process (MDP), the state s ∈ {1, 2, 3, …,

Question:

In a Markov Decision Process (MDP), the state s ∈ {1, 2, 3, …} is defined as the total capital, and the action a ∈ {…, min(…)} is the current investment. In each step, an investment succeeds with probability …, which doubles the invested money. If it fails, the invested money is lost. The termination condition is reaching a total capital of … or …. The reward is zero on every transition except when the game ends with full capital. In this case, the reward is ….
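One way to make this setup concrete is to write its Bellman optimality equation. The symbols below are assumptions, because the original values did not survive: $p$ for the success probability, $N$ for the full capital, $R$ for the terminal reward, $\gamma$ for the discount factor, and investments limited to $\min(s, N-s)$ as in the classic gambler's problem.

$$
V(s) = \max_{1 \le a \le \min(s,\,N-s)} \Big[\, p\,\big(R\,\mathbb{1}[s+a=N] + \gamma V(s+a)\big) + (1-p)\,\gamma V(s-a) \Big], \qquad V(0) = V(N) = 0 .
$$

Under these assumptions, value iteration and policy iteration are two ways of reaching the fixed point of this equation.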

(a) For … and …, implement value iteration in MATLAB to obtain the optimum amount of investment with respect to the capital, and plot the value estimates vs. capital (i.e., one axis is value estimates, the other is capital) and optimum policy vs. capital graphs.
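A minimal value-iteration sketch for part (a) might look like the following. The numeric values of `N`, `p`, `gamma`, and `R` are hypothetical placeholders, since the values given in the question were lost, and the action set `1:min(s, N - s)` is likewise an assumption borrowed from the classic gambler's problem. Substitute the values from the original question before relying on the plots.

```matlab
% Value iteration for the investment MDP (a sketch, not the reference solution).
% ASSUMED placeholder values: replace with the ones given in the question.
N     = 100;     % assumed full (target) capital; capital 0 and N are terminal
p     = 0.4;     % assumed probability that an investment succeeds
gamma = 1.0;     % assumed discount factor
R     = 1;       % assumed reward for ending with full capital
tol   = 1e-10;   % stop when the largest value change falls below this

V      = zeros(1, N + 1);   % V(s + 1) is the value estimate for capital s
policy = zeros(1, N + 1);   % policy(s + 1) is the chosen investment for capital s

while true
    delta = 0;
    for s = 1:N-1
        a = 1:min(s, N - s);                       % assumed action set
        q = p * (R * (s + a == N) + gamma * V(s + a + 1)) ...
            + (1 - p) * gamma * V(s - a + 1);      % action values for every stake
        vNew  = max(q);
        delta = max(delta, abs(vNew - V(s + 1)));
        V(s + 1) = vNew;                           % in-place update
    end
    if delta < tol
        break;
    end
end

% Greedy policy: among (near-)tied stakes, keep the smallest one.
for s = 1:N-1
    a = 1:min(s, N - s);
    q = p * (R * (s + a == N) + gamma * V(s + a + 1)) ...
        + (1 - p) * gamma * V(s - a + 1);
    policy(s + 1) = find(q >= max(q) - 1e-9, 1);
end

% Value estimates vs. capital, and optimum policy vs. capital.
figure;
subplot(2, 1, 1);
plot(1:N-1, V(2:N));
xlabel('Capital'); ylabel('Value estimates');
subplot(2, 1, 2);
stairs(1:N-1, policy(2:N));
xlabel('Capital'); ylabel('Optimum investment');
```

Ties between stakes are common in this kind of problem, so the shape of the policy plot depends on the tie-breaking rule; the sketch keeps the smallest stake, which is only one reasonable choice.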

(b) For … and …, implement policy iteration in MATLAB to obtain the optimum amount of investment with respect to the capital, and plot the value estimates vs. capital (i.e., one axis is value estimates, the other is capital) and optimum policy vs. capital graphs.
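For part (b), a policy-iteration sketch could alternate exact policy evaluation with greedy improvement. As before, `N`, `p`, `gamma`, and `R` are hypothetical placeholders for the values lost from the question, and the action set `1:min(s, N - s)` is an assumption. Evaluating the policy by solving a linear system is a design choice of this sketch; iterative evaluation would also work.

```matlab
% Policy iteration for the investment MDP (a sketch, not the reference solution).
% ASSUMED placeholder values: replace with the ones given in the question.
N     = 100;     % assumed full (target) capital; capital 0 and N are terminal
p     = 0.4;     % assumed probability that an investment succeeds
gamma = 1.0;     % assumed discount factor
R     = 1;       % assumed reward for ending with full capital

policy = [0, ones(1, N - 1), 0];   % initial policy: invest 1 in every non-terminal state
V      = zeros(1, N + 1);          % V(s + 1) is the value estimate for capital s

stable = false;
while ~stable
    % Policy evaluation: solve (I - gamma * P) v = r for the current policy.
    P = zeros(N - 1);              % transitions among non-terminal states 1..N-1
    r = zeros(N - 1, 1);           % expected one-step reward under the policy
    for s = 1:N-1
        a = policy(s + 1);
        if s + a == N
            r(s) = p * R;          % success ends the game with full capital
        else
            P(s, s + a) = p;
        end
        if s - a > 0
            P(s, s - a) = 1 - p;   % failure that does not end the game
        end
    end
    v = (eye(N - 1) - gamma * P) \ r;
    V = [0, v', 0];

    % Policy improvement: switch only on a strict gain, so ties cannot cycle.
    stable = true;
    for s = 1:N-1
        a = 1:min(s, N - s);                       % assumed action set
        q = p * (R * (s + a == N) + gamma * V(s + a + 1)) ...
            + (1 - p) * gamma * V(s - a + 1);
        [qBest, best] = max(q);
        if qBest > q(policy(s + 1)) + 1e-9
            policy(s + 1) = best;
            stable = false;
        end
    end
end

% Value estimates vs. capital, and optimum policy vs. capital.
figure;
subplot(2, 1, 1);
plot(1:N-1, V(2:N));
xlabel('Capital'); ylabel('Value estimates');
subplot(2, 1, 2);
stairs(1:N-1, policy(2:N));
xlabel('Capital'); ylabel('Optimum investment');
```

With the same placeholder values, the value curve should match the one from value iteration, while the policy curve may differ wherever several investments are equally good.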
