Question


QUESTION FIN 34

Martin prepared his final accounts and calculated that his net profit for the year was 7,875. This was before the following errors were discovered:
(i) Rent receivable of 520 was outstanding.
(ii) Sales of 928 had not been recorded.
(iii) A provision for bad debts of 700 should have been created.
(iv) Depreciation of 800 had not been provided for.
(v) An invoice for telephone charges of 120 had not been paid.
(vi) Sales returns of 322 had not been entered.
(vii) Rent of 1,000 shown in the profit and loss account relates to the following year.
(viii) Closing stock had been overvalued by 648.
Show the corrected net profit for the year.
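The corrections are plain additions and subtractions, so a short Python sketch can make the sign of each adjustment explicit. It rests on two assumptions that the question does not state outright: the unpaid telephone invoice in (v) had never been accrued, so it is an extra expense; and the 1,000 of rent in (vii) is rent paid in advance (a prepayment), so it is added back. If (vii) is instead read as rent income received in advance, its sign flips; the `rent_vii_is_expense` flag is a hypothetical switch for that alternative reading.

```python
# Sketch of the FIN 34 net-profit correction (figures taken from the question).
# ASSUMPTIONS: (v) the telephone invoice was never accrued, so it is an extra
# expense; (vii) is read by default as rent expense paid in advance.

DRAFT_NET_PROFIT = 7_875


def adjustments(rent_vii_is_expense=True):
    """Return (description, effect on net profit) for each error (i)-(viii)."""
    # A prepaid expense is added back; rent income received in advance is removed.
    rent_effect = 1_000 if rent_vii_is_expense else -1_000
    return [
        ("(i) rent receivable outstanding", +520),
        ("(ii) sales not recorded", +928),
        ("(iii) provision for bad debts", -700),
        ("(iv) depreciation not provided", -800),
        ("(v) telephone invoice accrued", -120),
        ("(vi) sales returns not entered", -322),
        ("(vii) rent relating to the following year", rent_effect),
        ("(viii) closing stock overvalued", -648),
    ]


def corrected_net_profit(draft, items, show=False):
    """Apply each adjustment in turn and return the corrected net profit."""
    profit = draft
    for description, effect in items:
        profit += effect
        if show:
            print(f"{description:<45}{effect:>+8,}{profit:>10,}")
    return profit


if __name__ == "__main__":
    corrected = corrected_net_profit(DRAFT_NET_PROFIT, adjustments(), show=True)
    print(f"Corrected net profit: {corrected:,}")
```

Under these assumptions the corrected figure is 7,733; with the alternative reading of (vii) it would be 5,733.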

1. Compute the Gini index for the entire data set of Table 10.1 with respect to the two classes. Compute the Gini index for the portion of the data set with age at least 50.

2. Repeat the computation of the previous exercise with the use of the entropy criterion.

3. Show how to construct a (possibly overfitting) rule-based classifier that always exhibits 100% accuracy on the training data. Assume that the feature variables of no two training instances are identical.

4. Design a univariate decision tree with a soft maximum-margin split criterion borrowed from SVMs. Suppose that this decision tree is generalized to the multivariate case. How does the resulting decision boundary compare with SVMs? Which classifier can handle a larger variety of data sets more accurately?

5. Discuss the advantages of a rule-based classifier over a decision tree.

6. Show that an SVM is a special case of a rule-based classifier. Design a rule-based classifier that uses SVMs to create an ordered list of rules.

7. Implement an associative classifier in which only maximal patterns are used for classification, and the majority consequent label of the rules fired is reported as the label of the test instance.

8. Suppose that you had d-dimensional numeric training data, in which it was known that the probability density of d-dimensional data instance X in each class i is proportiona

9. Explain the relationship of mutual exclusiveness and exhaustiveness of a rule set to the need to order the rule set, or the need to set a class as the default class.

10. Consider the rules $\text{Age} > 40 \Rightarrow \text{Donor}$ and $\text{Age} \le 50 \Rightarrow \text{Donor}$. Are these two rules mutually exclusive? Are these two rules exhaustive?

11. For the example of Table 10.1, determine the prior probability of each class. Determine the conditional probability of each class for cases where the Age is at least 50.

12. Implement the naive Bayes classifier.

13. For the example of Table 10.1, provide a single linear separating hyperplane. Is this separating hyperplane unique?

14. Consider a data set containing four points located at the corners of a square. The two points on one diagonal belong to one class, and the two points on the other diagonal belong to the other class. Is this data set linearly separable? Provide a proof.

15. Provide a systematic way to determine whether two classes in a labeled data set are linearly separable.

16. For the soft SVM formulation with hinge loss, show that:

(a) The weight vector is given by the same relationship $W = \sum_{i=1}^{n} \alpha_i y_i X_i$ as for hard SVMs.

(b) The condition $\sum_{i=1}^{n} \alpha_i y_i = 0$ holds as in hard SVMs.

(c) The Lagrangian multipliers satisfy $\alpha_i \le C$.

(d) The Lagrangian dual is identical to that of hard SVMs.

17. Show that it is possible to omit the bias parameter $b$ from the decision boundary of SVMs by suitably preprocessing the data set. In other words, the decision boundary is now $W \cdot X = 0$. What is the impact of eliminating the bias parameter on the gradient ascent approach for Lagrangian dual optimization in SVMs?

18. Show that an $n \times d$ data set can be mean-centered by premultiplying it with the $n \times n$ matrix $(I - U/n)$, where $U$ is the $n \times n$ matrix of all ones. Show that an $n \times n$ kernel matrix $K$ can be adjusted for mean centering of the data in the transformed space by replacing it with $K' = (I - U/n)K(I - U/n)$.

19. Consider two classifiers A and B. On one data set, a 10-fold cross-validation shows that classifier A is better than B by 3%, with a standard deviation of 7% over 100 different folds. On the other data set, classifier B is better than classifier A by 1%, with a standard deviation of 0.1% over 100 different folds. Which classifier would you prefer on the basis of this evidence, and why?

20. Provide a nonlinear transformation which would make the data set of Exercise 14 linearly separable.

21. Let $S_w$ and $S_b$ be defined according to Sect. 10.2.1.3 for the binary class problem. Let the fractional presence of the two classes be $p_0$ and $p_1$, respectively. Show that $S_w + p_0 p_1 S_b$ is equal to the covariance matrix of the data set.
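For Exercise 1 (the Gini index computation), the Gini index of a set of records is $G = 1 - \sum_j p_j^2$, where $p_j$ is the fraction of records in class $j$. Table 10.1 is not reproduced on this page, so the Python sketch below runs on a small hypothetical list of `(age, label)` records; substituting the table's rows would give the requested values, and the age filter covers the second part.

```python
from collections import Counter


def gini_index(labels):
    """Gini index 1 - sum_j p_j**2 of a non-empty sequence of class labels."""
    n = len(labels)
    if n == 0:
        raise ValueError("Gini index is undefined for an empty set")
    counts = Counter(labels)
    return 1.0 - sum((c / n) ** 2 for c in counts.values())


# Hypothetical (age, class) records; these are NOT the rows of Table 10.1.
records = [(35, "Donor"), (52, "Donor"), (61, "Non-donor"),
           (47, "Non-donor"), (58, "Donor"), (29, "Non-donor")]

whole = gini_index([label for _, label in records])
age_50_plus = gini_index([label for age, label in records if age >= 50])
print(f"Gini (all records): {whole:.4f}")
print(f"Gini (age >= 50):   {age_50_plus:.4f}")
```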
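For Exercise 2 (the entropy criterion), the impurity measure becomes $E = -\sum_j p_j \log_2 p_j$ over the class fractions $p_j$. A Python sketch on hypothetical records (not Table 10.1):

```python
import math
from collections import Counter


def entropy(labels):
    """Class entropy -sum_j p_j * log2(p_j) of a non-empty sequence of labels."""
    n = len(labels)
    if n == 0:
        raise ValueError("entropy is undefined for an empty set")
    counts = Counter(labels)
    # Classes with zero count never appear in the Counter, so log2(0) is never taken.
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


# Hypothetical (age, class) records; these are NOT the rows of Table 10.1.
records = [(35, "Donor"), (52, "Donor"), (61, "Non-donor"),
           (47, "Non-donor"), (58, "Donor"), (29, "Non-donor")]

all_labels = [label for _, label in records]
older_labels = [label for age, label in records if age >= 50]
print(f"Entropy (all records): {entropy(all_labels):.4f}")
print(f"Entropy (age >= 50):   {entropy(older_labels):.4f}")
```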
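For Exercise 3 (a rule-based classifier with 100% training accuracy), one construction is to memorize the training set: each training instance becomes a rule whose antecedent is the conjunction of equality tests on all of its feature values and whose consequent is its class. Because no two instances share the same feature values, every training instance fires exactly its own rule; unseen points fall through to a default class. A Python sketch, where the `(conditions, label)` rule representation and the `default` argument are illustrative choices:

```python
def build_rules(X, y):
    """One rule per training instance: IF x[0]==v0 AND ... AND x[d-1]==v_(d-1) THEN label."""
    rules = []
    for features, label in zip(X, y):
        conditions = tuple(enumerate(features))  # ((feature_index, value), ...)
        rules.append((conditions, label))
    return rules


def fires(conditions, x):
    """True when every equality test in the antecedent holds for x."""
    return all(x[j] == value for j, value in conditions)


def classify(rules, x, default=None):
    """Return the consequent of the first rule that fires, else the default class."""
    for conditions, label in rules:
        if fires(conditions, x):
            return label
    return default


# Hypothetical training data with distinct feature vectors.
X = [(25, "low"), (40, "high"), (60, "high")]
y = ["Non-donor", "Donor", "Donor"]
rules = build_rules(X, y)
train_accuracy = sum(classify(rules, x) == label for x, label in zip(X, y)) / len(y)
print(train_accuracy)
```

On the training data these rules are mutually exclusive, so their order does not matter there; it only matters through the default class for unseen points.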
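For Exercise 7 (an associative classifier using maximal patterns), a minimal sketch of the classification step only, assuming the class-association rules have already been mined (the rule list below is hypothetical). It treats a rule's antecedent as maximal when no other rule's antecedent strictly contains it, discards the non-maximal rules, and returns the majority consequent among the remaining rules that fire. Ties go to the label that fired first, which is an arbitrary choice.

```python
from collections import Counter


def maximal_rules(rules):
    """Keep only rules whose antecedent is not a proper subset of another antecedent."""
    antecedents = [frozenset(a) for a, _ in rules]
    kept = []
    for a, label in rules:
        a = frozenset(a)
        if not any(a < other for other in antecedents):
            kept.append((a, label))
    return kept


def classify(rules, transaction, default=None):
    """Majority consequent of the maximal rules whose antecedent is contained in the test instance."""
    items = frozenset(transaction)
    fired = [label for a, label in maximal_rules(rules) if a <= items]
    if not fired:
        return default
    # Counter.most_common orders equal counts by first occurrence, so ties go to the first label fired.
    return Counter(fired).most_common(1)[0][0]


# Hypothetical mined rules: (antecedent itemset, class label).
rules = [({"a"}, "X"), ({"a", "b"}, "Y"), ({"c"}, "X"), ({"b", "d"}, "Y")]
print(classify(rules, {"a", "b", "d"}))
print(classify(rules, {"a", "c"}))
```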
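For Exercise 12 (implementing naive Bayes), a minimal categorical naive Bayes in Python. It assumes discrete feature values and uses Laplace smoothing controlled by a hypothetical `alpha` parameter; numeric attributes such as Age would need discretization or a per-class Gaussian model instead, which this sketch does not implement. Scores are summed in log space to avoid underflow.

```python
import math
from collections import Counter


class CategoricalNaiveBayes:
    """Naive Bayes for discrete features with Laplace (add-alpha) smoothing."""

    def __init__(self, alpha=1.0):
        self.alpha = alpha

    def fit(self, X, y):
        self.n = len(y)
        self.class_counts = Counter(y)
        d = len(X[0])
        # value_counts[c][j][v]: number of class-c records whose feature j equals v
        self.value_counts = {c: [Counter() for _ in range(d)] for c in self.class_counts}
        self.values_seen = [set() for _ in range(d)]
        for x, c in zip(X, y):
            for j, v in enumerate(x):
                self.value_counts[c][j][v] += 1
                self.values_seen[j].add(v)
        return self

    def log_scores(self, x):
        """log P(c) + sum_j log P(x_j | c) for every class c (unnormalized)."""
        scores = {}
        for c, n_c in self.class_counts.items():
            score = math.log(n_c / self.n)
            for j, v in enumerate(x):
                k = len(self.values_seen[j])  # number of distinct values of feature j
                score += math.log((self.value_counts[c][j][v] + self.alpha)
                                  / (n_c + self.alpha * k))
            scores[c] = score
        return scores

    def predict(self, x):
        scores = self.log_scores(x)
        return max(scores, key=scores.get)


# Hypothetical training data: (age band, salary band) -> class.
X = [("young", "low"), ("young", "high"), ("old", "high"), ("old", "high")]
y = ["Non-donor", "Non-donor", "Donor", "Donor"]
model = CategoricalNaiveBayes().fit(X, y)
print(model.predict(("old", "high")))
```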
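For Exercise 14 (the square with diagonal classes), a short contradiction argument. Place the corners at $(\pm 1, \pm 1)$; any square can be mapped onto this one by an invertible affine map, which sends lines to lines and so preserves linear separability. Label $(1,1)$ and $(-1,-1)$ as class $+1$ and $(1,-1)$ and $(-1,1)$ as class $-1$, and suppose $w_1 x_1 + w_2 x_2 + b$ is positive on class $+1$ and negative on class $-1$:

```latex
\begin{aligned}
(1,1),\ (-1,-1) &: \quad w_1 + w_2 + b > 0, \quad -w_1 - w_2 + b > 0 \;\Longrightarrow\; 2b > 0,\\
(1,-1),\ (-1,1) &: \quad w_1 - w_2 + b < 0, \quad -w_1 + w_2 + b < 0 \;\Longrightarrow\; 2b < 0.
\end{aligned}
```

Adding each pair of inequalities cancels $w_1$ and $w_2$, and the two conclusions contradict each other, so no separating line exists.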
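For Exercise 15 (a systematic separability test), one standard approach, not necessarily the one the exercise intends, is a linear-programming feasibility check with labels $y_i \in \{-1, +1\}$. The classes are linearly separable exactly when the following system has a solution, which any LP solver can decide:

```latex
\text{find } W \in \mathbb{R}^{d},\; b \in \mathbb{R}
\quad \text{such that} \quad
y_i \,(W \cdot X_i + b) \ge 1, \qquad i = 1, \ldots, n.
```

The margin of 1 loses no generality: if some $(W, b)$ separates the finite data set strictly, let $m = \min_i y_i (W \cdot X_i + b) > 0$; then $(W/m, b/m)$ satisfies every constraint.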
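For Exercise 16 (the soft SVM dual), a sketch of the derivation under one common setup; the book's own section may scale the penalty differently. The soft-margin primal minimizes $\frac{1}{2}\|W\|^2 + C \sum_{i=1}^{n} \xi_i$ subject to $y_i (W \cdot X_i + b) \ge 1 - \xi_i$ and $\xi_i \ge 0$, with multipliers $\alpha_i \ge 0$ and $\beta_i \ge 0$ for the two families of constraints:

```latex
\begin{aligned}
L_P &= \tfrac{1}{2}\|W\|^2 + C\sum_{i=1}^{n}\xi_i
      - \sum_{i=1}^{n}\alpha_i\bigl[y_i(W\cdot X_i + b) - 1 + \xi_i\bigr]
      - \sum_{i=1}^{n}\beta_i\xi_i \\
\frac{\partial L_P}{\partial W} = 0 &\;\Longrightarrow\; W = \sum_{i=1}^{n}\alpha_i y_i X_i \\
\frac{\partial L_P}{\partial b} = 0 &\;\Longrightarrow\; \sum_{i=1}^{n}\alpha_i y_i = 0 \\
\frac{\partial L_P}{\partial \xi_i} = 0 &\;\Longrightarrow\; \alpha_i = C - \beta_i \le C \\
L_D &= \sum_{i=1}^{n}\alpha_i - \tfrac{1}{2}\sum_{i=1}^{n}\sum_{j=1}^{n}\alpha_i\alpha_j y_i y_j\, X_i\cdot X_j
\end{aligned}
```

Substituting these conditions, the slack terms collect into $\sum_i (C - \alpha_i - \beta_i)\xi_i = 0$, so $L_D$ has the same form as the hard-SVM dual; the only trace of $C$ is the box constraint $0 \le \alpha_i \le C$.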
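For Exercise 17 (removing the bias), the usual preprocessing is to append a constant feature (here 1) to every point, so that the bias becomes one more weight: $W \cdot X + b = W' \cdot X'$ with $W' = (W, b)$ and $X' = (X, 1)$. A commonly noted consequence is that the dual constraint $\sum_i \alpha_i y_i = 0$, which comes from differentiating with respect to $b$, disappears, so gradient ascent only has to keep each $\alpha_i$ within its bounds; $b$ is also regularized along with the other weights, so the learned boundary can differ slightly. The weights and point below are hypothetical.

```python
def augment(x, constant=1.0):
    """Append a constant feature so the bias can be learned as an ordinary weight."""
    return list(x) + [constant]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


# Hypothetical weight vector and bias of an existing decision boundary.
W, b = [0.5, -2.0], 3.0
W_aug = W + [b]  # the bias becomes the weight on the constant feature

x = [4.0, 1.5]
with_bias = dot(W, x) + b
without_bias = dot(W_aug, augment(x))
print(with_bias, without_bias)
```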
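For Exercise 18 (mean-centering by matrix multiplication), a numerical check in plain Python with no external libraries. The matrix $I - U/n$ removes the column means of an $n \times d$ data matrix $D$, and with a linear kernel $K = DD^T$ the adjusted matrix $(I - U/n)K(I - U/n)$ equals the Gram matrix of the centered data, because $I - U/n$ is symmetric. The data values are hypothetical, and a check on one example is not a proof.

```python
def centering_matrix(n):
    """Return I - U/n, where U is the n x n matrix of all ones."""
    return [[(1.0 if i == j else 0.0) - 1.0 / n for j in range(n)] for i in range(n)]


def matmul(A, B):
    """Plain-Python product of two matrices given as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def transpose(A):
    return [list(row) for row in zip(*A)]


def center_data(D):
    """Premultiply the n x d data matrix by I - U/n to subtract each column mean."""
    return matmul(centering_matrix(len(D)), D)


def center_kernel(K):
    """Adjust an n x n kernel matrix to K' = (I - U/n) K (I - U/n)."""
    C = centering_matrix(len(K))
    return matmul(matmul(C, K), C)


# Hypothetical 3 x 2 data set; a linear kernel makes the comparison explicit.
D = [[1.0, 2.0], [3.0, 6.0], [5.0, 1.0]]
Dc = center_data(D)
column_sums = [sum(row[j] for row in Dc) for j in range(len(D[0]))]
K_centered = center_kernel(matmul(D, transpose(D)))
gram_of_centered = matmul(Dc, transpose(Dc))
print(column_sums)
```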
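For Exercise 20 (a nonlinear transformation for the square of Exercise 14), one map that works is to add the product feature $x_1 x_2$. Assuming the corners sit at $(\pm 1, \pm 1)$, with one diagonal labelled $+1$ and the other $-1$ (an arbitrary choice), $x_1 x_2 = +1$ on the first diagonal and $-1$ on the second, so the plane $z_3 = 0$ in the transformed space separates the classes. A small Python check:

```python
# Assumed coordinates: corners of the square at (+-1, +-1), one diagonal per class.
SQUARE = {(1, 1): +1, (-1, -1): +1, (1, -1): -1, (-1, 1): -1}


def phi(x1, x2):
    """Nonlinear map (x1, x2) -> (x1, x2, x1 * x2)."""
    return (x1, x2, x1 * x2)


def predict(z, w=(0.0, 0.0, 1.0), b=0.0):
    """Linear classifier sign(w . z + b) in the transformed space."""
    score = sum(wi * zi for wi, zi in zip(w, z)) + b
    return 1 if score > 0 else -1


for point, label in SQUARE.items():
    print(point, "->", phi(*point), "predicted", predict(phi(*point)), "true", label)
```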

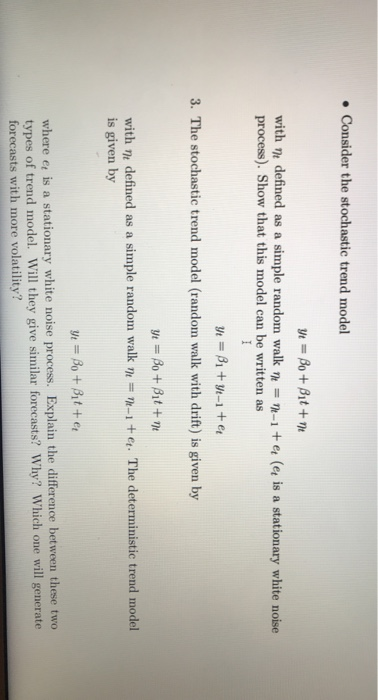

Consider the stochastic trend model $Y_t = \beta_0 + \beta_1 t + \eta_t$, with $\eta_t$ defined as a simple random walk $\eta_t = \eta_{t-1} + e_t$, where $e_t$ is a stationary white noise process. Show that this model can be written as $Y_t = \beta_1 + Y_{t-1} + e_t$.

The stochastic trend model (random walk with drift) is given by $Y_t = \beta_0 + \beta_1 t + \eta_t$, with $\eta_t = \eta_{t-1} + e_t$ a simple random walk. The deterministic trend model is given by $Y_t = \beta_0 + \beta_1 t + e_t$, where $e_t$ is a stationary white noise process. Explain the difference between these two types of trend model. Will they give similar forecasts? Why? Which one will generate forecasts with more volatility?
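A simulation sketch can confirm the rewriting numerically. It assumes $t$ starts at 0, that $\eta$ is 0 before the first shock, Gaussian white noise, and hypothetical values for $\beta_0$, $\beta_1$ and $\sigma$. For each $t \ge 1$ the stochastic-trend series should satisfy $Y_t = \beta_1 + Y_{t-1} + e_t$ up to floating-point rounding, since $Y_t - Y_{t-1} = \beta_1 + \eta_t - \eta_{t-1} = \beta_1 + e_t$. The two forecast helpers give the $h$-step-ahead point forecasts under each model when the parameters are treated as known.

```python
import random


def simulate(beta0, beta1, n, sigma=1.0, seed=0):
    """Return (e, stochastic_trend, deterministic_trend) series of length n."""
    rng = random.Random(seed)
    e = [rng.gauss(0.0, sigma) for _ in range(n)]  # white noise e_t
    eta, level = [], 0.0
    for shock in e:
        level += shock                             # eta_t = eta_{t-1} + e_t
        eta.append(level)
    stochastic = [beta0 + beta1 * t + eta[t] for t in range(n)]
    deterministic = [beta0 + beta1 * t + e[t] for t in range(n)]
    return e, stochastic, deterministic


def forecast_deterministic(beta0, beta1, last_t, h):
    """h-step point forecast beta0 + beta1 * (T + h) for the deterministic trend."""
    return beta0 + beta1 * (last_t + h)


def forecast_stochastic(y_last, beta1, h):
    """h-step point forecast Y_T + h * beta1 for the random walk with drift."""
    return y_last + h * beta1


BETA0, BETA1, N = 2.0, 0.5, 200  # hypothetical parameters
e, y_stoch, y_det = simulate(BETA0, BETA1, N)
max_gap = max(abs(y_stoch[t] - (BETA1 + y_stoch[t - 1] + e[t])) for t in range(1, N))
print(f"largest deviation from Y_t = beta1 + Y_(t-1) + e_t: {max_gap:.2e}")
```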
