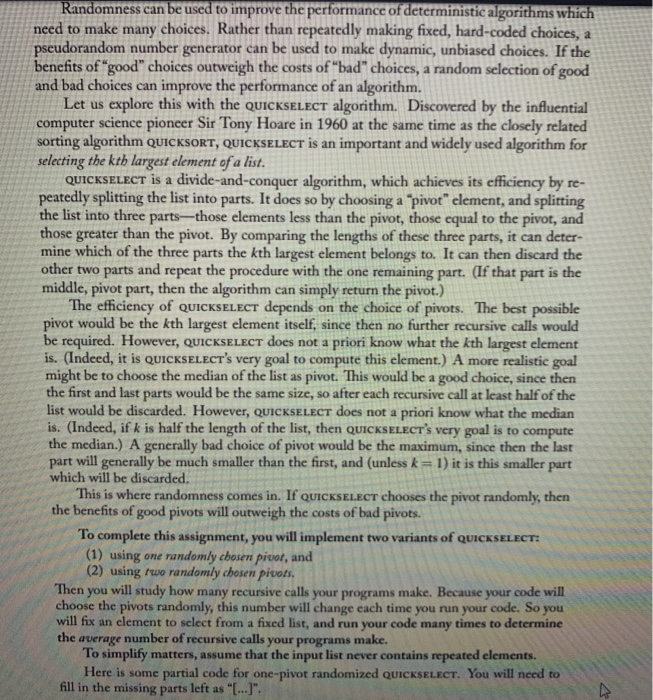

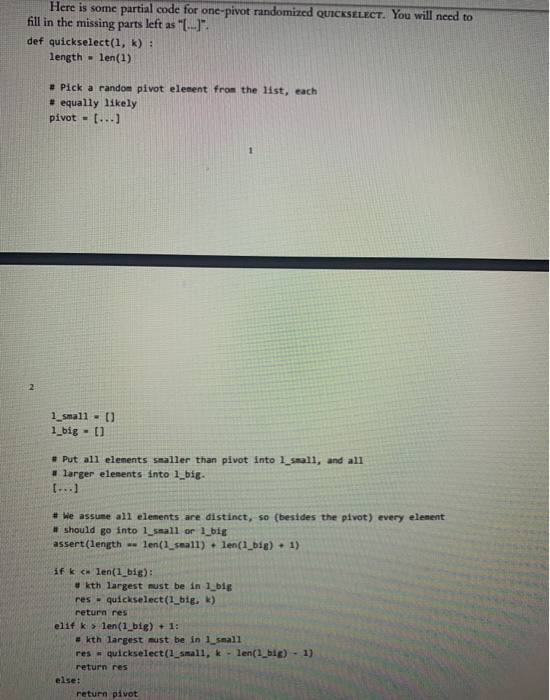

Randomness can be used to improve the performance of deterministic algorithms which need to make many choices. Rather than repeatedly making fixed, hard-coded choices, a pseudorandom number generator can be used to make dynamic, unbiased choices. If the benefits of "good" choices outweigh the costs of "bad" choices, a random selection of good and bad choices can improve the performance of an algorithm. Let us explore this with the QUICKSELECT algorithm. Discovered by the influential computer science pioneer Sir Tony Hoare in 1960 at the same time as the closely related sorting algorithm QUICKSORT, QUICKSELECT is an important and widely used algorithm for selecting the kth largest element of a list. QUICKSELECT is a divide-and-conquer algorithm, which achieves its efficiency by re- peatedly splitting the list into parts. It does so by choosing a "pivot" element, and splitting the list into three parts--those elements less than the pivot, those equal to the pivot, and those greater than the pivot. By comparing the lengths of these three parts, it can deter- mine which of the three parts the kth largest element belongs to. It can then discard the other two parts and repeat the procedure with the one remaining part. (If that part is the middle, pivot part, then the algorithm can simply return the pivot.) The efficiency of QUICKSELECT depends on the choice of pivots. The best possible pivot would be the kth largest element itself, since then no further recursive calls would be required. However, QUICKSELECT does not a priori know what the kth largest element is. (Indeed, it is QUICKSELECT's very goal to compute this element.) A more realistic goal might be to choose the median of the list as pivot. This would be a good choice, since then the first and last parts would be the same size, so after each recursive call at least half of the list would be discarded. However, QUICKSELECT does not a priori know what the median is. (Indeed, if k is half the length of the list, then QUICKSELECT's very goal is to compute the median.) A generally bad choice of pivot would be the maximum, since then the last part will generally be much smaller than the first, and (unless k = 1) it is this smaller part which will be discarded. This is where randomness comes in. If QUICKSELECT chooses the pivot randomly, then the benefits of good pivots will outweigh the costs of bad pivots. To complete this assignment, you will implement two variants of QUICKSELECT: (1) using one randomly chosen picot, and (2) using two randomly chosen pivots. Then you will study how many recursive calls your programs make. Because your code will choose the pivots randomly, this number will change each time you run your code. So you will fix an element to select from a fixed list, and run your code many times to determine the average number of recursive calls your programs make. To simplify matters, assume that the input list never contains repeated elements. Here is some partial code for one-pivot randomized QUICKSELECT. You will need to fill in the missing parts left as "[...]". Here is some partial code for one-pivot randomized QUICKSELECT. You will need to fill in the missing parts left as "(...)". def quickselect(1, k) : length - len(1) # Pick a random pivot element from the list, each # equally likely pivot - [...] 1_small - 0 1_big - [] # Put all elements smaller than pivot into l_small, and all #larger elements into 1_big. [...] # We assume all elements are distinct, so (besides the pivot) every element # should go into 1_small or 1_big assert(length -- len(1_small) + len(1_big) - 1) if k len(1_big) + 1: # kth largest must be in 1_small res - quickselect(1_small, k - len(1_big) - 1) return res else: return pivot Make your code return both the kth largest element and the number of calls to QUICK- SELECT (or its double-pivot variant) it took to find it. (The first call counts.) In the two-pivot variant, randomly select two different pivots in each round. Make each possible pair of pivots equally likely. Say the smaller pivot is a and the larger one is b. Then split the list into three parts: those elements smaller than a, those between a and b, and those larger than b. Compare the sizes of the parts to determine whether one of the pivots was the kth largest element, or if not, which of the three parts contains the kth largest element, and then recursively search through that part. [To emphasize, you should not recurse if one of the pivots is the kth largest element.) Once you have all that working, write code to do a Monte Carlo simulation to estimate the average number of calls required to find the kth largest element in a list of n distinct elements (for both variants). Note that it doesn't matter what the elements of the list are, as long as they are distinct. Also, running at least 20,000 trials ought to produce a good enough estimate for the parameters we will ask you about. As you might expect, the double pivot requires fewer recursive calls on average. How- ever, the cost is that it might take longer to complete each round for a given size since it potentially has to compare each element to both pivots. We are not asking you to do so for this assignment, but you might consider trying to also estimate the average number of total comparisons the two versions make. Randomness can be used to improve the performance of deterministic algorithms which need to make many choices. Rather than repeatedly making fixed, hard-coded choices, a pseudorandom number generator can be used to make dynamic, unbiased choices. If the benefits of "good" choices outweigh the costs of "bad" choices, a random selection of good and bad choices can improve the performance of an algorithm. Let us explore this with the QUICKSELECT algorithm. Discovered by the influential computer science pioneer Sir Tony Hoare in 1960 at the same time as the closely related sorting algorithm QUICKSORT, QUICKSELECT is an important and widely used algorithm for selecting the kth largest element of a list. QUICKSELECT is a divide-and-conquer algorithm, which achieves its efficiency by re- peatedly splitting the list into parts. It does so by choosing a "pivot" element, and splitting the list into three parts--those elements less than the pivot, those equal to the pivot, and those greater than the pivot. By comparing the lengths of these three parts, it can deter- mine which of the three parts the kth largest element belongs to. It can then discard the other two parts and repeat the procedure with the one remaining part. (If that part is the middle, pivot part, then the algorithm can simply return the pivot.) The efficiency of QUICKSELECT depends on the choice of pivots. The best possible pivot would be the kth largest element itself, since then no further recursive calls would be required. However, QUICKSELECT does not a priori know what the kth largest element is. (Indeed, it is QUICKSELECT's very goal to compute this element.) A more realistic goal might be to choose the median of the list as pivot. This would be a good choice, since then the first and last parts would be the same size, so after each recursive call at least half of the list would be discarded. However, QUICKSELECT does not a priori know what the median is. (Indeed, if k is half the length of the list, then QUICKSELECT's very goal is to compute the median.) A generally bad choice of pivot would be the maximum, since then the last part will generally be much smaller than the first, and (unless k = 1) it is this smaller part which will be discarded. This is where randomness comes in. If QUICKSELECT chooses the pivot randomly, then the benefits of good pivots will outweigh the costs of bad pivots. To complete this assignment, you will implement two variants of QUICKSELECT: (1) using one randomly chosen picot, and (2) using two randomly chosen pivots. Then you will study how many recursive calls your programs make. Because your code will choose the pivots randomly, this number will change each time you run your code. So you will fix an element to select from a fixed list, and run your code many times to determine the average number of recursive calls your programs make. To simplify matters, assume that the input list never contains repeated elements. Here is some partial code for one-pivot randomized QUICKSELECT. You will need to fill in the missing parts left as "[...]". Here is some partial code for one-pivot randomized QUICKSELECT. You will need to fill in the missing parts left as "(...)". def quickselect(1, k) : length - len(1) # Pick a random pivot element from the list, each # equally likely pivot - [...] 1_small - 0 1_big - [] # Put all elements smaller than pivot into l_small, and all #larger elements into 1_big. [...] # We assume all elements are distinct, so (besides the pivot) every element # should go into 1_small or 1_big assert(length -- len(1_small) + len(1_big) - 1) if k len(1_big) + 1: # kth largest must be in 1_small res - quickselect(1_small, k - len(1_big) - 1) return res else: return pivot Make your code return both the kth largest element and the number of calls to QUICK- SELECT (or its double-pivot variant) it took to find it. (The first call counts.) In the two-pivot variant, randomly select two different pivots in each round. Make each possible pair of pivots equally likely. Say the smaller pivot is a and the larger one is b. Then split the list into three parts: those elements smaller than a, those between a and b, and those larger than b. Compare the sizes of the parts to determine whether one of the pivots was the kth largest element, or if not, which of the three parts contains the kth largest element, and then recursively search through that part. [To emphasize, you should not recurse if one of the pivots is the kth largest element.) Once you have all that working, write code to do a Monte Carlo simulation to estimate the average number of calls required to find the kth largest element in a list of n distinct elements (for both variants). Note that it doesn't matter what the elements of the list are, as long as they are distinct. Also, running at least 20,000 trials ought to produce a good enough estimate for the parameters we will ask you about. As you might expect, the double pivot requires fewer recursive calls on average. How- ever, the cost is that it might take longer to complete each round for a given size since it potentially has to compare each element to both pivots. We are not asking you to do so for this assignment, but you might consider trying to also estimate the average number of total comparisons the two versions make