Regression matrix, confidence intervals and regions

(problem 1)

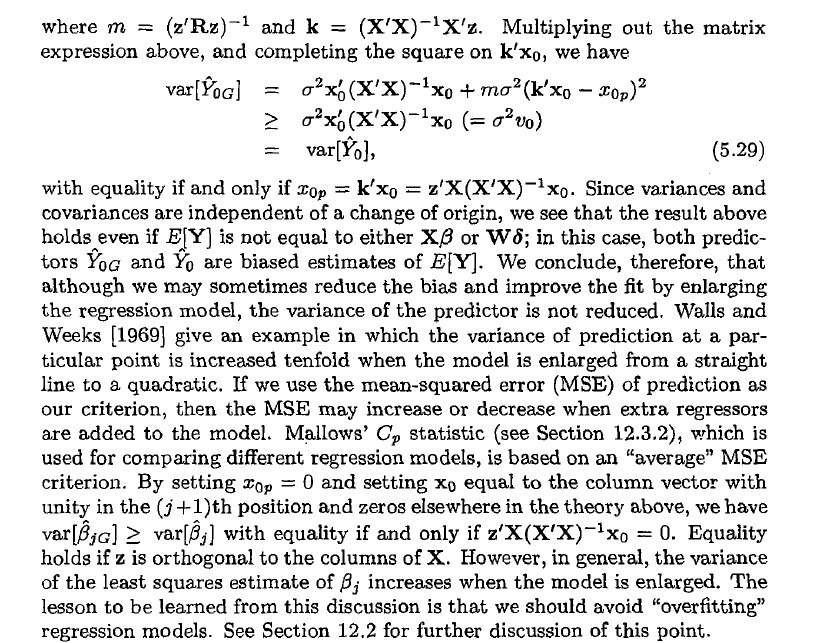

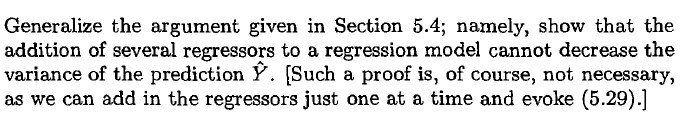

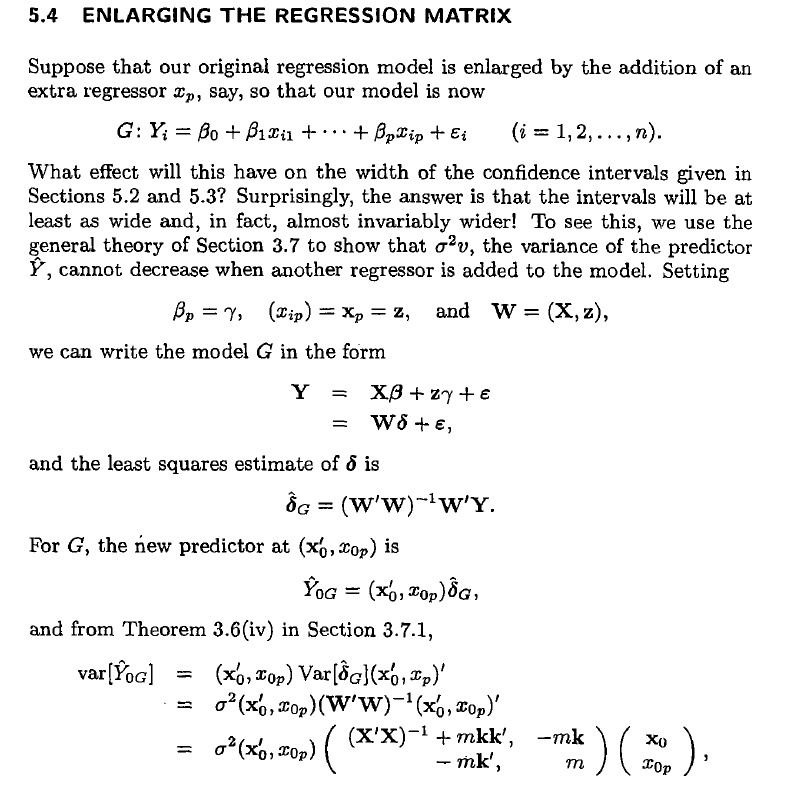

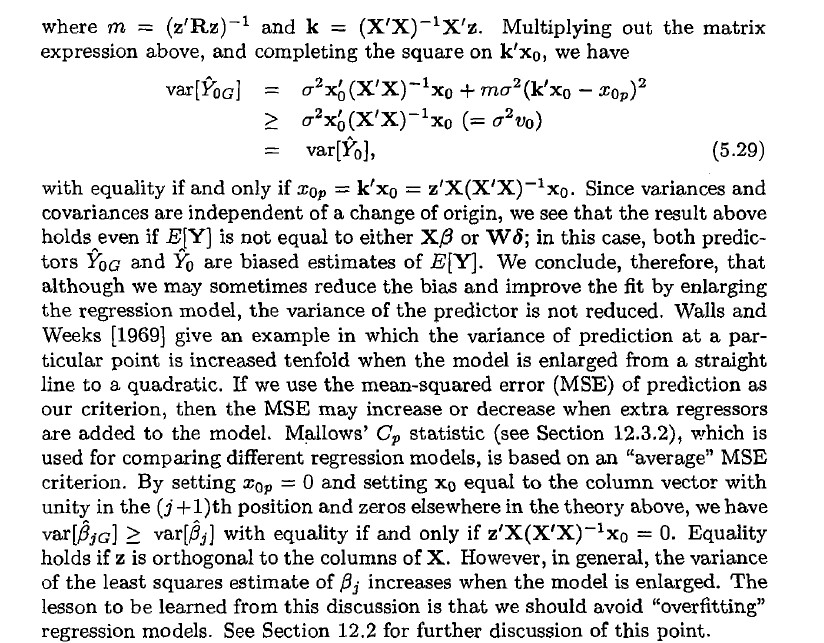

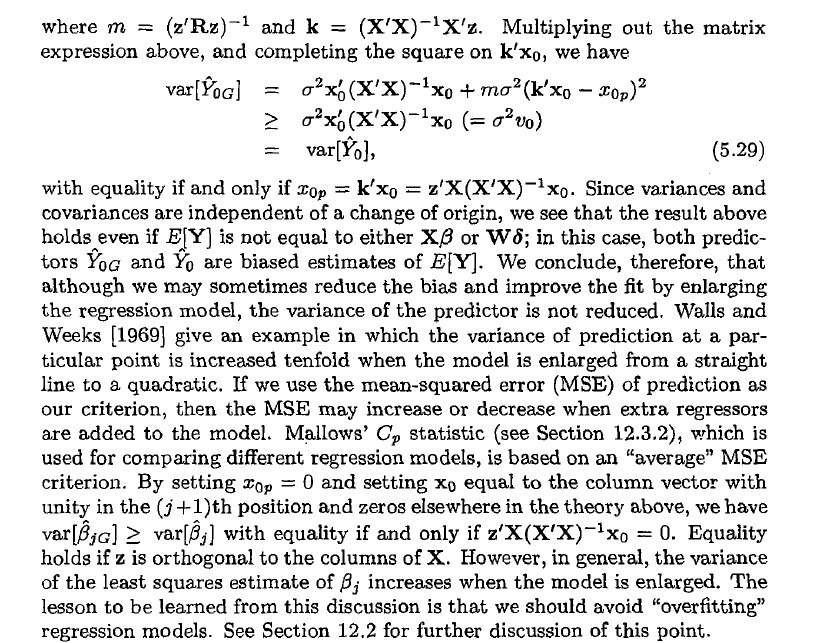

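Generalize the argument given in Section 5.4; namely, show that the addition of several regressors to a regression model cannot decrease the variance of the prediction $\hat{Y}$. [Such a proof is, of course, not necessary, as we can add in the regressors just one at a time and invoke (5.29).]

5.4 ENLARGING THE REGRESSION MATRIX

Suppose that our original regression model is enlarged by the addition of an extra regressor $x_p$, say, so that our model is now

$$G:\quad Y_i = \beta_0 + \beta_1 x_{i1} + \cdots + \beta_p x_{ip} + \varepsilon_i \qquad (i = 1, 2, \ldots, n).$$

What effect will this have on the width of the confidence intervals given in Sections 5.2 and 5.3? Surprisingly, the answer is that the intervals will be at least as wide and, in fact, almost invariably wider! To see this, we use the general theory of Section 3.7 to show that $\sigma^2 v$, the variance of the predictor $\hat{Y}$, cannot decrease when another regressor is added to the model. Setting $\beta_p = \gamma$, $(x_{ip}) = \mathbf{x}_p = \mathbf{z}$, and $\mathbf{W} = (\mathbf{X}, \mathbf{z})$, we can write the model $G$ in the form

$$\mathbf{Y} = \mathbf{X}\boldsymbol{\beta} + \mathbf{z}\gamma + \boldsymbol{\varepsilon} = \mathbf{W}\boldsymbol{\delta} + \boldsymbol{\varepsilon},$$

and the least squares estimate of $\boldsymbol{\delta}$ is $\hat{\boldsymbol{\delta}}_G = (\mathbf{W}'\mathbf{W})^{-1}\mathbf{W}'\mathbf{Y}$. For $G$, the new predictor at $(\mathbf{x}_0', x_{0p})$ is $\hat{Y}_{0G} = (\mathbf{x}_0', x_{0p})\hat{\boldsymbol{\delta}}_G$, and from Theorem 3.6(iv) in Section 3.7.1,

$$
\begin{aligned}
\operatorname{var}[\hat{Y}_{0G}]
&= (\mathbf{x}_0', x_{0p})\operatorname{Var}[\hat{\boldsymbol{\delta}}_G](\mathbf{x}_0', x_{0p})' \\
&= \sigma^2 (\mathbf{x}_0', x_{0p})(\mathbf{W}'\mathbf{W})^{-1}(\mathbf{x}_0', x_{0p})' \\
&= \sigma^2 (\mathbf{x}_0', x_{0p})
\begin{pmatrix}
(\mathbf{X}'\mathbf{X})^{-1} + m\mathbf{k}\mathbf{k}' & -m\mathbf{k} \\
-m\mathbf{k}' & m
\end{pmatrix}
\begin{pmatrix} \mathbf{x}_0 \\ x_{0p} \end{pmatrix},
\end{aligned}
$$

where $m = (\mathbf{z}'\mathbf{R}\mathbf{z})^{-1}$ and $\mathbf{k} = (\mathbf{X}'\mathbf{X})^{-1}\mathbf{X}'\mathbf{z}$. Multiplying out the matrix expression above, and completing the square on $\mathbf{k}'\mathbf{x}_0$, we have

$$
\begin{aligned}
\operatorname{var}[\hat{Y}_{0G}]
&= \sigma^2 \mathbf{x}_0'(\mathbf{X}'\mathbf{X})^{-1}\mathbf{x}_0 + m\sigma^2(\mathbf{k}'\mathbf{x}_0 - x_{0p})^2 \\
&\ge \sigma^2 \mathbf{x}_0'(\mathbf{X}'\mathbf{X})^{-1}\mathbf{x}_0 \quad (= \sigma^2 v) \\
&= \operatorname{var}[\hat{Y}_0],
\end{aligned}
\tag{5.29}
$$

with equality if and only if $x_{0p} = \mathbf{k}'\mathbf{x}_0 = \mathbf{z}'\mathbf{X}(\mathbf{X}'\mathbf{X})^{-1}\mathbf{x}_0$. Since variances and covariances are independent of a change of origin, we see that the result above holds even if $E[\mathbf{Y}]$ is not equal to either $\mathbf{X}\boldsymbol{\beta}$ or $\mathbf{W}\boldsymbol{\delta}$; in this case, both predictors $\hat{Y}_{0G}$ and $\hat{Y}_0$ are biased estimates of $E[Y]$. We conclude, therefore, that although we may sometimes reduce the bias and improve the fit by enlarging the regression model, the variance of the predictor is not reduced.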
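
The completion of the square in Section 5.4 appears to carry over directly when several regressors are added at once, which is what the problem asks for. The following is only a sketch under stated assumptions: the symbols $\mathbf{Z}$ (an $n \times q$ matrix of added regressors), $\mathbf{x}_{0z}$ (their values at the prediction point), $\mathbf{K}$ and $\mathbf{M}$ are notation introduced here, not taken from the text; $\mathbf{R}$ is taken to be the residual projection $\mathbf{I}_n - \mathbf{X}(\mathbf{X}'\mathbf{X})^{-1}\mathbf{X}'$; and $\mathbf{W} = (\mathbf{X}, \mathbf{Z})$ is assumed to have full column rank.

```latex
% Assumed notation (not in the source): Z is n x q, x_{0z} is the q-vector of
% added-regressor values at the prediction point; K and M generalize k and m.
\begin{align*}
\mathbf{K} &= (\mathbf{X}'\mathbf{X})^{-1}\mathbf{X}'\mathbf{Z}, \qquad
\mathbf{M} = (\mathbf{Z}'\mathbf{R}\mathbf{Z})^{-1}, \qquad
\mathbf{R} = \mathbf{I}_n - \mathbf{X}(\mathbf{X}'\mathbf{X})^{-1}\mathbf{X}', \\[4pt]
(\mathbf{W}'\mathbf{W})^{-1} &=
\begin{pmatrix}
(\mathbf{X}'\mathbf{X})^{-1} + \mathbf{K}\mathbf{M}\mathbf{K}' & -\mathbf{K}\mathbf{M} \\
-\mathbf{M}\mathbf{K}' & \mathbf{M}
\end{pmatrix}, \\[4pt]
\operatorname{var}[\hat{Y}_{0G}]
&= \sigma^2 (\mathbf{x}_0', \mathbf{x}_{0z}')(\mathbf{W}'\mathbf{W})^{-1}
   (\mathbf{x}_0', \mathbf{x}_{0z}')' \\
&= \sigma^2 \mathbf{x}_0'(\mathbf{X}'\mathbf{X})^{-1}\mathbf{x}_0
   + \sigma^2 (\mathbf{K}'\mathbf{x}_0 - \mathbf{x}_{0z})'\,\mathbf{M}\,
     (\mathbf{K}'\mathbf{x}_0 - \mathbf{x}_{0z}) \\
&\ge \sigma^2 \mathbf{x}_0'(\mathbf{X}'\mathbf{X})^{-1}\mathbf{x}_0
 = \operatorname{var}[\hat{Y}_0].
\end{align*}
% The inequality uses that Z'RZ = (RZ)'(RZ) is positive definite when W has
% full column rank, so M is positive definite as well.
```

Equality then holds if and only if $\mathbf{x}_{0z} = \mathbf{K}'\mathbf{x}_0$, which reduces to the condition $x_{0p} = \mathbf{k}'\mathbf{x}_0$ of (5.29) when $q = 1$.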
Walls and Weeks [1969] give an example in which the variance of prediction at a particular point is increased tenfold when the model is enlarged from a straight line to a quadratic.

If we use the mean-squared error (MSE) of prediction as our criterion, then the MSE may increase or decrease when extra regressors are added to the model. Mallows' $C_p$ statistic (see Section 12.3.2), which is used for comparing different regression models, is based on an "average" MSE criterion.

By setting $x_{0p} = 0$ and setting $\mathbf{x}_0$ equal to the column vector with unity in the $(j+1)$th position and zeros elsewhere in the theory above, we have $\operatorname{var}[\hat{\beta}_{j,G}] \ge \operatorname{var}[\hat{\beta}_j]$, with equality if and only if $\mathbf{z}'\mathbf{X}(\mathbf{X}'\mathbf{X})^{-1}\mathbf{x}_0 = 0$. Equality holds if $\mathbf{z}$ is orthogonal to the columns of $\mathbf{X}$. However, in general, the variance of the least squares estimate of $\beta_j$ increases when the model is enlarged. The lesson to be learned from this discussion is that we should avoid "overfitting" regression models. See Section 12.2 for further discussion of this point.
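
The two inequalities above can be checked on a small example. The sketch below uses made-up data (five equally spaced design points, a straight line enlarged to a quadratic; this is not the Walls and Weeks data) and exact rational arithmetic, so the identity in (5.29) can be tested with `==` rather than a tolerance. The helper names (`gram_inverse`, `enlarge`, `m_and_k`) are hypothetical, and `R` is assumed to be the residual projection $\mathbf{I}_n - \mathbf{X}(\mathbf{X}'\mathbf{X})^{-1}\mathbf{X}'$.

```python
from fractions import Fraction

def transpose(A):
    return [list(col) for col in zip(*A)]

def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def inverse(A):
    """Gauss-Jordan inverse in exact rationals (assumes A is nonsingular)."""
    n = len(A)
    M = [[Fraction(x) for x in row] + [Fraction(int(i == j)) for j in range(n)]
         for i, row in enumerate(A)]
    for c in range(n):
        p = next(r for r in range(c, n) if M[r][c] != 0)  # first usable pivot row
        M[c], M[p] = M[p], M[c]
        piv = M[c][c]
        M[c] = [x / piv for x in M[c]]
        for r in range(n):
            if r != c:
                f = M[r][c]
                M[r] = [x - f * y for x, y in zip(M[r], M[c])]
    return [row[n:] for row in M]

def gram_inverse(A):
    """(A'A)^{-1} for a full-column-rank matrix A given as a list of rows."""
    return inverse(matmul(transpose(A), A))

def quad_form(x, A):
    """x'Ax for a vector x and a square matrix A."""
    return sum(x[i] * A[i][j] * x[j] for i in range(len(x)) for j in range(len(x)))

def enlarge(X, z):
    """W = (X, z): append the column z to X."""
    return [row + [zi] for row, zi in zip(X, z)]

def m_and_k(X, z):
    """m = (z'Rz)^{-1} and k = (X'X)^{-1}X'z, assuming R = I - X(X'X)^{-1}X'."""
    Xtz = [sum(a * b for a, b in zip(col, z)) for col in transpose(X)]
    k = [sum(a * b for a, b in zip(row, Xtz)) for row in gram_inverse(X)]
    zRz = sum(zi * zi for zi in z) - sum(a * b for a, b in zip(Xtz, k))
    return 1 / Fraction(zRz), k

# Hypothetical design: straight line in x, enlarged by the regressor z = x^2.
x = [0, 1, 2, 3, 4]
X = [[1, xi] for xi in x]
z = [xi * xi for xi in x]
x0, x0p = [1, 6], 36          # predict at x = 6, so the new regressor equals 36

# Variance factors: var = sigma^2 * v (original) and sigma^2 * v_G (enlarged).
v = quad_form(x0, gram_inverse(X))
v_G = quad_form(x0 + [x0p], gram_inverse(enlarge(X, z)))
m, k = m_and_k(X, z)
kx0 = sum(a * b for a, b in zip(k, x0))
print("v =", v, " v_G =", v_G)
print("(5.29) identity holds:", v_G == v + m * (kx0 - x0p) ** 2)

# Coefficient variance factors: leading diagonal entries of the inverse Gram matrices.
diag = [gram_inverse(X)[j][j] for j in range(2)]
diag_G = [gram_inverse(enlarge(X, z))[j][j] for j in range(2)]
z_orth = [(xi - 2) ** 2 - 2 for xi in x]   # orthogonal to both columns of X
diag_orth = [gram_inverse(enlarge(X, z_orth))[j][j] for j in range(2)]
print("original:", diag, " enlarged:", diag_G, " orthogonal z:", diag_orth)
```

For this design the prediction variance factor at $x = 6$ rises from $9/5$ to $79/5$, and both coefficient variance factors increase when $z = x^2$ is added. With the orthogonalized regressor `z_orth` they are unchanged, matching the equality condition in the text.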