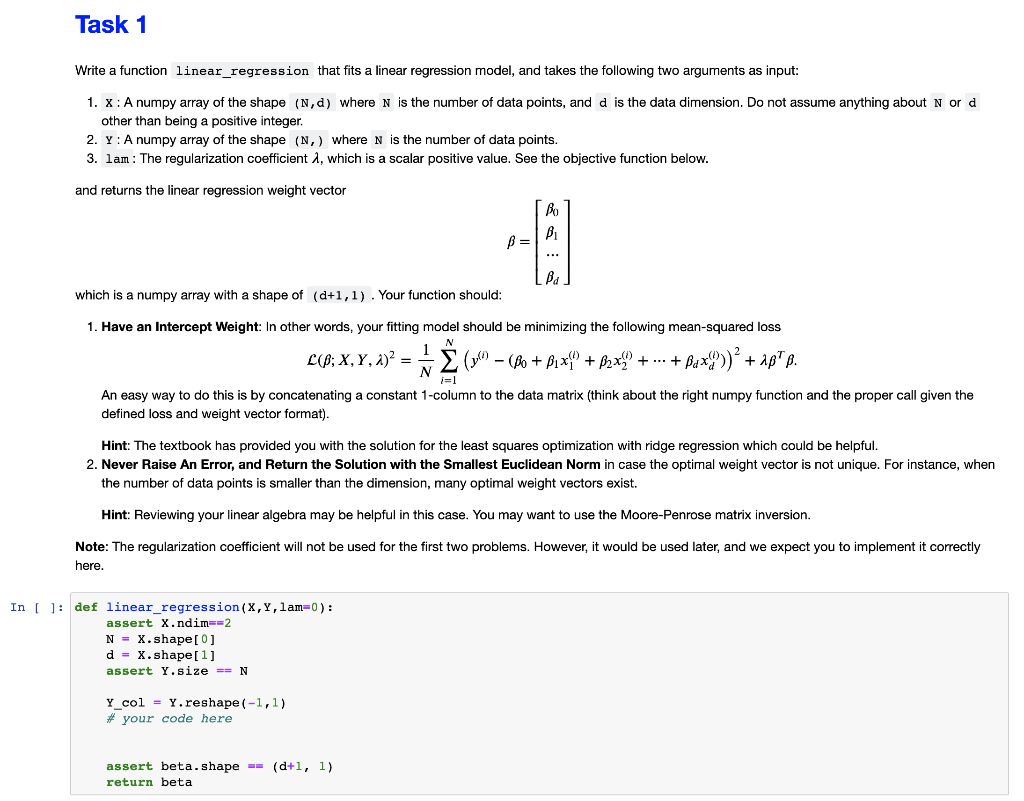

Question


Rewrite the function linear_regression that [does the instructions in the image below], and make sure it passes the assertion.

Copyable Code:


    def linear_regression(X,Y,lam=0):
        """
        Train linear regression model

        Parameters:
        X (np.array): A numpy array with the shape (N, d) where N is the number of data points and d is dimension
        Y (np.array): A numpy array with the shape (N,), where N is the number of data points
        lam (int): The regularization coefficient where default value is 0
        Returns:
        beta (np.array): A numpy array with the shape (d+1, 1) that represents the linear regression weight vector
        """
        assert X.ndim==2
        N = X.shape[0]
        d = X.shape[1]
        assert Y.size == N
        Y_col = Y.reshape(-1,1)
        # ADD your code here

        assert beta.shape == (d+1, 1)
        return beta

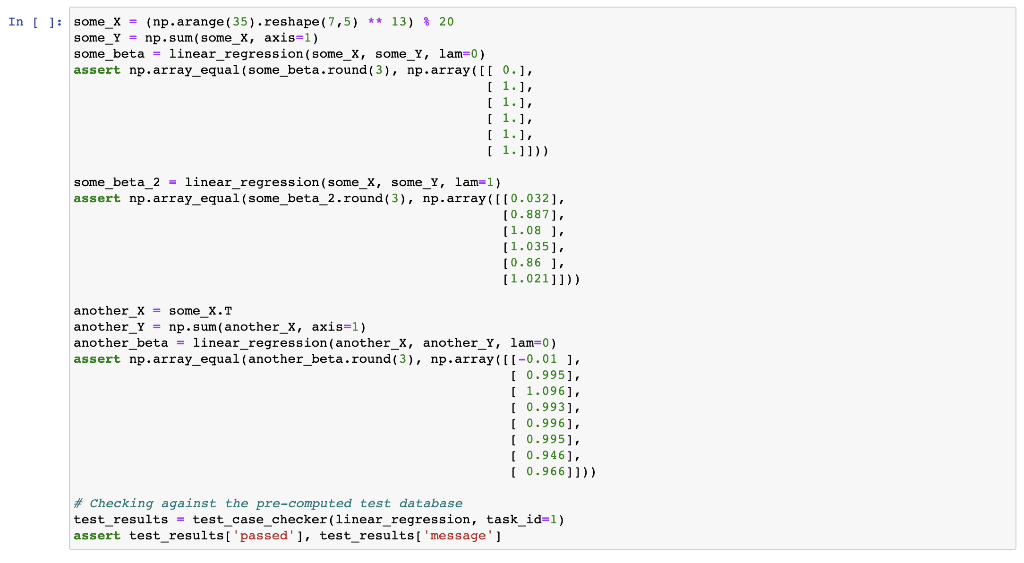

ASSERTION CHECK:

    # Performing sanity checks on your implementation
    some_X = (np.arange(35).reshape(7,5) ** 13) % 20
    some_Y = np.sum(some_X, axis=1)
    some_beta = linear_regression(some_X, some_Y, lam=0)
    assert np.array_equal(some_beta.round(3), np.array([[ 0.], [ 1.], [ 1.], [ 1.], [ 1.], [ 1.]]))

    some_beta_2 = linear_regression(some_X, some_Y, lam=1)
    assert np.array_equal(some_beta_2.round(3), np.array([[0.032], [0.887], [1.08 ], [1.035], [0.86 ], [1.021]]))

    another_X = some_X.T
    another_Y = np.sum(another_X, axis=1)
    another_beta = linear_regression(another_X, another_Y, lam=0)
    assert np.array_equal(another_beta.round(3), np.array([[-0.01 ], [ 0.995], [ 1.096], [ 0.993], [ 0.996], [ 0.995], [ 0.946], [ 0.966]]))

    # Checking against the pre-computed test database
    test_results = test_case_checker(linear_regression, task_id=1)
    assert test_results['passed'], test_results['message']
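The instructions in the image are not reproduced in the text, so the following is only a minimal sketch of one common way to fill in the missing step, not the assignment's confirmed method. It assumes the intended model is regularized (ridge) least squares with a leading column of ones for the intercept, i.e. beta = (X̃ᵀX̃ + lam·I)⁻¹ X̃ᵀY. It also assumes the penalty applies to every coefficient, including the intercept. The name `linear_regression_sketch` is hypothetical. A pseudo-inverse is used instead of a plain inverse because the third sanity check has N = 5 data points but d + 1 = 8 weights, which makes X̃ᵀX̃ singular when lam = 0.

```python
import numpy as np

def linear_regression_sketch(X, Y, lam=0):
    """Hypothetical ridge-style fit; returns beta with shape (d+1, 1)."""
    assert X.ndim == 2
    N, d = X.shape
    assert Y.size == N
    Y_col = Y.reshape(-1, 1)

    # Prepend a column of ones so that beta[0] acts as the intercept.
    X_aug = np.hstack([np.ones((N, 1)), X])

    # Regularized normal matrix. Assumption: the intercept is penalized too.
    A = X_aug.T @ X_aug + lam * np.eye(d + 1)

    # pinv tolerates a singular A (e.g. N < d + 1 with lam = 0).
    beta = np.linalg.pinv(A) @ X_aug.T @ Y_col

    assert beta.shape == (d + 1, 1)
    return beta
```

Whether this reproduces the expected values for `lam=1` depends on details given only in the image (for example, whether the intercept is regularized), so the sketch should be checked against the assertions above before being relied on.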
