Question: [Solutions to this assignment must be submitted vio CANVAS prior to midnight on the due dote. These dates and times vory depending on the milestone

![day later than the due date.] This project may be undertaken in](https://s3.amazonaws.com/si.experts.images/answers/2024/07/669a483739ed6_062669a4836c6e20.jpg)

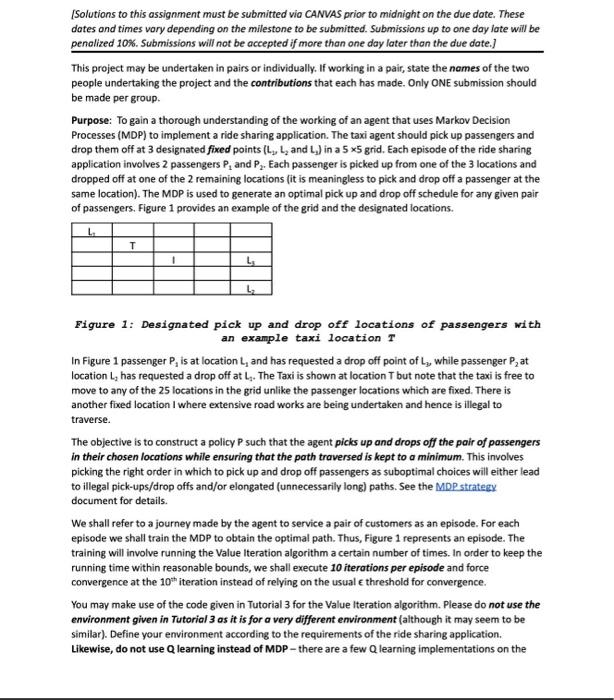

[Solutions to this assignment must be submitted vio CANVAS prior to midnight on the due dote. These dates and times vory depending on the milestone to be submitted. Submissions up to one day late will be penalized 10\%. Submissions will not be accepted if more than one day later than the due date.] This project may be undertaken in pairs or individually. If working in a pair, state the names of the two people undertaking the project and the contributions that each has made. Only ONE submission should be made per group. Purpose: To gain a thorough understanding of the working of an agent that uses Markov Decision Processes (MDP) to implement a ride sharing application. The taxi agent should pick up passengers and drop them off at 3 designated fixed points (L1,L2 and L1) in a 55 grid. Each episode of the ride sharing application involves 2 passengers P1 and P2. Each passenger is picked up from one of the 3 locations and dropped off at one of the 2 remaining locations (it is meaningless to pick and drop off a passenger at the same location). The MDP is used to generate an optimal pick up and drop off schedule for any given pair of passengers. Figure 1 provides an example of the grid and the designated locations. Figure 1: Designated pick up and drop off locations of passengers with an example taxi location T In Figure 1 passenger P1 is at location L1 and has requested a drop off point of L3, while passenger P2 at location L2 has requested a drop off at L2. The Taxi is shown at location T but note that the taxi is free to move to any of the 25 locations in the grid unlike the passenger locations which are fixed. There is another fixed location I where extensive road works are being undertaken and hence is illegal to traverse. The objective is to construct a policy P such that the agent picks up and drops off the pair of passengers in their chosen locations while ensuring that the path traversed is kept to a minimum. This involves picking the right order in which to pick up and drop off passengers as suboptimal choices will either lead to illegal pick-ups/drop offs and/or elongated (unnecessarily long) paths. See the MDP stratecy document for details. We shall refer to a journey made by the agent to service a pair of customers as an episode. For each episode we shall train the MDP to obtain the optimal path. Thus, Figure 1 represents an episode. The training will involve running the Value Iteration algorithm a certain number of times. In order to keep the running time within reasonable bounds, we shall execute 10 iterations per episode and force convergence at the 10th iteration instead of relying on the usual threshold for convergence. You may make use of the code given in Tutorial 3 for the Value Iteration algorithm. Please do not use the environment given in Tutorial 3 as it is for a very different environment (although it may seem to be similar). Define your environment according to the requirements of the ride sharing application. Likewise, do not use Q learning instead of MDP - there are a few Q learning implementations on the key factors: the states, the transitions between states, the rewards to be used and finally the discounting factor Y. 1. A state in this application consists of a 5 tuple (T, P2,P4P2,P1P2D,P2D) where T is the location of the taxi in the grid, P2P is the pickup point for passenger 14P2P is the pickup point for passenger 2,P3D is the drop-off point for passenger 1 and P2D is the drop-off point for passenger 2 . Thus, for example for the episode given in Figure 1 we have: ((2,4),(1,5),(5,1),(5,3),(1,5)) using the cortuention of indexing first by column and then by row (to maintain consistency with the class notes; also, indexes start from 1 instead of 0 as in Python). From our definition of a state, we see that we have a total of 253322=900 states (we have 22 at the end instead of 33 as drop-off points need to differ from pick-up points). 2. In terms of actions, we have a total of 6 actions from each state. The set of actions A={N,S,W,E,P, D) where N refers to North, S to South, W to West, E to East, P to Pick-up and D to Drop-off. 3. We will define the transitions between states in terms of probabilities Pr(ss,a) which is the probability of moving from the current state s to a new state s using action a. The probability matrix Pr is defined using 2 rules: 1. For a designated pick-up/drop-off location (a,b) we have: Pr((a,b)(a,b),P)=0.4Pr((a,b)(a,b),D)=0.4Pr(s(a,b),M)=0.05whereM=(N,S,W,E)andsistheneighborofsdefinedbyactionM. II. For all other points, Pr(s(a,b),M)=0.8whereMistheintendedmovewhichcanbeoneofN,S,WorE. Pr(s(a,b),M)=0.04whereMisnotintheintendeddirectionPr((a,b)(a,b),P)=0.04Pr((a,b)(a,b),D)=0.04 4. For pick-ups and drop-off points (i.e., L1,L2 and L1 ) we will set a value of +20,000. For the illegal pick-up/drop-off point L, we will set a reward of 10,000. The live-in reward is set at a value of -1. 5. We set Y=1.0 Implementation: Once you have written the code to implement the 3 rules above and integrated with the Value Iteration method given in Tutorial 3 (which you may use when it is available on Thursday) the next step is to train your MDP on episodes that you generate. Generate a total of 5 episodes, starting with the episode given in Figure 1. In addition to this episode, generate 4 other episodes. For each of these 4 episodes, generate random pick-up and drop-off points for the 2 passengers from the 3 available locations L2,L2 and L3. Likewise, generate a random location T for the taxi T from any of the grid points, taking care to avoid illegal point I. Once each episode is generated, train the MDP by executing your Value Iteration algorithm with 10 iterations. Once the policy is generated the next step is to evaluate the policy. For policy evaluation, use location T as the starting point and follow the path defined by the policy until both passengers are dropped off. For the evaluation we shall compute the simple metric of path length (other metrics such as sum of expected discounted rewards are also possible, but we will not use them in this Project). The smaller the path length, the bigger is the reward obtained and hence we rank paths by length. Your task in this project is to implement the following requirements. The project has a single milestone which is your code that you used and the answers to the questions below. 1. For episode 1 given in Figure 1, work out the optimal policy by hand. The optimal policy is the one that results in the shortest path that picks up and drops off both passengers without traversing illegal point I. Work out this optimal policy by hand and display the path P as a sequence of grid locations (2D coordinates) starting with the Taxi location T. 2. Run your MDP code for episode 1 and display the path given by its policy, also as a sequence of grid locations starting with T. If your path differs from the path you constructed in 1 above, why do you think it is so? 3. Generate 4 other episodes. For each episode: a. print out the locations of the pick-up and drop-off points and the location of the Taxi b. print out the path and its length that was produced by your MDP to service the journey (i.e., the episode). The following three changes have been made to the project specification: 1. Positive rewards are +20000. 2. Negative reward for illegal pickup/drop off is 10000 3. For all points that are not designated as pickup or drop-off points (rule 2 in Project 3 Specification document) Pr(s(a,b),M)=0.8 where M is the intended move which can be one of N,S,W or E. Pr(s(a,b),M)=0.04 where M is not in the intended direction Pr((a,b)(a,b),P)=0.04 Pr((a,b)(a,b),D)=0.04 Episode Segmentation The MDP in its original form only handles a single navigation request from a given start position to a given destination. In order to handle multiple navigation, it needs to be engineered suitably. The following describes how this is done. Each journey or episode needs to be broken down into sub-episodes. Using the example given in the Project document we have requests for pickup of passenger 1 (P1) at L1 (1,5), request for drop off at L3 (5,2), request for pickup of P2 at (5,1) and drop off of P2 at (1,5). This journey can be broken down into the following 4 sub-episodes: 1. The taxi moving from its start location (2,4) to pick up P1 from (1,5). 2. The taxi moving from (1,5) to drop off P1 at (5,2) 3. The taxi moving from (5,2) to pick up P2 from (5,1) 4. The taxi moving from (5,1) to drop off P2 at (1,5) In general. we run the MDP from the taxi's start location until the next request is serviced at one of the three locations L1, L2 or L3. This marks the conclusion of a sub-episode. Thereafter, we need to examine whether any further requests can be serviced at that location. If yes, then the MDP is run once again for the next sub-episode with all rewards as for the previous sub-episode. but all state values set to 0 (zero). On the other hand, if no pending pickup or drop-off requests can be met at that location, then the reward at that location is set to 0 in addition to the state values being set to 0 . Thus, for example suppose the first sub-episode had the taxi moving from its start location (2,4) to pick up P1 from (1,5). Then, the pending drop-off requests are at L3 and L1. The one at L3 does not count as it cannot be met from L1. The one at L2 also does not count as it requires passenger P2 to be already in the taxi which is not the case. Thus. the reward at L1 is set to zero as well all state values are set to 0 . Note that the positive rewards of 2000 are active at L2 and L3. The MDP is then run again. This enables the taxi to move from L1 to its next destination in sub-episode 2. Continuing with this example, it the next sub-episode is for the taxi to move from L1 Al other walues stur remin ate to drop off P1 at L3, then we have the same situation after the drop oll at L3. After L3. the taxi is empty and hence is unable to meet its pending drop-off service of passenger 2 at L1. Hence the same process happens after sub-episode 2: the reward at L3 is set to 0 as well as al cell states are set to 0 , and the MDP is run Iteration 2 again. Note that the positive rewards of 2000 are active at L2 and L1. The same stuation repeats for sub-episode 3 as the pending drop-olf request for P2 cannot be met at L2. Note that the Bellman utiify for an episode cnly needs to iterate over the sel of al - 15941 possible taxi lecabions as the other 4 components of the state space (pickups and drop-offs) remain constant for a given episode. Of course, these 4 components need to be stored but they are not part of the Bellman update and hence can be stored Sindaty {1,4]15954,1 separately. This greafly simplifies the officioncy of the MDP update as woll as the data stricture used to represent the ufilty value. It also simplifies the computstion of the path for a given episode (and its sub-episodes) as we only need to traverse a 2D data structure. let us work though some esanples of our seape sgace update using epliode 1 . Al walues of d are telt to 0 . We then infialiag (4,5)=40,7)+4{5,3)=20009 4{4,2}=10000 =10sec6 a 209652 - 41.5. Sub-episode 2 tteration 1 Sub-episode 1 - 14399=044,8 Iteration 2 = 15435 - 75415.7 Iteration 1 thewice, 4[2.4]=14199 [Solutions to this assignment must be submitted vio CANVAS prior to midnight on the due dote. These dates and times vory depending on the milestone to be submitted. Submissions up to one day late will be penalized 10\%. Submissions will not be accepted if more than one day later than the due date.] This project may be undertaken in pairs or individually. If working in a pair, state the names of the two people undertaking the project and the contributions that each has made. Only ONE submission should be made per group. Purpose: To gain a thorough understanding of the working of an agent that uses Markov Decision Processes (MDP) to implement a ride sharing application. The taxi agent should pick up passengers and drop them off at 3 designated fixed points (L1,L2 and L1) in a 55 grid. Each episode of the ride sharing application involves 2 passengers P1 and P2. Each passenger is picked up from one of the 3 locations and dropped off at one of the 2 remaining locations (it is meaningless to pick and drop off a passenger at the same location). The MDP is used to generate an optimal pick up and drop off schedule for any given pair of passengers. Figure 1 provides an example of the grid and the designated locations. Figure 1: Designated pick up and drop off locations of passengers with an example taxi location T In Figure 1 passenger P1 is at location L1 and has requested a drop off point of L3, while passenger P2 at location L2 has requested a drop off at L2. The Taxi is shown at location T but note that the taxi is free to move to any of the 25 locations in the grid unlike the passenger locations which are fixed. There is another fixed location I where extensive road works are being undertaken and hence is illegal to traverse. The objective is to construct a policy P such that the agent picks up and drops off the pair of passengers in their chosen locations while ensuring that the path traversed is kept to a minimum. This involves picking the right order in which to pick up and drop off passengers as suboptimal choices will either lead to illegal pick-ups/drop offs and/or elongated (unnecessarily long) paths. See the MDP stratecy document for details. We shall refer to a journey made by the agent to service a pair of customers as an episode. For each episode we shall train the MDP to obtain the optimal path. Thus, Figure 1 represents an episode. The training will involve running the Value Iteration algorithm a certain number of times. In order to keep the running time within reasonable bounds, we shall execute 10 iterations per episode and force convergence at the 10th iteration instead of relying on the usual threshold for convergence. You may make use of the code given in Tutorial 3 for the Value Iteration algorithm. Please do not use the environment given in Tutorial 3 as it is for a very different environment (although it may seem to be similar). Define your environment according to the requirements of the ride sharing application. Likewise, do not use Q learning instead of MDP - there are a few Q learning implementations on the key factors: the states, the transitions between states, the rewards to be used and finally the discounting factor Y. 1. A state in this application consists of a 5 tuple (T, P2,P4P2,P1P2D,P2D) where T is the location of the taxi in the grid, P2P is the pickup point for passenger 14P2P is the pickup point for passenger 2,P3D is the drop-off point for passenger 1 and P2D is the drop-off point for passenger 2 . Thus, for example for the episode given in Figure 1 we have: ((2,4),(1,5),(5,1),(5,3),(1,5)) using the cortuention of indexing first by column and then by row (to maintain consistency with the class notes; also, indexes start from 1 instead of 0 as in Python). From our definition of a state, we see that we have a total of 253322=900 states (we have 22 at the end instead of 33 as drop-off points need to differ from pick-up points). 2. In terms of actions, we have a total of 6 actions from each state. The set of actions A={N,S,W,E,P, D) where N refers to North, S to South, W to West, E to East, P to Pick-up and D to Drop-off. 3. We will define the transitions between states in terms of probabilities Pr(ss,a) which is the probability of moving from the current state s to a new state s using action a. The probability matrix Pr is defined using 2 rules: 1. For a designated pick-up/drop-off location (a,b) we have: Pr((a,b)(a,b),P)=0.4Pr((a,b)(a,b),D)=0.4Pr(s(a,b),M)=0.05whereM=(N,S,W,E)andsistheneighborofsdefinedbyactionM. II. For all other points, Pr(s(a,b),M)=0.8whereMistheintendedmovewhichcanbeoneofN,S,WorE. Pr(s(a,b),M)=0.04whereMisnotintheintendeddirectionPr((a,b)(a,b),P)=0.04Pr((a,b)(a,b),D)=0.04 4. For pick-ups and drop-off points (i.e., L1,L2 and L1 ) we will set a value of +20,000. For the illegal pick-up/drop-off point L, we will set a reward of 10,000. The live-in reward is set at a value of -1. 5. We set Y=1.0 Implementation: Once you have written the code to implement the 3 rules above and integrated with the Value Iteration method given in Tutorial 3 (which you may use when it is available on Thursday) the next step is to train your MDP on episodes that you generate. Generate a total of 5 episodes, starting with the episode given in Figure 1. In addition to this episode, generate 4 other episodes. For each of these 4 episodes, generate random pick-up and drop-off points for the 2 passengers from the 3 available locations L2,L2 and L3. Likewise, generate a random location T for the taxi T from any of the grid points, taking care to avoid illegal point I. Once each episode is generated, train the MDP by executing your Value Iteration algorithm with 10 iterations. Once the policy is generated the next step is to evaluate the policy. For policy evaluation, use location T as the starting point and follow the path defined by the policy until both passengers are dropped off. For the evaluation we shall compute the simple metric of path length (other metrics such as sum of expected discounted rewards are also possible, but we will not use them in this Project). The smaller the path length, the bigger is the reward obtained and hence we rank paths by length. Your task in this project is to implement the following requirements. The project has a single milestone which is your code that you used and the answers to the questions below. 1. For episode 1 given in Figure 1, work out the optimal policy by hand. The optimal policy is the one that results in the shortest path that picks up and drops off both passengers without traversing illegal point I. Work out this optimal policy by hand and display the path P as a sequence of grid locations (2D coordinates) starting with the Taxi location T. 2. Run your MDP code for episode 1 and display the path given by its policy, also as a sequence of grid locations starting with T. If your path differs from the path you constructed in 1 above, why do you think it is so? 3. Generate 4 other episodes. For each episode: a. print out the locations of the pick-up and drop-off points and the location of the Taxi b. print out the path and its length that was produced by your MDP to service the journey (i.e., the episode). The following three changes have been made to the project specification: 1. Positive rewards are +20000. 2. Negative reward for illegal pickup/drop off is 10000 3. For all points that are not designated as pickup or drop-off points (rule 2 in Project 3 Specification document) Pr(s(a,b),M)=0.8 where M is the intended move which can be one of N,S,W or E. Pr(s(a,b),M)=0.04 where M is not in the intended direction Pr((a,b)(a,b),P)=0.04 Pr((a,b)(a,b),D)=0.04 Episode Segmentation The MDP in its original form only handles a single navigation request from a given start position to a given destination. In order to handle multiple navigation, it needs to be engineered suitably. The following describes how this is done. Each journey or episode needs to be broken down into sub-episodes. Using the example given in the Project document we have requests for pickup of passenger 1 (P1) at L1 (1,5), request for drop off at L3 (5,2), request for pickup of P2 at (5,1) and drop off of P2 at (1,5). This journey can be broken down into the following 4 sub-episodes: 1. The taxi moving from its start location (2,4) to pick up P1 from (1,5). 2. The taxi moving from (1,5) to drop off P1 at (5,2) 3. The taxi moving from (5,2) to pick up P2 from (5,1) 4. The taxi moving from (5,1) to drop off P2 at (1,5) In general. we run the MDP from the taxi's start location until the next request is serviced at one of the three locations L1, L2 or L3. This marks the conclusion of a sub-episode. Thereafter, we need to examine whether any further requests can be serviced at that location. If yes, then the MDP is run once again for the next sub-episode with all rewards as for the previous sub-episode. but all state values set to 0 (zero). On the other hand, if no pending pickup or drop-off requests can be met at that location, then the reward at that location is set to 0 in addition to the state values being set to 0 . Thus, for example suppose the first sub-episode had the taxi moving from its start location (2,4) to pick up P1 from (1,5). Then, the pending drop-off requests are at L3 and L1. The one at L3 does not count as it cannot be met from L1. The one at L2 also does not count as it requires passenger P2 to be already in the taxi which is not the case. Thus. the reward at L1 is set to zero as well all state values are set to 0 . Note that the positive rewards of 2000 are active at L2 and L3. The MDP is then run again. This enables the taxi to move from L1 to its next destination in sub-episode 2. Continuing with this example, it the next sub-episode is for the taxi to move from L1 Al other walues stur remin ate to drop off P1 at L3, then we have the same situation after the drop oll at L3. After L3. the taxi is empty and hence is unable to meet its pending drop-off service of passenger 2 at L1. Hence the same process happens after sub-episode 2: the reward at L3 is set to 0 as well as al cell states are set to 0 , and the MDP is run Iteration 2 again. Note that the positive rewards of 2000 are active at L2 and L1. The same stuation repeats for sub-episode 3 as the pending drop-olf request for P2 cannot be met at L2. Note that the Bellman utiify for an episode cnly needs to iterate over the sel of al - 15941 possible taxi lecabions as the other 4 components of the state space (pickups and drop-offs) remain constant for a given episode. Of course, these 4 components need to be stored but they are not part of the Bellman update and hence can be stored Sindaty {1,4]15954,1 separately. This greafly simplifies the officioncy of the MDP update as woll as the data stricture used to represent the ufilty value. It also simplifies the computstion of the path for a given episode (and its sub-episodes) as we only need to traverse a 2D data structure. let us work though some esanples of our seape sgace update using epliode 1 . Al walues of d are telt to 0 . We then infialiag (4,5)=40,7)+4{5,3)=20009 4{4,2}=10000 =10sec6 a 209652 - 41.5. Sub-episode 2 tteration 1 Sub-episode 1 - 14399=044,8 Iteration 2 = 15435 - 75415.7 Iteration 1 thewice, 4[2.4]=14199

Step by Step Solution

There are 3 Steps involved in it

Get step-by-step solutions from verified subject matter experts