Question:

Solve all.

5. Consider the success run chain in Example 8.2.16. Suppose that the chain has been running for a while and is currently in state 10. (a) What is the expected number of steps until the chain is back at state 10? (b) What is the expected number of times the chain visits state 9 before it is back at 10?
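Both quantities follow from two standard facts for an irreducible, positive recurrent chain. The expected return time to state $j$ is $1/\pi_j$. The expected number of visits to state $i$ between consecutive visits to $j$ is $\pi_i/\pi_j$. Example 8.2.16 is not reproduced here, so the sketch below makes an assumption about it: the success run chain moves from state $i$ to $i+1$ with probability $p$ and back to 0 with probability $1-p$, which gives $\pi_i = (1-p)p^i$. The fair-coin value $p = 1/2$ is also an assumption. Exact fractions avoid rounding.

```python
from fractions import Fraction


def stationary(i: int, p: Fraction) -> Fraction:
    """Assumed success run chain: pi_i = (1 - p) * p**i."""
    return (1 - p) * p**i


def check_balance(p: Fraction, n_states: int = 30) -> bool:
    """Check pi_{i+1} = p * pi_i, the stationarity equation for states >= 1."""
    return all(stationary(i + 1, p) == p * stationary(i, p) for i in range(n_states))


def expected_return_time(j: int, p: Fraction) -> Fraction:
    """Mean return time to state j is 1 / pi_j for a positive recurrent chain."""
    return 1 / stationary(j, p)


def expected_visits_between(i: int, j: int, p: Fraction) -> Fraction:
    """Mean number of visits to i between two consecutive visits to j is pi_i / pi_j."""
    return stationary(i, p) / stationary(j, p)


p = Fraction(1, 2)  # assumed fair coin
assert check_balance(p)
print(expected_return_time(10, p))        # 2048
print(expected_visits_between(9, 10, p))  # 2
```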

16. Consider a version of the success run chain in Example 8.2.16 where we disregard sequences of consecutive tails, in the sense that, for example, T, TT, TTT, and so on all simply count as T. Describe this as a Markov chain and examine it in terms of irreducibility, recurrence, and periodicity. Find the stationary distribution and compare it with Example 8.2.16. Is it the limit distribution?
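This sketch rests on two explicit assumptions. First, Example 8.2.16 is taken to be the fair-coin success run chain: the state is the length of the current run of heads, and a T sends the chain to 0. Second, "disregarding consecutive tails" is read as follows. After a T the next counted toss must be H, so state 0 moves to 1 with probability 1. Every state $i \ge 1$ moves to $i+1$ or to 0 with probability 1/2 each.

Under that reading, the balance equations give $\pi_0 = 1/3$ and $\pi_i = \tfrac{1}{3}(1/2)^{i-1}$ for $i \ge 1$. The code truncates the chain at a hypothetical level `K` by lumping all states $\ge K$ into state `K`, which leaves the stationary probabilities below `K` unchanged. It then runs power iteration from state 0. Agreement with the closed form is consistent with the stationary distribution also being the limit, but the iteration illustrates this rather than proving it.

```python
def step(dist: list[float]) -> list[float]:
    """One transition of the assumed modified chain, truncated at K = len(dist) - 1."""
    K = len(dist) - 1
    new = [0.0] * (K + 1)
    new[1] += dist[0]  # from 0 the next counted toss is H
    for i in range(1, K):
        new[0] += 0.5 * dist[i]      # T ends the run
        new[i + 1] += 0.5 * dist[i]  # H extends it
    new[0] += 0.5 * dist[K]  # lumped tail state K
    new[K] += 0.5 * dist[K]
    return new


def closed_form(K: int) -> list[float]:
    """pi_0 = 1/3, pi_i = (1/3)(1/2)**(i-1); state K carries the tail mass."""
    pi = [1 / 3] + [(1 / 3) * 0.5 ** (i - 1) for i in range(1, K)]
    pi.append(pi[K - 1])  # sum over i >= K of pi_i equals pi_{K-1}
    return pi


K = 60  # hypothetical truncation level
dist = [1.0] + [0.0] * K  # start in state 0
for _ in range(500):
    dist = step(dist)

error = max(abs(a - b) for a, b in zip(dist, closed_form(K)))
print(f"max deviation from closed form: {error:.2e}")
```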

17. Reversibility. Consider an ergodic Markov chain, observed at a late timepoint $n$. If we look at the chain backward, we have the backward transition probability $q_{ij} = P(X_{n-1} = j \mid X_n = i)$. (a) Express $q_{ij}$ in terms of the forward transition probabilities and the stationary distribution $\pi$. (b) If the forward and backward transition probabilities are equal, the chain is called reversible. Show that this occurs if and only if $\pi_i p_{ij} = \pi_j p_{ji}$ for all states $i, j$ (this identity is usually taken as the definition of reversibility). (c) Show that if a probability distribution $\pi$ satisfies the equation $\pi_i p_{ij} = \pi_j p_{ji}$ for all $i, j$, then $\pi$ is stationary.
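For part (a), Bayes' rule gives $q_{ij} = P(X_{n-1} = j)\,p_{ji} / P(X_n = i)$. At a late time $P(X_{n-1} = j) \approx \pi_j$ and $P(X_n = i) \approx \pi_i$, which leads to $q_{ij} = \pi_j p_{ji}/\pi_i$. Setting $q_{ij} = p_{ij}$ is then the condition in (b).

The sketch below computes $q_{ij}$ and checks detailed balance and stationarity exactly with fractions. The helper names `backward_matrix`, `is_reversible` and `is_stationary` are hypothetical. The 3-state birth-death matrix is an arbitrary illustration, not an example from the text.

```python
from fractions import Fraction as F

Matrix = list[list[F]]


def backward_matrix(P: Matrix, pi: list[F]) -> Matrix:
    """q_ij = pi_j * p_ji / pi_i (time-reversed transition probabilities)."""
    n = len(P)
    return [[pi[j] * P[j][i] / pi[i] for j in range(n)] for i in range(n)]


def is_reversible(P: Matrix, pi: list[F]) -> bool:
    """Detailed balance: pi_i p_ij == pi_j p_ji for all i, j."""
    n = len(P)
    return all(pi[i] * P[i][j] == pi[j] * P[j][i] for i in range(n) for j in range(n))


def is_stationary(P: Matrix, pi: list[F]) -> bool:
    """pi P == pi, checked exactly component by component."""
    n = len(P)
    return all(sum(pi[i] * P[i][j] for i in range(n)) == pi[j] for j in range(n))


# Illustrative 3-state birth-death chain (not from the text).
P = [
    [F(1, 2), F(1, 2), F(0)],
    [F(1, 4), F(1, 2), F(1, 4)],
    [F(0), F(1, 2), F(1, 2)],
]
pi = [F(1, 4), F(1, 2), F(1, 4)]

print(is_stationary(P, pi))         # True
print(is_reversible(P, pi))         # True
print(backward_matrix(P, pi) == P)  # True: reversible means Q == P
```

Part (c) can be seen in the same terms. Summing $\pi_i p_{ij} = \pi_j p_{ji}$ over $i$ gives $\sum_i \pi_i p_{ij} = \pi_j$, which is exactly what `is_stationary` tests.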

18. The intuition behind reversibility is that if we are given a sequence of consecutive states under stationary conditions, there is no way to decide whether the states are given in forward or backward time. Consider the ON/OFF system in Example 8.2.4; use the definition in the previous problem to show that it is reversible, and explain intuitively.
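This sketch assumes the ON/OFF system of Example 8.2.4 is a two-state chain. It switches ON→OFF with some probability $p$ and OFF→ON with some probability $q$; these symbols are placeholders here. Its stationary distribution is then $\pi_{\text{ON}} = q/(p+q)$ and $\pi_{\text{OFF}} = p/(p+q)$. Detailed balance reduces to the single equation $\pi_{\text{ON}}\,p = \pi_{\text{OFF}}\,q$. The sketch checks this exactly over a grid of hypothetical parameter values.

```python
from fractions import Fraction as F
from itertools import product


def on_off_reversible(p: F, q: F) -> bool:
    """Detailed balance for the assumed two-state ON/OFF chain.

    p = P(ON -> OFF), q = P(OFF -> ON), with p + q > 0.
    """
    pi_on, pi_off = q / (p + q), p / (p + q)
    return pi_on * p == pi_off * q  # flow ON->OFF equals flow OFF->ON


grid = [F(k, 10) for k in range(1, 11)]  # hypothetical values 0.1, ..., 1.0
print(all(on_off_reversible(p, q) for p, q in product(grid, grid)))  # True
```

With only two states, every ON→OFF transition must be followed by an OFF→ON transition before the next ON→OFF can occur. The two long-run flows therefore always balance.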

19. For which values of $p$ is the following matrix the transition matrix of a reversible Markov chain? Explain intuitively.

$$P = \begin{pmatrix} 0 & p & 1-p \\ 1-p & 0 & p \\ p & 1-p & 0 \end{pmatrix}$$
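Assume $0 \le p \le 1$. Every column of $P$ also sums to 1, so the uniform distribution $\pi = (1/3, 1/3, 1/3)$ is stationary. With a uniform $\pi$, detailed balance reduces to $p_{ij} = p_{ji}$, that is, to $P$ being symmetric. The sketch scans a few sample values of $p$ exactly. The helper names are hypothetical.

```python
from fractions import Fraction as F


def cyclic_matrix(p: F) -> list[list[F]]:
    """The matrix P from the problem for a given p."""
    return [
        [F(0), p, 1 - p],
        [1 - p, F(0), p],
        [p, 1 - p, F(0)],
    ]


def detailed_balance(P: list[list[F]], pi: list[F]) -> bool:
    """pi_i p_ij == pi_j p_ji for all i, j."""
    n = len(P)
    return all(pi[i] * P[i][j] == pi[j] * P[j][i] for i in range(n) for j in range(n))


uniform = [F(1, 3)] * 3
samples = [F(k, 4) for k in range(5)]  # sample values 0, 1/4, 1/2, 3/4, 1

# The uniform distribution is stationary for every sample p (columns sum to 1).
for p in samples:
    P = cyclic_matrix(p)
    assert all(sum(uniform[i] * P[i][j] for i in range(3)) == uniform[j] for j in range(3))

reversible = [p for p in samples if detailed_balance(cyclic_matrix(p), uniform)]
print(reversible)  # [Fraction(1, 2)]
```

For any other $p$, the chain has a preferred direction of rotation around the three states. A reversed recording would show the opposite rotation.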

20. Ehrenfest model of diffusion. Consider two containers containing a total of $N$ gas molecules, connected by a narrow aperture. Each time unit, one of the $N$ molecules is chosen at random to pass through the aperture from one container to the other. Let $X_n$ be the number of molecules in the first container. (a) Find the transition probabilities for the Markov chain $\{X_n\}$. (b) Argue intuitively why the chain is reversible and why the stationary distribution is a certain binomial distribution. Then use Problem 17 to show that it is indeed the stationary distribution. (c) Is the stationary distribution also the limit distribution?
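With $X_n = i$, the chosen molecule is in the first container with probability $i/N$. A natural model, stated here as an assumption about part (a), is therefore $p_{i,i-1} = i/N$ and $p_{i,i+1} = (N-i)/N$. The sketch checks detailed balance exactly for the candidate $\pi_i = \binom{N}{i} 2^{-N}$, i.e. Binomial$(N, 1/2)$, for a few small $N$. The helper names are hypothetical.

```python
from fractions import Fraction as F
from math import comb


def ehrenfest_matrix(N: int) -> list[list[F]]:
    """Transition matrix on states 0..N (number of molecules in container 1)."""
    P = [[F(0)] * (N + 1) for _ in range(N + 1)]
    for i in range(N + 1):
        if i > 0:
            P[i][i - 1] = F(i, N)      # a molecule leaves container 1
        if i < N:
            P[i][i + 1] = F(N - i, N)  # a molecule enters container 1
    return P


def binomial_half(N: int) -> list[F]:
    """Binomial(N, 1/2) probabilities as exact fractions."""
    return [F(comb(N, i), 2**N) for i in range(N + 1)]


def detailed_balance(P: list[list[F]], pi: list[F]) -> bool:
    """pi_i p_ij == pi_j p_ji for all i, j."""
    n = len(P)
    return all(pi[i] * P[i][j] == pi[j] * P[j][i] for i in range(n) for j in range(n))


for N in (1, 2, 5, 10):
    print(N, detailed_balance(ehrenfest_matrix(N), binomial_half(N)))  # True each time
```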

21. Consider an irreducible and positive recurrent Markov chain with stationary distribution $\pi$ and let $g : S \to \mathbb{R}$ be a real-valued function on the state space. It can be shown that

$$\frac{1}{n}\sum_{k=1}^{n} g(X_k) \xrightarrow{P} \sum_{j \in S} g(j)\,\pi_j$$

for any initial distribution, where we recall convergence in probability from Section 4.2. This result is reminiscent of the law of large numbers, but the summands are not i.i.d. We have mentioned that the interpretation of the stationary distribution is the long-term proportion of time spent in each state. Show how a particular choice of the function $g$ above gives this interpretation (note that we do not assume aperiodicity).
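Take $g$ to be the indicator $g(x) = 1\{x = j\}$. The left-hand side then becomes the fraction of the first $n$ steps spent in state $j$, and the right-hand side becomes $\pi_j$. The sketch illustrates this with a simulated Ehrenfest chain with $N = 4$, an arbitrary choice. That chain has period 2, so started from a fixed state $P(X_n = j)$ oscillates rather than converges. The time averages are still expected to approach $\pi_j = \binom{4}{j}/16$. The seed, run length and function name are arbitrary. The output is random, so only approximate agreement should be expected.

```python
import random
from math import comb


def time_fractions(N: int, n_steps: int, seed: int = 0) -> list[float]:
    """Fraction of steps 1..n_steps spent in each state of an Ehrenfest chain.

    Entry j equals (1/n) * sum_k g(X_k) with g the indicator of state j.
    """
    rng = random.Random(seed)
    x = 0  # arbitrary initial state
    counts = [0] * (N + 1)
    for _ in range(n_steps):
        # the chosen molecule is in container 1 with probability x / N
        if rng.random() < x / N:
            x -= 1
        else:
            x += 1
        counts[x] += 1
    return [c / n_steps for c in counts]


N = 4
freq = time_fractions(N, 200_000, seed=1)
pi = [comb(N, j) / 2**N for j in range(N + 1)]
for j in range(N + 1):
    print(j, round(freq[j], 4), pi[j])
```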

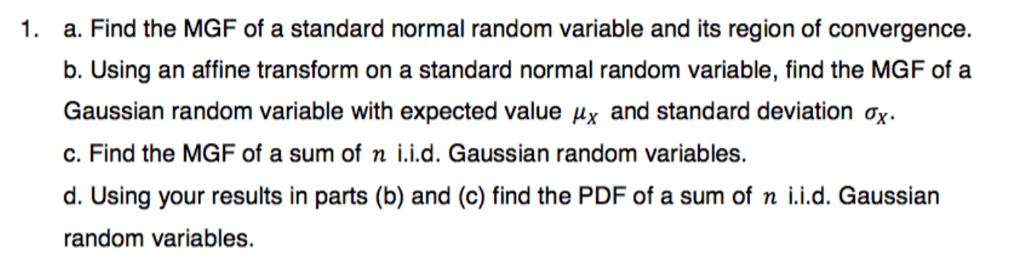

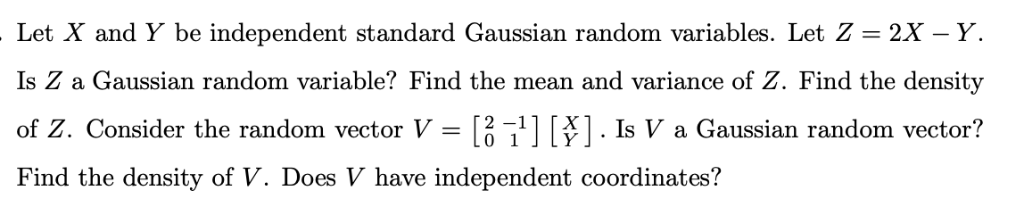

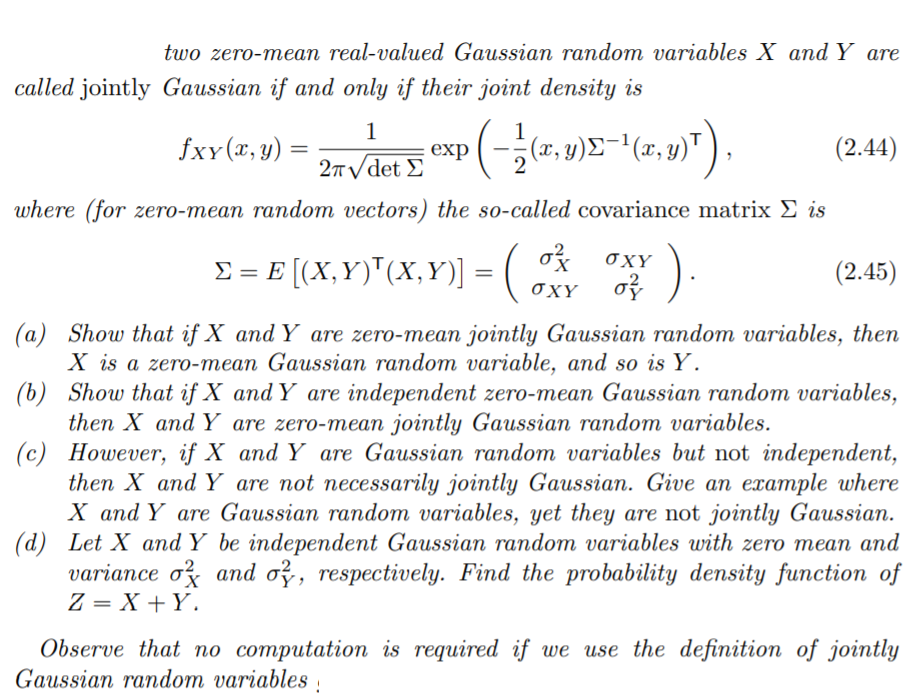

1. (a) Find the MGF of a standard normal random variable and its region of convergence. (b) Using an affine transform on a standard normal random variable, find the MGF of a Gaussian random variable with expected value $\mu_X$ and standard deviation $\sigma_X$. (c) Find the MGF of a sum of $n$ i.i.d. Gaussian random variables. (d) Using your results in parts (b) and (c), find the PDF of a sum of $n$ i.i.d. Gaussian random variables.

Which of the following is NOT true?
a) Uncorrelated Gaussian random variables will always have a diagonal covariance matrix.
b) Independent Gaussian random variables will always have a covariance matrix that has a determinant of zero.
c) Gaussian random variables with a diagonal covariance matrix are uncorrelated.
d) Uncorrelated Gaussian random variables are also independent.

Let $X$ and $Y$ be independent standard Gaussian random variables. Let Z = 2X Y. Is $Z$ a Gaussian random variable? Find the mean and variance of $Z$. Find the density of $Z$. Consider the random vector V = [g '11] [g]. Is $V$ a Gaussian random vector? Find the density of $V$. Does $V$ have independent coordinates?

Two zero-mean real-valued Gaussian random variables $X$ and $Y$ are called jointly Gaussian if and only if their joint density is

$$f_{X,Y}(x, y) = \frac{1}{2\pi\sqrt{\det \Sigma}} \exp\left(-\frac{1}{2}(x, y)\,\Sigma^{-1}(x, y)^{T}\right) \qquad (2.44)$$

where (for zero-mean random vectors) the so-called covariance matrix $\Sigma$ is

$$\Sigma = E\left[(X, Y)^{T}(X, Y)\right] = \begin{pmatrix} \sigma_X^2 & \sigma_{XY} \\ \sigma_{XY} & \sigma_Y^2 \end{pmatrix} \qquad (2.45)$$

(a) Show that if $X$ and $Y$ are zero-mean jointly Gaussian random variables, then $X$ is a zero-mean Gaussian random variable, and so is $Y$. (b) Show that if $X$ and $Y$ are independent zero-mean Gaussian random variables, then $X$ and $Y$ are zero-mean jointly Gaussian random variables. (c) However, if $X$ and $Y$ are Gaussian random variables but not independent, then $X$ and $Y$ are not necessarily jointly Gaussian. Give an example where $X$ and $Y$ are Gaussian random variables, yet they are not jointly Gaussian.

(d) Let $X$ and $Y$ be independent Gaussian random variables with zero mean and variances $\sigma_X^2$ and $\sigma_Y^2$, respectively. Find the probability density function of $Z = X + Y$. Observe that no computation is required if we use the definition of jointly Gaussian random variables.
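This block gives a numerical sanity check of three standard facts behind these problems:

- The standard normal MGF is $M_Z(t) = e^{t^2/2}$, finite for every real $t$.
- The MGF of $\mu + \sigma Z$ is $e^{\mu t + \sigma^2 t^2/2}$. Its $n$-th power matches the MGF of a normal with mean $n\mu$ and variance $n\sigma^2$.
- A zero-mean pair with $\sigma_{XY} = 0$ has a density (2.44) that factors into the product of its two normal marginals.

The sketch uses only the standard library. The trapezoid grid, the integration range, the test values and the function names are arbitrary, hypothetical choices.

```python
import math


def std_normal_pdf(x: float) -> float:
    return math.exp(-x * x / 2) / math.sqrt(2 * math.pi)


def mgf_numeric(t: float, lo: float = -40.0, hi: float = 40.0, steps: int = 20_000) -> float:
    """Trapezoid-rule estimate of E[exp(tZ)] for Z ~ N(0, 1)."""
    h = (hi - lo) / steps
    total = 0.5 * (math.exp(t * lo) * std_normal_pdf(lo) + math.exp(t * hi) * std_normal_pdf(hi))
    for k in range(1, steps):
        x = lo + k * h
        total += math.exp(t * x) * std_normal_pdf(x)
    return total * h


def mgf_gaussian(t: float, mu: float, sigma: float) -> float:
    """MGF of mu + sigma * Z: exp(mu t + sigma^2 t^2 / 2)."""
    return math.exp(mu * t + sigma**2 * t**2 / 2)


def joint_density(x: float, y: float, sx: float, sy: float, sxy: float) -> float:
    """Zero-mean bivariate normal density (2.44) with covariance matrix (2.45)."""
    det = sx**2 * sy**2 - sxy**2
    # (x, y) Sigma^{-1} (x, y)^T written out for a 2x2 matrix
    quad = (sy**2 * x**2 - 2 * sxy * x * y + sx**2 * y**2) / det
    return math.exp(-quad / 2) / (2 * math.pi * math.sqrt(det))


def normal_pdf(x: float, sigma: float) -> float:
    """Density of N(0, sigma^2)."""
    return std_normal_pdf(x / sigma) / sigma


for t in (0.0, 0.5, 1.0, 2.0):
    print(t, mgf_numeric(t), math.exp(t * t / 2))

# Sum of n i.i.d. N(mu, sigma^2): product of MGFs vs MGF of N(n mu, n sigma^2)
mu, sigma, n, t = 1.5, 0.7, 4, 0.3
print(mgf_gaussian(t, mu, sigma) ** n, mgf_gaussian(t, n * mu, math.sqrt(n) * sigma))

# With sigma_XY = 0 the joint density equals the product of the marginals
sx, sy = 1.3, 0.6
print(joint_density(0.4, -0.9, sx, sy, 0.0), normal_pdf(0.4, sx) * normal_pdf(-0.9, sy))
```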
