Question

Solve all the `None` placeholders using Python.

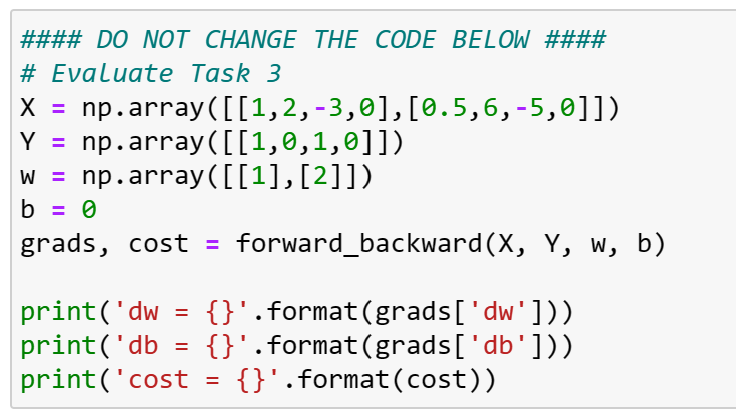

Expected output:

```
dw = [[1.22019716]
 [2.73509556]]
db = 0.09519962669353813
cost = 6.9550195708335805
```

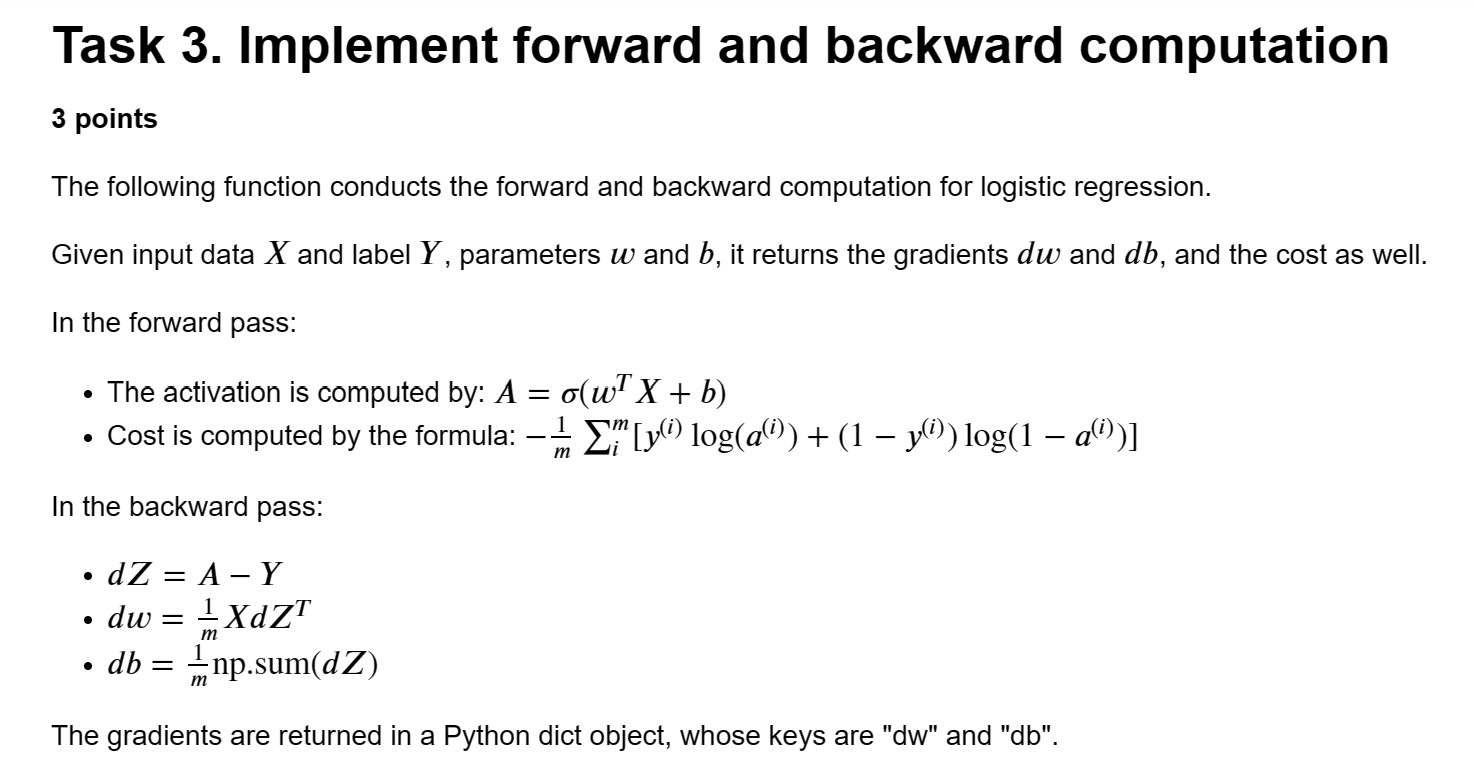

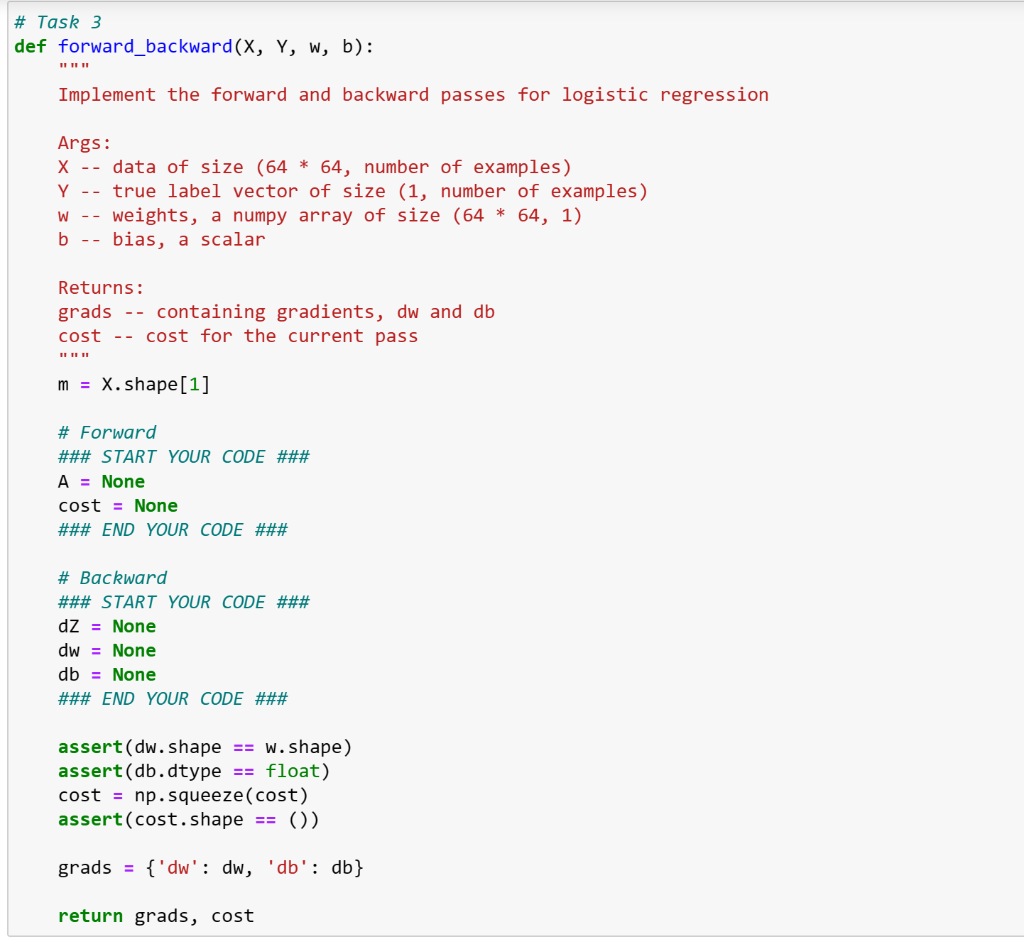

Task 3. Implement forward and backward computation (3 points)

The following function conducts the forward and backward computation for logistic regression. Given input data `X` and labels `Y`, and parameters `w` and `b`, it returns the gradients `dw` and `db` as well as the cost.

In the forward pass:

- The activation is computed by $A = \sigma(w^T X + b)$.
- The cost is computed by the formula $-\frac{1}{m}\sum_{i=1}^{m}\left[y^{(i)}\log\left(a^{(i)}\right) + \left(1 - y^{(i)}\right)\log\left(1 - a^{(i)}\right)\right]$.

In the backward pass:

- $dZ = A - Y$
- $dw = \frac{1}{m} X \, dZ^T$
- $db = \frac{1}{m}\sum_{i=1}^{m} dZ^{(i)}$, i.e. `np.sum(dZ) / m`

The gradients are returned in a Python dict object whose keys are `"dw"` and `"db"`.

```python
import numpy as np

# Task 3
def forward_backward(X, Y, w, b):
    """
    Implement the forward and backward passes for logistic regression

    Args:
    X -- data of size (64 * 64, number of examples)
    Y -- true label vector of size (1, number of examples)
    w -- weights, a numpy array of size (64 * 64, 1)
    b -- bias, a scalar

    Returns:
    grads -- dict containing the gradients dw and db
    cost -- cost for the current pass
    """
    m = X.shape[1]

    # Forward
    ### START YOUR CODE ###
    A = None
    cost = None
    ### END YOUR CODE ###

    # Backward
    ### START YOUR CODE ###
    dZ = None
    dw = None
    db = None
    ### END YOUR CODE ###

    # Sanity checks on shapes and types
    assert dw.shape == w.shape
    assert db.dtype == float
    cost = np.squeeze(cost)
    assert cost.shape == ()

    grads = {"dw": dw, "db": db}

    return grads, cost
```
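A minimal sketch of one way to fill in the `None` placeholders, following the formulas in the task statement line by line with NumPy. It assumes `X` has shape `(n_features, m)` and `w` has shape `(n_features, 1)`, and it defines its own `sigmoid` helper; that helper is a hypothetical stand-in, since the notebook may already provide one from an earlier task.

```python
import numpy as np


def sigmoid(z):
    """Hypothetical helper: element-wise logistic function 1 / (1 + exp(-z))."""
    return 1 / (1 + np.exp(-z))


def forward_backward(X, Y, w, b):
    """
    Forward and backward passes for logistic regression (sketch).

    X -- data of shape (n_features, m)
    Y -- labels of shape (1, m), entries 0 or 1
    w -- weights of shape (n_features, 1)
    b -- scalar bias
    """
    m = X.shape[1]

    # Forward: activation A = sigmoid(w^T X + b), shape (1, m)
    A = sigmoid(np.dot(w.T, X) + b)
    # Cross-entropy cost averaged over the m examples
    cost = -1 / m * np.sum(Y * np.log(A) + (1 - Y) * np.log(1 - A))

    # Backward
    dZ = A - Y                    # shape (1, m)
    dw = 1 / m * np.dot(X, dZ.T)  # shape (n_features, 1), same as w
    db = 1 / m * np.sum(dZ)       # NumPy float64 scalar

    assert dw.shape == w.shape
    assert db.dtype == float
    cost = np.squeeze(cost)
    assert cost.shape == ()

    grads = {"dw": dw, "db": db}
    return grads, cost
```

For instance, with the illustrative inputs `w = [[1], [2]]`, `b = 2`, `X = [[1, 2, -1], [3, 4, -3.2]]` and `Y = [[1, 0, 1]]` (not the inputs from the question's images, which are not reproduced here), the sketch gives `cost ≈ 5.8015`, `dw ≈ [[0.9985], [2.3951]]` and `db ≈ 0.00146`. Note that `np.log(A)` or `np.log(1 - A)` becomes `-inf` if the sigmoid saturates to exactly 0 or 1 in floating point; the formula is used here as written, without clipping.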
