Question

1 Approved Answer

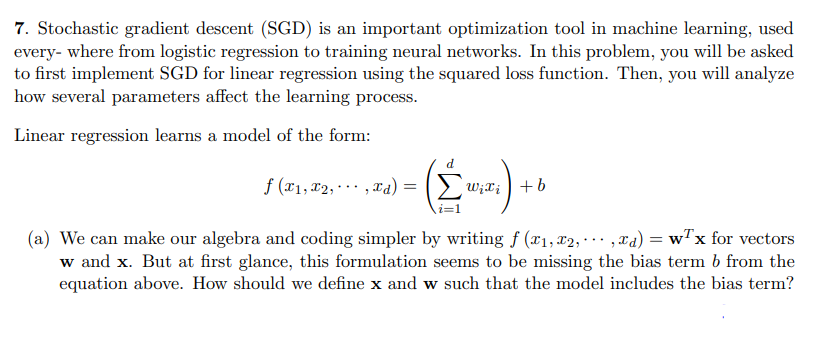

Stochastic gradient descent ( SGD ) is an important optimization tool in machine learning, used every - where from logistic regression to training neural networks.

Stochastic gradient descent (SGD) is an important optimization tool in machine learning, used everywhere from logistic regression to training neural networks. In this problem, you will be asked to first implement SGD for linear regression using the squared loss function. Then, you will analyze how several parameters affect the learning process.
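As a rough illustration of what "SGD for linear regression using the squared loss" can look like, here is a minimal sketch in plain Python. It assumes the usual linear model with a weight vector `w` and a bias `b`, and a per-point loss of `(prediction - y)^2`. The function name `sgd_linear_regression`, the learning rate, the epoch count, and the toy data are all hypothetical choices made for this example; they are not taken from the problem statement.

```python
import random

def sgd_linear_regression(X, y, lr=0.01, epochs=100, seed=0):
    """Fit weights w and bias b by SGD on the squared loss (pred - y)^2."""
    rng = random.Random(seed)
    d = len(X[0])
    w = [0.0] * d
    b = 0.0
    indices = list(range(len(X)))
    for _ in range(epochs):
        rng.shuffle(indices)  # visit the points in a fresh random order each epoch
        for i in indices:
            pred = sum(wj * xj for wj, xj in zip(w, X[i])) + b
            err = pred - y[i]
            # Gradient of (pred - y)^2 is 2 * err * x for w and 2 * err for b.
            w = [wj - lr * 2.0 * err * xj for wj, xj in zip(w, X[i])]
            b -= lr * 2.0 * err
    return w, b

# Hypothetical noise-free toy data generated from y = 2*x + 1.
X = [[0.0], [1.0], [2.0], [3.0]]
y = [1.0, 3.0, 5.0, 7.0]
w, b = sgd_linear_regression(X, y, lr=0.05, epochs=500)
print(w, b)
```

On this toy data the fitted `w[0]` and `b` should land close to 2 and 1. The learning rate and the number of epochs are the kind of parameters whose effect on learning the problem later asks about.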

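Linear regression learns a model of the form:

$$f(x_1, x_2, \ldots, x_d) = \left(\sum_{i=1}^{d} w_i x_i\right) + b$$

(a) We can make our algebra and coding simpler by writing $f(x_1, x_2, \ldots, x_d) = \mathbf{w}^T \mathbf{x}$ for vectors $\mathbf{w}$ and $\mathbf{x}$. But at first glance, this formulation seems to be missing the bias term $b$ from the equation above. How should we define $\mathbf{x}$ and $\mathbf{w}$ such that the model includes the bias term?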
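One common convention, offered here as an assumption and not as the source's own answer, is to prepend a constant 1 to the input and the bias to the weights: x = (1, x_1, ..., x_d) and w = (b, w_1, ..., w_d). The inner product of w and x then equals the weighted sum of the original inputs plus b. The short check below uses hypothetical values and the helper names `augment` and `dot`, which are made up for this sketch.

```python
def augment(x):
    """Prepend a constant 1 so the bias can live inside the weight vector."""
    return [1.0] + list(x)

def dot(u, v):
    """Inner product of two equal-length lists."""
    return sum(ui * vi for ui, vi in zip(u, v))

# Hypothetical example values.
w_orig = [2.0, -1.0]
b = 0.5
x_orig = [3.0, 4.0]

w = [b] + w_orig      # w = (b, w_1, ..., w_d)
x = augment(x_orig)   # x = (1, x_1, ..., x_d)

explicit = dot(w_orig, x_orig) + b  # weighted sum of the inputs, plus b
folded = dot(w, x)                  # same value with the bias absorbed into w
print(explicit, folded)
```

Appending the 1 at the end of x (and b at the end of w) works equally well; what matters is that the constant entry and the bias occupy the same position.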
