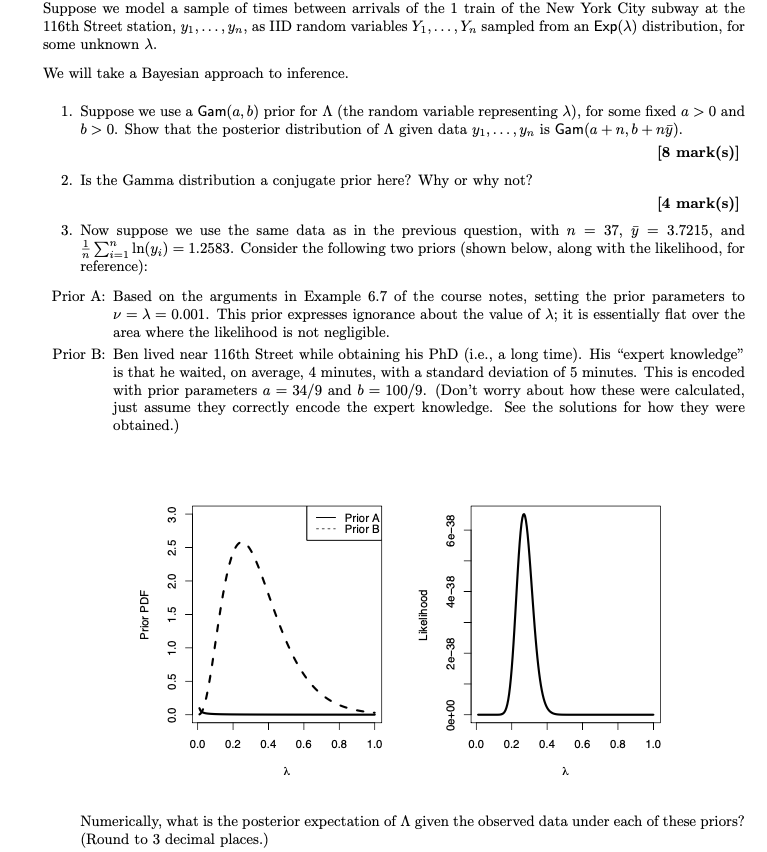

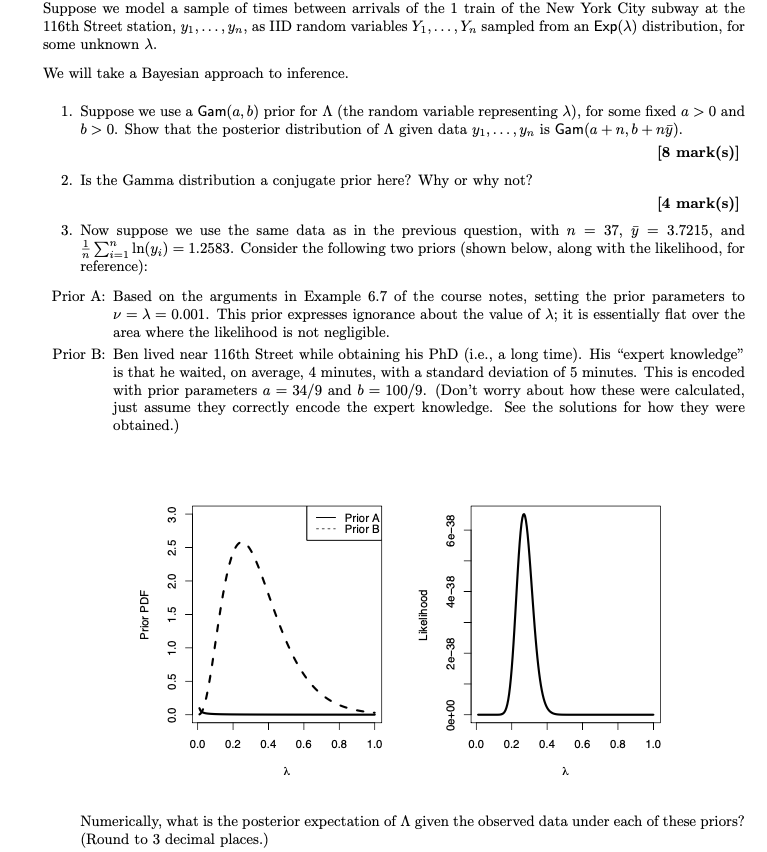

Suppose we model a sample of times between arrivals of the 1 train of the New York City subway at the 116th Street station, $y_1, \ldots, y_n$, as IID random variables $Y_1, \ldots, Y_n$ sampled from an $\text{Exp}(\lambda)$ distribution, for some unknown $\lambda$. We will take a Bayesian approach to inference.

1. Suppose we use a $\text{Gam}(a, b)$ prior for $\Lambda$ (the random variable representing $\lambda$), for some fixed $a > 0$ and $b > 0$. Show that the posterior distribution of $\Lambda$ given data $y_1, \ldots, y_n$ is $\text{Gam}(a + n, b + n\bar{y})$. [8 marks]
2. Is the Gamma distribution a conjugate prior here? Why or why not? [4 marks]
3. Now suppose we use the same data as in the previous question, with $n = 37$, $\bar{y} = 3.7215$, and $\frac{1}{n}\sum_{i=1}^{n} \ln(y_i) = 1.2583$. Consider the following two priors (shown below, along with the likelihood, for reference):

   - **Prior A:** Based on the arguments in Example 6.7 of the course notes, set the prior parameters to $a = b = 0.001$. This prior expresses ignorance about the value of $\lambda$; it is essentially flat over the region where the likelihood is not negligible.
   - **Prior B:** Ben lived near 116th Street while obtaining his PhD (i.e., a long time). His "expert knowledge" is that he waited, on average, 4 minutes, with a standard deviation of 3 minutes. This is encoded with prior parameters $a = 34/9$ and $b = 100/9$. (Don't worry about how these were calculated; just assume they correctly encode the expert knowledge. See the solutions for how they were obtained.)

   *[Figure: left panel, prior PDFs of Prior A and Prior B over $\lambda \in [0, 1]$; right panel, likelihood over $\lambda \in [0, 1]$.]*

   Numerically, what is the posterior expectation of $\Lambda$ given the observed data under each of these priors? (Round to 3 decimal places.)
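A sketch of the conjugacy argument behind part 1, assuming the rate parametrization of the Gamma density, $\pi(\lambda) = \frac{b^a}{\Gamma(a)}\lambda^{a-1}e^{-b\lambda}$ for $\lambda > 0$ (the parametrization consistent with the stated update $b + n\bar{y}$), and writing $\sum_{i=1}^{n} y_i = n\bar{y}$:

$$
\begin{aligned}
p(\lambda \mid y_1, \ldots, y_n) &\propto p(y_1, \ldots, y_n \mid \lambda)\,\pi(\lambda) \\
&= \left(\prod_{i=1}^{n} \lambda e^{-\lambda y_i}\right) \frac{b^a}{\Gamma(a)}\lambda^{a-1} e^{-b\lambda} \\
&\propto \lambda^{n} e^{-\lambda n\bar{y}} \cdot \lambda^{a-1} e^{-b\lambda} \\
&= \lambda^{(a+n)-1} e^{-(b+n\bar{y})\lambda}, \qquad \lambda > 0.
\end{aligned}
$$

The last line is the kernel of a $\text{Gam}(a + n, b + n\bar{y})$ density, so normalizing it gives that distribution exactly. Because the posterior stays in the Gamma family, this same calculation is the usual argument that the Gamma prior is conjugate to the exponential likelihood.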
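A minimal Python sketch of the arithmetic for part 3, assuming (as the update $b + n\bar{y}$ implies) that $b$ is a rate parameter, so the posterior mean is $(a + n)/(b + n\bar{y})$. The helper names `posterior_params` and `gamma_mean` are hypothetical, chosen only for illustration. Note that the mean of the log data is not needed for this calculation under the exponential model.

```python
def posterior_params(a: float, b: float, n: int, ybar: float) -> tuple[float, float]:
    """Hypothetical helper: (shape, rate) of the Gamma posterior for an Exp(lambda) sample.

    Assumes the prior is Gam(a, b) with b a *rate* parameter, so the
    conjugate update is Gam(a + n, b + n * ybar).
    """
    return a + n, b + n * ybar


def gamma_mean(shape: float, rate: float) -> float:
    """Hypothetical helper: mean of a Gamma(shape, rate) distribution."""
    return shape / rate


# Summary statistics given in part 3.
n, ybar = 37, 3.7215

# Prior parameters (a, b) given in part 3.
priors = {
    "Prior A": (0.001, 0.001),     # vague prior, a = b = 0.001
    "Prior B": (34 / 9, 100 / 9),  # "expert knowledge" prior
}

posterior_means = {}
for name, (a, b) in priors.items():
    shape, rate = posterior_params(a, b, n, ybar)
    posterior_means[name] = gamma_mean(shape, rate)
    print(f"{name}: posterior Gam({shape:.4f}, {rate:.4f}), "
          f"E[Lambda | y] = {posterior_means[name]:.3f}")
```

Running it should report posterior means of about 0.269 under Prior A and 0.274 under Prior B. With $n = 37$ observations the data dominate both priors, so the two answers differ only slightly.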