Question

Test TensorFlow with the Python code below in Colab.

import tensorflow as tf

from keras.layers import Input, Dense

from keras.models import Model

import matplotlib.pyplot as plt

Test TensorFlow with linear regression

import numpy as np

#Linear Regression with TensorFlow

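from sklearn.datasets import fetch_california_housing

housing = fetch_california_housing()

m, n = housing.data.shape

# prepend a bias column of ones to the feature matrix

housing_data_plus_bias = np.c_[np.ones((m, 1)), housing.data]

# the dtype digits were lost in the source; float32 is assumed here

X = tf.constant(housing_data_plus_bias, dtype=tf.float32, name="X")

y = tf.constant(housing.target.reshape(-1, 1), dtype=tf.float32, name="y")

XT = tf.transpose(X)

# normal equation: theta = (X^T X)^-1 X^T y

theta = tf.matmul(tf.matmul(tf.linalg.inv(tf.matmul(XT, X)), XT), y)

print(theta)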
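
The closed-form expression evaluated above is the normal equation, theta = (X^T X)^-1 X^T y. As a rough cross-check that does not need TensorFlow or the housing download, the sketch below applies the same formula in NumPy to synthetic data and compares it with `np.linalg.lstsq`. The helper name `normal_equation` and the synthetic data are illustrative assumptions, not part of the original assignment.

```python
import numpy as np

def normal_equation(X, y):
    """Return theta = (X^T X)^-1 X^T y; X must already contain a bias column."""
    XT = X.T
    return np.linalg.inv(XT @ X) @ XT @ y

# Synthetic data (hypothetical, for illustration only): y = 4 + 3*x + small noise
rng = np.random.default_rng(0)
m = 200
x = rng.uniform(0.0, 2.0, size=(m, 1))
y = 4.0 + 3.0 * x + 0.01 * rng.standard_normal((m, 1))

# Prepend the bias column, as the housing example does with np.c_
X_b = np.c_[np.ones((m, 1)), x]

theta = normal_equation(X_b, y)
theta_lstsq, *_ = np.linalg.lstsq(X_b, y, rcond=None)

print(theta.ravel())        # should be close to [4, 3]
print(theta_lstsq.ravel())  # least-squares solver, same result up to rounding
```

Explicitly inverting X^T X mirrors the TensorFlow code; for poorly conditioned data a least-squares solver such as `np.linalg.lstsq` is usually the more stable choice.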

Test TensorFlow with the MNIST handwritten-digit dataset

# Plot ad hoc mnist instances

from keras.datasets import mnist

import matplotlib.pyplot as plt

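# load (download if needed) the MNIST dataset

(X_train, y_train), (X_test, y_test) = mnist.load_data()

# plot 4 images as gray scale

# (subplot codes and image indices were lost in the source; a 2x2 grid of the first four images is assumed)

plt.subplot(221)

plt.imshow(X_train[0], cmap=plt.get_cmap('gray'))

plt.subplot(222)

plt.imshow(X_train[1], cmap=plt.get_cmap('gray'))

plt.subplot(223)

plt.imshow(X_train[2], cmap=plt.get_cmap('gray'))

plt.subplot(224)

plt.imshow(X_train[3], cmap=plt.get_cmap('gray'))

# show the plot

plt.show()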

from keras.datasets import mnist

from keras.models import Sequential

from keras.layers import Dense

from keras.layers import Dropout

from keras.utils import to_categorical

# fix random seed for reproducibility

# (the seed value was lost in the source; 7 is an assumed placeholder)

seed = 7

np.random.seed(seed)

# load data

(X_train, y_train), (X_test, y_test) = mnist.load_data()

# flatten each 28x28 image to a 784-element vector

num_pixels = X_train.shape[1] * X_train.shape[2]

X_train = X_train.reshape(X_train.shape[0], num_pixels).astype('float32')

X_test = X_test.reshape(X_test.shape[0], num_pixels).astype('float32')

# normalize inputs from 0-255 to 0-1

X_train = X_train / 255

X_test = X_test / 255

# one hot encode outputs

y_train = to_categorical(y_train)

y_test = to_categorical(y_test)
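
The three preprocessing steps above (flatten, scale to the 0-1 range, one-hot encode) can be sketched on a tiny made-up array without downloading MNIST. This is a minimal NumPy illustration: the function name `preprocess` and the fake images are assumptions, and indexing `np.eye` stands in for Keras's `to_categorical` rather than reproducing it.

```python
import numpy as np

def preprocess(images, labels, num_classes):
    """Flatten images, scale pixels to [0, 1], and one-hot encode integer labels."""
    num_pixels = images.shape[1] * images.shape[2]
    flat = images.reshape(images.shape[0], num_pixels).astype("float32") / 255.0
    # Row i of the identity matrix is the one-hot vector for class i
    one_hot = np.eye(num_classes, dtype="float32")[labels]
    return flat, one_hot

# Three fake 28x28 "images" (hypothetical data): all black except one white pixel
images = np.zeros((3, 28, 28), dtype=np.uint8)
images[0, 0, 0] = 255
labels = np.array([0, 7, 9])

flat, one_hot = preprocess(images, labels, num_classes=10)
print(flat.shape, one_hot.shape)  # (3, 784) (3, 10)
```

Each label becomes a length-10 row with a single 1 in the position of its class, which is the target shape the softmax output layer expects.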

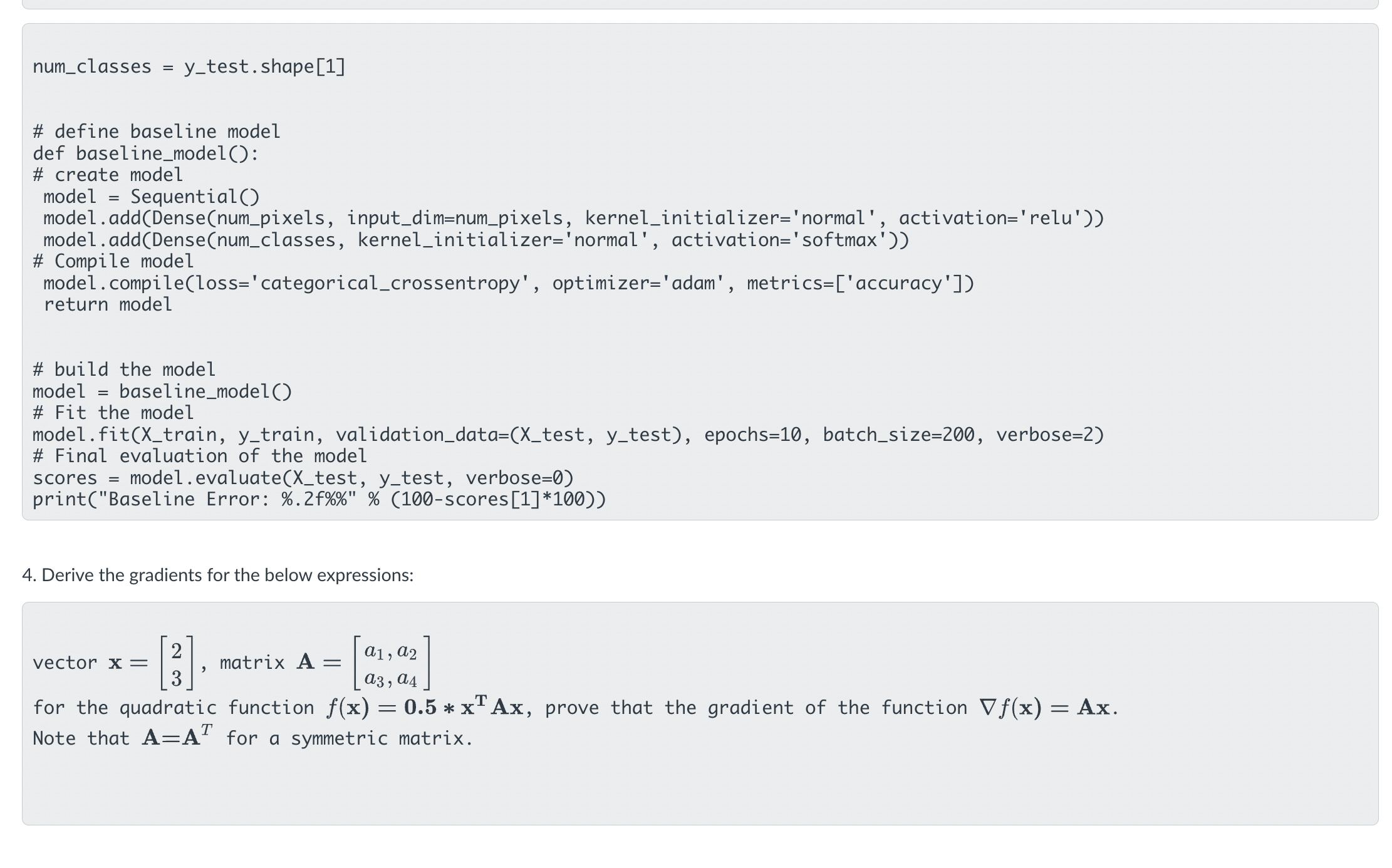

num_classes = y_test.shape[1]

# define baseline model

def baseline_model():

    # create model

    model = Sequential()

    model.add(Dense(num_pixels, input_dim=num_pixels, kernel_initializer='normal', activation='relu'))

    model.add(Dense(num_classes, kernel_initializer='normal', activation='softmax'))

    # Compile model

    model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

    return model

# build the model

model = baseline_model()

# Fit the model

# (the epochs, batch_size, and verbose values were lost in the source; 10, 200, and 2 are assumed)

model.fit(X_train, y_train, validation_data=(X_test, y_test), epochs=10, batch_size=200, verbose=2)

# Final evaluation of the model

scores = model.evaluate(X_test, y_test, verbose=0)

# scores[1] is the accuracy; report the error rate as a percentage

print("Baseline Error: %.2f%%" % (100 - scores[1] * 100))

TO DO:

Derive the gradients for the below expressions:

vector matrix

for the quadratic function prove that the gradient of the function gradf

Note that for a symmetric matrix.

ONLY step has to be done!!
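
The formulas for this task did not survive in the text above, so the following is only a sketch under an assumed form: f(x) = x^T A x + b^T x, with A an n-by-n matrix and b a vector. Writing f as a double sum over A_ij x_i x_j plus a sum over b_i x_i and differentiating with respect to x_k gives grad f = (A + A^T) x + b, which reduces to 2Ax + b when A is symmetric (A = A^T). The code checks both claims numerically with central finite differences; all names in it are illustrative.

```python
import numpy as np

def f(x, A, b):
    """Assumed quadratic form: f(x) = x^T A x + b^T x."""
    return x @ A @ x + b @ x

def analytic_grad(x, A, b):
    """Gradient for a general square A: (A + A^T) x + b."""
    return (A + A.T) @ x + b

def numeric_grad(func, x, eps=1e-6):
    """Central-difference approximation of the gradient of func at x."""
    grad = np.zeros_like(x)
    for i in range(x.size):
        step = np.zeros_like(x)
        step[i] = eps
        grad[i] = (func(x + step) - func(x - step)) / (2 * eps)
    return grad

rng = np.random.default_rng(0)
n = 4
A = rng.standard_normal((n, n))   # general (non-symmetric) matrix
b = rng.standard_normal(n)
x = rng.standard_normal(n)

# General case: numeric gradient vs. (A + A^T) x + b
g_num = numeric_grad(lambda v: f(v, A, b), x)
g_general = analytic_grad(x, A, b)

# Symmetric case: the gradient simplifies to 2 S x + b
S = (A + A.T) / 2
g_sym_num = numeric_grad(lambda v: f(v, S, b), x)
g_sym = 2 * S @ x + b

print(np.max(np.abs(g_num - g_general)))
print(np.max(np.abs(g_sym_num - g_sym)))
```

Both printed differences should be tiny, since central differences are exact for a quadratic up to floating-point rounding.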
