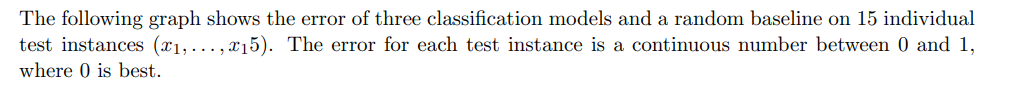

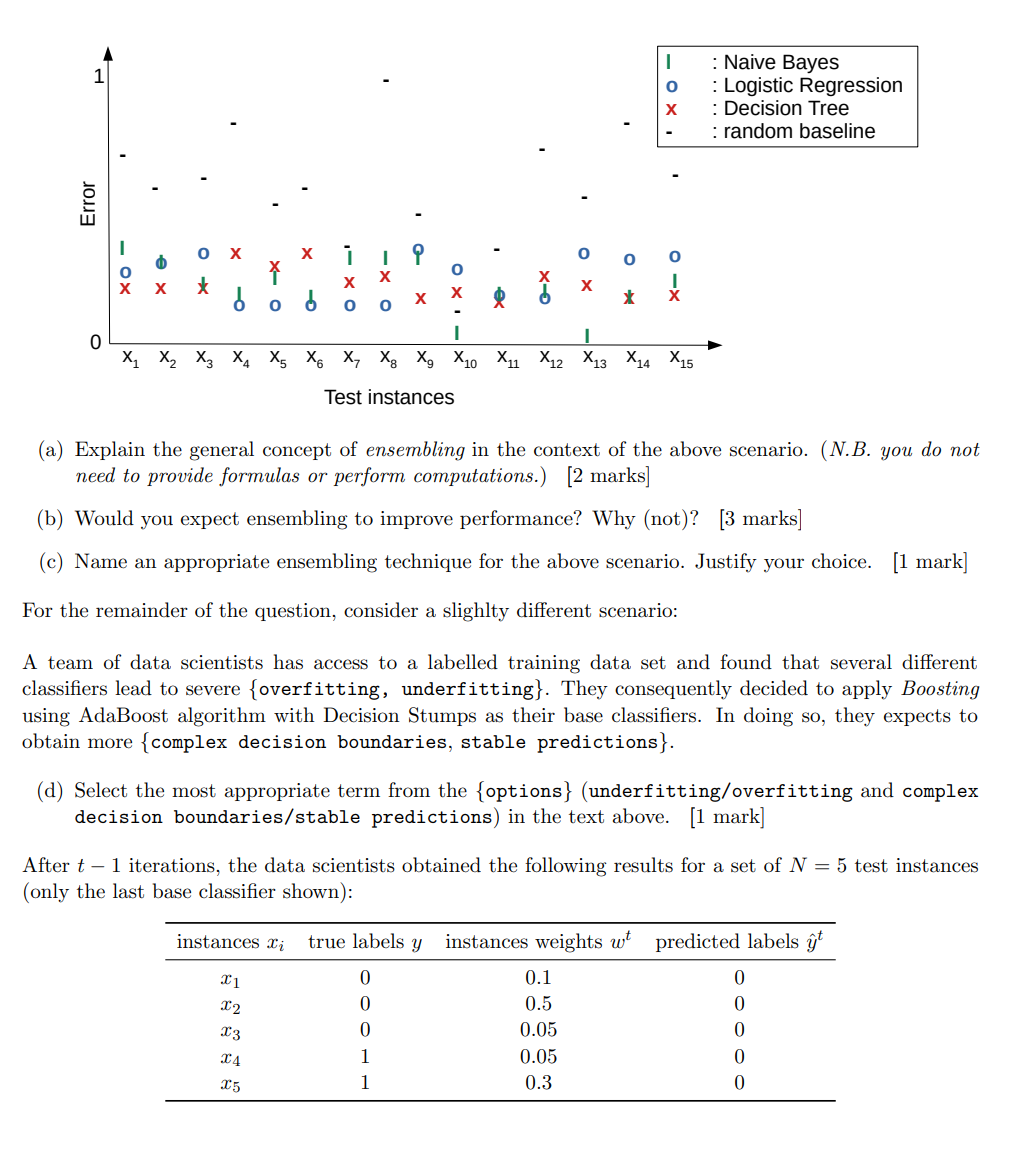

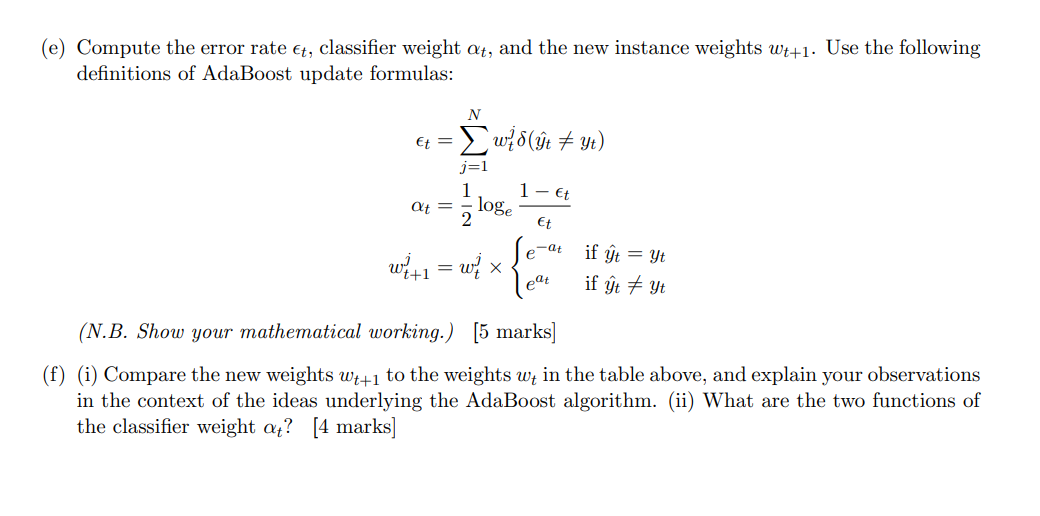

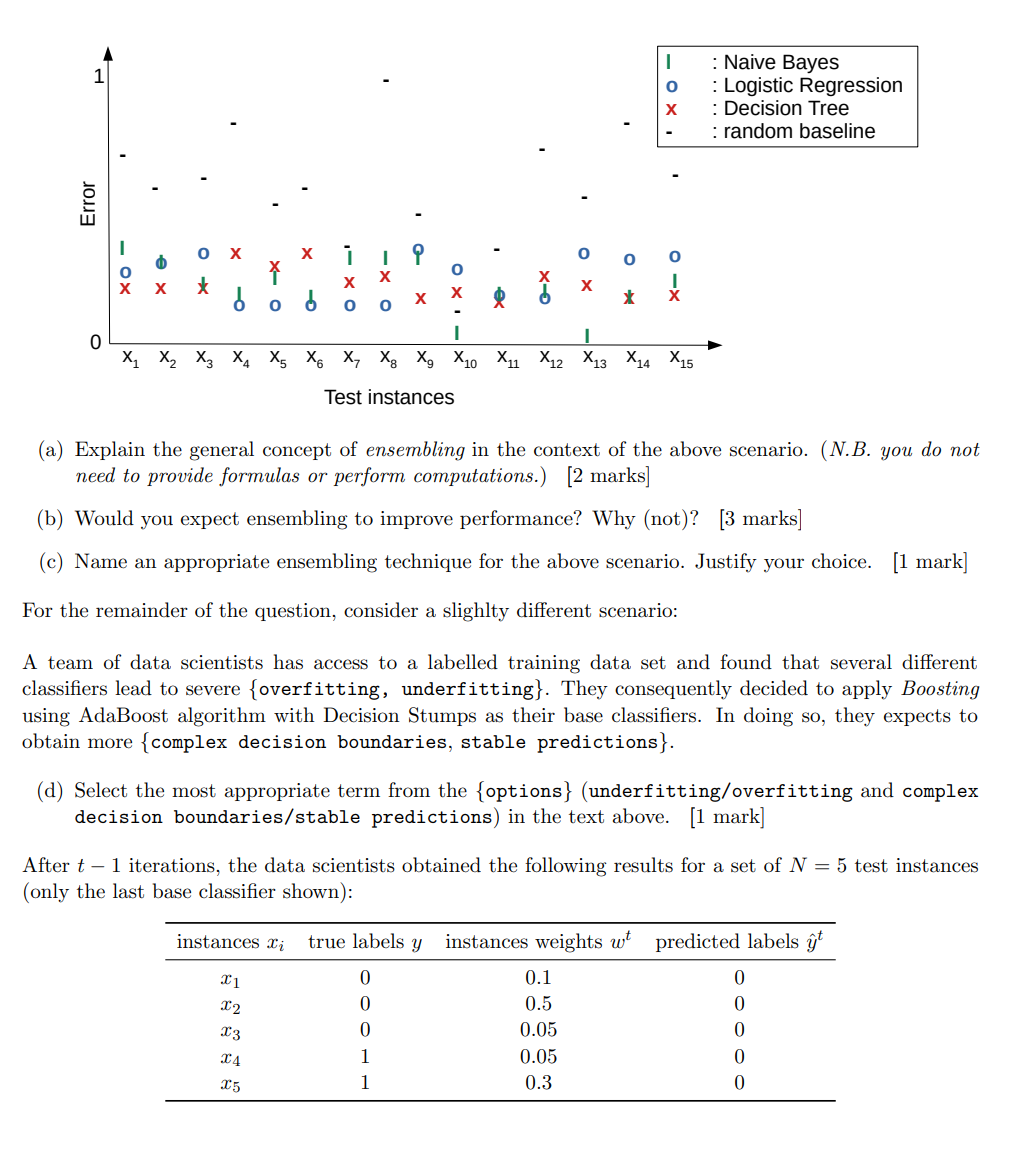

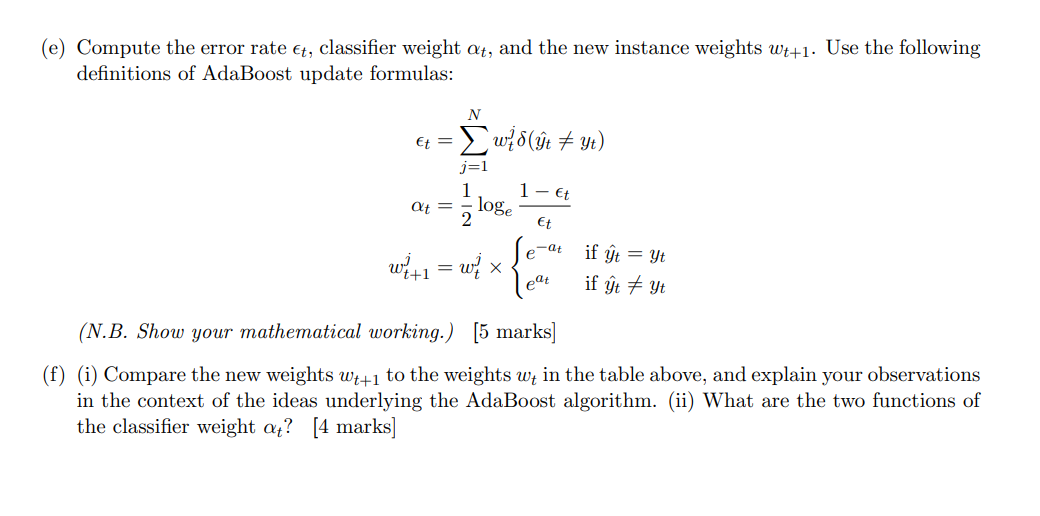

The following graph shows the error of three classification models and a random baseline on 15 individual test instances ($x_1, \dots, x_{15}$). The error for each test instance is a continuous number between 0 and 1, where 0 is best.

[Figure: error (y-axis, 0 to 1) per test instance $x_1, \dots, x_{15}$ (x-axis) for Naive Bayes, Logistic Regression, Decision Tree and the random baseline.]

(a) Explain the general concept of ensembling in the context of the above scenario. (N.B. you do not need to provide formulas or perform computations.) [2 marks]

(b) Would you expect ensembling to improve performance? Why (not)? [3 marks]

(c) Name an appropriate ensembling technique for the above scenario. Justify your choice. [1 mark]

For the remainder of the question, consider a slightly different scenario:

A team of data scientists has access to a labelled training data set and found that several different classifiers lead to severe {overfitting, underfitting}. They consequently decided to apply Boosting using the AdaBoost algorithm with Decision Stumps as their base classifiers. In doing so, they expect to obtain more {complex decision boundaries, stable predictions}.

(d) Select the most appropriate term from the {options} (underfitting/overfitting and complex decision boundaries/stable predictions) in the text above. [1 mark]

After $t - 1$ iterations, the data scientists obtained the following results for a set of $N = 5$ test instances (only the last base classifier shown):

| instance $i$ | true label $y_i$ | instance weight $w_i^t$ | predicted label $\hat{y}_i^t$ |
|---|---|---|---|
| $x_1$ | 0 | 0.1 | 0 |
| $x_2$ | 0 | 0.5 | 0 |
| $x_3$ | 0 | 0.05 | 0 |
| $x_4$ | 1 | 0.05 | 0 |
| $x_5$ | 1 | 0.3 | 0 |

(e) Compute the error rate $\epsilon_t$, classifier weight $\alpha_t$, and the new instance weights $w_i^{t+1}$. Use the following definitions of the AdaBoost update formulas:

$$\epsilon_t = \sum_{i=1}^{N} w_i^t \, \delta(\hat{y}_i^t \neq y_i)$$

$$\alpha_t = \frac{1}{2} \log_e \frac{1 - \epsilon_t}{\epsilon_t}$$

$$w_i^{t+1} = w_i^t \times \begin{cases} e^{-\alpha_t} & \text{if } \hat{y}_i^t = y_i \\ e^{\alpha_t} & \text{if } \hat{y}_i^t \neq y_i \end{cases}$$

(N.B. Show your mathematical working.) [5 marks]

(f) (i) Compare the new weights $w_i^{t+1}$ to the weights $w_i^t$ in the table above, and explain your observations in the context of the ideas underlying the AdaBoost algorithm. (ii) What are the two functions of the classifier weight $\alpha_t$? [4 marks]
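The AdaBoost update formulas quoted in part (e) can be checked numerically. The following is a minimal Python sketch, not a model answer. It assumes the table reads as instance weights 0.1, 0.5, 0.05, 0.05, 0.3 with every predicted label equal to 0 (the reading under which the weights sum to 1), and the function name `adaboost_step` is purely illustrative. Following the formulas as stated, the new weights are not renormalised.

```python
import math


def adaboost_step(y_true, y_pred, weights):
    """Apply one AdaBoost update as defined in part (e), without renormalisation."""
    # Error rate: total weight of the misclassified instances.
    eps = sum(w for y, p, w in zip(y_true, y_pred, weights) if y != p)
    # Classifier weight: half the natural log of (1 - eps) / eps.
    # Undefined for eps == 0 or eps == 1; this sketch does not handle those cases.
    alpha = 0.5 * math.log((1 - eps) / eps)
    # Shrink weights of correctly classified instances, grow the misclassified ones.
    new_weights = [
        w * math.exp(-alpha if y == p else alpha)
        for y, p, w in zip(y_true, y_pred, weights)
    ]
    return eps, alpha, new_weights


# Table values as read from the question (assumed where the source is garbled).
y_true = [0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 0, 0]
weights = [0.1, 0.5, 0.05, 0.05, 0.3]

eps, alpha, new_weights = adaboost_step(y_true, y_pred, weights)
print(f"error rate: {eps:.4f}")
print(f"classifier weight: {alpha:.4f}")
print("new weights:", [round(w, 4) for w in new_weights])
```

Under these assumed values only $x_4$ and $x_5$ are misclassified, so their weights grow while the other three shrink, which is the behaviour part (f)(i) asks about.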