Answered step by step

Verified Expert Solution

Question

1 Approved Answer

This is the input : This is the TinyToken startercode: This is the TinyScanner startercode: This is the TinyTest startercode: When I tried I had

This is the input :

This is the TinyToken startercode:

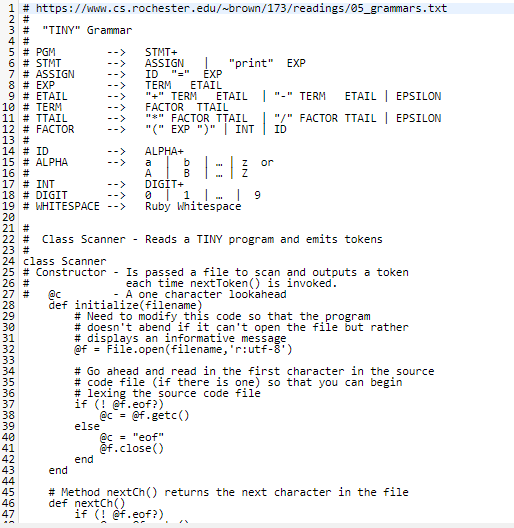

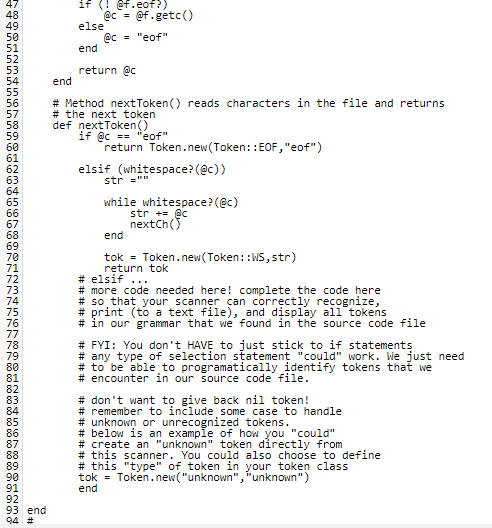

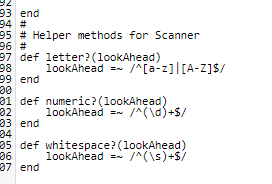

This is the TinyScanner startercode:

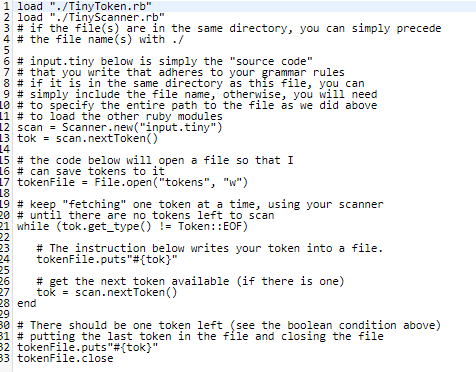

This is the TinyTest startercode:

When I tried I had a mismatch so it was one late if that makes sense. My error just said lexeme mismatch

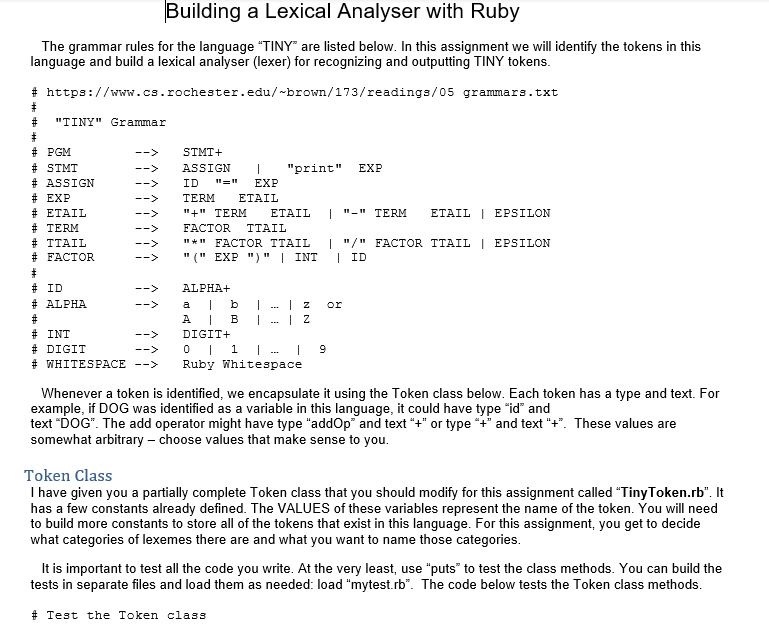

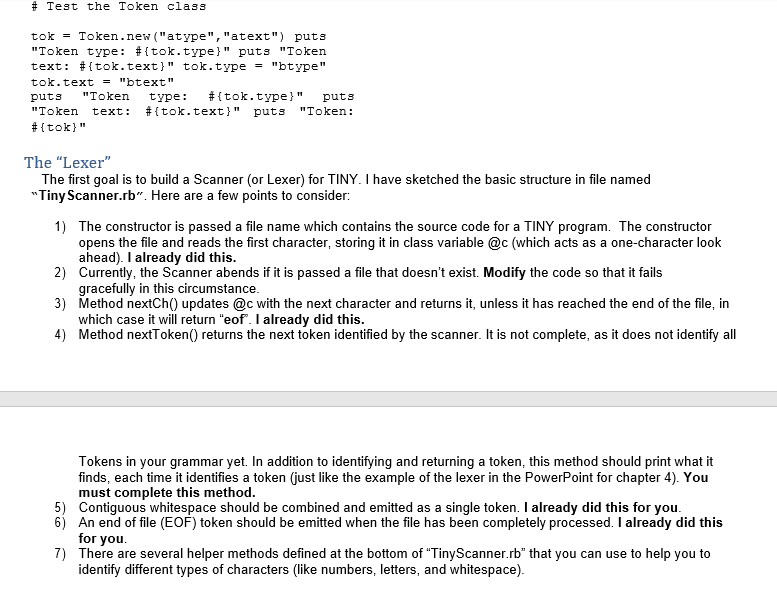

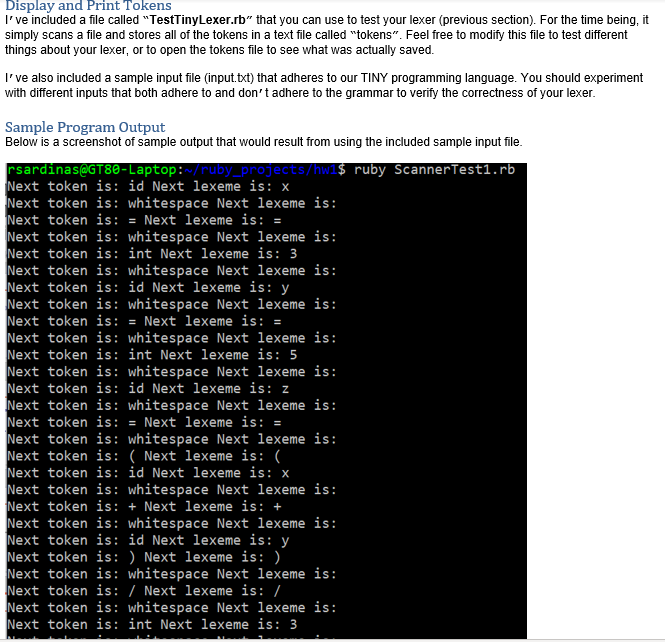

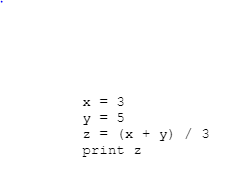

Building a Lexical Analyser with Ruby The grammar rules for the language "TINY" are listed below. In this assignment we will identify the tokens in this language and build a lexical analyser (lexer) for recognizing and outputting TINY tokens. # https://www.cs.rochester.edu/-brown/173/readings/05 grammars.txt + # "TINY" Grammar + # PGM -> # STMT -> # ASSIGN --> # EXP # ETAIL # TERM # TTAIL -> # FACTOR --> + # ID --> # ALPHA --> # # INT --> # DIGIT --> # WHITESPACE --> STMT+ ASSIGN "print" EXP ID EXP TERM ETAIL "+" TERM ETAIL "-" TERM ETAIL | EPSILON FACTOR TTAIL "#" FACTOR TTAIL | "/" FACTOR TTAILEPSILON "(" EXP ")" | INT | ID or ALPHA+ b 1 B |Z DIGIT+ i Ruby Whitespace 9 Whenever a token is identified, we encapsulate it using the Token class below. Each token has a type and text. For example, if DOG was identified as a variable in this language, it could have type "id" and text "DOG". The add operator might have type "addOp and text "+" or type "+" and text "+". These values are somewhat arbitrary - choose values that make sense to you. Token Class I have given you a partially complete Token class that you should modify for this assignment called "TinyToken.rb". It has a few constants already defined. The VALUES of these variables represent the name of the token. You will need to build more constants to store all of the tokens that exist in this language. For this assignment, you get to decide what categories of lexemes there are and what you want to name those categories. It is important to test all the code you write. At the very least, use "puts to test the class methods. You can build the tests in separate files and load them as needed: load "mytest.rb". The code below tests the Token class methods. # Test the Token class # Test the Token class tok = Token.new("atype", "atext") puts "Token type: #{tok.type}" puts "Token text: #{tok.text}" tok.type = "type" tok.text = "text" puts "Token type: #{tok.type}" puts "Token text: #{tok.text}" puts "Token: #{tok)" The "Lexer" The first goal is to build a Scanner (or Lexer) for TINY. I have sketched the basic structure in file named "Tiny Scanner.rb. Here are a few points to consider: 1) The constructor is passed a file name which contains the source code for a TINY program. The constructor opens the file and reads the first character, storing it in class variable @c (which acts as a one-character look ahead). I already did this. 2) Currently, the Scanner abends if it is passed a file that doesn't exist. Modify the code so that it fails gracefully in this circumstance. 3) Method nextCh() updates @c with the next character and returns it, unless it has reached the end of the file, in which case it will return "eof. I already did this. 4) Method nextToken() returns the next token identified by the scanner. It is not complete, as it does not identify all Tokens in your grammar yet. In addition to identifying and returning a token, this method should print what it finds, each time it identifies a token (just like the example of the lexer in the PowerPoint for chapter 4). You must complete this method. 5) Contiguous whitespace should be combined and emitted as a single token. I already did this for you. 6) An end of file (EOF) token should be emitted when the file has been completely processed. I already did this 7) There are several helper methods defined at the bottom of "Tiny Scanner.rb" that you can use to help you to identify different types of characters (like numbers, letters, and whitespace). for you. Display and Print Tokens I've included a file called "TestTinyLexer.rb" that you can use to test your lexer (previous section). For the time being, it simply scans a file and stores all of the tokens in a text file called "tokens". Feel free to modify this file to test different things about your lexer, or to open the tokens file to see what was actually saved. I've also included a sample input file (input.txt) that adheres to our TINY programming language. You should experiment with different inputs that both adhere to and don't adhere to the grammar to verify the correctness of your lexer. Sample Program Output Below is a screenshot of sample output that would result from using the included sample input file. rsardinas@GT80-Laptop: /ruby_projects/hw $ ruby ScannerTest1.rb Next token is: id Next lexeme is: x Next token is: whitespace Next lexeme is: Next token is: = Next lexeme is: = Next token is: whitespace Next lexeme is: Next token is: int Next lexeme is: 3 Next token is: whitespace Next lexeme is: Next token is: id Next lexeme is: y Next token is: whitespace Next lexeme is: Next token is: = Next lexeme is: = Next token is: whitespace Next lexeme is: Next token is: int Next lexeme is: 5 Next token is: whitespace Next lexeme is: Next token is: id Next lexeme is: z Next token is: whitespace Next lexeme is: Next token is: = Next lexeme is: = Next token is: whitespace Next lexeme is: Next token is: ( Next lexeme is: ( Next token is: id Next lexeme is: X Next token is: whitespace Next lexeme is: Next token is: + Next lexeme is: + Next token is: whitespace Next lexeme is: Next token is: id Next lexeme is: y Next token is: ) Next lexeme is: ) Next token is: whitespace Next lexeme is: Next token is: / Next lexeme is: / Next token is: whitespace Next lexeme is: Next token is: int Next lexeme is: 3 x = 3 y = 5 z = (x + (x + y) / 3 print z 1 2 # Class Token - Encapsulates the tokens in TINY 3 4 # @type - the type of token (Category) 5 # text - the text the token represents (Lexeme) 7 class Token 8 attr_accessor : type 9 attr_accessor :text 10 11 # This is the only part of this class that you need to 12 # modify 13 EOF = "eof" 14 LPAREN = "" 15 RPAREN = ")" 16 ADDOP = "4" 17 WS = "whitespace" 18 # add the rest of the tokens needed based on the grammar 19 # specified in the Scanner class "TinyScanner.rb" 20 21 #constructor 22 def initialize(type, text) 23 @type = type 24 @text = text 25 end 26 27 def get_type 28 return @type 29 end 30 31 def get_text 32 return @text 33 end 34 35 # to string method 36 37 return "#{@type} #{@text}" 38 end 39 end def to_s ID 1 # https://www.cs.rochester.edu/-brown/173/readings/05_grammars.txt 2 # 3 # "TINY" Grammar 4 # 5 # PGM STMT+ 6 # STMT --> ASSIGN I "print" EXP 7 # ASSIGN --> ID EXP 8 # EXP TERM ETAIL 9 # ETAIL "+" TERM ETAIL | "." TERM ETAIL | EPSILON 10 # TERM FACTOR TTAIL 11 # TTAIL "*" FACTOR TTAIL "/" FACTOR TTAIL | EPSILON 12 # FACTOR "(" EXP ")" | INT 13 # 14 # ID ALPHA+ 15 # ALPHA --> Z or 16 # A B 17 # INT DIGIT+ 18 # DIGIT 0 1 - 9 19 # WHITESPACE Ruby Whitespace 20 21 # 22 # Class Scanner - Reads a TINY program and emits tokens 23 # 24 class Scanner 25 # Constructor - Is passed a file to scan and outputs a token 26 # each time nextToken() is invoked. 27 # A one character lookahead 28 def initialize(filename) 29 # Need to modify this code so that the program 30 # doesn't abend if it can't open the file but rather 31 # displays an informative message 32 @f = File.open(filename, 'r: utf-8") 33 34 # Go ahead and read in the first character in the source 35 # code file (if there is one) so that you can begin 36 # lexing the source code fil 37 if (! @f.eof?) 38 @c= @f.getc) 39 else 40 @C = f" 41 ef.close() 42 end 43 end 44 45 # Method nextch() returns the next character in the file 46 def nextch) 47 if (!ef.eof?) @c 48 49 50 51 52 if (! @f.eof?) @c = @f.getc) else @C = "eof" end return @c end # Method nextToken() reads characters in the file and returns # the next token def nextToken() if @C == "eof" return Token.new(Token::EOF, "eof") elsif (whitespace?(@c) str "" while whitespace? (@c) str += @C nextch end 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 end 94 # tok - Token.new(Token::WS, str) return tok #elsif ... # more code needed here! complete the code here # so that your scanner can correctly recognize, # print (to a text file), and display all tokens # in our grammar that we found in the source code file # FYI: You don't HAVE to just stick to if statements # any type of selection statement "could" work. We just need # to be able to programatically identify tokens that we # encounter in our source code file. # don't want to give back nil token! # remember to include some case to handle # unknown or unrecognized tokens. # below is an example of how you "could" # create an "unknown" token directly from # this scanner. You could also choose to define # this "type" of token in your token class tok = Token.new("unknown", "unknown") end 92 93 end 94 # 95 # Helper methods for Scanner 96 # 97 def letter?(lookAhead) 98 lookAhead == /[a-z] [A-Z]$/ 99 end 30 01 def numeric?(lookAhead) 32 lookAhead En /(\d)+$/ 23 end 34 25 def whitespace? (lookAhead) 06 lookAhead = /(\s)+$/ 27 end 5 1 load "./TinyToken.rb", 2 load "./Tinyscanner.rb" 3 # if the file(s) are in the same directory, you can simply precede 4 # the file name (s) with ./ 6 # input.tiny below is simply the "source code" 7 # that you write that adheres to your grammar rules 8 # if it is in the same directory as this file, you can 9 # simply include the file name, otherwise, you will need le # to specify the entire path to the file as we did above 11 # to load the other ruby modules 12 scan = Scanner.new("input.tiny") 13 tok = scan.nextToken() 14 15 # the code below will open a file so that I 16 # can save tokens to it 17 tokenFile = File.open("tokens", "w") 18 19 # keep "fetching" one token at a time, using your scanner 20 # until there are no tokens left to scan 21 while (tok.get_type() != Token :: EOF) 22 23 # The instruction below writes your token into a file. 24 tokenFile.puts"#{tok}" 25 26 # get the next token available (if there is one) 27 tok = scan.nextToken() 28 end 30 # There should be one token left (see the boolean condition above) 31 # putting the last token in the file and closing the file 32 tokenFile.puts"#{tok}" 33 tokenFile.close INMI 29 Building a Lexical Analyser with Ruby The grammar rules for the language "TINY" are listed below. In this assignment we will identify the tokens in this language and build a lexical analyser (lexer) for recognizing and outputting TINY tokens. # https://www.cs.rochester.edu/-brown/173/readings/05 grammars.txt + # "TINY" Grammar + # PGM -> # STMT -> # ASSIGN --> # EXP # ETAIL # TERM # TTAIL -> # FACTOR --> + # ID --> # ALPHA --> # # INT --> # DIGIT --> # WHITESPACE --> STMT+ ASSIGN "print" EXP ID EXP TERM ETAIL "+" TERM ETAIL "-" TERM ETAIL | EPSILON FACTOR TTAIL "#" FACTOR TTAIL | "/" FACTOR TTAILEPSILON "(" EXP ")" | INT | ID or ALPHA+ b 1 B |Z DIGIT+ i Ruby Whitespace 9 Whenever a token is identified, we encapsulate it using the Token class below. Each token has a type and text. For example, if DOG was identified as a variable in this language, it could have type "id" and text "DOG". The add operator might have type "addOp and text "+" or type "+" and text "+". These values are somewhat arbitrary - choose values that make sense to you. Token Class I have given you a partially complete Token class that you should modify for this assignment called "TinyToken.rb". It has a few constants already defined. The VALUES of these variables represent the name of the token. You will need to build more constants to store all of the tokens that exist in this language. For this assignment, you get to decide what categories of lexemes there are and what you want to name those categories. It is important to test all the code you write. At the very least, use "puts to test the class methods. You can build the tests in separate files and load them as needed: load "mytest.rb". The code below tests the Token class methods. # Test the Token class # Test the Token class tok = Token.new("atype", "atext") puts "Token type: #{tok.type}" puts "Token text: #{tok.text}" tok.type = "type" tok.text = "text" puts "Token type: #{tok.type}" puts "Token text: #{tok.text}" puts "Token: #{tok)" The "Lexer" The first goal is to build a Scanner (or Lexer) for TINY. I have sketched the basic structure in file named "Tiny Scanner.rb. Here are a few points to consider: 1) The constructor is passed a file name which contains the source code for a TINY program. The constructor opens the file and reads the first character, storing it in class variable @c (which acts as a one-character look ahead). I already did this. 2) Currently, the Scanner abends if it is passed a file that doesn't exist. Modify the code so that it fails gracefully in this circumstance. 3) Method nextCh() updates @c with the next character and returns it, unless it has reached the end of the file, in which case it will return "eof. I already did this. 4) Method nextToken() returns the next token identified by the scanner. It is not complete, as it does not identify all Tokens in your grammar yet. In addition to identifying and returning a token, this method should print what it finds, each time it identifies a token (just like the example of the lexer in the PowerPoint for chapter 4). You must complete this method. 5) Contiguous whitespace should be combined and emitted as a single token. I already did this for you. 6) An end of file (EOF) token should be emitted when the file has been completely processed. I already did this 7) There are several helper methods defined at the bottom of "Tiny Scanner.rb" that you can use to help you to identify different types of characters (like numbers, letters, and whitespace). for you. Display and Print Tokens I've included a file called "TestTinyLexer.rb" that you can use to test your lexer (previous section). For the time being, it simply scans a file and stores all of the tokens in a text file called "tokens". Feel free to modify this file to test different things about your lexer, or to open the tokens file to see what was actually saved. I've also included a sample input file (input.txt) that adheres to our TINY programming language. You should experiment with different inputs that both adhere to and don't adhere to the grammar to verify the correctness of your lexer. Sample Program Output Below is a screenshot of sample output that would result from using the included sample input file. rsardinas@GT80-Laptop: /ruby_projects/hw $ ruby ScannerTest1.rb Next token is: id Next lexeme is: x Next token is: whitespace Next lexeme is: Next token is: = Next lexeme is: = Next token is: whitespace Next lexeme is: Next token is: int Next lexeme is: 3 Next token is: whitespace Next lexeme is: Next token is: id Next lexeme is: y Next token is: whitespace Next lexeme is: Next token is: = Next lexeme is: = Next token is: whitespace Next lexeme is: Next token is: int Next lexeme is: 5 Next token is: whitespace Next lexeme is: Next token is: id Next lexeme is: z Next token is: whitespace Next lexeme is: Next token is: = Next lexeme is: = Next token is: whitespace Next lexeme is: Next token is: ( Next lexeme is: ( Next token is: id Next lexeme is: X Next token is: whitespace Next lexeme is: Next token is: + Next lexeme is: + Next token is: whitespace Next lexeme is: Next token is: id Next lexeme is: y Next token is: ) Next lexeme is: ) Next token is: whitespace Next lexeme is: Next token is: / Next lexeme is: / Next token is: whitespace Next lexeme is: Next token is: int Next lexeme is: 3 x = 3 y = 5 z = (x + (x + y) / 3 print z 1 2 # Class Token - Encapsulates the tokens in TINY 3 4 # @type - the type of token (Category) 5 # text - the text the token represents (Lexeme) 7 class Token 8 attr_accessor : type 9 attr_accessor :text 10 11 # This is the only part of this class that you need to 12 # modify 13 EOF = "eof" 14 LPAREN = "" 15 RPAREN = ")" 16 ADDOP = "4" 17 WS = "whitespace" 18 # add the rest of the tokens needed based on the grammar 19 # specified in the Scanner class "TinyScanner.rb" 20 21 #constructor 22 def initialize(type, text) 23 @type = type 24 @text = text 25 end 26 27 def get_type 28 return @type 29 end 30 31 def get_text 32 return @text 33 end 34 35 # to string method 36 37 return "#{@type} #{@text}" 38 end 39 end def to_s ID 1 # https://www.cs.rochester.edu/-brown/173/readings/05_grammars.txt 2 # 3 # "TINY" Grammar 4 # 5 # PGM STMT+ 6 # STMT --> ASSIGN I "print" EXP 7 # ASSIGN --> ID EXP 8 # EXP TERM ETAIL 9 # ETAIL "+" TERM ETAIL | "." TERM ETAIL | EPSILON 10 # TERM FACTOR TTAIL 11 # TTAIL "*" FACTOR TTAIL "/" FACTOR TTAIL | EPSILON 12 # FACTOR "(" EXP ")" | INT 13 # 14 # ID ALPHA+ 15 # ALPHA --> Z or 16 # A B 17 # INT DIGIT+ 18 # DIGIT 0 1 - 9 19 # WHITESPACE Ruby Whitespace 20 21 # 22 # Class Scanner - Reads a TINY program and emits tokens 23 # 24 class Scanner 25 # Constructor - Is passed a file to scan and outputs a token 26 # each time nextToken() is invoked. 27 # A one character lookahead 28 def initialize(filename) 29 # Need to modify this code so that the program 30 # doesn't abend if it can't open the file but rather 31 # displays an informative message 32 @f = File.open(filename, 'r: utf-8") 33 34 # Go ahead and read in the first character in the source 35 # code file (if there is one) so that you can begin 36 # lexing the source code fil 37 if (! @f.eof?) 38 @c= @f.getc) 39 else 40 @C = f" 41 ef.close() 42 end 43 end 44 45 # Method nextch() returns the next character in the file 46 def nextch) 47 if (!ef.eof?) @c 48 49 50 51 52 if (! @f.eof?) @c = @f.getc) else @C = "eof" end return @c end # Method nextToken() reads characters in the file and returns # the next token def nextToken() if @C == "eof" return Token.new(Token::EOF, "eof") elsif (whitespace?(@c) str "" while whitespace? (@c) str += @C nextch end 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 end 94 # tok - Token.new(Token::WS, str) return tok #elsif ... # more code needed here! complete the code here # so that your scanner can correctly recognize, # print (to a text file), and display all tokens # in our grammar that we found in the source code file # FYI: You don't HAVE to just stick to if statements # any type of selection statement "could" work. We just need # to be able to programatically identify tokens that we # encounter in our source code file. # don't want to give back nil token! # remember to include some case to handle # unknown or unrecognized tokens. # below is an example of how you "could" # create an "unknown" token directly from # this scanner. You could also choose to define # this "type" of token in your token class tok = Token.new("unknown", "unknown") end 92 93 end 94 # 95 # Helper methods for Scanner 96 # 97 def letter?(lookAhead) 98 lookAhead == /[a-z] [A-Z]$/ 99 end 30 01 def numeric?(lookAhead) 32 lookAhead En /(\d)+$/ 23 end 34 25 def whitespace? (lookAhead) 06 lookAhead = /(\s)+$/ 27 end 5 1 load "./TinyToken.rb", 2 load "./Tinyscanner.rb" 3 # if the file(s) are in the same directory, you can simply precede 4 # the file name (s) with ./ 6 # input.tiny below is simply the "source code" 7 # that you write that adheres to your grammar rules 8 # if it is in the same directory as this file, you can 9 # simply include the file name, otherwise, you will need le # to specify the entire path to the file as we did above 11 # to load the other ruby modules 12 scan = Scanner.new("input.tiny") 13 tok = scan.nextToken() 14 15 # the code below will open a file so that I 16 # can save tokens to it 17 tokenFile = File.open("tokens", "w") 18 19 # keep "fetching" one token at a time, using your scanner 20 # until there are no tokens left to scan 21 while (tok.get_type() != Token :: EOF) 22 23 # The instruction below writes your token into a file. 24 tokenFile.puts"#{tok}" 25 26 # get the next token available (if there is one) 27 tok = scan.nextToken() 28 end 30 # There should be one token left (see the boolean condition above) 31 # putting the last token in the file and closing the file 32 tokenFile.puts"#{tok}" 33 tokenFile.close INMI 29Step by Step Solution

There are 3 Steps involved in it

Step: 1

Get Instant Access to Expert-Tailored Solutions

See step-by-step solutions with expert insights and AI powered tools for academic success

Step: 2

Step: 3

Ace Your Homework with AI

Get the answers you need in no time with our AI-driven, step-by-step assistance

Get Started