Write a Python program.

Submit the code for Part 2.

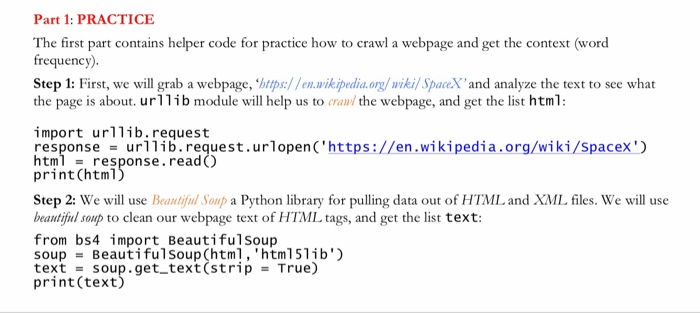

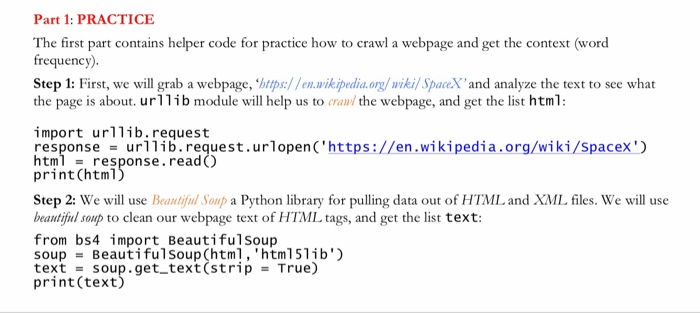

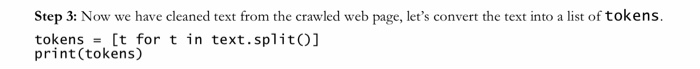

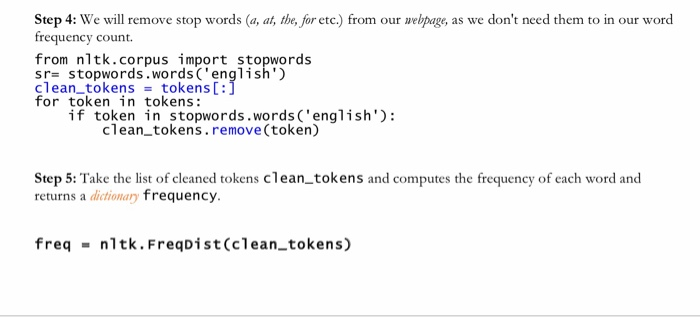

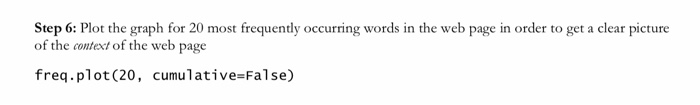

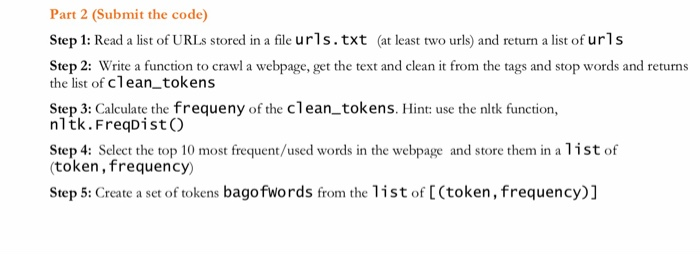

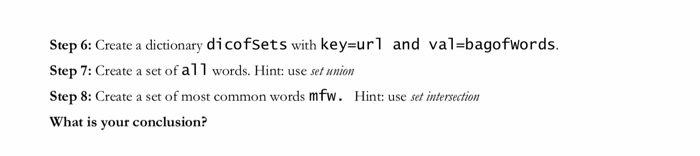

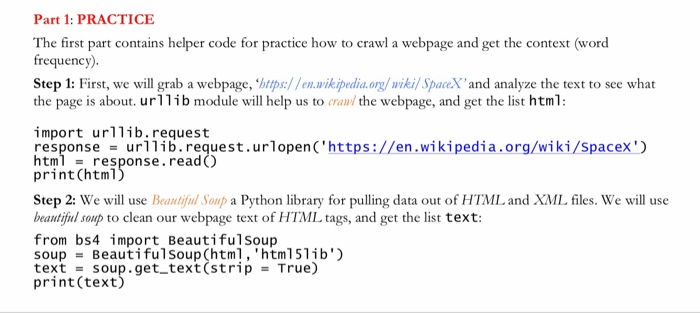

Part 1: PRACTICE

The first part contains helper code for practicing how to crawl a web page and get its context (word frequency).

Step 1: First, we will grab a web page, 'https://en.wikipedia.org/wiki/SpaceX', and analyze the text to see what the page is about. The urllib module will help us crawl the web page and get its HTML:

```python
import urllib.request

url = 'https://en.wikipedia.org/wiki/SpaceX'
# Some sites (including Wikipedia) may reject requests without a descriptive User-Agent;
# the value below is a placeholder.
request = urllib.request.Request(url, headers={'User-Agent': 'word-frequency-practice/0.1'})
with urllib.request.urlopen(request) as response:
    html = response.read()  # raw bytes of the page
print(html)
```

Step 2: We will use Beautiful Soup, a Python library for pulling data out of HTML and XML files, to clean the page text of HTML tags and get the plain text:

```python
from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'html5lib')  # needs the html5lib package; 'html.parser' also works
# separator=' ' keeps words from adjacent tags from being glued together
text = soup.get_text(separator=' ', strip=True)
print(text)
```

Step 3: Now that we have cleaned text from the crawled web page, let's convert the text into a list of tokens:

```python
tokens = text.split()  # split on any whitespace
print(tokens)
```

Step 4: We will remove stop words (a, at, the, for, etc.) from the text, as we don't need them in our word frequency count:

```python
import nltk
from nltk.corpus import stopwords

nltk.download('stopwords')            # one-time download of the stop-word corpus
sr = set(stopwords.words('english'))  # a set makes membership tests fast
# Compare in lowercase so that "The" is removed as well as "the"
clean_tokens = [token for token in tokens if token.lower() not in sr]
```

Step 5: Take the list of cleaned tokens, clean_tokens, compute the frequency of each word, and return a frequency dictionary:

```python
freq = nltk.FreqDist(clean_tokens)  # behaves like a dict mapping word -> count
```

Step 6: Plot a graph of the 20 most frequently occurring words in the web page to get a clear picture of its context:

```python
freq.plot(20, cumulative=False)  # requires matplotlib
```

Part 2 (Submit the code)

Step 1: Read a list of URLs stored in a file urls.txt (at least two URLs) and return a list of URLs.

Step 2: Write a function that crawls a web page, gets the text, cleans it of tags and stop words, and returns the list clean_tokens.

Step 3: Calculate the frequency of the clean tokens. Hint: use the NLTK function nltk.FreqDist().

Step 4: Select the 10 most frequently used words in the web page and store them in a list of (token, frequency) pairs.

Step 5: Create a set of tokens, bagofwords, from the list of [(token, frequency)].

Step 6: Create a dictionary dicofsets with key = url and value = bagofwords.

Step 7: Create a set of all words. Hint: use set union.

Step 8: Create a set of the most common words, mfw. Hint: use set intersection.

What is your conclusion?
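One possible shape for Part 2, Steps 1 to 4, is sketched below. To keep it runnable without extra installs, it swaps in standard-library pieces: `html.parser.HTMLParser` stands in for Beautiful Soup, `collections.Counter` stands in for `nltk.FreqDist` (FreqDist is a Counter subclass, so `most_common(10)` works the same on either), and the small `STOP_WORDS` set is a placeholder for `stopwords.words('english')`. The names `read_urls`, `crawl`, `clean_tokens_from_html`, `top_words`, and the User-Agent string are hypothetical; for the submission you would use the NLTK and Beautiful Soup calls from Part 1.

```python
import urllib.request
from collections import Counter
from html.parser import HTMLParser

# Placeholder stop words; in the assignment use set(stopwords.words('english')).
STOP_WORDS = {'a', 'an', 'and', 'at', 'for', 'in', 'is', 'of', 'on', 'the', 'to', 'was', 'with'}


class _TextExtractor(HTMLParser):
    """Collects text content, skipping anything inside <script> or <style>."""

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._skip = 0  # how many <script>/<style> tags we are currently inside

    def handle_starttag(self, tag, attrs):
        if tag in ('script', 'style'):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ('script', 'style') and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.chunks.append(data)


def read_urls(path='urls.txt'):
    """Step 1: return the non-blank lines of the file as a list of URLs."""
    with open(path, encoding='utf-8') as f:
        return [line.strip() for line in f if line.strip()]


def clean_tokens_from_html(html):
    """Strip tags, split on whitespace, and drop stop words (case-insensitive)."""
    parser = _TextExtractor()
    parser.feed(html)
    tokens = ' '.join(parser.chunks).split()
    return [t for t in tokens if t.lower() not in STOP_WORDS]


def crawl(url):
    """Step 2: download a page and return its list of clean tokens."""
    request = urllib.request.Request(url, headers={'User-Agent': 'word-frequency-practice/0.1'})
    with urllib.request.urlopen(request) as response:
        html = response.read().decode('utf-8', errors='replace')
    return clean_tokens_from_html(html)


def top_words(clean_tokens, n=10):
    """Steps 3-4: count tokens and return the n most common (token, frequency) pairs."""
    return Counter(clean_tokens).most_common(n)
```

With a urls.txt in the working directory, `for url in read_urls(): print(url, top_words(crawl(url)))` prints each page's top-10 list. Keeping `clean_tokens_from_html` separate from `crawl` lets you check the cleaning logic on a literal HTML string without any network access.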
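Steps 5 to 8 are plain set operations. The sketch below runs them on hypothetical data: the two example.com URLs and their three-item (token, frequency) lists are made up and shortened from ten items for readability; in the real program they would come from Step 4. The helper name `bag_of_words` is also hypothetical.

```python
# Hypothetical Step 4 output: URL -> list of (token, frequency) pairs.
top10_by_url = {
    'https://example.com/page-a': [('rocket', 12), ('launch', 9), ('orbit', 7)],
    'https://example.com/page-b': [('rocket', 8), ('engine', 6), ('orbit', 5)],
}


def bag_of_words(pairs):
    """Step 5: keep only the tokens from a list of (token, frequency) pairs."""
    return {token for token, _ in pairs}


# Step 6: map each URL to its bag of words.
dicofsets = {url: bag_of_words(pairs) for url, pairs in top10_by_url.items()}

# Step 7: union, i.e. every word that appears in at least one page's top list.
all_words = set().union(*dicofsets.values())

# Step 8: intersection, i.e. words that appear in every page's top list.
mfw = set.intersection(*dicofsets.values())

print(sorted(all_words))
print(sorted(mfw))
```

Here `all_words` is `{'rocket', 'launch', 'orbit', 'engine'}` and `mfw` is `{'rocket', 'orbit'}`. Note that `set.intersection(*...)` needs at least one set, so it raises `TypeError` if urls.txt yields no pages, whereas `set().union(*...)` simply returns an empty set.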