Question: Write MATLAB code that produces the desired outputs. Load the Y_train, Y_test, X_train and X_test data from the files on my computer.

In this assignment, you will train feedforward neural networks with three layers to classify motion data. The data set contains 10299 movement patterns with 561 features, belonging to 6 classes. It is divided into training and test subsets, which include 7352 and 2947 patterns, respectively. As a first step, load the training and test data sets with code similar to the following.

    X_train = load("human+activity+recognition+using+smartphones/UCI HAR Dataset/train/X_train.txt");
    Y_train = load("human+activity+recognition+using+smartphones/UCI HAR Dataset/train/Y_train.txt");
    X_test = load("human+activity+recognition+using+smartphones/UCI HAR Dataset/test/X_test.txt");
    Y_test = load("human+activity+recognition+using+smartphones/UCI HAR Dataset/test/Y_test.txt");
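The loading step can be made a little more robust by building the paths once and checking the loaded sizes against the numbers stated above. This is a minimal sketch, not a required form: `dataRoot` and the four `...Path` variables are illustrative names, and the folder is assumed to sit in the current working directory.

```matlab
% dataRoot is an assumed location; change it to wherever the data set
% was unpacked on your computer (an absolute path works as well).
dataRoot = fullfile('human+activity+recognition+using+smartphones', 'UCI HAR Dataset');

trainXPath = fullfile(dataRoot, 'train', 'X_train.txt');
trainYPath = fullfile(dataRoot, 'train', 'Y_train.txt');
testXPath  = fullfile(dataRoot, 'test',  'X_test.txt');
testYPath  = fullfile(dataRoot, 'test',  'Y_test.txt');
% Note: on case-sensitive file systems the label files may be named
% y_train.txt and y_test.txt; adjust the two Y paths if load fails.

if exist(trainXPath, 'file') == 2
    % load reads a whitespace-separated numeric text file into a matrix.
    X_train = load(trainXPath);
    Y_train = load(trainYPath);
    X_test  = load(testXPath);
    Y_test  = load(testYPath);

    % Sanity checks against the sizes given in the assignment text.
    assert(isequal(size(X_train), [7352 561]), 'Unexpected X_train size');
    assert(isequal(size(X_test),  [2947 561]), 'Unexpected X_test size');
    assert(numel(Y_train) == 7352 && numel(Y_test) == 2947, 'Unexpected label count');
else
    warning('Data set not found under "%s"; adjust dataRoot.', dataRoot);
end
```

If the files are found, the workspace then holds the four variables used in the rest of the assignment; otherwise only a warning is printed.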

Here, the Y_train and Y_test variables contain class labels from 1 to 6. You must transform them into a one-hot encoding. After that, you must build a three-layer neural network that feeds the 561 features into a first hidden layer of 200 neurons with hyperbolic tangent activation functions. The second hidden layer consists of 100 neurons with rectified linear unit activation functions. The output layer consists of 6 neurons with either sigmoid or softmax activation functions. Finally, the cost function will be either MSE or cross entropy. To do this, you can use the following code.

    h1_size = 200;
    h2_size = 100;
    epochLimit = 80;
    input_size = size(X_train,2);
    Wh1e = (rand(h1_size, input_size + 1)-0.5)/100;
    Wh2e = (rand(h2_size, h1_size + 1)-0.5)/100;
    Woe = (rand(length(unique(Y_test)), h2_size + 1)-0.5)/100;

1. Train the network on the training set for 80 epochs, using the sigmoid activation function on the output layer and the mean squared error metric with learning rate eta = 0.001. Using the plot function, show the change of the MSE loss over the training and test sets in the same figure with respect to the epoch number (20 points). Find the minimum loss value that occurred and its epoch number on each set (4 points). Generate a confusion matrix for the training and test sets using the weights at the end of the training (16 points). Calculate the ratio of successful classifications to all patterns in each set using code similar to the following (6 points). All related code must be explained to earn these points.

       trainingSuccessRate = sum(diag(confusionMatrixTraining))/sum(sum(confusionMatrixTraining))
       testSuccessRate = sum(diag(confusionMatrixTest))/sum(sum(confusionMatrixTest))

2. Repeat the first question using the softmax activation function on the output layer and the cross-entropy cost function instead of sigmoid and MSE (46 points).
3. Comment on the difference between using sigmoid + MSE and softmax + CE, considering their advantages and drawbacks (8 points).
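The first task (one-hot encoding, sigmoid output, MSE loss) could be sketched roughly as follows. Several things here are assumptions rather than part of the assignment: the small synthetic data set stands in for the real files so that the script runs on its own, the weights are updated once per pattern in file order, and the confusion matrix is built with `accumarray` instead of a toolbox function. Names such as `D_train`, `addBias`, `forward`, `mseLoss`, `lossTrain` and `predTrain` are illustrative.

```matlab
% Toy stand-in data (assumption) so the sketch runs without the real files.
% With the real set loaded, delete this block and keep everything below it.
rng(0);                                        % reproducible random numbers
nClasses = 6;
Y_train = repmat((1:nClasses)', 20, 1);        % 120 toy training labels
Y_test  = repmat((1:nClasses)', 5, 1);         % 30 toy test labels
centers = 2 * rand(nClasses, 10) - 1;          % hypothetical class centres
X_train = centers(Y_train, :) + 0.1 * randn(numel(Y_train), 10);
X_test  = centers(Y_test, :)  + 0.1 * randn(numel(Y_test), 10);

% One-hot encoding: row l has a 1 in column Y(l) and 0 elsewhere.
identityQ = eye(nClasses);
D_train = identityQ(Y_train, :);
D_test  = identityQ(Y_test, :);

% Sizes and weight initialisation from the assignment (last column = bias).
h1_size = 200; h2_size = 100; epochLimit = 80; eta = 0.001;
input_size = size(X_train, 2);
Wh1e = (rand(h1_size, input_size + 1) - 0.5) / 100;
Wh2e = (rand(h2_size, h1_size + 1) - 0.5) / 100;
Woe  = (rand(nClasses, h2_size + 1) - 0.5) / 100;

sigmoid = @(v) 1 ./ (1 + exp(-v));
addBias = @(A) [A; ones(1, size(A, 2))];       % append a row of ones
% Forward pass over a whole set; X is h-by-features, the result is q-by-h.
forward = @(X, W1, W2, W3) sigmoid(W3 * addBias(max(0, W2 * addBias(tanh(W1 * addBias(X'))))));
mseLoss = @(D, O) sum(sum((D' - O).^2)) / (2 * size(D, 1));

lossTrain = zeros(epochLimit, 1);
lossTest  = zeros(epochLimit, 1);
for epoch = 1:epochLimit
    for l = 1:size(X_train, 1)                 % one update per pattern
        x0 = [X_train(l, :)'; 1];              % input plus bias term
        y1 = [tanh(Wh1e * x0); 1];             % hidden layer 1 (tanh)
        v2 = Wh2e * y1;
        y2 = [max(0, v2); 1];                  % hidden layer 2 (ReLU)
        o  = sigmoid(Woe * y2);                % output layer (sigmoid)

        % Backpropagate the per-pattern error 0.5 * sum((d - o).^2).
        deltaO = (o - D_train(l, :)') .* o .* (1 - o);
        delta2 = (Woe(:, 1:end-1)' * deltaO) .* (v2 > 0);
        delta1 = (Wh2e(:, 1:end-1)' * delta2) .* (1 - y1(1:end-1).^2);

        Woe  = Woe  - eta * (deltaO * y2');
        Wh2e = Wh2e - eta * (delta2 * y1');
        Wh1e = Wh1e - eta * (delta1 * x0');
    end
    lossTrain(epoch) = mseLoss(D_train, forward(X_train, Wh1e, Wh2e, Woe));
    lossTest(epoch)  = mseLoss(D_test,  forward(X_test,  Wh1e, Wh2e, Woe));
end

% MSE loss on both sets in one figure.
figure;
plot(1:epochLimit, lossTrain, 1:epochLimit, lossTest);
xlabel('Epoch'); ylabel('MSE loss'); legend('Training', 'Test');

% Minimum loss and the epoch at which it occurred.
[minTrainLoss, minTrainEpoch] = min(lossTrain);
[minTestLoss,  minTestEpoch]  = min(lossTest);

% Confusion matrices (rows: true class, columns: predicted class).
[~, predTrain] = max(forward(X_train, Wh1e, Wh2e, Woe), [], 1);
[~, predTest]  = max(forward(X_test,  Wh1e, Wh2e, Woe), [], 1);
confusionMatrixTraining = accumarray([Y_train(:), predTrain(:)], 1, [nClasses nClasses]);
confusionMatrixTest     = accumarray([Y_test(:),  predTest(:)],  1, [nClasses nClasses]);

trainingSuccessRate = sum(diag(confusionMatrixTraining)) / sum(sum(confusionMatrixTraining))
testSuccessRate     = sum(diag(confusionMatrixTest))     / sum(sum(confusionMatrixTest))
```

With the real data in the workspace, the toy block at the top is dropped and `nClasses` can be set from `length(unique(Y_test))` as in the given initialisation. Whether per-pattern or batch updates are expected is not stated in the assignment, so that choice should be checked against the course material.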

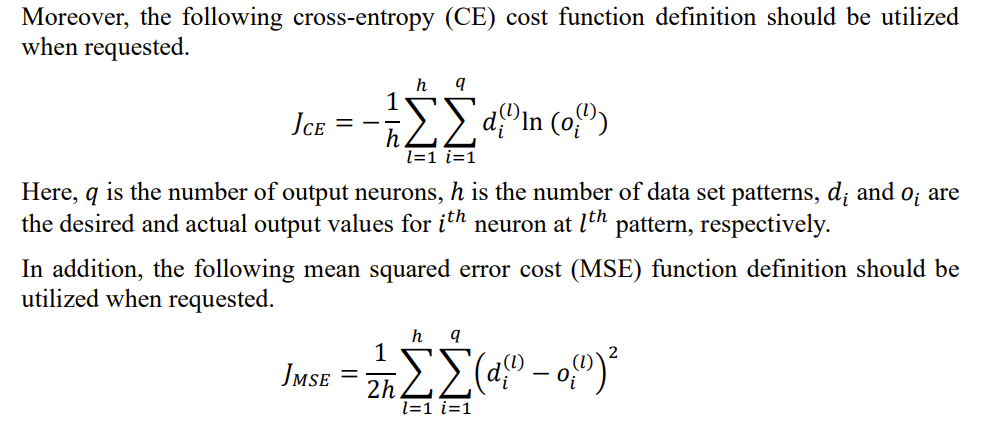

Moreover, the following cross-entropy (CE) cost function definition should be used when requested.

$$J_{CE} = -\frac{1}{h}\sum_{l=1}^{h}\sum_{i=1}^{q} d_i^{(l)} \ln\left(o_i^{(l)}\right)$$

Here, $q$ is the number of output neurons, $h$ is the number of data set patterns, and $d_i^{(l)}$ and $o_i^{(l)}$ are the desired and actual output values of the $i$th neuron for the $l$th pattern, respectively. In addition, the following mean squared error (MSE) cost function definition should be used when requested.

$$J_{MSE} = \frac{1}{2h}\sum_{l=1}^{h}\sum_{i=1}^{q} \left(d_i^{(l)} - o_i^{(l)}\right)^2$$
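The two cost definitions translate almost directly into MATLAB. The sketch below assumes that the CE definition carries the usual leading minus sign and that the desired and actual outputs are stored as h-by-q matrices (one row per pattern). The names `costCE`, `costMSE` and `softmaxRows` are illustrative, and the small matrices are made-up numbers used only to check the arithmetic.

```matlab
% D and O are h-by-q: one row per pattern, one column per output neuron.
costCE  = @(D, O) -sum(sum(D .* log(O))) / size(D, 1);
costMSE = @(D, O)  sum(sum((D - O).^2)) / (2 * size(D, 1));

% Row-wise softmax; subtracting the row maximum avoids overflow in exp.
softmaxRows = @(V) exp(V - max(V, [], 2)) ./ sum(exp(V - max(V, [], 2)), 2);

% Worked example with h = 2 patterns and q = 3 output neurons.
D = [1 0 0;
     0 1 0];                 % one-hot desired outputs
O = [0.7 0.2 0.1;
     0.1 0.8 0.1];           % made-up network outputs

J_CE  = costCE(D, O);        % -(ln(0.7) + ln(0.8)) / 2, about 0.2899
J_MSE = costMSE(D, O);       % (0.14 + 0.06) / 4 = 0.05

% Softmax turns arbitrary output-layer sums into rows that add up to 1.
P = softmaxRows([2 1 0; 0 0 0]);
```

When these costs are used in backpropagation, the output-layer delta for one pattern is `o - d` for softmax with CE, and `(o - d) .* o .* (1 - o)` for sigmoid with MSE. The extra `o .* (1 - o)` factor shrinks the gradient when a sigmoid output saturates, which is one point worth raising in the comparison question. If an output can be exactly zero, `log` returns `-Inf`, so adding a small constant such as `eps` inside the logarithm is a common safeguard.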
