Question: Written in Java. Please help. Any information would be greatly appreciated. Even shelling of the code would be beneficial if you cannot understand the whole

Written in Java. Please help. Any information would be greatly appreciated. Even a shell of the code would be helpful if you cannot work through the whole thing. Thank you!

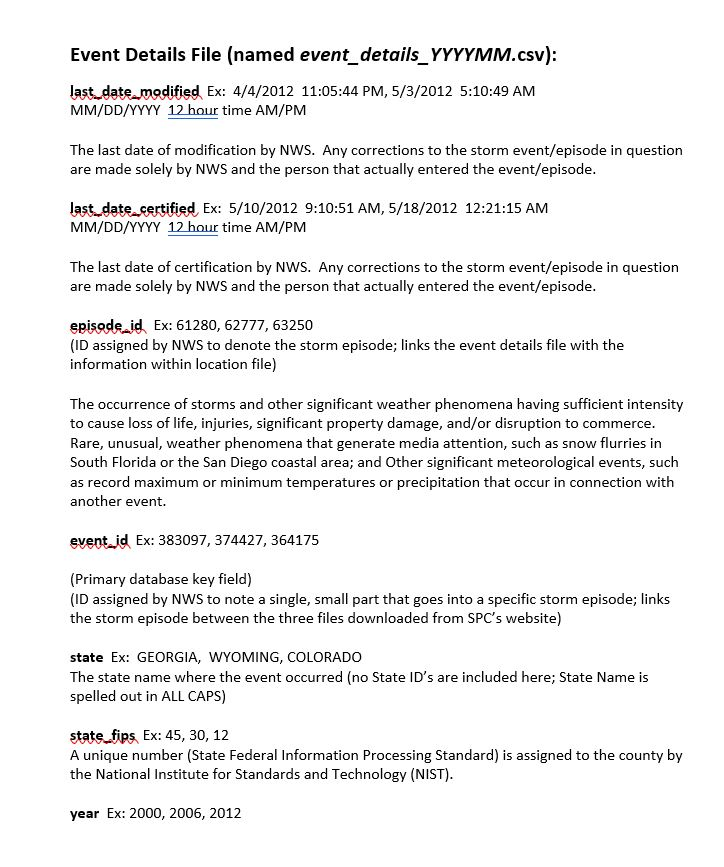

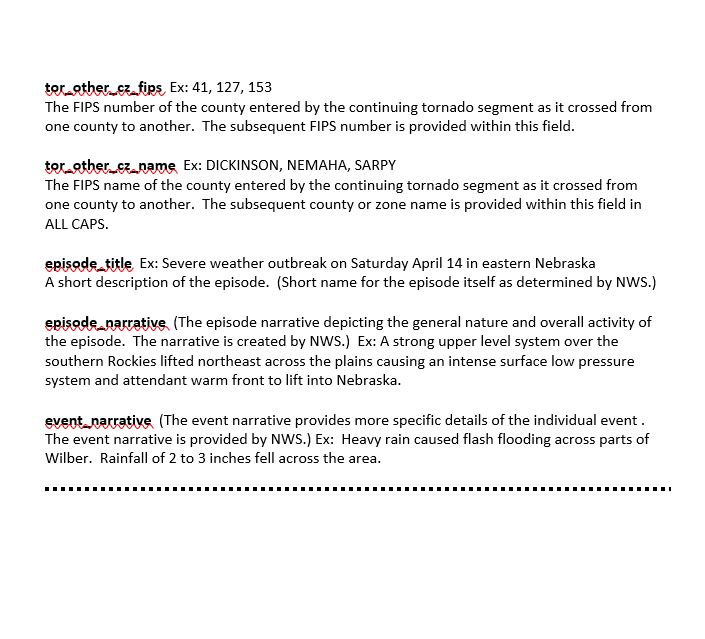

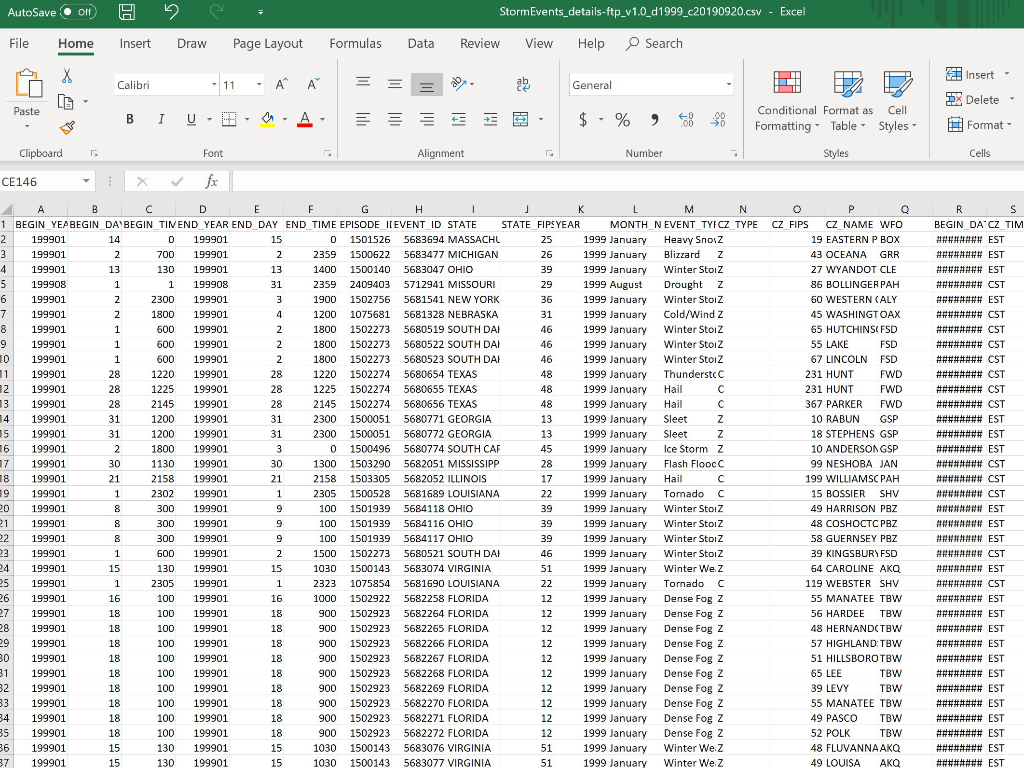

Example of what the Excel file looks like

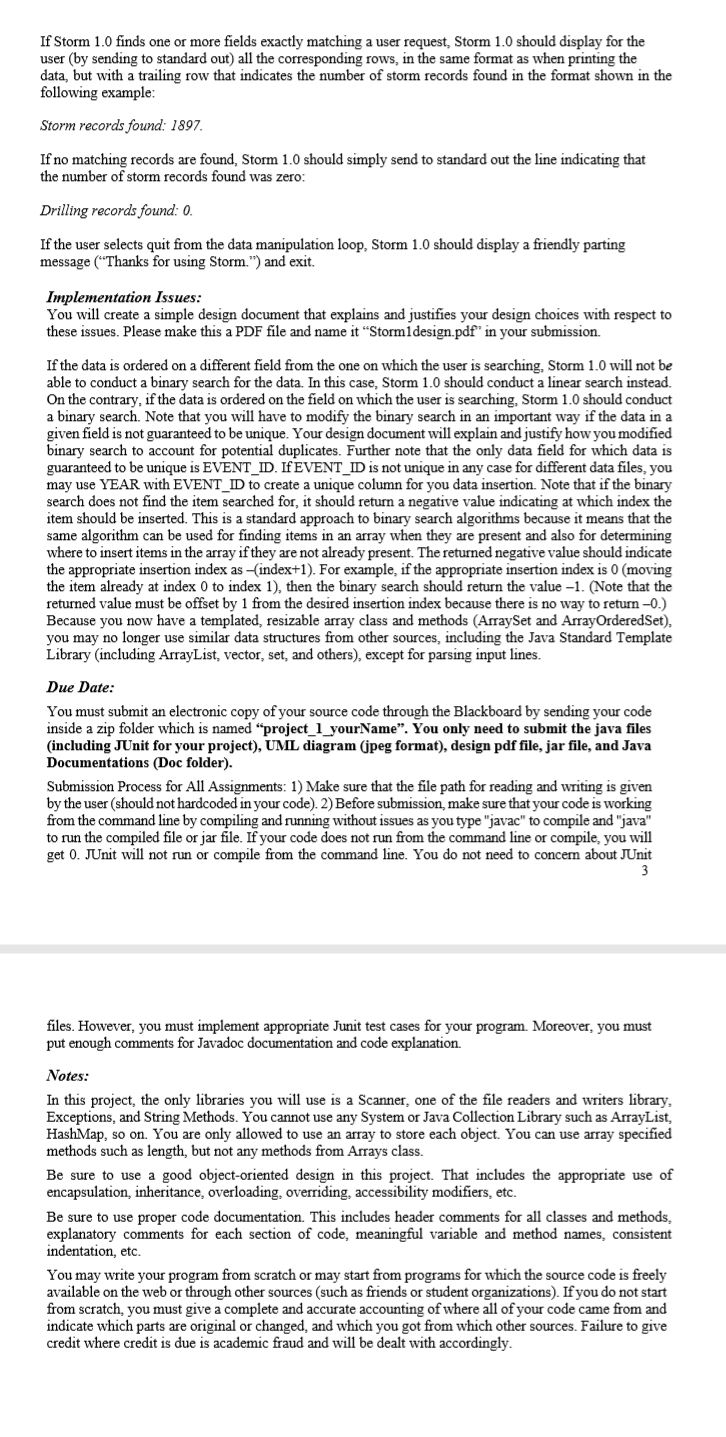

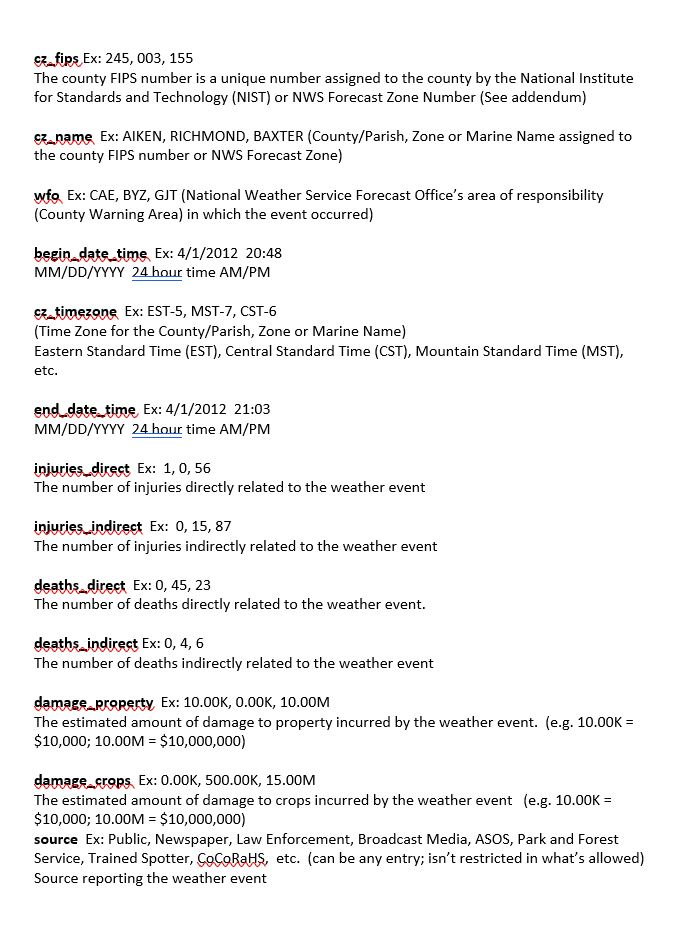

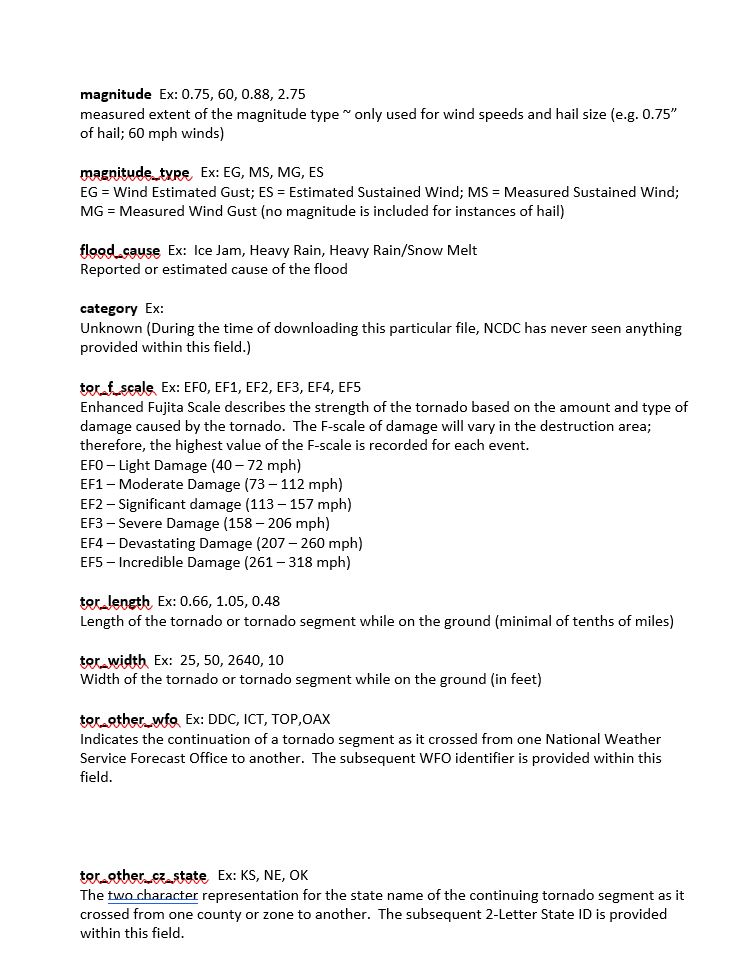

User Request: "Create a simple system to read, search, remove, and write storm data."

Objectives:

1. Use standard Java I/O for reading, writing, and user interaction, with appropriate exception handling for files. (15 points)
2. Store the data entries in order in a data structure that varies in size as elements are inserted and removed (an array with resize and shrink). (15 points)
3. Integrate appropriate exception handling in the resizable array and storm record classes. (5 points)
4. Use binary search to find the place to insert data entries into, and remove data entries from, an initially empty data structure. (Insertion should be ordered based on "EVENT_ID" column comparison. If EVENT_ID is not unique, you may combine YEAR with EVENT_ID to create a unique column for all data.) (15 points)
5. Efficiently search the data using a comparator based on the field specified by the user. (20 points)
6. JUnit test cases for your data operation methods. (10 points)
7. Develop and use an appropriate design. (10 points)
8. Use proper documentation and formatting. (10 points)

Description: For this project, you will put together several techniques and concepts you have learned in CS 210 (or from a similar background) and some new techniques to make Storm 1.0, an application that reads from multiple data files, stores the data, and outputs it. Storm 1.0 will read through simplified CSV files and store the data from each row entry in a structured way (e.g., the columns can be treated as Object values and stored as an array of objects). Storm 1.0 will take user input that allows users to specify the files in which data is stored and to search for entries based on various data fields.

Operational Issues: Storm 1.0 will read storm data files via Java file I/O. The name of the folder that contains the data files will be specified by the user using standard input.

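
Objective 2 asks for an array that resizes and shrinks as entries are inserted and removed. A minimal sketch of one way to do that is below. The class name `ResizableArraySketch`, the starting capacity of 4, and the double/halve policy are all assumptions made for illustration; the assignment does not prescribe them. Copies are done with plain loops because the notes forbid the `Arrays` class.

```java
/**
 * Hypothetical sketch of a resizable array. Names and growth policy are
 * illustrative assumptions, not taken from the assignment.
 */
class ResizableArraySketch<T> {
    private static final int MIN_CAPACITY = 4; // assumed starting capacity
    private Object[] items = new Object[MIN_CAPACITY];
    private int size = 0;

    int size() { return size; }

    int capacity() { return items.length; }

    @SuppressWarnings("unchecked")
    T get(int index) {
        checkIndex(index, size - 1);
        return (T) items[index];
    }

    /** Inserts at index, shifting later elements right; doubles capacity when full. */
    void insertAt(int index, T item) {
        checkIndex(index, size);
        if (size == items.length) {
            resize(items.length * 2);
        }
        for (int i = size; i > index; i--) {
            items[i] = items[i - 1];
        }
        items[index] = item;
        size++;
    }

    /** Removes at index, shifting later elements left; halves capacity at one quarter full. */
    T removeAt(int index) {
        T removed = get(index);
        for (int i = index; i < size - 1; i++) {
            items[i] = items[i + 1];
        }
        items[--size] = null;
        if (items.length > MIN_CAPACITY && size <= items.length / 4) {
            resize(items.length / 2);
        }
        return removed;
    }

    /** Copies the live elements into a new backing array of the given capacity. */
    private void resize(int newCapacity) {
        Object[] copy = new Object[newCapacity];
        for (int i = 0; i < size; i++) {
            copy[i] = items[i];
        }
        items = copy;
    }

    private void checkIndex(int index, int max) {
        if (index < 0 || index > max) {
            throw new IndexOutOfBoundsException("Index " + index + " is outside 0.." + max);
        }
    }
}
```

Shrinking only at one quarter full (rather than one half) avoids repeated resize/shrink cycles when inserts and removes alternate around a capacity boundary.
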
When Storm 1.0 starts, it will enter the data input loop, prompt the user for the name of a folder that contains data files ("Enter data folder name: "), and wait for the user to type a folder path and hit enter. If the user enters the name of an available data folder, Storm 1.0 will open all CSV data files using Java file I/O and read the data. If the user enters the name of a folder that is not accessible, Storm 1.0 will report the error to the user ("Folder is not available.") and continue in the loop, repeating the prompt and waiting again for a folder name. If the user hits enter without entering anything else, Storm 1.0 will exit the data input loop. If no data has been read in when Storm 1.0 exits the data input loop, Storm 1.0 will exit. Otherwise, Storm 1.0 will move on to a data manipulation loop (see below).

The data files will be organized as explained in the Word file. However, some of the column information may be null or empty for this project. Moreover, the storm data is spread across multiple files. When Storm 1.0 reads in each file, it must make sure the data in that file is internally consistent and alert the user to any errors encountered if the file cannot be read.

After reading and storing all storm data from all CSV files in the specified folder, Storm 1.0 will enter a data manipulation loop (as mentioned above). In this loop, Storm 1.0 will prompt the user with four options: 'o' for output, 'sum' for summary, 'f' for find, and 'q' for quit ("Enter (o)utput, (sum)mary, (f)ind, or (q)uit: ").

If the user selects output, Storm 1.0 will prompt the user for a filename ("Enter output file name: ") and read it in from the console. If the user hits enter without specifying a filename, Storm 1.0 will send output to standard out. Otherwise, it will attempt to open the specified file for writing.

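
The data input loop (the folder prompt described earlier in this section) could be sketched roughly as follows. This is only a shell: it counts data lines instead of building storm records, the class and method names are hypothetical, and it assumes the first line of every CSV file is a header row. It uses only `Scanner`, `java.io` readers, exceptions, and `String` methods, in line with the restrictions in the notes.

```java
import java.io.BufferedReader;
import java.io.File;
import java.io.FileReader;
import java.io.IOException;
import java.util.Scanner;

/** Hypothetical shell of the data input loop; names are illustrative. */
class DataInputLoopSketch {

    /** Returns the CSV files in the folder, or null if the folder is not accessible. */
    static File[] csvFilesIn(String folderName) {
        File folder = new File(folderName);
        if (!folder.isDirectory() || !folder.canRead()) {
            return null;
        }
        File[] all = folder.listFiles();
        if (all == null) {
            return null;
        }
        int count = 0;
        for (File f : all) {
            if (isCsv(f)) count++;
        }
        File[] csv = new File[count];
        int next = 0;
        for (File f : all) {
            if (isCsv(f)) csv[next++] = f;
        }
        return csv;
    }

    static boolean isCsv(File f) {
        return f.isFile() && f.getName().toLowerCase().endsWith(".csv");
    }

    /** Counts data lines in one file, skipping line 1 (assumed to be the header). */
    static int countDataLines(File file) throws IOException {
        int lines = 0;
        try (BufferedReader reader = new BufferedReader(new FileReader(file))) {
            reader.readLine(); // header row (assumption)
            while (reader.readLine() != null) {
                lines++;
            }
        }
        return lines;
    }

    public static void main(String[] args) {
        Scanner in = new Scanner(System.in);
        int totalLines = 0;
        while (true) {
            System.out.print("Enter data folder name: ");
            if (!in.hasNextLine()) break;      // end of input
            String name = in.nextLine().trim();
            if (name.isEmpty()) break;         // empty line leaves the loop
            File[] files = csvFilesIn(name);
            if (files == null) {
                System.out.println("Folder is not available.");
                continue;
            }
            for (File f : files) {
                try {
                    totalLines += countDataLines(f);
                } catch (IOException e) {
                    System.out.println("Could not read " + f.getName() + ": " + e.getMessage());
                }
            }
        }
        if (totalLines == 0) {
            return; // no data read, so exit without entering the manipulation loop
        }
        System.out.println("Data lines read: " + totalLines);
    }
}
```

In a full solution, `countDataLines` would be replaced by code that parses each row into a storm record and inserts it into the ordered array.
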
If the user enters the name of a file that is not accessible, Storm 1.0 will report the error ("File is not available.") and repeat the prompt for the file name. If the file is accessible or the user has specified standard out, Storm 1.0 will print out the storm data in whichever order it is presently ordered, followed by a row showing the internal tallies, for example:

Data lines read: 15847; Storm records in memory: 15846

Other than possible differences in the order of the rows, the data output format of Storm 1.0 will exactly match the format used by the input files.

If the user selects summary, Storm 1.0 will prompt the user for the data year on which to report a summary of the records ("Enter summary year: "). The user may select any numeric value from 0 to 9999 (inclusive), which corresponds to the year number. If the user enters an invalid value for the summary year, Storm 1.0 will return to the data manipulation loop. If the user enters a valid year, Storm 1.0 will print total INJURIES_DIRECT, total INJURIES_INDIRECT, total DEATHS_DIRECT, total DEATHS_INDIRECT, total DAMAGE_PROPERTY, and total DAMAGE_CROPS for that year as follows:

Year: 2020; total INJURIES_DIRECT: 15; total INJURIES_INDIRECT: 30; total DEATHS_DIRECT: 3; total DEATHS_INDIRECT: 6; total DAMAGE_PROPERTY: 200K; total DAMAGE_CROPS: 1000K.

If the user selects find from the data manipulation loop, Storm 1.0 will prompt the user for the data field on which to search ("Enter search field (0-10): "). 0-10 represents the first 10 column tags in 2019 data: BEGIN_YEARMONTH, BEGIN_DAY, BEGIN_TIME, END_YEARMONTH, END_DAY, END_TIME, EPISODE_ID, EVENT_ID, STATE, STATE_FIPS.
The user may select any numeric value from 0 to 10 (inclusive), and if the user selects any other value, Storm 1.0 will return to the data manipulation loop.

If the user chooses to find by any numeric column, Storm 1.0 will prompt the user for the numeric value on which to search ("Enter field value: "). If the user enters any text, Storm 1.0 will ensure that the value entered is valid (can be converted to a positive number) and, if it is, search for the given value. If the user entry is invalid, Storm 1.0 will return to the data manipulation loop.

If the user chooses to find by any other valid field, Storm 1.0 will prompt the user for the value on which to search ("Enter exact text on which to search: "). If the user enters any text and hits enter, Storm 1.0 will search for the given value but does not need to perform any validity check on the input data. If the user just hits enter without giving a search string, Storm 1.0 will return to the data manipulation loop.

If Storm 1.0 finds one or more fields exactly matching a user request, Storm 1.0 should display for the user (by sending to standard out) all the corresponding rows, in the same format as when printing the data, but with a trailing row that indicates the number of storm records found, in the format shown in the following example:

Storm records found: 1897

If no matching records are found, Storm 1.0 should simply send to standard out the line indicating that the number of storm records found was zero:

Storm records found: 0

If the user selects quit from the data manipulation loop, Storm 1.0 should display a friendly parting message ("Thanks for using Storm.") and exit.

Implementation Issues: You will create a simple design document that explains and justifies your design choices with respect to these issues. Please make this a PDF file and name it "Stormidesign.pdf" in your submission.

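
The input checks for the find option described above can be sketched with a couple of small helpers. The names are hypothetical. Two assumptions are made here that the assignment does not state outright: zero is accepted as a valid number (field 0 is a legal choice and BEGIN_TIME can be 0), and STATE (index 8) is treated as the only text column among the searchable fields.

```java
/** Hypothetical helpers for validating find input; names are illustrative. */
class FindInputSketch {
    // Assumption: STATE is the only text column among the searchable fields.
    static final int STATE_FIELD = 8;

    /** Parses a whole number >= 0; returns -1 if the text is not one. */
    static long parseNumber(String text) {
        try {
            long value = Long.parseLong(text.trim());
            return value >= 0 ? value : -1;
        } catch (NumberFormatException e) {
            return -1;
        }
    }

    /** Returns the chosen search field (0-10), or -1 for any other input. */
    static int parseSearchField(String text) {
        long value = parseNumber(text);
        return value <= 10 ? (int) value : -1;
    }

    /** Picks the follow-up prompt for the chosen field. */
    static String promptFor(int field) {
        return field == STATE_FIELD
                ? "Enter exact text on which to search: "
                : "Enter field value: ";
    }
}
```

A return value of -1 from either parser means "go back to the data manipulation loop", which keeps the loop body free of try/catch clutter.
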
If the data is ordered on a different field from the one on which the user is searching, Storm 1.0 will not be able to conduct a binary search for the data. In this case, Storm 1.0 should conduct a linear search instead. Conversely, if the data is ordered on the field on which the user is searching, Storm 1.0 should conduct a binary search. Note that you will have to modify the binary search in an important way if the data in a given field is not guaranteed to be unique. Your design document will explain and justify how you modified binary search to account for potential duplicates. Further note that the only data field for which data is guaranteed to be unique is EVENT_ID. If EVENT_ID is not unique across different data files, you may use YEAR with EVENT_ID to create a unique column for your data insertion.

Note that if the binary search does not find the item searched for, it should return a negative value indicating at which index the item should be inserted. This is a standard approach to binary search algorithms because it means that the same algorithm can be used for finding items in an array when they are present and also for determining where to insert items in the array if they are not already present. The returned negative value should indicate the appropriate insertion index as -(index + 1). For example, if the appropriate insertion index is 0 (moving the item already at index 0 to index 1), then the binary search should return the value -1. (Note that the returned value must be offset by 1 from the desired insertion index because there is no way to return -0.)

Because you now have a templated, resizable array class and methods (ArraySet and ArrayOrderedSet), you may no longer use similar data structures from other sources, including the Java Standard Template Library (including ArrayList, Vector, Set, and others), except for parsing input lines.

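
The binary search rules above (negative return for "not found", and handling of duplicates) can be sketched as below. This is one possible modification, not necessarily the one the instructor has in mind: it always lands on the first element of a run of equal values, so duplicates can then be collected by walking forward. The class and method names are hypothetical.

```java
import java.util.Comparator;

/** Hypothetical sketch of a duplicate-aware binary search over a partly filled array. */
class BinarySearchSketch {

    /**
     * Returns the index of the FIRST element equal to key in items[0..size),
     * or -(insertionIndex + 1) if no element matches.
     */
    static <T> int findFirst(T[] items, int size, T key, Comparator<? super T> order) {
        int low = 0;
        int high = size; // exclusive upper bound
        while (low < high) {
            int mid = low + (high - low) / 2;
            if (order.compare(items[mid], key) < 0) {
                low = mid + 1;   // everything up to mid is smaller than key
            } else {
                high = mid;      // mid may be the first match, keep it in range
            }
        }
        // low is now the first position whose element is >= key
        if (low < size && order.compare(items[low], key) == 0) {
            return low;
        }
        return -(low + 1);
    }

    /** Counts the run of elements equal to key, starting from the first match. */
    static <T> int countMatches(T[] items, int size, T key, Comparator<? super T> order) {
        int first = findFirst(items, size, key, order);
        if (first < 0) {
            return 0;
        }
        int end = first;
        while (end < size && order.compare(items[end], key) == 0) {
            end++;
        }
        return end - first;
    }
}
```

For insertion, a negative result `r` converts back to the insertion index with `-(r + 1)`. Passing a different `Comparator` per field lets the same method serve any column the data is currently ordered on.
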
Due Date: You must submit an electronic copy of your source code through Blackboard by sending your code inside a zip folder named "project_1_yourName". You only need to submit the Java files (including JUnit for your project), UML diagram (JPEG format), design PDF file, jar file, and Java documentation (Doc folder).

Submission Process for All Assignments:

1) Make sure that the file path for reading and writing is given by the user (it should not be hardcoded in your code).
2) Before submission, make sure that your code works from the command line, compiling and running without issues when you type "javac" to compile and "java" to run the compiled file or jar file. If your code does not compile or run from the command line, you will get 0. JUnit will not run or compile from the command line, so you do not need to worry about the JUnit files there. However, you must implement appropriate JUnit test cases for your program. Moreover, you must include enough comments for Javadoc documentation and code explanation.

Notes: In this project, the only libraries you will use are Scanner, one of the file reader and writer libraries, Exceptions, and String methods. You cannot use any System or Java Collection library such as ArrayList, HashMap, and so on. You are only allowed to use an array to store each object. You can use array-specific members such as length, but not any methods from the Arrays class.

Be sure to use a good object-oriented design in this project. That includes the appropriate use of encapsulation, inheritance, overloading, overriding, accessibility modifiers, etc.

Be sure to use proper code documentation. This includes header comments for all classes and methods, explanatory comments for each section of code, meaningful variable and method names, consistent indentation, etc.

You may write your program from scratch or may start from programs for which the source code is freely available on the web or through other sources (such as friends or student organizations).
If you do not start from scratch, you must give a complete and accurate accounting of where all of your code came from and indicate which parts are original or changed, and which you got from which other sources. Failure to give credit where credit is due is academic fraud and will be dealt with accordingly.

Event Details File (named event_details_YYYYMM.csv):

last_date_modified: Ex: 4/4/2012 11:05:44 PM, 5/3/2012 5:10:49 AM (MM/DD/YYYY 12 hour time AM/PM). The last date of modification by NWS. Any corrections to the storm event/episode in question are made solely by NWS and the person that actually entered the event/episode.

last_date_certified: Ex: 5/10/2012 9:10:51 AM, 5/18/2012 12:21:15 AM (MM/DD/YYYY 12 hour time AM/PM). The last date of certification by NWS. Any corrections to the storm event/episode in question are made solely by NWS and the person that actually entered the event/episode.

episode_id: Ex: 61280, 62777, 63250. ID assigned by NWS to denote the storm episode; links the event details file with the information within the location file. An episode covers: the occurrence of storms and other significant weather phenomena having sufficient intensity to cause loss of life, injuries, significant property damage, and/or disruption to commerce; rare, unusual weather phenomena that generate media attention, such as snow flurries in South Florida or the San Diego coastal area; and other significant meteorological events, such as record maximum or minimum temperatures or precipitation that occur in connection with another event.

event_id: Ex: 383097, 374427, 364175. Primary database key field. ID assigned by NWS to note a single, small part that goes into a specific storm episode; links the storm episode between the three files downloaded from SPC's website.

state: Ex: GEORGIA, WYOMING, COLORADO. The state name where the event occurred (no State IDs are included here; State Name is spelled out in ALL CAPS).

state_fips: Ex: 45, 30, 12. A unique number (State Federal Information Processing Standard) is assigned to the county by the National Institute for Standards and Technology (NIST).

year: Ex: 2000, 2006, 2012.

cz_fips: Ex: 245, 003, 155. The county FIPS number is a unique number assigned to the county by the National Institute for Standards and Technology (NIST) or NWS Forecast Zone Number (see addendum).

cz_name: Ex: AIKEN, RICHMOND, BAXTER. County/Parish, Zone or Marine Name assigned to the county FIPS number or NWS Forecast Zone.

wfo: Ex: CAE, BYZ, GJT. National Weather Service Forecast Office's area of responsibility (County Warning Area) in which the event occurred.

begin_date_time: Ex: 4/1/2012 20:48 (MM/DD/YYYY 24 hour time AM/PM).

cz_timezone: Ex: EST-5, MST-7, CST-6. Time Zone for the County/Parish, Zone or Marine Name: Eastern Standard Time (EST), Central Standard Time (CST), Mountain Standard Time (MST), etc.

end_date_time: Ex: 4/1/2012 21:03 (MM/DD/YYYY 24 hour time AM/PM).

injuries_direct: Ex: 1, 0, 56. The number of injuries directly related to the weather event.

injuries_indirect: Ex: 0, 15, 87. The number of injuries indirectly related to the weather event.

deaths_direct: Ex: 0, 45, 23. The number of deaths directly related to the weather event.

deaths_indirect: Ex: 0, 4, 6. The number of deaths indirectly related to the weather event.

damage_property: Ex: 10.00K, 0.00K, 10.00M. The estimated amount of damage to property incurred by the weather event (e.g. 10.00K = $10,000; 10.00M = $10,000,000).

damage_crops: Ex: 0.00K, 500.00K, 15.00M. The estimated amount of damage to crops incurred by the weather event (e.g. 10.00K = $10,000; 10.00M = $10,000,000).

source: Ex: Public, Newspaper, Law Enforcement, Broadcast Media, ASOS, Park and Forest Service, Trained Spotter, CoCoRaHS, etc. (can be any entry; isn't restricted in what's allowed). Source reporting the weather event.

magnitude: Ex: 0.75, 60, 0.88, 2.75. Measured extent of the magnitude type; only used for wind speeds and hail size (e.g. 0.75" of hail; 60 mph winds).

magnitude_type: Ex: EG, MS, MG, ES. EG = Wind Estimated Gust; ES = Estimated Sustained Wind; MS = Measured Sustained Wind; MG = Measured Wind Gust (no magnitude type is included for instances of hail).

flood_cause: Ex: Ice Jam, Heavy Rain, Heavy Rain/Snow Melt. Reported or estimated cause of the flood.

category: Ex: Unknown. (At the time of downloading this particular file, NCDC has never seen anything provided within this field.)

tor_f_scale: Ex: EF0, EF1, EF2, EF3, EF4, EF5. The Enhanced Fujita Scale describes the strength of the tornado based on the amount and type of damage caused by the tornado. The F-scale of damage will vary in the destruction area; therefore, the highest value of the F-scale is recorded for each event. EF0 - Light Damage (40 - 72 mph); EF1 - Moderate Damage (73 - 112 mph); EF2 - Significant Damage (113 - 157 mph); EF3 - Severe Damage (158 - 206 mph); EF4 - Devastating Damage (207 - 260 mph); EF5 - Incredible Damage (261 - 318 mph).

tor_length: Ex: 0.66, 1.05, 0.48. Length of the tornado or tornado segment while on the ground (minimum of tenths of miles).

tor_width: Ex: 25, 50, 2640, 10. Width of the tornado or tornado segment while on the ground (in feet).

tor_other_wfo: Ex: DDC, ICT, TOP, OAX. Indicates the continuation of a tornado segment as it crossed from one National Weather Service Forecast Office to another. The subsequent WFO identifier is provided within this field.

tor_other_cz_state: Ex: KS, NE, OK. The two-character representation for the state name of the continuing tornado segment as it crossed from one county or zone to another. The subsequent 2-letter State ID is provided within this field.

tor_other_cz_fips: Ex: 41, 127, 153. The FIPS number of the county entered by the continuing tornado segment as it crossed from one county to another. The subsequent FIPS number is provided within this field.

tor_other_cz_name: Ex: DICKINSON, NEMAHA, SARPY. The FIPS name of the county entered by the continuing tornado segment as it crossed from one county to another. The subsequent county or zone name is provided within this field in ALL CAPS.

episode_title: Ex: Severe weather outbreak on Saturday April 14 in eastern Nebraska. A short description of the episode (short name for the episode itself as determined by NWS).

episode_narrative: The episode narrative depicting the general nature and overall activity of the episode. The narrative is created by NWS. Ex: A strong upper level system over the southern Rockies lifted northeast across the plains causing an intense surface low pressure system and attendant warm front to lift into Nebraska.

event_narrative: The event narrative provides more specific details of the individual event. The event narrative is provided by NWS. Ex: Heavy rain caused flash flooding across parts of Wilber. Rainfall of 2 to 3 inches fell across the area.

[Screenshot: StormEvents_details-ftp_v1.0_d1999_c20190920.csv open in Excel. Visible column headers: BEGIN_YEARMONTH, BEGIN_DAY, BEGIN_TIME, END_YEARMONTH, END_DAY, END_TIME, EPISODE_ID, EVENT_ID, STATE, STATE_FIPS, YEAR, MONTH_NAME, EVENT_TYPE, CZ_TYPE, CZ_FIPS, CZ_NAME, WFO, BEGIN_DATE_TIME, CZ_TIMEZONE. Several cells are truncated or shown as ######## in the screenshot. Sample row: 199901, 2, 700, 199901, 2, 2359, 1500622, 5683477, MICHIGAN, 26, 1999, January, Blizzard, Z, 43, OCEANA, GRR.]
When Storm 1.0 starts, it will enter the data input loop and prompt the user for the name of a folder name that includes data files ("Enter data folder name: ") and waiting for the user to type a folder path and hit enter. If the user enters the name of an available data folder, Storm 1.0 will open all CSV data files using Java file I/O and read the data. If the user enters the name of a folder that is not accessible, Storm 1.0 will report the error to the user ("Folder is not available.") and continue in the loop, repeating the prompt and waiting again for a folder name. If the user hits enter without entering anything else, Storm 1.0 will exit the data input loop. If no data has been read in when Storm 1.0 exits the data input loop, Storm 1.0 will exit. Otherwise, Strom 1.0 will move on to a data manipulation loop (see below). The data files will be organized as they are explained in the word file. However, some of the column information may be null or empty for this project. Moreover, the storm data is spread across multiple files. When Storm reads in each file, it must make sure the data in that file is internally consistent and alert the user to any errors encountered if the file cannot be read. After reading and storing all storm data from all CSV files in the specified folder, Storm will enter a data manipulation loop (as mentioned above). In this loop, Storm 1.0 will prompt the user with four options: 'o' for output, 'sum' for summary, 'f for find, and 'q' for quit ("Enter (output, (summary, (f)ind, or (q)uit: "). If the user selects output, Storm 1.0 will prompt the user for a filename ("Enter output file name: ") and read it in from the console. If the user hits enter without specifying a filename, Storm 1.0 will send output to standard out. Otherwise, it will attempt to open the specified file for writing. 
If the user enters the name of a file that is not accessible, Storm 1.0 will report the error ("File is not available.") and repeat the prompt for the file name. If the file is accessible or the user has specified the standard out, Storm 1.0 will print out the storm data in whichever order it is presently ordered, followed by a row showing the internal tallies, for example: Data lines read: 15847; Storm records in memory: 15846 Other than possible differences in the order of the rows, the data output format of Storm 1.0 will exactly match the output format used by input files. If the user selects summary, Storm 1.0 will prompt the user for the data year on which to report a summary of the records ("Enter summary year: '). The user may select any numeric value from 0 to 9999 (inclusive), which corresponds to the year number. If the user enters an invalid value for the data field on for summary, Storm 1.0 will return to the data manipulation loop. If the user selects for the summary by any valid data field, Storm 1.0 will print total INJURIES_DIRECT, total INJURIES INDIRECT total DEATHS_DIRECT, total DEATHS_INDIRECT, total DAMAGE_PROPERTY, total DAMAGE CROPS for the given valid year as follows: Year: 2020; total INJURIES_DIRECT:15; total INJURIES_INDIRECT: 30; total DEATHS_DIRECT: 3; total DEATHS_INDIRECT: 6; total DAMAGE_PROPERTY: 200K; total DAMAGE CROPS: 1000K. If the user selects find from the data manipulation loop, Storm 1.0 will prompt the user for the data field on which to search ("Enter search field (0-10): "). 0-10 represents the first 10 column tags in 2019 data: BEGIN_YEARMONTH, BEGIN_DAY, BEGIN_TIME, END YEARMONTH, END_DAY, END TIME, EPISODE ID, EVENT ID, STATE, STATE FIPS. 
The user may select any numeric value from 0 to 10 (inclusive) and if the user selects any other value, Storm 1.0 will return to the data manipulation loop If the user chooses to find by any numeric column, Storm 1.0 will prompt the user for the numeric value on which to search ("Enter field value: "). If the user enters any text, Storm 1.0 will ensure that the value entered is valid (can be converted to a positive number) and, if it is, search for the given value. If the user entry is invalid, Storm will return to the data manipulation loop. If the user chooses to find by any other valid field, Storm 1.0 will prompt the user for the value on which to search ("Enter exact text on which to search: "). If the user enters any text, enter), Storm 1.0 will search for the given value but does not need to perform any validity check on the input data. If the user just hits enter without giving a search string, Storm 1.0 will return to the data manipulation loop. If Storm 1.0 finds one or more fields exactly matching a user request, Storm 1.0 should display for the user (by sending to standard out) all the corresponding rows, in the same format as when printing the data, but with a trailing row that indicates the number of storm records found in the format shown in the following example: Storm records found: 1897. If no matching records are found, Storm 1.0 should simply send to standard out the line indicating that the number of storm records found was zero: Drilling records found: 0 If the user selects quit from the data manipulation loop, Storm 1.0 should display a friendly parting message ("Thanks for using Storm.") and exit. Implementation Issues: You will create a simple design document that explains and justifies your design choices with respect to these issues. Please make this a PDF file and name it "Stormidesign.pdf" in your submission. 
If the data is ordered on a different field from the one on which the user is searching, Storm 1.0 will not be able to conduct a binary search for the data. In this case, Storm 1.0 should conduct a linear search instead. On the contrary, if the data is ordered on the field on which the user is searching, Storm 1.0 should conduct a binary search. Note that you will have to modify the binary search in an important way if the data in a given field is not guaranteed to be unique. Your design document will explain and justify how you modified binary search to account for potential duplicates. Further note that the only data field for which data is guaranteed to be unique is EVENT_ID. If EVENT_ID is not unique in any case for different data files, you may use YEAR with EVENT_ID to create a unique column for you data insertion. Note that if the binary search does not find the item searched for, it should return a negative value indicating at which index the item should be inserted. This is a standard approach to binary search algorithms because it means that the same algorithm can be used for finding items in an array when they are present and also for determining where to insert items in the array if they are not already present. The returned negative value should indicate the appropriate insertion index as-index+1). For example, if the appropriate insertion index is 0 (moving the item already at index 0 to index 1), then the binary search should return the value -1. (Note that the returned value must be offset by 1 from the desired insertion index because there is no way to return-0.) Because you now have a templated, resizable array class and methods (ArraySet and ArrayOrdered Set), you may no longer use similar data structures from other sources, including the Java Standard Template Library (including ArrayList, vector, set, and others), except for parsing input lines. 
Due Date: You must submit an electronic copy of your source code through the Blackboard by sending your code inside a zip folder which is named "project_1_yourName". You only need to submit the java files (including JUnit for your project), UML diagram (jpeg format), design pdf file, jar file, and Java Documentations (Doc folder). Submission Process for All Assignments: 1) Make sure that the file path for reading and writing is given by the user (should not hardcoded in your code). 2) Before submission, make sure that your code is working from the command line by compiling and running without issues as you type "javac" to compile and "java" to run the compiled file or jar file. If your code does not run from the command line or compile, you will get 0. JUnit will not run or compile from the command line. You do not need to concern about JUnit files. However, you must implement appropriate Junit test cases for your program. Moreover, you must put enough comments for Javadoc documentation and code explanation Notes: In this project, the only libraries you will use is a Scanner, one of the file readers and writers library, Exceptions, and String Methods. You cannot use any System or Java Collection Library such as ArrayList, HashMap, so on. You are only allowed to use an array to store each object. You can use array specified methods such as length, but not any methods from Arrays class. Be sure to use a good object-oriented design in this project. That includes the appropriate use of encapsulation, inheritance, overloading, overriding, accessibility modifiers, etc. Be sure to use proper code documentation. This includes header comments for all classes and methods, explanatory comments for each section of code, meaningful variable and method names, consistent indentation, etc. You may write your program from scratch or may start from programs for which the source code is freely available on the web or through other sources (such as friends or student organizations). 
If you do not start from scratch, you must give a complete and accurate accounting of where all of your code came from and indicate which parts are original or changed, and which you got from which other sources. Failure to give credit where credit is due is academic fraud and will be dealt with accordingly.

Event Details File (named event_details_YYYYMM.csv):

last_date_modified: Ex: 4/4/2012 11:05:44 PM, 5/3/2012 5:10:49 AM. MM/DD/YYYY 12 hour time AM/PM. The last date of modification by NWS. Any corrections to the storm event/episode in question are made solely by NWS and the person that actually entered the event/episode.

last_date_certified: Ex: 5/10/2012 9:10:51 AM, 5/18/2012 12:21:15 AM. MM/DD/YYYY 12 hour time AM/PM. The last date of certification by NWS. Any corrections to the storm event/episode in question are made solely by NWS and the person that actually entered the event/episode.

episode_id: Ex: 61280, 62777, 63250. (ID assigned by NWS to denote the storm episode; links the event details file with the information within the location file.) An episode is the occurrence of storms and other significant weather phenomena having sufficient intensity to cause loss of life, injuries, significant property damage, and/or disruption to commerce; rare, unusual weather phenomena that generate media attention, such as snow flurries in South Florida or the San Diego coastal area; and other significant meteorological events, such as record maximum or minimum temperatures or precipitation that occur in connection with another event.

event_id: Ex: 383097, 374427, 364175. (Primary database key field.) (ID assigned by NWS to note a single, small part that goes into a specific storm episode; links the storm episode between the three files downloaded from SPC's website.)

state: Ex: GEORGIA, WYOMING, COLORADO. The state name where the event occurred (no State IDs are included here; the state name is spelled out in ALL CAPS).

state_fips: Ex: 45, 30, 12. A unique number (State Federal Information Processing Standard) assigned to the state by the National Institute for Standards and Technology (NIST).

year: Ex: 2000, 2006, 2012.

cz_fips: Ex: 245, 003, 155. The county FIPS number is a unique number assigned to the county by the National Institute for Standards and Technology (NIST), or the NWS Forecast Zone Number (see addendum).

cz_name: Ex: AIKEN, RICHMOND, BAXTER. (County/Parish, Zone or Marine Name assigned to the county FIPS number or NWS Forecast Zone.)

wfo: Ex: CAE, BYZ, GJT. (National Weather Service Forecast Office's area of responsibility (County Warning Area) in which the event occurred.)

begin_date_time: Ex: 4/1/2012 20:48. MM/DD/YYYY 24 hour time AM/PM.

cz_timezone: Ex: EST-5, MST-7, CST-6. (Time Zone for the County/Parish, Zone or Marine Name.) Eastern Standard Time (EST), Central Standard Time (CST), Mountain Standard Time (MST), etc.

end_date_time: Ex: 4/1/2012 21:03. MM/DD/YYYY 24 hour time AM/PM.

injuries_direct: Ex: 1, 0, 56. The number of injuries directly related to the weather event.

injuries_indirect: Ex: 0, 15, 87. The number of injuries indirectly related to the weather event.

deaths_direct: Ex: 0, 45, 23. The number of deaths directly related to the weather event.

deaths_indirect: Ex: 0, 4, 6. The number of deaths indirectly related to the weather event.

damage_property: Ex: 10.00K, 0.00K, 10.00M. The estimated amount of damage to property incurred by the weather event (e.g. 10.00K = $10,000; 10.00M = $10,000,000).

damage_crops: Ex: 0.00K, 500.00K, 15.00M. The estimated amount of damage to crops incurred by the weather event (e.g. 10.00K = $10,000; 10.00M = $10,000,000).

source: Ex: Public, Newspaper, Law Enforcement, Broadcast Media, ASOS, Park and Forest Service, Trained Spotter, CoCoRaHS, etc. (can be any entry; isn't restricted in what's allowed). The source reporting the weather event.

magnitude: Ex: 0.75, 60, 0.88, 2.75. The measured extent of the magnitude type; only used for wind speeds and hail size (e.g. 0.75" of hail; 60 mph winds).

magnitude_type: Ex: EG, MS, MG, ES. EG = Wind Estimated Gust; ES = Estimated Sustained Wind; MS = Measured Sustained Wind; MG = Measured Wind Gust (no magnitude is included for instances of hail).

flood_cause: Ex: Ice Jam, Heavy Rain, Heavy Rain/Snow Melt. Reported or estimated cause of the flood.

category: Ex: Unknown. (During the time of downloading this particular file, NCDC has never seen anything provided within this field.)

tor_f_scale: Ex: EF0, EF1, EF2, EF3, EF4, EF5. The Enhanced Fujita Scale describes the strength of the tornado based on the amount and type of damage caused by the tornado. The F-scale of damage will vary in the destruction area; therefore, the highest value of the F-scale is recorded for each event. EF0 = Light Damage (40-72 mph); EF1 = Moderate Damage (73-112 mph); EF2 = Significant Damage (113-157 mph); EF3 = Severe Damage (158-206 mph); EF4 = Devastating Damage (207-260 mph); EF5 = Incredible Damage (261-318 mph).

tor_length: Ex: 0.66, 1.05, 0.48. Length of the tornado or tornado segment while on the ground (minimal of tenths of miles).

tor_width: Ex: 25, 50, 2640, 10. Width of the tornado or tornado segment while on the ground (in feet).

tor_other_wfo: Ex: DDC, ICT, TOP, OAX. Indicates the continuation of a tornado segment as it crossed from one National Weather Service Forecast Office to another. The subsequent WFO identifier is provided within this field.
tor_other_cz_state: Ex: KS, NE, OK. The two-character representation for the state name of the continuing tornado segment as it crossed from one county or zone to another. The subsequent 2-letter State ID is provided within this field.

tor_other_cz_fips: Ex: 41, 127, 153. The FIPS number of the county entered by the continuing tornado segment as it crossed from one county to another. The subsequent FIPS number is provided within this field.

tor_other_cz_name: Ex: DICKINSON, NEMAHA, SARPY. The FIPS name of the county entered by the continuing tornado segment as it crossed from one county to another. The subsequent county or zone name is provided within this field in ALL CAPS.

episode_title: Ex: Severe weather outbreak on Saturday April 14 in eastern Nebraska. A short description of the episode. (Short name for the episode itself as determined by NWS.)

episode_narrative: (The episode narrative depicting the general nature and overall activity of the episode. The narrative is created by NWS.) Ex: A strong upper level system over the southern Rockies lifted northeast across the plains causing an intense surface low pressure system and attendant warm front to lift into Nebraska.

event_narrative: (The event narrative provides more specific details of the individual event. The event narrative is provided by NWS.) Ex: Heavy rain caused flash flooding across parts of Wilber. Rainfall of 2 to 3 inches fell across the area.

[Screenshot: StormEvents_details-ftp_v1.0_d1999_c20190920.csv opened in Excel. The column headers are truncated in the screenshot; they appear to correspond to BEGIN_YEARMONTH, BEGIN_DAY, BEGIN_TIME, END_YEARMONTH, END_DAY, END_TIME, EPISODE_ID, EVENT_ID, STATE, STATE_FIPS, YEAR, MONTH_NAME, EVENT_TYPE, CZ_TYPE, CZ_FIPS, CZ_NAME, WFO, BEGIN_DATE_TIME, CZ_TIMEZONE. One fully legible example row: 199901, 2, 700, 199901, 2, 2359, 1500622, 5683477, MICHIGAN, 26, 1999, January, Blizzard, Z, 43, OCEANA, GRR.]
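As a starting point for turning one CSV row into usable values, here is a minimal sketch that uses only String methods and plain arrays, in keeping with the library restrictions. It makes two assumptions that the assignment text does not state: that narrative fields containing commas are wrapped in double quotes, and that a blank damage value should count as 0. The class name `StormFields` and its method names are made up for illustration.

```java
/** Hypothetical parsing helpers; names and blank-value handling are assumptions. */
final class StormFields {

    /** Converts values such as "10.00K" or "10.00M" to dollars (10.00K = 10,000). */
    static double parseDamage(String text) {
        if (text == null) {
            return 0.0; // assumption: missing value counts as no damage
        }
        String value = text.trim().toUpperCase();
        if (value.isEmpty()) {
            return 0.0; // assumption: blank value counts as no damage
        }
        double multiplier = 1.0;
        char suffix = value.charAt(value.length() - 1);
        if (suffix == 'K') {
            multiplier = 1_000.0;
        } else if (suffix == 'M') {
            multiplier = 1_000_000.0;
        }
        if (multiplier != 1.0) {
            value = value.substring(0, value.length() - 1); // drop the suffix
        }
        try {
            return Double.parseDouble(value) * multiplier;
        } catch (NumberFormatException e) {
            throw new IllegalArgumentException("Unrecognized damage value: " + text, e);
        }
    }

    /** Splits one CSV line on commas, ignoring commas inside double quotes. */
    static String[] splitCsvLine(String line) {
        // First pass: count the fields so a plain array of the right size can be used.
        int fieldCount = 1;
        boolean inQuotes = false;
        for (int i = 0; i < line.length(); i++) {
            char c = line.charAt(i);
            if (c == '"') {
                inQuotes = !inQuotes;
            } else if (c == ',' && !inQuotes) {
                fieldCount++;
            }
        }
        // Second pass: copy the characters of each field.
        String[] fields = new String[fieldCount];
        StringBuilder current = new StringBuilder();
        int index = 0;
        inQuotes = false;
        for (int i = 0; i < line.length(); i++) {
            char c = line.charAt(i);
            if (c == '"') {
                if (inQuotes && i + 1 < line.length() && line.charAt(i + 1) == '"') {
                    current.append('"'); // doubled quote inside a quoted field
                    i++;
                } else {
                    inQuotes = !inQuotes;
                }
            } else if (c == ',' && !inQuotes) {
                fields[index++] = current.toString();
                current.setLength(0);
            } else {
                current.append(c);
            }
        }
        fields[index] = current.toString();
        return fields;
    }

    public static void main(String[] args) {
        // Hypothetical row: the ID and state come from the sample data, the rest is made up.
        String row = "5683477,MICHIGAN,1999,Blizzard,10.00K,\"Heavy snow, strong winds\"";
        String[] fields = splitCsvLine(row);
        System.out.println(fields.length);          // 6
        System.out.println(parseDamage(fields[4])); // 10000.0
    }
}
```

Counting the fields in a first pass avoids needing ArrayList; a storm record class could then convert `fields` into typed values, throwing an exception when a required column such as EVENT_ID is missing or not numeric.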
