
1. In the recursive construction of decision trees, it sometimes happens that a mixed set of positive and negative examples remains at a leaf node, even after all the attributes have been used. Suppose that we have $p$ positive examples and $n$ negative examples.

a. Show that the solution used by DECISION-TREE-LEARNING, which picks the majority classification, minimizes the absolute error over the set of examples at the leaf.
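A minimal numerical sketch of part (a), assuming positives are scored as 1, negatives as 0, and the leaf predicts one constant value $v \in [0, 1]$ for every example. The total absolute error is then $p(1 - v) + nv = p - (p - n)v$, which is linear in $v$, so it is minimized at $v = 1$ when $p > n$ and at $v = 0$ when $n > p$, i.e. at the majority class. The helper names `absolute_error` and `best_constant` are illustrative, and the grid search only checks the claim numerically; it is not a proof.

```python
def absolute_error(v: float, p: int, n: int) -> float:
    """Total absolute error when every example at the leaf is predicted as v.

    Positive examples have target 1, negative examples have target 0.
    """
    return p * abs(1 - v) + n * abs(0 - v)


def best_constant(p: int, n: int, steps: int = 100) -> float:
    """Grid-search v in [0, 1] for the prediction with the smallest absolute error."""
    grid = [i / steps for i in range(steps + 1)]
    return min(grid, key=lambda v: absolute_error(v, p, n))


for p, n in [(7, 3), (2, 5), (1, 4)]:
    majority = 1 if p > n else 0
    print(p, n, best_constant(p, n), majority)
```

When $p = n$ every $v$ gives the same error $p$, so either class is optimal and the tie-breaking rule does not affect the absolute error.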

b. Show that the class probability $p/(p + n)$ minimizes the sum of squared errors.
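A similar sketch for part (b), under the same 1/0 encoding. The sum of squared errors is $S(v) = p(1 - v)^2 + nv^2$; setting $S'(v) = -2p(1 - v) + 2nv = 0$ gives $v = p/(p + n)$, and $S''(v) = 2(p + n) > 0$ confirms a minimum, whose value is $pn/(p + n)$. The code uses exact `fractions.Fraction` arithmetic to confirm that no grid point in $[0, 1]$ beats $p/(p + n)$; the function names are hypothetical.

```python
from fractions import Fraction


def squared_error(v: Fraction, p: int, n: int) -> Fraction:
    """Sum of squared errors when every example at the leaf is predicted as v."""
    return p * (1 - v) ** 2 + n * (0 - v) ** 2


def check_class_probability(p: int, n: int, steps: int = 200) -> bool:
    """True if p/(p+n) has no larger squared error than any grid point in [0, 1]."""
    v_star = Fraction(p, p + n)
    best = squared_error(v_star, p, n)
    return all(
        best <= squared_error(Fraction(i, steps), p, n) for i in range(steps + 1)
    )


for p, n in [(7, 3), (2, 5), (1, 4)]:
    print(p, n, Fraction(p, p + n), check_class_probability(p, n))
```

Exact fractions avoid any doubt that a floating-point rounding error is hiding a better grid point.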

5. Suppose that an attribute splits the set of examples $E$ into subsets $E_k$ and that each subset has $p_k$ positive examples and $n_k$ negative examples. Show that the attribute has strictly positive information gain unless the ratio $p_k/(p_k + n_k)$ is the same for all $k$.
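One way to see the result: let $B(q) = -(q \log_2 q + (1 - q)\log_2(1 - q))$. The remainder $\sum_k \frac{p_k + n_k}{p + n} B\left(\frac{p_k}{p_k + n_k}\right)$ is a weighted average of $B$ at the subset ratios, and the same weights average those ratios to exactly $\sum_k p_k/(p + n) = p/(p + n)$. Because $B$ is strictly concave, Jensen's inequality gives a remainder of at most $B(p/(p + n))$, with equality only when every ratio is equal. The sketch below (hypothetical helpers `B` and `gain`; empty subsets are skipped) only evaluates the gain numerically for two example splits, illustrating the claim rather than proving it.

```python
import math


def B(q: float) -> float:
    """Entropy (in bits) of a Boolean variable that is true with probability q."""
    if q in (0.0, 1.0):
        return 0.0
    return -(q * math.log2(q) + (1 - q) * math.log2(1 - q))


def gain(splits: list[tuple[int, int]]) -> float:
    """Information gain of an attribute whose values split the examples into
    subsets with (p_k, n_k) positive/negative counts."""
    p = sum(pk for pk, _ in splits)
    n = sum(nk for _, nk in splits)
    remainder = sum(
        (pk + nk) / (p + n) * B(pk / (pk + nk)) for pk, nk in splits if pk + nk > 0
    )
    return B(p / (p + n)) - remainder


print(gain([(2, 1), (4, 2), (6, 3)]))  # every subset has ratio 2/3: zero up to rounding
print(gain([(2, 2), (4, 1)]))          # ratios differ: strictly positive
```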

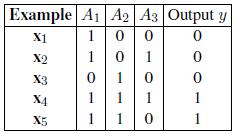

6. Consider the following data set comprising three binary input attributes ($A_1$, $A_2$, and $A_3$) and one binary output, $y$:

| Example | $A_1$ | $A_2$ | $A_3$ | Output $y$ |
|---|---|---|---|---|
| $x_1$ | 1 | 0 | 0 | 0 |
| $x_2$ | 1 | 0 | 1 | 0 |
| $x_3$ | 0 | 1 | 0 | 0 |
| $x_4$ | 1 | 1 | 1 | 1 |
| $x_5$ | 1 | 1 | 0 | 1 |

Use the algorithm in Figure 5 to learn a decision tree for these data. Show the computations made to determine the attribute to split at each node.
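A minimal sketch of the computation for this data set, assuming a simplified ID3-style learner that mirrors the structure of DECISION-TREE-LEARNING (plurality value for empty subsets or exhausted attributes, otherwise split on the attribute with the largest information gain). It is not the textbook's pseudocode verbatim, and `learn_tree`, `info_gain`, and `entropy` are hypothetical names. It prints the gains considered at each node.

```python
import math
from collections import Counter

# Data set from the exercise: (A1, A2, A3) -> y.
EXAMPLES = [
    ({"A1": 1, "A2": 0, "A3": 0}, 0),  # x1
    ({"A1": 1, "A2": 0, "A3": 1}, 0),  # x2
    ({"A1": 0, "A2": 1, "A3": 0}, 0),  # x3
    ({"A1": 1, "A2": 1, "A3": 1}, 1),  # x4
    ({"A1": 1, "A2": 1, "A3": 0}, 1),  # x5
]


def entropy(labels):
    """Entropy in bits of a non-empty list of class labels."""
    total = len(labels)
    return -sum(c / total * math.log2(c / total) for c in Counter(labels).values())


def info_gain(examples, attr):
    """Entropy of the parent minus the weighted entropy of the children."""
    labels = [y for _, y in examples]
    remainder = 0.0
    for value in {x[attr] for x, _ in examples}:
        subset = [y for x, y in examples if x[attr] == value]
        remainder += len(subset) / len(examples) * entropy(subset)
    return entropy(labels) - remainder


def plurality(examples):
    """Most common label among the examples."""
    return Counter(y for _, y in examples).most_common(1)[0][0]


def learn_tree(examples, attributes, parent_examples, depth=0):
    """Simplified ID3-style learner in the spirit of DECISION-TREE-LEARNING."""
    if not examples:
        return plurality(parent_examples)
    labels = [y for _, y in examples]
    if len(set(labels)) == 1:
        return labels[0]
    if not attributes:
        return plurality(examples)
    gains = {a: info_gain(examples, a) for a in attributes}
    print("  " * depth + ", ".join(f"Gain({a}) = {g:.3f}" for a, g in gains.items()))
    best = max(attributes, key=lambda a: gains[a])
    rest = [a for a in attributes if a != best]
    tree = {best: {}}
    for value in (0, 1):  # every attribute is binary
        subset = [(x, y) for x, y in examples if x[best] == value]
        tree[best][value] = learn_tree(subset, rest, examples, depth + 1)
    return tree


tree = learn_tree(EXAMPLES, ["A1", "A2", "A3"], EXAMPLES)
print(tree)
```

At the root the gains are about 0.171 for $A_1$, 0.420 for $A_2$, and 0.020 for $A_3$, so the tree splits on $A_2$. The $A_2 = 0$ branch ($x_1$, $x_2$) is all negative. In the $A_2 = 1$ branch ($x_3$, $x_4$, $x_5$), $A_1$ has gain about 0.918 against 0.252 for $A_3$, and splitting on it yields pure leaves, so the learned tree is equivalent to $y = A_1 \wedge A_2$ and never tests $A_3$.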
