Question


1. TRAINING THE NAÏVE BAYES CLASSIFIER FOR MOVIE REVIEW CLASSIFICATION

i) Implement in Python a Naïve Bayes classifier with bag-of-words features and add-1 smoothing. Note: smoothing should be used for the context features (the bag-of-words features) only. Do not use smoothing for the prior parameters.
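Written out, the two estimates this part asks for are an unsmoothed relative-frequency prior and an add-1 (Laplace) smoothed likelihood. Here $N_c$ is the number of training documents labeled $c$, $N_{doc}$ the total number of training documents, $\mathrm{count}(w,c)$ the number of times word $w$ occurs in class-$c$ documents, and $V$ the training vocabulary (this notation is assumed, following the standard textbook formulation):

$$P(c) = \frac{N_c}{N_{doc}}$$

$$P(w \mid c) = \frac{\mathrm{count}(w,c) + 1}{\sum_{w' \in V} \big(\mathrm{count}(w',c) + 1\big)} = \frac{\mathrm{count}(w,c) + 1}{\Big(\sum_{w' \in V} \mathrm{count}(w',c)\Big) + |V|}$$

Because the +1 appears only in the likelihood, a word never seen with class $c$ still gets a small nonzero probability, while the prior stays a plain ratio.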

ii) Use the following small corpus of movie reviews to train your classifier. Save the parameters of your model in a file called movie-review.NB.

a) fun, couple, love, love - comedy (label)

b) fast, furious, shoot - action (label)

c) couple, fly, fast, fun, fun - comedy (label)

d) furious, shoot, shoot, fun - action (label)

e) fly, fast, shoot, love - action (label)
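A minimal sketch of parts i) and ii), assuming the scan's "y" is "fly" (the only vocabulary word it can be) and assuming JSON as the on-disk format for `movie-review.NB`, since the assignment names the file but not its layout. The names `CORPUS` and `train` are illustrative, not prescribed. The prior is a plain ratio; only the word likelihoods get the +1:

```python
import json
from collections import Counter

# Corpus from part ii); "fly" is assumed where the scanned text shows "y".
CORPUS = [
    (["fun", "couple", "love", "love"], "comedy"),
    (["fast", "furious", "shoot"], "action"),
    (["couple", "fly", "fast", "fun", "fun"], "comedy"),
    (["furious", "shoot", "shoot", "fun"], "action"),
    (["fly", "fast", "shoot", "love"], "action"),
]


def train(corpus):
    """Return unsmoothed priors, add-1 smoothed word likelihoods, and the vocabulary."""
    vocab = sorted({w for words, _ in corpus for w in words})
    classes = sorted({label for _, label in corpus})
    prior, likelihood = {}, {}
    for c in classes:
        docs = [words for words, label in corpus if label == c]
        prior[c] = len(docs) / len(corpus)  # plain ratio: no smoothing on the prior
        counts = Counter(w for words in docs for w in words)
        denom = sum(counts.values()) + len(vocab)  # tokens in class c plus |V|
        likelihood[c] = {w: (counts[w] + 1) / denom for w in vocab}  # add-1 smoothing
    return prior, likelihood, vocab


prior, likelihood, vocab = train(CORPUS)

# Assumed file format: JSON (the assignment names movie-review.NB but not its layout).
with open("movie-review.NB", "w", encoding="utf-8") as f:
    json.dump({"prior": prior, "likelihood": likelihood, "vocab": vocab}, f, indent=2)

print(prior)
```

Running it writes `movie-review.NB` and prints `{'action': 0.6, 'comedy': 0.4}`; for example, the stored $P(\text{fun} \mid \text{comedy})$ is $(3+1)/(9+7) = 0.25$.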

iii) Test your classifier on the new document below: {fast, couple, shoot, fly}. Compute the most likely class and report the probability for each class.

Bag of words (vocabulary): {fly, fast, shoot, fun, furious, couple, love}
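Worked by hand from the training corpus (again reading "y" as "fly"): class comedy has 9 word tokens and class action has 11, and $|V| = 7$, so the add-1 denominators are $9 + 7 = 16$ and $11 + 7 = 18$. The unnormalized scores $P(c)\prod_{w \in d} P(w \mid c)$ for $d = \{\text{fast}, \text{couple}, \text{shoot}, \text{fly}\}$ come out as follows; this is a hand calculation worth re-checking against your own code:

$$P(\text{comedy}) \prod_{w \in d} P(w \mid \text{comedy}) = \frac{2}{5} \cdot \frac{2}{16} \cdot \frac{3}{16} \cdot \frac{1}{16} \cdot \frac{2}{16} = \frac{3}{40960} \approx 7.32 \times 10^{-5}$$

$$P(\text{action}) \prod_{w \in d} P(w \mid \text{action}) = \frac{3}{5} \cdot \frac{3}{18} \cdot \frac{1}{18} \cdot \frac{5}{18} \cdot \frac{2}{18} = \frac{1}{5832} \approx 1.71 \times 10^{-4}$$

$$P(\text{action} \mid d) = \frac{1/5832}{1/5832 + 3/40960} = \frac{5120}{7307} \approx 0.70, \qquad P(\text{comedy} \mid d) = \frac{2187}{7307} \approx 0.30$$

So action is the most likely class. The factors are listed in the order fast, couple, shoot, fly; for example, couple occurs twice in comedy documents, giving $(2+1)/16$, and never in action documents, giving $(0+1)/18$.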

Please use the following concept to implement it in Python.

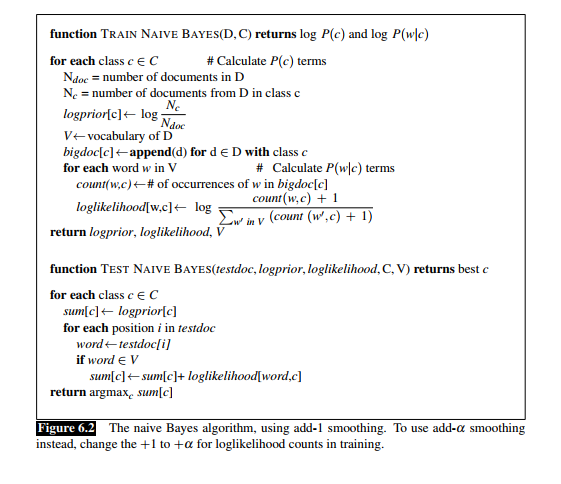

Naive Bayes is a probabilistic classifier, meaning that for a document $d$, out of all classes $c \in C$, the classifier returns the class $\hat{c}$ that has the maximum posterior probability given the document: $\hat{c} = \arg\max_{c \in C} P(c \mid d)$.
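Spelled out, the rule follows from Bayes' rule: $P(d)$ is the same for every class and can be dropped, and the naive Bayes (conditional independence) assumption factors $P(d \mid c)$ over the words $w_1, \dots, w_n$ of $d$. Taking logs, as the pseudocode does to avoid floating-point underflow, turns the product into the sum it accumulates:

$$\hat{c} = \arg\max_{c \in C} P(c \mid d) = \arg\max_{c \in C} \frac{P(d \mid c)\,P(c)}{P(d)} = \arg\max_{c \in C} P(c) \prod_{i=1}^{n} P(w_i \mid c)$$

$$\hat{c} = \arg\max_{c \in C} \Big[ \log P(c) + \sum_{i=1}^{n} \log P(w_i \mid c) \Big]$$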

    function TRAIN NAIVE BAYES(D, C) returns log P(c) and log P(w|c)
      for each class c ∈ C                          # Calculate P(c) terms
        N_doc ← number of documents in D
        N_c ← number of documents from D in class c
        logprior[c] ← log(N_c / N_doc)
        V ← vocabulary of D
        bigdoc[c] ← append(d) for d ∈ D with class c
        for each word w in V                        # Calculate P(w|c) terms
          count(w,c) ← # of occurrences of w in bigdoc[c]
          loglikelihood[w,c] ← log( (count(w,c) + 1) / Σ_{w' in V} (count(w',c) + 1) )
      return logprior, loglikelihood, V

    function TEST NAIVE BAYES(testdoc, logprior, loglikelihood, C, V) returns best c
      for each class c ∈ C
        sum[c] ← logprior[c]
        for each position i in testdoc
          word ← testdoc[i]
          if word ∈ V
            sum[c] ← sum[c] + loglikelihood[word,c]
      return argmax_c sum[c]

Figure 6.2 The naive Bayes algorithm, using add-1 smoothing. To use add-α smoothing instead, change the +1 to +α for loglikelihood counts in training.
