Question 2.1 (1.0 point possible, graded, results hidden)


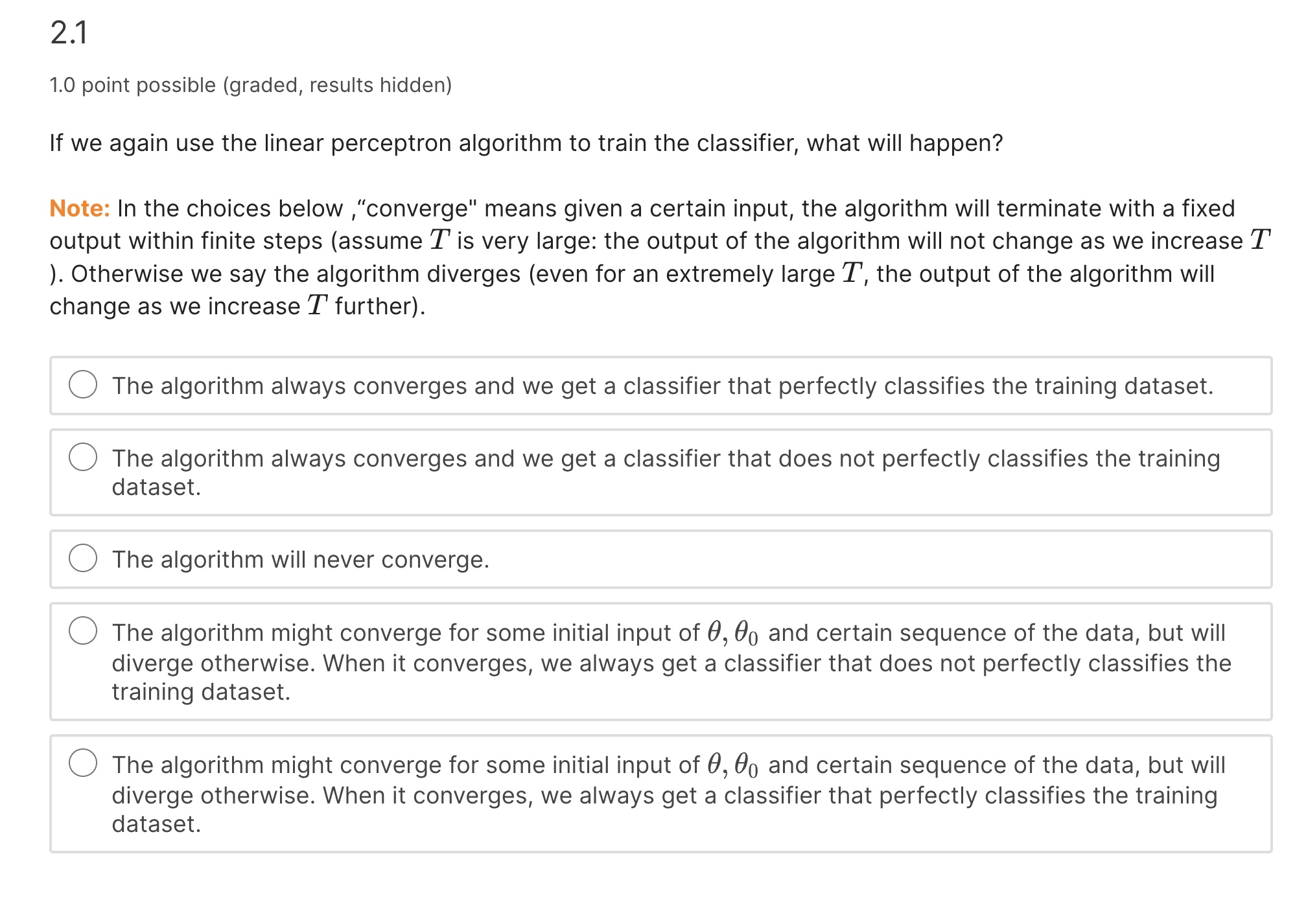

If we again use the linear perceptron algorithm to train the classifier, what will happen?

Note: In the choices below, "converge" means that, given a certain input, the algorithm terminates with a fixed output within a finite number of steps. Assume the number of iterations is very large: the output of the algorithm will not change as we increase it further. Otherwise, we say the algorithm diverges: even for an extremely large number of iterations, the output of the algorithm will still change as we increase it further.
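To make the definition of "converge" concrete, here is a minimal pure-Python sketch (not the course's reference code) of a linear perceptron with offset that runs for at most `T` passes. It assumes the usual mistake rule, where a point triggers an update when `y * (theta . x + theta_0) <= 0`. Once a full pass makes no updates, every later pass is identical, so increasing `T` no longer changes the output. The names `perceptron`, `T`, `theta`, and `theta_0` and the toy data are hypothetical choices for illustration.

```python
from typing import List, Optional, Sequence, Tuple


def perceptron(
    xs: Sequence[Sequence[float]],
    ys: Sequence[int],
    T: int,
    theta: Optional[Sequence[float]] = None,
    theta_0: float = 0.0,
) -> Tuple[List[float], float, bool]:
    """Linear perceptron with offset, run for at most T passes over the data.

    Returns (theta, theta_0, converged). converged is True when a full pass
    makes no updates; every later pass would then be identical, so the output
    no longer changes as T grows.
    """
    theta = list(theta) if theta is not None else [0.0] * len(xs[0])
    for _ in range(T):
        mistakes = 0
        for x, y in zip(xs, ys):
            # Assumed mistake rule: y * (theta . x + theta_0) <= 0 triggers an update.
            if y * (sum(t * xi for t, xi in zip(theta, x)) + theta_0) <= 0:
                theta = [t + y * xi for t, xi in zip(theta, x)]
                theta_0 += y
                mistakes += 1
        if mistakes == 0:
            # Mistake-free pass: the output is fixed from here on.
            return theta, theta_0, True
    return theta, theta_0, False


# Hypothetical separable toy data: +1 points upper right, -1 points lower left.
theta, theta_0, converged = perceptron([[1, 1], [2, 2], [-1, -1], [-2, -1]], [1, 1, -1, -1], T=10)
print(theta, theta_0, converged)  # [1.0, 1.0] 1.0 True
```

Here the first pass makes one update and the second pass makes none, so the run stops well before `T=10`.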

- The algorithm always converges, and we get a classifier that perfectly classifies the training dataset.

- The algorithm always converges, and we get a classifier that does not perfectly classify the training dataset.

- The algorithm will never converge.

- The algorithm might converge for some initial values of the parameters and certain orderings of the data, but will diverge otherwise. When it converges, we always get a classifier that does not perfectly classify the training dataset.

- The algorithm might converge for some initial values of the parameters and certain orderings of the data, but will diverge otherwise. When it converges, we always get a classifier that perfectly classifies the training dataset.
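Several choices hinge on whether the starting parameters and the order of the data matter. The experiment below is a sketch on two hypothetical datasets, since the figure's data is not reproduced in this text. One dataset is linearly separable, and the other is an XOR-style set that no line can separate. For each seed, it shuffles the points, draws random integer starting parameters, and checks whether a mistake-free pass ever occurs within `T` passes. All function and variable names are illustrative. Integer parameters are an assumption made so every dot product is exact.

```python
import random
from typing import List, Sequence, Tuple

Point = Sequence[int]


def perceptron_pass(
    xs: Sequence[Point], ys: Sequence[int], theta: List[int], theta_0: int
) -> Tuple[List[int], int, int]:
    """One pass of the linear perceptron with offset; returns (theta, theta_0, mistakes)."""
    mistakes = 0
    for x, y in zip(xs, ys):
        if y * (sum(t * xi for t, xi in zip(theta, x)) + theta_0) <= 0:
            theta = [t + y * xi for t, xi in zip(theta, x)]
            theta_0 += y
            mistakes += 1
    return theta, theta_0, mistakes


def converges(xs: Sequence[Point], ys: Sequence[int], T: int, seed: int) -> bool:
    """Shuffle the data and draw integer initial parameters, then report whether
    some pass within T passes is mistake-free (so the output stops changing)."""
    rng = random.Random(seed)
    order = list(range(len(xs)))
    rng.shuffle(order)
    xs = [xs[i] for i in order]
    ys = [ys[i] for i in order]
    # Integer parameters keep every dot product exact (no floating-point ties).
    theta = [rng.randint(-5, 5) for _ in xs[0]]
    theta_0 = rng.randint(-5, 5)
    for _ in range(T):
        theta, theta_0, mistakes = perceptron_pass(xs, ys, theta, theta_0)
        if mistakes == 0:
            return True
    return False


# Hypothetical datasets, not the one in the figure.
SEPARABLE_X = [[1, 1], [2, 2], [-1, -1], [-2, -1]]
SEPARABLE_Y = [1, 1, -1, -1]
XOR_X = [[1, 1], [-1, -1], [1, -1], [-1, 1]]  # no line separates the labels
XOR_Y = [1, 1, -1, -1]

for name, xs, ys in [("separable", SEPARABLE_X, SEPARABLE_Y), ("xor", XOR_X, XOR_Y)]:
    runs = [converges(xs, ys, T=200, seed=s) for s in range(20)]
    print(name, all(runs), any(runs))
# separable True True
# xor False False
```

On the separable set, every run stops regardless of starting point or order, as the perceptron convergence theorem guarantees. On the XOR set, every pass contains at least one mistake, because a mistake-free pass would mean the current parameters separate the data.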
