Answered step by step

Verified Expert Solution

Question

1 Approved Answer

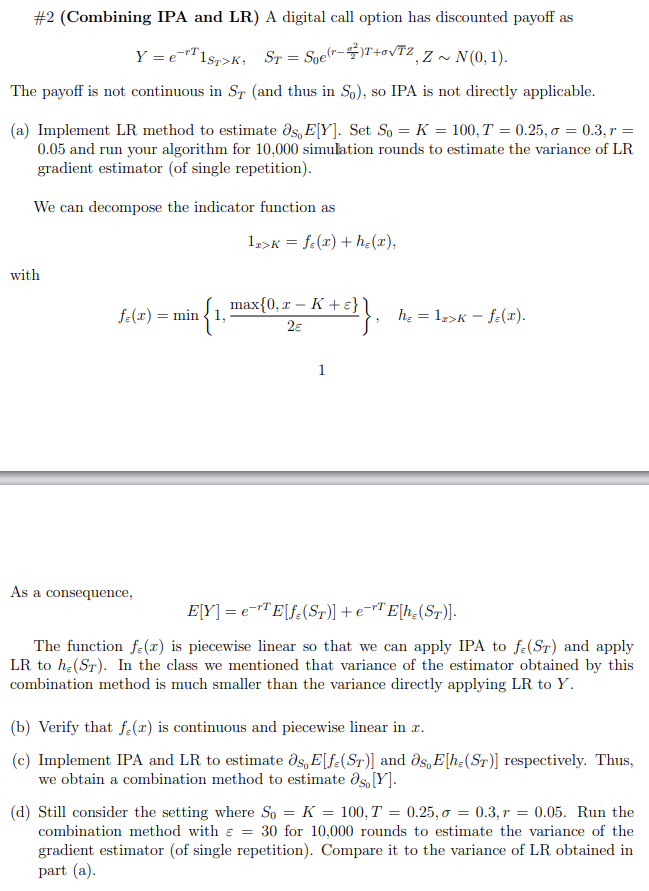

#2 (Combining IPA and LR) A digital call option has discounted payoff as -rT Y = eT 1ST>K, ST = Soe(-4)+TZ, Z ~ N(0,

#2 (Combining IPA and LR) A digital call option has discounted payoff as -rT Y = eT 1ST>K, ST = Soe(-4)+TZ, Z ~ N(0, 1). The payoff is not continuous in ST (and thus in So), so IPA is not directly applicable. (a) Implement LR method to estimate Os, E[Y]. Set So = K = 100, T = 0.25, = 0.3, r = 0.05 and run your algorithm for 10,000 simulation rounds to estimate the variance of LR gradient estimator (of single repetition). We can decompose the indicator function as 1>K = f(x)+h(x), with f(x) = min :{1, max{0,xK+} \ h = 1x>K - f(x). 2 1 As a consequence, E[Y] = e E[f:(ST)] + eTE[h(ST)]. The function f(x) is piecewise linear so that we can apply IPA to f(ST) and apply LR to he(ST). In the class we mentioned that variance of the estimator obtained by this combination method is much smaller than the variance directly applying LR to Y. (b) Verify that f(x) is continuous and piecewise linear in .x. (c) Implement IPA and LR to estimate Os, E[f(ST)] and Os, E[h. (ST)] respectively. Thus, we obtain a combination method to estimate s, [Y]. (d) Still consider the setting where So = K = 100, T = 0.25, = 0.3, r = 0.05. Run the combination method with = 30 for 10,000 rounds to estimate the variance of the gradient estimator (of single repetition). Compare it to the variance of LR obtained in part (a).

Step by Step Solution

There are 3 Steps involved in it

Step: 1

Get Instant Access to Expert-Tailored Solutions

See step-by-step solutions with expert insights and AI powered tools for academic success

Step: 2

Step: 3

Ace Your Homework with AI

Get the answers you need in no time with our AI-driven, step-by-step assistance

Get Started