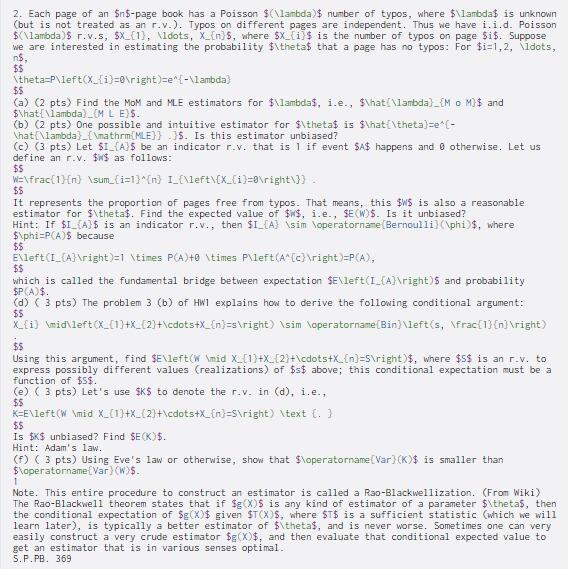

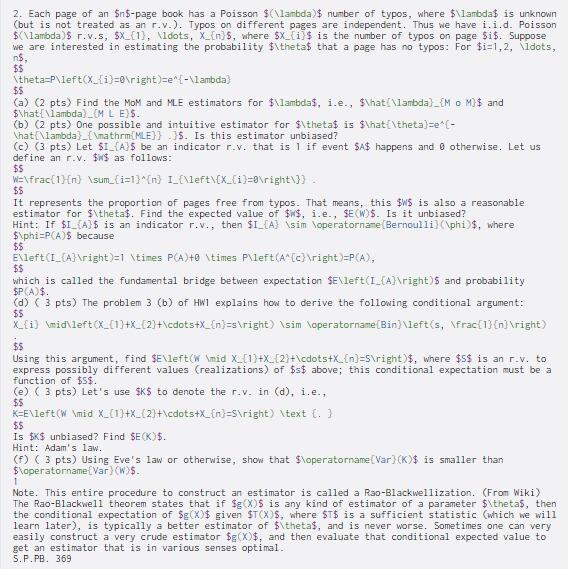

2. Each page of an $n$-page book has a Poisson$(\lambda)$ number of typos, where $\lambda$ is unknown (but is not treated as an r.v.). Typos on different pages are independent. Thus we have i.i.d. Poisson$(\lambda)$ r.v.s, $X_{1}, \dots, X_{n}$, where $X_{i}$ is the number of typos on page $i$. Suppose we are interested in estimating the probability $\theta$ that a page has no typos: for $i=1,2, \ldots, n$,

$$ \theta=P\left(X_{i}=0\right)=e^{-\lambda}. $$

(a) (2 pts) Find the MoM and MLE estimators for $\lambda$, i.e., $\hat{\lambda}_{\mathrm{MoM}}$ and $\hat{\lambda}_{\mathrm{MLE}}$.

(b) (2 pts) One possible and intuitive estimator for $\theta$ is $\hat{\theta}=e^{-\hat{\lambda}_{\mathrm{MLE}}}$. Is this estimator unbiased?

(c) (3 pts) Let $I_{A}$ be an indicator r.v. that is 1 if event $A$ happens and 0 otherwise. Let us define an r.v. $W$ as follows:

$$ W=\frac{1}{n} \sum_{i=1}^{n} I_{\left\{X_{i}=0\right\}}. $$

It represents the proportion of pages free from typos, so $W$ is also a reasonable estimator for $\theta$. Find the expected value of $W$, i.e., $E(W)$. Is it unbiased? Hint: If $I_{A}$ is an indicator r.v., then $I_{A} \sim \operatorname{Bernoulli}(p)$, where $p=P(A)$, because

$$ E\left(I_{A}\right)=1 \times P(A)+0 \times P\left(A^{c}\right)=P(A), $$

which is called the fundamental bridge between expectation $E\left(I_{A}\right)$ and probability $P(A)$.

(d) (3 pts) Problem 3 (b) of HW1 explains how to derive the following conditional argument:

$$ X_{i} \mid\left(X_{1}+X_{2}+\cdots+X_{n}=s\right) \sim \operatorname{Bin}\left(s, \frac{1}{n}\right). $$

Using this argument, find $E\left(W \mid X_{1}+X_{2}+\cdots+X_{n}=S\right)$, where $S$ is an r.v. to express possibly different values (realizations) of $s$ above; this conditional expectation must be a function of $S$.

(e) (3 pts) Let's use $K$ to denote the r.v. in (d), i.e.,

$$ K=E\left(W \mid X_{1}+X_{2}+\cdots+X_{n}=S\right). $$

Is $K$ unbiased? Find $E(K)$. Hint: Adam's law.

(f) (3 pts) Using Eve's law or otherwise, show that $\operatorname{Var}(K)$ is smaller than $\operatorname{Var}(W)$.

Note. This entire procedure to construct an estimator is called Rao-Blackwellization. (From Wiki) The Rao-Blackwell theorem states that if $g(X)$ is any kind of estimator of a parameter $\theta$, then the conditional expectation of $g(X)$ given $T(X)$, where $T$ is a sufficient statistic (which we will learn later), is typically a better estimator of $\theta$, and is never worse. Sometimes one can very easily construct a very crude estimator $g(X)$, and then evaluate that conditional expected value to get an estimator that is in various senses optimal.

S.P.PB. 369
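The claims in parts (c) through (f) can be checked numerically before attempting a proof. The following is a minimal simulation sketch, not part of the original problem. It assumes the closed form $K=(1-1/n)^{S}$, which is what the Binomial conditional in part (d) suggests for $E(W \mid S)$ and should be confirmed by hand. The helper names `poisson_draw` and `simulate`, and the values $\lambda=1$, $n=20$, are illustrative choices only.

```python
import math
import random
import statistics


def poisson_draw(lam, rng):
    """Draw one Poisson(lam) variate with Knuth's multiplication method."""
    threshold = math.exp(-lam)
    count, product = 0, rng.random()
    while product > threshold:
        count += 1
        product *= rng.random()
    return count


def simulate(lam=1.0, n=20, reps=20000, seed=0):
    """Simulate `reps` books of n pages and compare the estimators W and K."""
    rng = random.Random(seed)
    w_vals, k_vals = [], []
    for _ in range(reps):
        pages = [poisson_draw(lam, rng) for _ in range(n)]
        # W: proportion of pages with zero typos.
        w_vals.append(sum(x == 0 for x in pages) / n)
        # K: assumed closed form (1 - 1/n)^S, with S the total typo count.
        k_vals.append((1 - 1 / n) ** sum(pages))
    return {
        "theta": math.exp(-lam),
        "mean_W": statistics.fmean(w_vals),
        "mean_K": statistics.fmean(k_vals),
        "var_W": statistics.pvariance(w_vals),
        "var_K": statistics.pvariance(k_vals),
    }


result = simulate()
for name, value in result.items():
    print(f"{name}: {value:.4f}")
```

If the assumed form of $K$ is right, both sample means should land close to $\theta=e^{-\lambda}$, and the sample variance of $K$ should come out below that of $W$. A simulation only illustrates the result; it does not replace the argument via Adam's and Eve's laws.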