Question: 27. Explain what is hardware prefetching? What is the granularity of prefetched data? 28. Stride prefetching is a hardware prefetching mechanism that identifies memory access

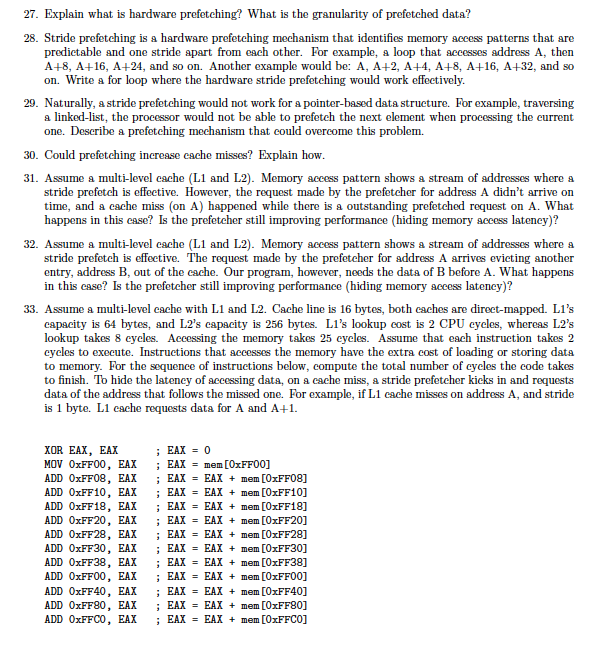

27. Explain what is hardware prefetching? What is the granularity of prefetched data? 28. Stride prefetching is a hardware prefetching mechanism that identifies memory access patterns that are predictable and one stride apart from each other. For example, a loop that accesses address A, then A+8, A+16, A+24, and so on. Another example would be: A, A+2, A+4, A+8, A+16, A+32, and so on. Write a for loop where the hardware stride prefetching would work effectively Naturally, a stride prefetching would not work for a pointer-based data structure. For example, traversing a linked-list, the processor would not be able to prefetch the next element when processing the current one. Describe a prefetching mechanism that could overcome this problem. 29. 30. Could prefetching increase cache misses? Explain how 31. Assume a multi-level cache (L1 and L2). Memory access pattern shows a stream of addresses where a stride prefetch is effective. However, the request made by the prefetcher for address A didn't arrive on time, and a cache miss (on A) happened while there is a outstanding prefetched request on A. What happens in this case? Is the prefetcher still improving performance (hiding memory access lateney)? 32. Assume a multi-level cache (L1 and L2). Memory access pattern shows a stream of addresses where a stride prefetch is effeetive. The request made by the prefetcher for address A arrives evicting another entry, address B, out of the cache. Our program, however, needs the data of B before A. What happens in this case? Is the prefetcher still improving performance (hiding memory access lateney)? 33. Assume a multi-level cache with L1 and L2. Cache line is 16 bytes, both caches are direct-mapped. L1's capacity is 64 bytes, and L2's capscity is 256 bytes. L1's lookup cost is 2 CPU cycles, whereas L2's lookup takes 8 eycles. Accessing the memory takes 25 eycles. Assume that each instruction takes 2 cycles to execute. Instructions that accesses the memory have the extra cost of loading or storing data to memory. For the sequence of instruetions below, compute the total number of cycles the code takes to finish. To hide the latency of accessing data, on a cache miss, a stride prefetcher kicks in and requests data of the address that follows the missed one. For example, if L1 cache misses on address A, and stride is 1 byte. L1 cache requests data for A and A+1. XOR EAX, EAX MOV 0xFF00, EAX ; EAX= mem [ ADD 0xFF08, EAX ; EAX = EAX + mem [0xFF08] ADD OxFF10, EAX ; EAX -EAX mem [0xFF10] ADD OxFF18, EAX ; EAX -EAX mem [0xFF18] ADD 0xFF20, EAX ; EAX = EAX + mem [0xFF20] ADD 0xFF28, EAX ; EAX = EAX + mem [0xFF28] ADD 0xFF30, EAX ; EAX -EAX mem [0xFF30] ADD 0xFF38, EAX ; EAX -EAX mem [0xFF38] ADD 0xFF00, EAX ; EAX = EAX + mem [0xFF00] ADD 0xFF40, EAX ; EAX = EAX + mem [0xFF40] ADD 0xFF80, EAX ; EAX = EAX + mem [0xFF80] ADD OxFFCO, EAX ; EAX = EAX + mem [OxFFCO]

Step by Step Solution

There are 3 Steps involved in it

Get step-by-step solutions from verified subject matter experts