Answered step by step

Verified Expert Solution

Question

1 Approved Answer

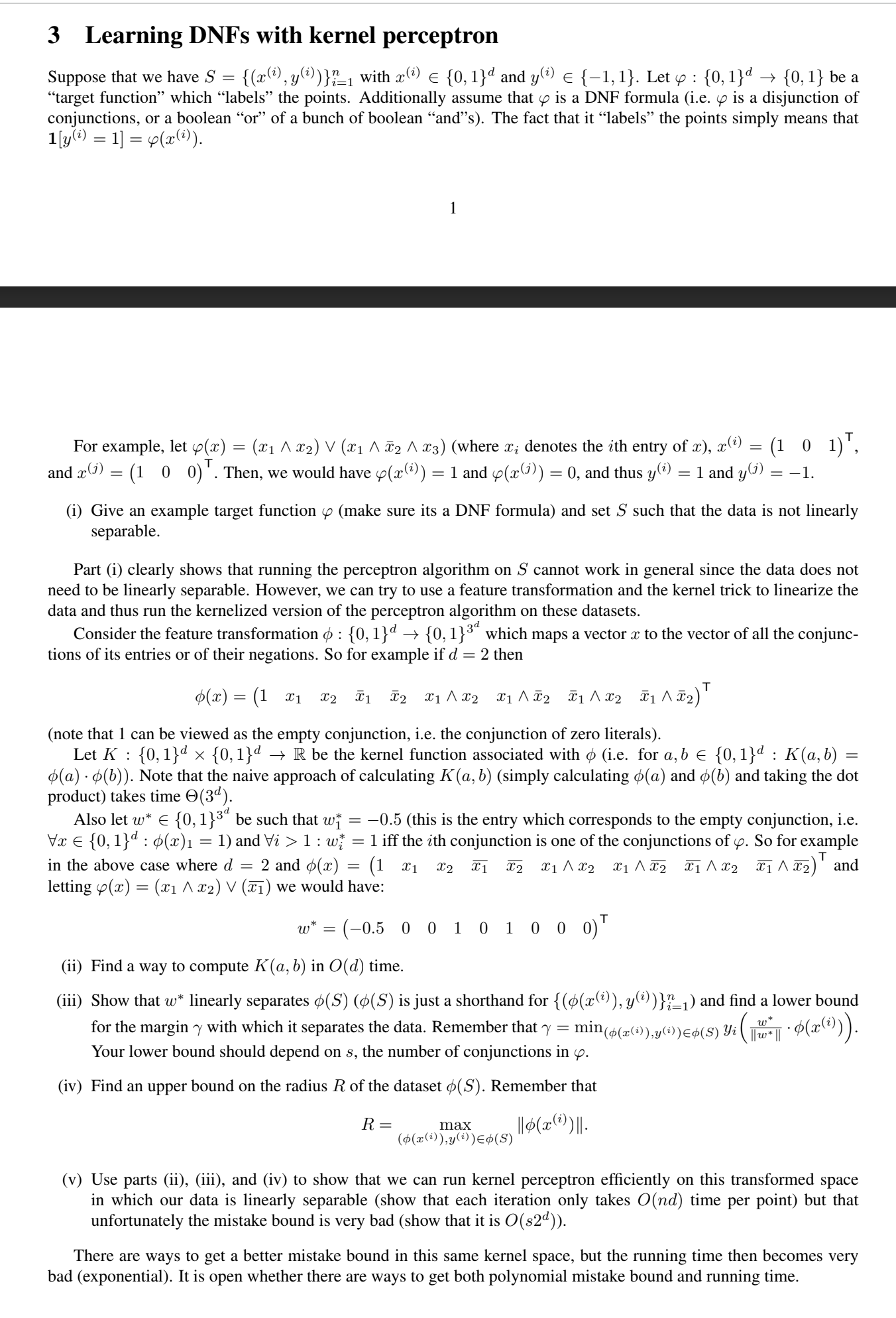

3 Learning DNFs with kernel perceptron Suppose that we have S = { ( x ( i ) , y ( i ) ) }

Learning DNFs with kernel perceptron

Suppose that we have with and Let : be a

"target function" which "labels" the points. Additionally assume that is a DNF formula ie is a disjunction of

conjunctions, or a boolean or of a bunch of boolean "and"s The fact that it "labels" the points simply means that

For example, let where denotes the th entry of :

and Then, we would have and and thus and

i Give an example target function make sure its a DNF formula and set such that the data is not linearly

separable.

Part i clearly shows that running the perceptron algorithm on cannot work in general since the data does not

need to be linearly separable. However, we can try to use a feature transformation and the kernel trick to linearize the

data and thus run the kernelized version of the perceptron algorithm on these datasets.

Consider the feature transformation : which maps a vector to the vector of all the conjunc

tions of its entries or of their negations. So for example if then

note that can be viewed as the empty conjunction, ie the conjunction of zero literals

Let : be the kernel function associated with ie for bin:

Note that the naive approach of calculating simply calculating and and taking the dot

product takes time

Also let be such that this is the entry which corresponds to the empty conjunction, ie

:AAxin: and AAi: iff the th conjunction is one of the conjunctions of So for example

letting we would have:

ii Find a way to compute in time.

iii Show that linearly separates is just a shorthand for : and find a lower bound

for the margin with which it separates the data. Remember that

Your lower bound should depend on the number of conjunctions in

iv Find an upper bound on the radius of the dataset Remember that

v Use parts iiiii and iv to show that we can run kernel perceptron efficiently on this transformed space

in which our data is linearly separable show that each iteration only takes time per point but that

unfortunately the mistake bound is very bad show that it is

There are ways to get a better mistake bound in this same kernel space, but the running time then becomes very

bad exponential It is open whether there are ways to get both polynomial mistake bound and running time.

Step by Step Solution

There are 3 Steps involved in it

Step: 1

Get Instant Access to Expert-Tailored Solutions

See step-by-step solutions with expert insights and AI powered tools for academic success

Step: 2

Step: 3

Ace Your Homework with AI

Get the answers you need in no time with our AI-driven, step-by-step assistance

Get Started