Answered step by step

Verified Expert Solution

Question

1 Approved Answer

[ 3 pts ] Explain why ( in most cases ) minimizing KL divergence is equivalent to minimizing cross - entropy [ 3 pts ]

pts Explain why in most cases minimizing KL divergence is equivalent to minimizing crossentropy

pts Explain why sigmoid causes vanishing gradient

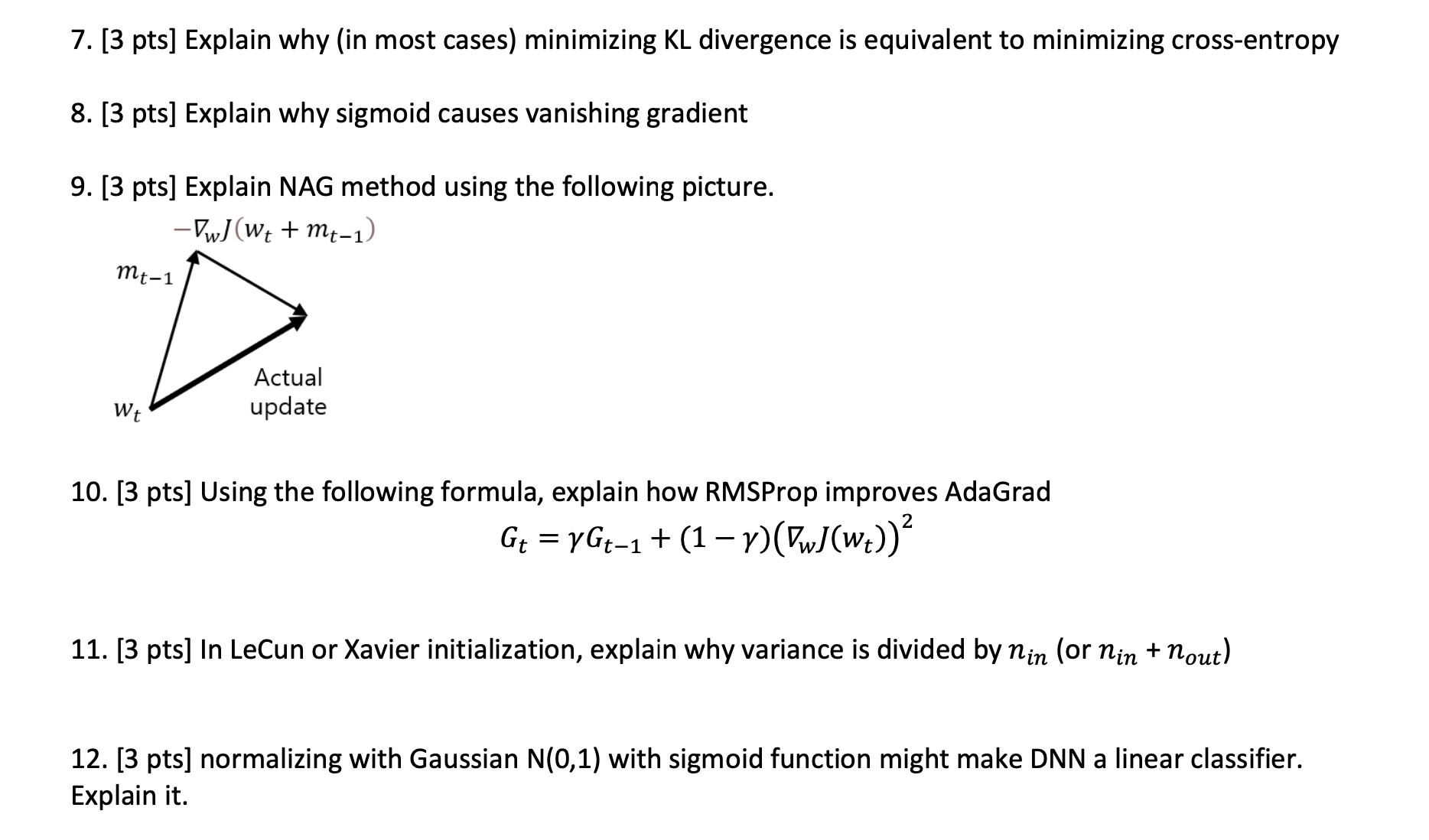

pts Explain NAG method using the following picture.

pts Using the following formula, explain how RMSProp improves AdaGrad

pts In LeCun or Xavier initialization, explain why variance is divided by or

pts normalizing with Gaussian with sigmoid function might make DNN a linear classifier.

Explain it

Step by Step Solution

There are 3 Steps involved in it

Step: 1

Get Instant Access to Expert-Tailored Solutions

See step-by-step solutions with expert insights and AI powered tools for academic success

Step: 2

Step: 3

Ace Your Homework with AI

Get the answers you need in no time with our AI-driven, step-by-step assistance

Get Started