Question

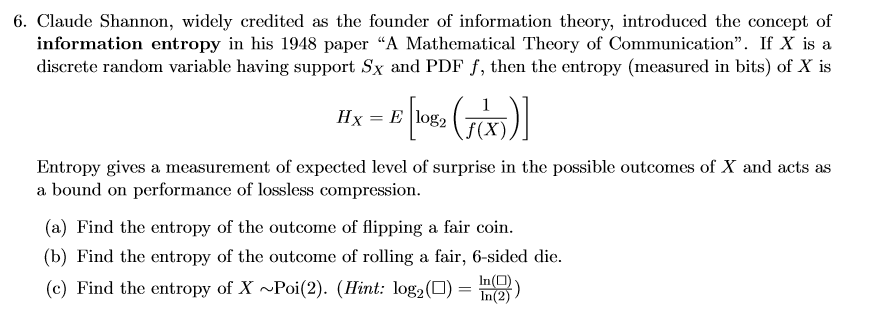

6. Claude Shannon, widely credited as the founder of information theory, introduced the concept of information entropy in his 1948 paper "A Mathematical Theory of Communication". If $X$ is a discrete random variable having support $S_X$ and PDF $f$, then the entropy (measured in bits) of $X$ is

$$H_X = -E\left[\log_2\big(f(X)\big)\right].$$

Entropy measures the expected level of surprise in the possible outcomes of $X$ and acts as a bound on the performance of lossless compression.

(a) Find the entropy of the outcome of flipping a fair coin.
(b) Find the entropy of the outcome of rolling a fair, 6-sided die.
(c) Find the entropy of $X \sim \mathrm{Poi}(2)$. (Hint: $\log_2(\theta) = \frac{\ln(\theta)}{\ln(2)}$.)
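As a rough numerical check of the definition, the expectation can be expanded over the support as $H_X = -\sum_{x \in S_X} f(x)\log_2 f(x)$ and evaluated directly. The sketch below is only an illustration, not the worked solution to the exercise: the helper names `entropy_bits` and `poisson_pmf` are hypothetical, and the Poisson support, which is infinite, is truncated at 100 terms on the assumption that the remaining tail is negligible for a rate of 2.

```python
import math


def entropy_bits(probs):
    """Shannon entropy in bits: -sum p * log2(p), skipping zero-probability terms."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def poisson_pmf(k, lam):
    """Probability that a Poisson(lam) variable equals k."""
    return math.exp(-lam) * lam**k / math.factorial(k)


# (a) Fair coin: two outcomes, each with probability 1/2.
coin = entropy_bits([0.5, 0.5])

# (b) Fair six-sided die: six outcomes, each with probability 1/6.
die = entropy_bits([1 / 6] * 6)

# (c) Poisson with rate 2. The support is infinite, so the sum is
# truncated at k = 99 (an assumption; the omitted tail is tiny here).
poisson = entropy_bits(poisson_pmf(k, 2.0) for k in range(100))

print(f"coin:    {coin:.4f} bits")     # 1 bit
print(f"die:     {die:.4f} bits")      # log2(6), about 2.585 bits
print(f"poisson: {poisson:.4f} bits")
```

The coin and die results agree with the closed form $\log_2 n$ for a uniform distribution on $n$ outcomes. Part (c) has no comparably simple closed form, which is why the hint about converting between $\log_2$ and $\ln$ is useful when working it by hand.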

