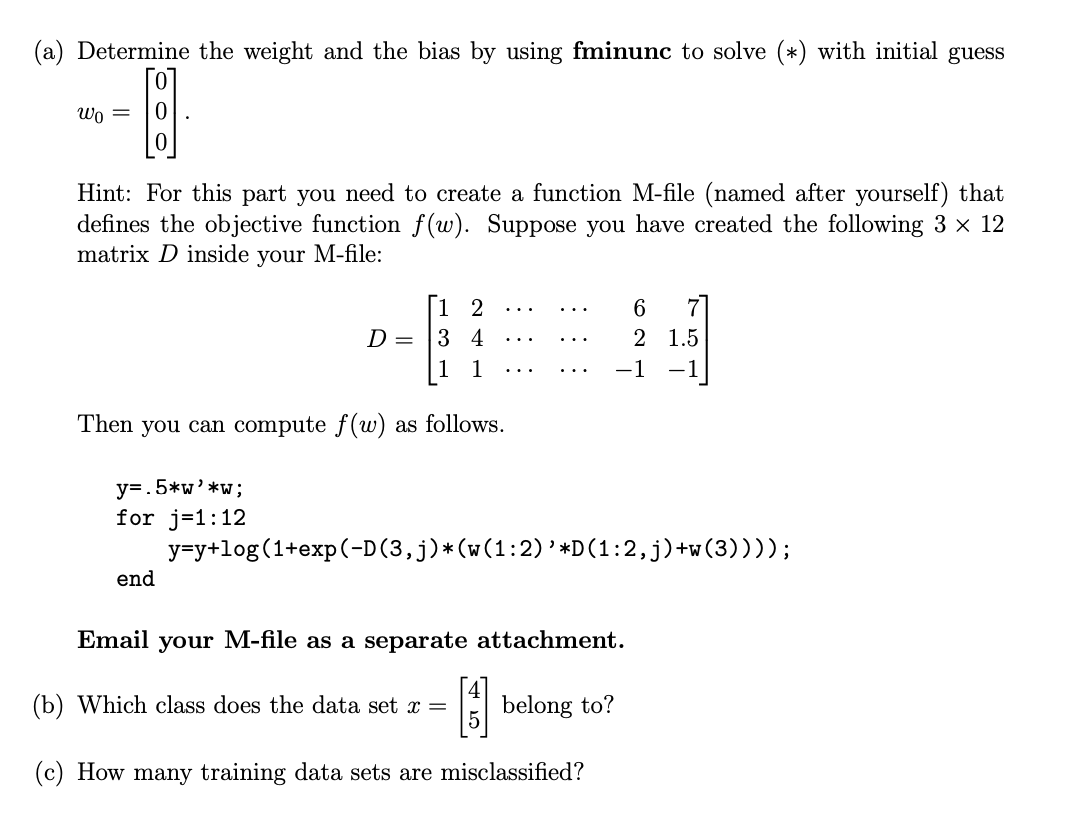

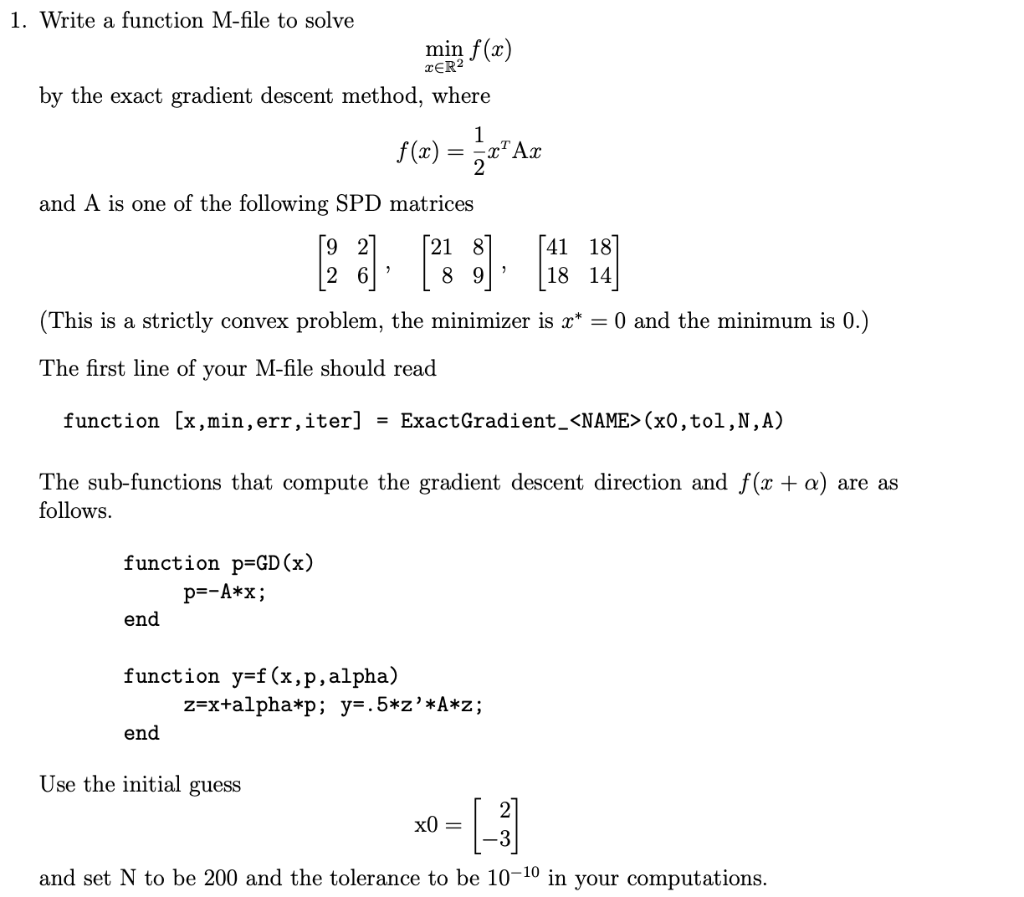

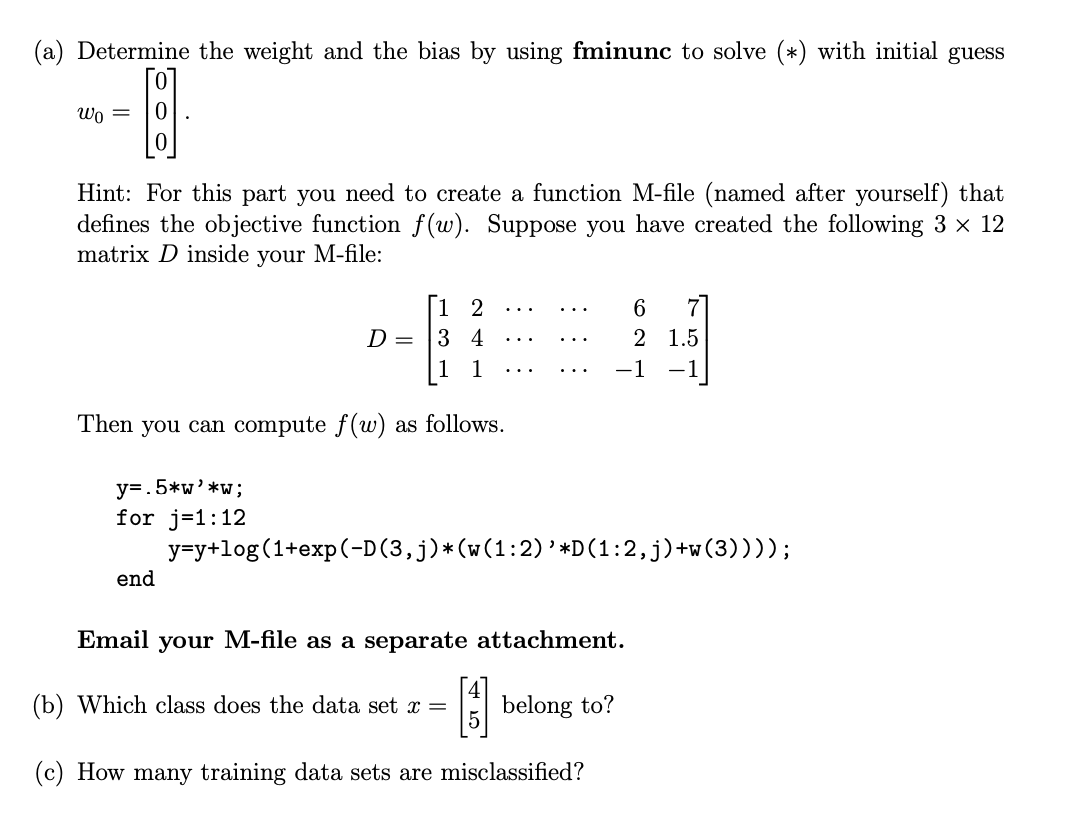

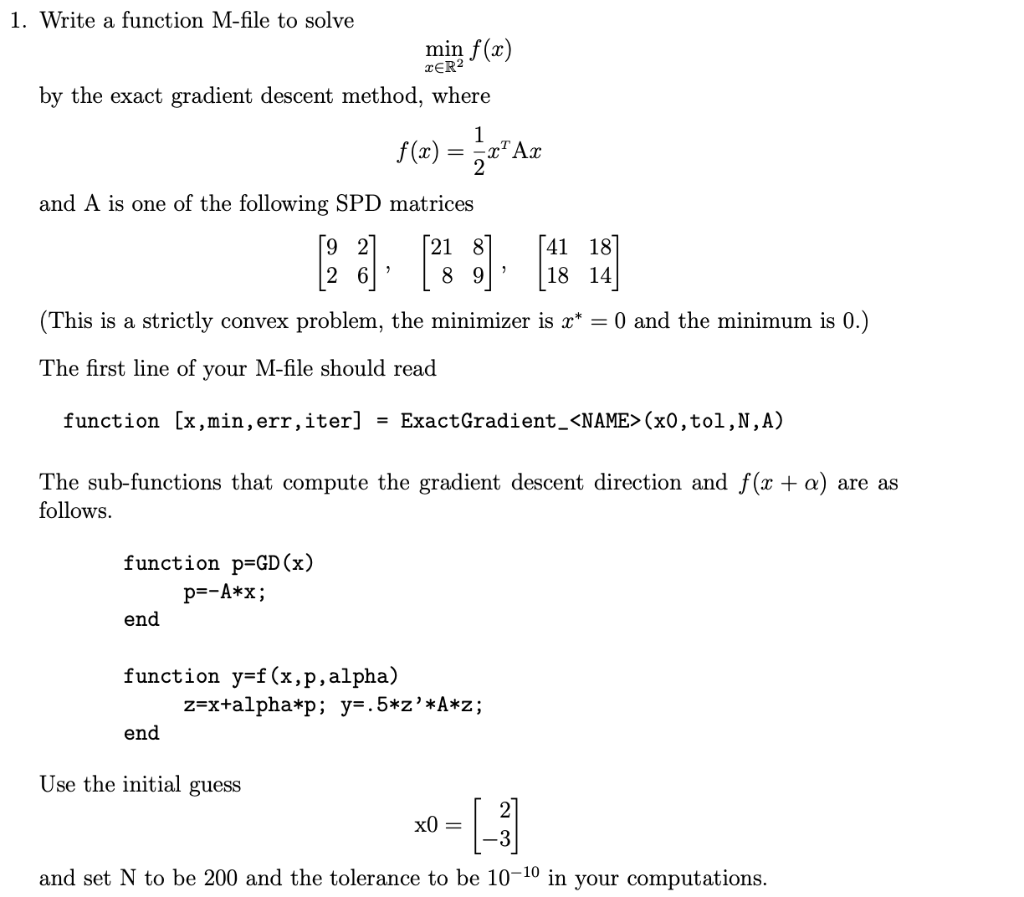

(a) Determine the weight and the bias by using `fminunc` to solve (*) with initial guess $w_0 = 0$.

Hint: For this part you need to create a function M-file (named after yourself) that defines the objective function $f(w)$. Suppose you have created the following $3 \times 12$ matrix `D` inside your M-file:

i 2 D= 3 4 1 6 7 2 1.5 - 1

Then you can compute $f(w)$ as follows.

    y=.5*w'*w;
    for j=1:12
        y=y+log(1+exp(-D(3,j)*(w(1:2)'*D(1:2,j)+w(3))));
    end

Email your M-file as a separate attachment.

(b) Which class does the data set x = belong to?

(c) How many training data sets are misclassified?

1. Write a function M-file to solve $\min f(x)$ by the exact gradient descent method, where $f(x) = \frac{1}{2} x^T A x$ and $A$ is one of the following SPD matrices:

   192 21 8 41 18 8 9 18 14]

   (This is a strictly convex problem; the minimizer is $x^* = 0$ and the minimum is 0.)

   The first line of your M-file should read

       function [x,min,err,iter] = ExactGradient_(x0,tol,N,A)

   The sub-functions that compute the gradient descent direction and $f(x + \alpha p)$ are as follows.

       function p=GD(x)
       p=-A*x;
       end

       function y=f(x,p,alpha)
       z=x+alpha*p;
       y=.5*z'*A*z;
       end

   Use the initial guess x0 = and set N to be 200 and the tolerance to be $10^{-10}$ in your computations.
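For reference, the exact step length for this quadratic has a closed form. The derivation below is a sketch that assumes $f(x) = \frac{1}{2} x^T A x$ (as the sub-function `f` implies) and $p = -Ax$ (as in `GD`); the symbols $\varphi$, $\alpha^*$ and the iteration index $k$ are introduced here for illustration and do not appear in the problem statement. Writing $\varphi(\alpha) = f(x + \alpha p)$ and using $Ax = -p$:

$$
\varphi(\alpha) = \tfrac{1}{2} x^T A x + \alpha\, p^T A x + \tfrac{1}{2} \alpha^2\, p^T A p
= \tfrac{1}{2} x^T A x - \alpha\, p^T p + \tfrac{1}{2} \alpha^2\, p^T A p .
$$

Setting $\varphi'(\alpha) = -p^T p + \alpha\, p^T A p = 0$ gives

$$
\alpha^* = \frac{p^T p}{p^T A p}, \qquad x_{k+1} = x_k + \alpha^* p_k .
$$

Because $A$ is SPD, $p^T A p > 0$ whenever $p \neq 0$, so $\alpha^*$ is well defined and positive, and $\varphi''(\alpha) = p^T A p > 0$ confirms it is the minimizer along $p$. If the assignment intends a numerical one-dimensional minimization of `f(x,p,alpha)` instead, this closed form can still serve as a check on the computed step.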