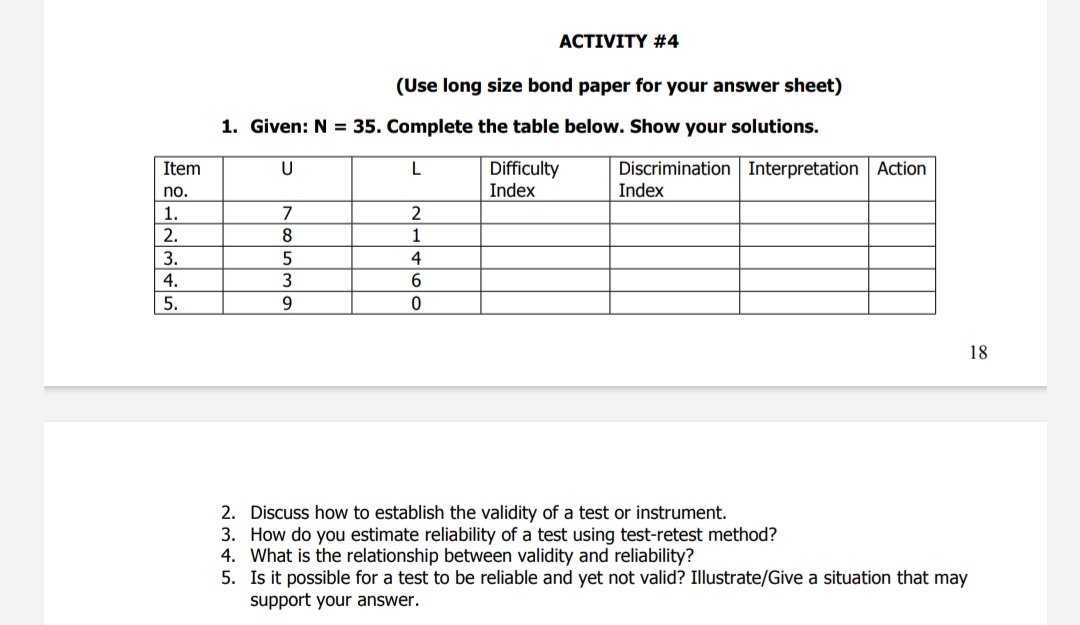

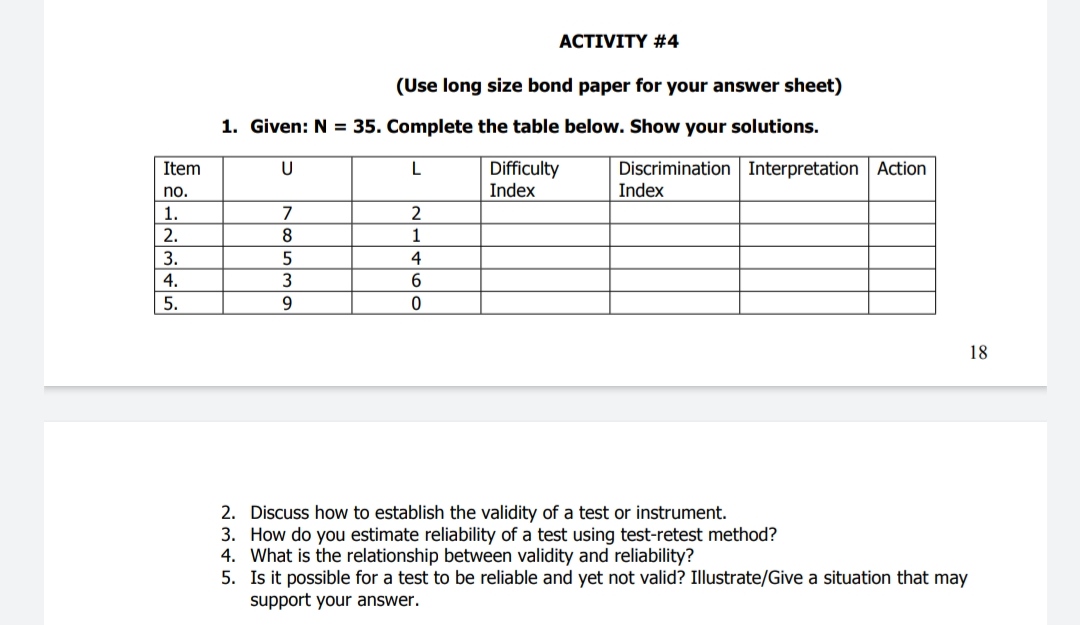

Question: ACTIVITY #4 (Use long size bond paper for your answer sheet) 1. Given: N = 35. Complete the table below. Show your solutions.

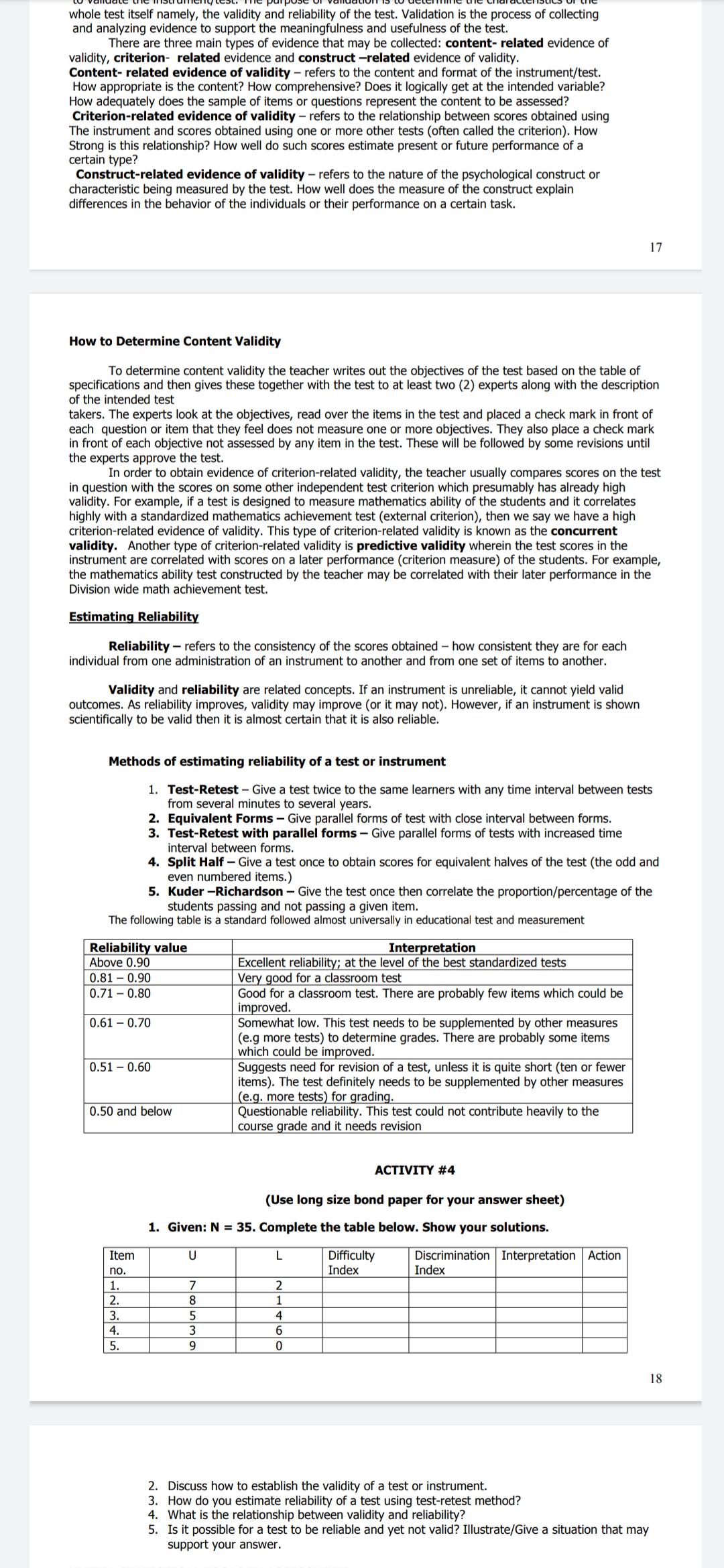

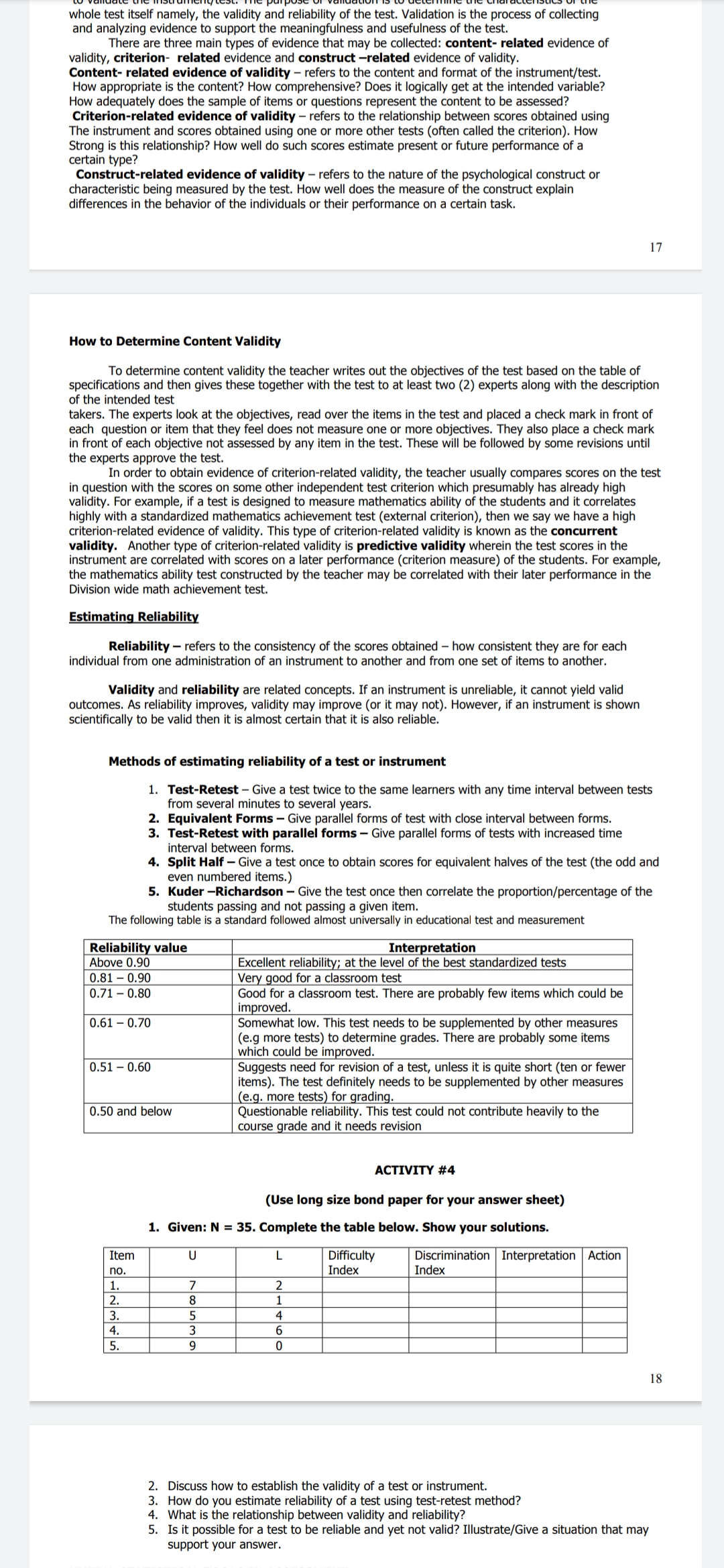

ACTIVITY #4 (Use long size bond paper for your answer sheet)

1. Given: N = 35. Complete the table below. Show your solutions.

| Item no. | U | L | Difficulty Index | Discrimination Index | Interpretation | Action |
|---|---|---|---|---|---|---|
| 1 | 7 | 2 | | | | |
| 2 | | | | | | |
| 3 | 4 | | | | | |
| 4 | 6 | | | | | |
| 5 | 0 | | | | | |

2. Discuss how to establish the validity of a test or instrument.
3. How do you estimate the reliability of a test using the test-retest method?
4. What is the relationship between validity and reliability?
5. Is it possible for a test to be reliable and yet not valid? Illustrate/give a situation that may support your answer.

UNIT IV. ADMINISTERING, ANALYZING AND IMPROVING THE TEST

Objectives: At the end of the topic, the students must have:
1. discussed and applied the principles of assembling and administering a test;
2. explained the importance of item analysis;
3. performed item analysis to improve the test;
4. discussed how to establish the validity of a test or instrument;
5. identified and explained the methods of estimating validity of a test.

Assembling the test

Test assembling means recording, reviewing, and arranging test items.
- Items should be arranged according to type.
- Items should be arranged in order of difficulty.
- Items of the same subject-matter content may be grouped together.

How to assemble the test
1. Record items on index cards and file them in an item bank.
2. Double-check each individual test item. Review the checklist for each item type.
3. Double-check the items as a set:
   - Does the set still follow the table of specifications (TOS)?
   - Are there enough items for the desired interpretations?
   - Is the difficulty level appropriate?
   - Are the items non-overlapping, so that they don't give clues?
4. Arrange items appropriately, which usually means:
   - keep all items of one type together;
   - put the lowest-level item types first;
   - within item types, put the easiest learning outcomes first.
5. Prepare directions:
   - how to allot time;
   - how to respond;
   - how and where to record answers.
6. Reproduce the test:
   - Leave ample white space on every page.
   - Keep all parts of an item on the same page.
   - When not using a separate answer sheet, provide spaces for answering down one side of the page.

Administering the test

Guidelines:
1. Observe precision in giving instructions or clarifications.
2. Avoid interruptions.
3. Avoid giving hints to students who ask about individual items. If an item is ambiguous, clarify it for the entire group.
4. Discourage cheating.

Analyzing test results

Item Analysis - a statistical method used to determine the quality of a test by looking at each individual item or question and determining whether it is sound or effective. It helps identify items or questions that are not good and whether they should be discarded, kept, or revised. It is used for selecting and rejecting items on the basis of their difficulty value and discriminating power.

The item analysis procedure provides the following information:
1. the difficulty of the item;
2. the discriminating power of the item;
3. the effectiveness of each alternative/option.

Benefits derived from item analysis:
1. It provides useful information for class discussion of the test.
2. It provides data that help students improve their learning.
3. It provides insights and skills that lead to the preparation of better tests in the future.

Qualitative Item Analysis
- a non-numerical method for analyzing test items that does not employ students' responses but considers the test objectives, content validity, and technical item quality.
- a process in which the teacher or an expert carefully proofreads the test before it is administered, to check for typographical errors, to avoid grammatical clues that may give away the correct answer, and to ensure that the reading level of the material is appropriate.

Quantitative Item Analysis
- a numerical method for analyzing test items that employs students' responses to the alternatives or options.
- evaluating items in terms of statistical properties such as item difficulty and item discrimination.

Difficulty index - the proportion of students who answered the item correctly. It indicates whether the item is easy, of average difficulty, or difficult.

Discrimination index - a measure of the extent to which a test item discriminates, or differentiates, between students who do well on the overall test and those who do not; that is, the extent to which the item discriminates between the bright and the poor students.

How to do item analysis (using the upper/lower 27% index method)

Steps:
1. Rank the scores of the students (arrange from highest to lowest or vice versa).
2. Compute 27% of the total number of students who took the test to determine the number of students who belong to the upper/lower 27%.
   Ex. Number of students who took the test (N) = 30
   27% of 30 = 0.27 × 30 = 8.1, or 8
   This means that there will be 8 students from the top and another 8 from the bottom, so only 16 test papers out of 30 will be analyzed.
3. Separate the test papers of the upper 27% from those of the lower 27%.
4. Determine (tally) the number of students in the upper 27% who got the item right. Label it U. Apply the same procedure to the lower 27% and label it L.
5. Compute the difficulty and discrimination indices using the formulas below, where T = total number of test papers to be analyzed (upper 27% + lower 27%), and U and L are as defined in step 4:
   - Difficulty Index: $DfI = \dfrac{U + L}{T}$
   - Discrimination Index: $DsI = \dfrac{U - L}{T/2}$
6. Interpret the results using the scale below.
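The six steps above can be sketched in Python as a handful of small functions. This is a minimal sketch, not the lesson's own procedure in code. The function names (`group_size`, `tally_upper_lower`, `difficulty_index`, `discrimination_index`, `interpret_difficulty`, `interpret_discrimination`) are hypothetical. The thresholds are copied from the two interpretation scales that accompany these steps, and a value falling between two printed ranges (for example 0.255) is assumed to belong to the higher range.

```python
import math
from typing import Sequence, Tuple


def group_size(n_students: int, fraction: float = 0.27) -> int:
    """Step 2: students in the upper (or lower) 27% group.

    Rounds half up to the nearest whole number, e.g. 0.27 x 60 = 16.2 -> 16.
    """
    return math.floor(n_students * fraction + 0.5)


def tally_upper_lower(
    total_scores: Sequence[float], item_correct: Sequence[bool], fraction: float = 0.27
) -> Tuple[int, int, int]:
    """Steps 1, 3 and 4: return (U, L, T) for one item.

    total_scores[i] is student i's score on the whole test;
    item_correct[i] says whether student i got this item right.
    """
    if len(total_scores) != len(item_correct):
        raise ValueError("need one item response per student")
    k = group_size(len(total_scores), fraction)
    if k == 0:
        raise ValueError("too few students to form upper/lower groups")
    # Step 1: rank from highest to lowest total score. The sort is stable,
    # so ties at the cut-off keep their original order (an arbitrary choice).
    ranked = sorted(range(len(total_scores)), key=lambda i: total_scores[i], reverse=True)
    upper, lower = ranked[:k], ranked[-k:]  # step 3: separate the two groups
    u = sum(1 for i in upper if item_correct[i])  # step 4: tally U
    l = sum(1 for i in lower if item_correct[i])  # step 4: tally L
    return u, l, 2 * k  # T = upper 27% + lower 27%


def difficulty_index(u: int, l: int, t: int) -> float:
    """Step 5: DfI = (U + L) / T."""
    return (u + l) / t


def discrimination_index(u: int, l: int, t: int) -> float:
    """Step 5: DsI = (U - L) / (T / 2)."""
    return (u - l) / (t / 2)


def interpret_difficulty(dfi: float) -> Tuple[str, str]:
    """Step 6, difficulty scale: returns (interpretation, action).

    Assumption: values between printed ranges go to the higher range.
    """
    if dfi <= 0.25:
        return "Difficult", "Revise or Discard"
    if dfi <= 0.75:
        return "Right Difficulty", "Retain"
    return "Easy", "Revise or Discard"


def interpret_discrimination(dsi: float) -> Tuple[str, str]:
    """Step 6, discrimination scale: returns (interpretation, action).

    Assumption: values between printed ranges go to the higher range.
    """
    if dsi <= -0.56:
        return "Can discriminate but item is questionable", "Discard"
    if dsi <= 0.45:
        return "Non-discriminating item", "Revise"
    return "Discriminating item", "Include"


if __name__ == "__main__":
    # Example 1 from the lesson: N = 60, U = 14, L = 2.
    t = 2 * group_size(60)  # 16 + 16 = 32
    dfi = difficulty_index(14, 2, t)
    dsi = discrimination_index(14, 2, t)
    print(dfi, interpret_difficulty(dfi))  # 0.5 ('Right Difficulty', 'Retain')
    print(dsi, interpret_discrimination(dsi))  # 0.75 ('Discriminating item', 'Include')
```

Running it reproduces Example 1 (T = 32, DfI = 0.5, DsI = 0.75). The half-up rounding in `group_size` matches "rounded off to the nearest whole no." in that example. Python's built-in `round` would instead round exact halves to the nearest even number.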
Scale for Interpreting Difficulty Index:

| Range | Interpretation | Action |
|---|---|---|
| 0.00 to 0.25 | Difficult | Revise or Discard |
| 0.26 to 0.75 | Right Difficulty | Retain |
| 0.76 and above | Easy | Revise or Discard |

Scale for Interpreting Discrimination Index:

| Range | Interpretation | Action |
|---|---|---|
| -1.00 to -0.56 | Can discriminate but item is questionable | Discard |
| -0.55 to 0.45 | Non-discriminating item | Revise |
| 0.46 to 1.00 | Discriminating item | Include |

Examples:

1. Given: N = 60 (total number of students in the class)

   a) How many students belong to the upper or lower 27%?
   Solution: 0.27 × 60 = 16.2, or 16 students (answer, rounded off to the nearest whole number)

   b) What is the total number of test papers/students analyzed (T)?
   Solution: 16 students in the upper 27% + 16 students in the lower 27% = 32 (answer)

   c) If U = 14, L = 2, and T = 32, compute the difficulty index and the discrimination index.
   Solutions:
   $DfI = \dfrac{U + L}{T} = \dfrac{14 + 2}{32} = \dfrac{16}{32} = 0.5$
   $DsI = \dfrac{U - L}{T/2} = \dfrac{14 - 2}{16} = \dfrac{12}{16} = 0.75$

2. Interpret the results.
   Solution: For the difficulty index, since 0.5 falls within the range 0.26 to 0.75 (refer to the scale), the item has the right difficulty, or average difficulty (answer). For the discrimination index, 0.75 falls within the range 0.46 to 1.00, so the item is discriminating (answer).

3. Based on the interpretations, what action will be taken on the item?
   Solution: Since the item has the right difficulty, it should be retained. Since the item is discriminating, it should be included (answer).

Establishing Validity

Validity - the extent to which a test measures what it intends to measure.

After performing the item analysis and revising the items that need revision, the next step is to validate the instrument/test. The purpose of validation is to determine the characteristics of the whole test itself, namely its validity and reliability. Validation is the process of collecting and analyzing evidence to support the meaningfulness and usefulness of the test.
There are three main types of evidence that may be collected: content-related evidence, criterion-related evidence, and construct-related evidence of validity.

Content-related evidence of validity - refers to the content and format of the instrument/test. How appropriate is the content? How comprehensive is it? Does it logically get at the intended variable? How adequately does the sample of items or questions represent the content to be assessed?

Criterion-related evidence of validity - refers to the relationship between scores obtained using the instrument and scores obtained using one or more other tests (often called the criterion). How strong is this relationship? How well do such scores estimate present or future performance of a certain type?

Construct-related evidence of validity - refers to the nature of the psychological construct or characteristic being measured by the test. How well does the measure of the construct explain differences in the behavior of individuals or in their performance on a certain task?

How to Determine Content Validity

To determine content validity, the teacher writes out the objectives of the test based on the table of specifications and then gives these, together with the test and a description of the intended test takers, to at least two (2) experts. The experts look at the objectives, read over the items in the test, and place a check mark in front of each question or item that they feel does not measure one or more objectives. They also place a check mark in front of each objective not assessed by any item in the test. This is followed by revisions until the experts approve the test.

In order to obtain evidence of criterion-related validity, the teacher usually compares scores on the test in question with scores on some other independent test criterion that presumably already has high validity.
For example, if a test designed to measure students' mathematics ability correlates highly with a standardized mathematics achievement test (external criterion), then we say we have high criterion-related evidence of validity. This type of criterion-related validity is known as concurrent validity.

Another type of criterion-related validity is predictive validity, wherein the scores on the instrument are correlated with scores on a later performance (criterion measure) of the students. For example, the mathematics ability test constructed by the teacher may be correlated with the students' later performance in the division-wide math achievement test.
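Concurrent and predictive validity both reduce to correlating two sets of scores from the same students. They differ only in when the criterion measure is taken. Below is a minimal sketch, assuming the Pearson product-moment correlation is the coefficient used, since the lesson says "correlates" without naming one. `pearson_r` is a hypothetical helper, and the scores in the usage part are made up for illustration.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between test scores x and criterion scores y."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equally long lists with at least 2 scores")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    # Sum of cross-products and sums of squares of deviations from the mean.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("scores must not all be identical")
    return sxy / math.sqrt(sxx * syy)


if __name__ == "__main__":
    # Hypothetical scores of the same five students (illustration only).
    teacher_test = [12, 15, 9, 18, 14]
    standardized_test = [40, 48, 33, 55, 45]  # concurrent: taken at about the same time
    print(round(pearson_r(teacher_test, standardized_test), 2))
```

For predictive validity, the second list would simply hold the students' later criterion scores, such as the division-wide test. A coefficient close to +1 is what the lesson calls a high criterion-related evidence of validity.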
Estimating Reliability

Reliability - refers to the consistency of the scores obtained: how consistent they are for each individual from one administration of an instrument to another and from one set of items to another.

Validity and reliability are related concepts. If an instrument is unreliable, it cannot yield valid outcomes. As reliability improves, validity may improve (or it may not). However, if an instrument is shown scientifically to be valid, then it is almost certain that it is also reliable.

Methods of estimating reliability of a test or instrument
1. Test-Retest - Give a test twice to the same learners, with a time interval between tests anywhere from several minutes to several years.
2. Equivalent Forms - Give parallel forms of the test with a close interval between forms.
3. Test-Retest with Parallel Forms - Give parallel forms of the test with an increased time interval between forms.
4. Split Half - Give a test once to obtain scores for equivalent halves of the test (the odd- and even-numbered items).
5. Kuder-Richardson - Give the test once, then correlate the proportion/percentage of the students passing and not passing a given item.

The following table is a standard followed almost universally in educational testing and measurement:

| Reliability value | Interpretation |
|---|---|
| Above 0.90 | Excellent reliability; at the level of the best standardized tests. |
| 0.81 to 0.90 | Very good for a classroom test. |
| 0.71 to 0.80 | Good for a classroom test. There are probably a few items which could be improved. |
| 0.61 to 0.70 | Somewhat low. This test needs to be supplemented by other measures (e.g., more tests) to determine grades. There are probably some items which could be improved. |
| 0.51 to 0.60 | Suggests need for revision of the test, unless it is quite short (ten or fewer items). The test definitely needs to be supplemented by other measures (e.g., more tests) for grading. |
| 0.50 and below | Questionable reliability. This test should not contribute heavily to the course grade, and it needs revision. |
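The reliability methods above can be sketched as follows, assuming each item is scored right/wrong (1/0). Test-retest and equivalent-forms reliability both correlate two sets of scores from the same learners, so they share `pearson_r`. Two pieces are standard additions not spelled out in the lesson:

- the split-half correlation is stepped up to full test length with the Spearman-Brown formula;
- the Kuder-Richardson method is implemented as the usual KR-20 formula.

All function names are hypothetical. The cut-offs in `interpret_reliability` follow the table, with values between printed ranges (for example 0.805) assumed to belong to the higher band.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Correlation of two score lists (test-retest, equivalent forms)."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equally long lists with at least 2 scores")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("scores must not all be identical")
    return sxy / math.sqrt(sxx * syy)


def split_half(item_matrix: Sequence[Sequence[int]]) -> float:
    """Split half: correlate odd-item and even-item totals (rows = students)."""
    odd = [sum(row[0::2]) for row in item_matrix]  # items 1, 3, 5, ...
    even = [sum(row[1::2]) for row in item_matrix]  # items 2, 4, 6, ...
    r_half = pearson_r(odd, even)
    if r_half == -1:
        raise ValueError("Spearman-Brown is undefined for r = -1")
    # Spearman-Brown step-up (standard correction, not stated in the lesson).
    return 2 * r_half / (1 + r_half)


def kr20(item_matrix: Sequence[Sequence[int]]) -> float:
    """KR-20 (assumed form of the Kuder-Richardson method), 0/1 items."""
    n_students = len(item_matrix)
    if n_students < 2:
        raise ValueError("need at least 2 students")
    k = len(item_matrix[0])
    if k < 2:
        raise ValueError("need at least 2 items")
    totals = [sum(row) for row in item_matrix]
    mean_total = sum(totals) / n_students
    # Population variance of total scores, matching p * q for each item.
    var_total = sum((t - mean_total) ** 2 for t in totals) / n_students
    if var_total == 0:
        raise ValueError("total scores must vary")
    sum_pq = 0.0
    for j in range(k):
        p = sum(row[j] for row in item_matrix) / n_students  # proportion passing
        sum_pq += p * (1 - p)  # q = 1 - p is the proportion not passing
    return (k / (k - 1)) * (1 - sum_pq / var_total)


def interpret_reliability(r: float) -> str:
    """Band from the reliability table (gaps go to the higher band)."""
    if r > 0.90:
        return "Excellent reliability"
    if r > 0.80:
        return "Very good for a classroom test"
    if r > 0.70:
        return "Good for a classroom test"
    if r > 0.60:
        return "Somewhat low"
    if r > 0.50:
        return "Suggests need for revision"
    return "Questionable reliability"


if __name__ == "__main__":
    # Test-retest with hypothetical scores of the same five learners.
    first, second = [35, 28, 40, 22, 31], [33, 30, 38, 25, 29]
    r = pearson_r(first, second)
    print(round(r, 2), interpret_reliability(r))
    # Hypothetical 0/1 responses: rows = students, columns = items.
    responses = [[1, 1, 1], [1, 1, 0], [1, 0, 0], [0, 0, 0]]
    print(kr20(responses))  # 0.75
    print(round(split_half(responses), 2), interpret_reliability(kr20(responses)))
```

For the test-retest method asked about in the activity, only `pearson_r` is needed. The two administrations are correlated, and the resulting coefficient is read against the table.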
