Question: Answer three questions below on the article. 1- Racial slur was mentioned in the article. Provide a definition for this term. And analyze how the

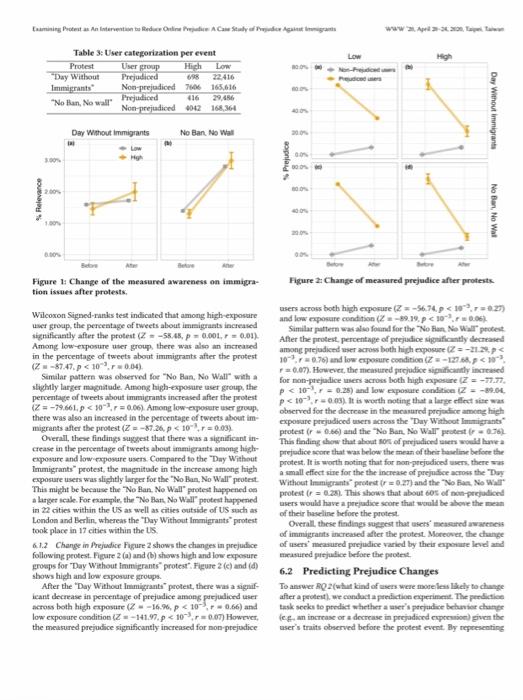

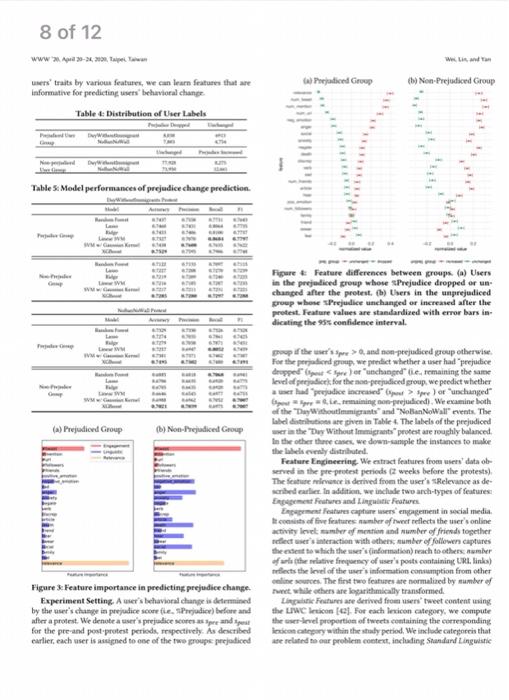

Examining Protest as An Intervention to Reduce Online Prejudice: A Case Study of Prejudice Against Immigrants

Kai Wei, University of Pittsburgh, Pittsburgh, PA, USA, kaiwe@amazon.com
Yu-Ru Lin, University of Pittsburgh, Pittsburgh, PA, USA, yurulin@pitt.edu
Muheng Yan, University of Pittsburgh, Pittsburgh, PA, USA, muheng.yan@pitt.edu

Abstract

There has been a growing concern about online users using social media to incite prejudice and hatred against other individuals or groups. While there has been research in developing automated techniques to identify online prejudice acts and hate speech, how to effectively counter online prejudice remains a societal challenge. Social protests, on the other hand, have been frequently used as an intervention for countering prejudice. However, research to date has not examined the relationship between protests and online prejudice. Using large-scale panel data collected from Twitter, we examine the changes in users' tweeting behaviors relating to prejudice against immigrants following recent protests in the US on immigration related topics.

perceptions [16,16,39] to spreading racist beliefs and to incite racial violence offline [7,15]. Expressions of such prejudice and even hatred, as well as their prevalence and influence, have become a serious societal issue. This is of particular concern for youths and young adults because they are not only active social media adopters [26] but more likely to be affected and influenced by hatred and extremist ideas propagated through the Web [16]. Recent research also found that people from minority ethnic, gender, and sexual minority groups have continued to be the primary targets for various forms of prejudice in social media [47], with immigrants being one of the groups particularly susceptible to the real, adverse effects of online prejudice [11,17].
This is the first empirical study examining the effect of protests on reducing online prejudice. Our results show that there were both negative and positive changes in the measured prejudice after a protest, suggesting protest might have a mixed effect on reducing prejudice. We further identify users who are likely to change (or resist change) after a protest. This work contributes to the understanding of online prejudice and its intervention effect. The findings of this research have implications for designing targeted intervention.

CCS Concepts
• Applied computing → Law, social and behavioral sciences; • Information systems → Web mining.

Keywords
computational social science, social movement, online hate and prejudice, civic protest, immigration

Prejudice refers to individuals' antipathy toward a person or a group. People may convey their prejudice through private or public speech. This work focuses on prejudice conveyed in the public online space. We define prejudiced speech as antipathetic (a deep-seated dislike) remarks against a person, group, or community. It should be noted that prejudiced speech and hate are related but separate concepts. Hate speech expresses hatred; prejudiced speech expresses feelings of a strong dislike, opposition, or anger, which are not necessarily hate.

While there has been growing interest in developing automated techniques to identify online prejudice acts and hate speech, how to effectively counter online prejudice remains a societal challenge. Previous research on prejudice has proposed that protest can suppress prejudice against an out-group [18]. One notable example is that the civil rights movement drastically reduced prejudice against Blacks in the US [37]. However, research to date has not specifically examined the effects of social protests on online prejudice.
ThereACM Heference Format: Soce, this roweirh takes the first strp towards understanding the - We present the first empinical study on the eflect of using protests 1 Introduction as an intervention to reduce online prejudice. We empirically 1 Introduction . Whow that these were both negative and poditive changes in the There has been a growing conern about the sugge of oaline hate incasured pecjudiec affer a poutest, suggesting peutest might have grouss and their influchce ranging from shuping socid values and a mived effect on reducing prejulice. Cirinpending ander. - We prepose a stody design that includes building a prejudice prejodice following protesti. - We further identify themes to contectulize the change of meathe identified perjudiord users chagge their prejudiced experssion followiag ptotesti. Ouz sesults have implications for desigming: "This itatement expess this person's prejudice because it ahows targeted interventions for reducing online prejudice. antipathy tewards inumigrants. However, it is not hate spech. Hate 2. Related Work speech against immiprants (e.g, "phere fucking Wllogals are not here We neview studies an online puejulice and the theoeics and prelim. jour thilnen, and you, and me 7, oe the other hasd, would expens. inary evidence for the effects of soctal protests in online prejudice. thuch mote intence and explicit feeling of dishike about imunigrants. 2.1 Online Prejudice This work takes the first step to identify online prejudice. More: stereotypes about a personal of a groap [1]. The close line of te- through aabomated technicues is an important step in regulating starch on studying online prejulice is hate specch detectian. There online space [9], solely relying on monitoeing is not sufficsent to reare three approaches to online hate specch detection 1) dictionary- dace the derelopment and inflocnoe of vuch behaviors. Yet, to date. 
based and sentence-syntax based approaches [31,34,49], 2) traditional machine learning approach [21], and 3) deep learning approach [6].

Early research has used dictionary-based and sentence-syntax based approaches. For example, [31] used racial slurs in Twitter posts to detect racist users, and found that racist tweets appeared in both political leaders' Twitter followers. While this method offers a simple way to identify hate speech, using racial slurs to determine people's prejudices against a group is unable to capture prejudiced statements that do not have racial slurs. As a result, this approach often has a low recall for retrieving prejudiced speech and can introduce racial bias in detecting hate [48]. In addition, some research also adopted a syntactic approach to detect hate speech [49]. However, this approach also suffered a low recall, as the way people express prejudice or hate is not as explicit as defined above.

Traditional machine learning techniques were also applied to hate speech detection. For example,

there have been no studies focusing on the effect of intervention strategies such as protest.

2.2 Protest as Intervention

Protest is a form of sociopolitical collective action in which members of a group act together to express objection to particular actions or situations [2]. It can take many forms, such as letter writing, public denunciations, marches, sit-ins, and boycotts directed toward prejudiced, offensive or stigmatizing practices [19]. Participants in a protest often believe that their actions can make the public more aware of certain critical issues [25], pressure the government to take actions in policy change [2], and shift the social values and norms [5]. Recent research suggests that protests could serve as critical counter-political voices [51] to resist prejudice and discrimination [53]. However, there have also been growing concerns about the effect of protest on prejudice. This section reviews the literature
on protest and prejudice in order to highlight the need to further study the effect of protest on online prejudice.

Social movement theory poses that protest can have an impact on society, leading to changes in political and cultural outcomes [24]. Previous research has linked nonviolent protest to attitude change, and found that the changes in attitudes can persist [37]. For example, protest was found to have a positive effect on reducing negative stereotypes, and the positive effect remained at one-week follow-up [11]. It was also found that after the 2006 immigration protests, foreign-born Latinos reported more positive attitudes towards immigrants and support for benign immigration policy (e.g., immediate legalization of current unauthorized immigrants) [12]. White Southerners living in counties where a sit-in occurred were observed to be more likely to support the protest, compared with those in counties with no sit-in event [3].

[13] applied a supervised machine learning approach and studied cyber hate across multiple groups (race, sex, and disability) on Twitter and defined cyberhate as the "othering" language such as using "them" to refer to an out-group. However, this work did not distinguish offensive language from hate: for example, using terms such as "jokes" and "really drunk" to mock disabled athletes seems to be offensive but not necessarily hateful. As suggested by a recent research [21], hate speech detection should consider the difference between offensive language and hate speech as hate speech is used to target a social group with an intention to exclude that group.

Recent works have applied deep learning techniques to online hate speech detection. For example, [6] focused on the detection of hate speech against immigrants and women in Spanish and English messages extracted from Twitter. This work showed that Support
Vector Machines (SVM) outperformed sophisticated systems such as Convolutional Neural Networks (CNNs) and Long Short Term Memory networks (LSTMs) in the hate speech detection task. Consistent with this finding, [21] reported that the best performing classifier for hate speech detection is SVM, with an F1 score of 0.90 for classifying offensive and non-offensive language. These works suggested that traditional machine learning techniques remain effective in related tasks.

While these previous works have extensively studied hate speech, few have focused on online prejudice. Overall, prejudiced speech has a broader scope than hate speech. While prejudice can be manifested in hate speech, not all manifestations of prejudice are hate speech. For example, someone can express prejudice against immigrants by saying "immigrants are lazy and they steal our jobs away."

WWW '20, April 20-24, 2020, Taipei, Taiwan

These previous studies highlighted the relationship between protests and broader attitudinal change, and supported the premise that protest could be used as an intervention to reduce online prejudice.

However, there has been research showing that protest has no effect and even a negative effect on prejudice. In a meta-analysis, Corrigan [18] examined publications between 1972 and 2010 that focused on the effects of anti-stigma approaches on public stigma related to mental illness. Among 72 examined studies, only one tested the effectiveness of protest, which yielded no significant findings for the effect of fact sheets from the Psychiatrists' Changing Minds campaign on reducing prejudiced attitudes against schizophrenia and alcoholism [35]. Several studies even suggested that protests that attempted to suppress prejudice can produce an unintended "rebound" in which prejudices about a group remain unchanged or actually become worse [36, 54, 55].
A recent experimental study also showed that the unintended "rebound" effect is conditioned on social norms and participants' levels of prejudice. Specifically, participants suppressed their prejudice against the homosexual group when they expected to share their responses with others. Participants with higher prejudice were more likely to exhibit prejudice rebound and rated the homosexual group more stereotypically [23].

These previous studies suggested that protest can lead to changes in attitudes. However, there have been mixed findings on the effect of protest on prejudice. Moreover, little is known about protest as an intervention strategy to reduce online prejudice.

3 Research Questions and Study Design

This section introduces study design, study context, and research questions.

3.1 Study Design

In this work, we adapt the "computational focus groups" method [32] to study users' online prejudiced speech and how immigrant protest events are related to its changes.

Compare users' behavioral outcome(s) before and after an event. In this framework, users' behavioral outcome(s) before a focal event is considered a baseline measure. The differences in the users' behavioral outcome(s) before and after the event are regarded as the changes related to the focal event. In this work, we compared users' online prejudice against immigrants before and after protest events.

3.2 Study Context

To understand the impact of protest on online prejudice, we focused on two protest events: the "Day Without Immigrants" and the "No Ban, No Wall" protests, the most recent nationwide protests that aimed to show the important contributions of immigration and to resist punitive immigration policies.

"Day Without Immigrants" protest. As a response to President Donald Trump's plans to build a border wall, strip sanctuary cities of
federal funding, and deport potentially millions of undocumented immigrants [8], this protest took place on February 16, 2017 in multiple cities across the US. It aimed to show the importance of immigrants to the US economy and in the day to day lives of American citizens. Social media and other means were used to disseminate the information about this protest [46]. On the protest day, shops and restaurants were closed in several major US cities. For example, more than 50 restaurants were closed in Washington DC [20], over 1000 businesses were closed in Dallas [45], and thousands of children did not attend school [14,46].

"No Ban, No Wall" protest. This protest took place in 2017 as a response to President Donald Trump's plan to ban citizens of certain Muslim countries from entering the US, and suspend admission of all refugees entering the country [22]. It was also planned and disseminated via social media, and simultaneously executed in multiple cities in the US, including New York, Los Angeles, and Philadelphia [4].

Computational focus groups is a framework for tracking changes in social media users' emotions, attitudes, or opinions about a group or an issue following specific events [32]. Specifically, it tracks users' behavioral outcomes by analyzing the content of social media users' posts. This framework is similar to traditional intervention studies in that it requires an intervention (a focal event) and a measurable outcome (users' behavioral outcomes). However, the major difference between these two is the methods used to obtain outcomes. This is mainly because online users express their emotions, attitudes, and opinions in the form of text, which requires text mining techniques to turn unstructured texts into numbers. Our study design includes the following steps.

Identify a focal event. A focal event is an event that has potential impact on people's behavioral outcomes. For example, previous research has used computational focus groups in studying events such as terrorist attacks and presidential debates [32, 33]. In this work,
we select two most recent immigrant protests as focal events: the "Day Without Immigrants" protest and the "No Ban, No Wall" protest. These two events are selected because they are the most recent nationwide immigrant protests in the U.S.

Construct focus groups. Focus groups, traditionally, are a form of group interview that capitalizes on communication between research participants in order to generate text data [30]. Social media users generate data by communicating their emotions, attitudes, or opinions about a group or an issue by posting short text messages. These online platforms provide a wealth of data that would otherwise require thousands of group interviews. In this work, we constructed a user panel who showed interest in discussing the topics relevant to immigrants and divided them into sub-groups based on their exposure level to protest cities.

Track users' behavioral outcomes. Users' behavioral outcomes are tracked by leveraging text mining techniques to quantify users' social media posts. In this work, we leverage text mining techniques

On the protest day, in Seattle-Tacoma Airport alone, about 3,000 protesters gathered to protest Trump's plan to ban citizens of certain Muslim countries from entering the US [43]. Thousands of protesters also gathered in major airports in cities such as Portland [t4], Los Angeles [44], and Philadelphia [28]. Compared with the tactics employed by "Day Without Immigrants", the tactics used in this protest were both more traditional and less disruptive. Differences in social media responses to these tactics may provide important implications for achieving desirable protest outcomes.

3.3 Research Questions

This work aims to examine protest as an intervention to reduce prejudice, with a focus on prejudice against immigrants. Specifically, we seek to answer the following research questions:

RQ1 To what extent does protest increase awareness of immigrants?
RQ2 To what extent does protest reduce online prejudice?
to ideatify whether a tweet is prejudiend spech againt immigrats RQ3 What kind of usen were mare likely to change for resiat to or not; and whether a tweet is about immigrants or not. Then, we change) after a relevant peotedt? aggregate all the tweets at the user level RQ4 In what way uners changed the prejudiced expression? 4 Data noem ina interest groop [40]. To identify such accounts, we ased the selected from multiple data noerces and filtered based on exdubon acoochts, a total of 102,094 users remained. criteria. For each velected wer, all available tweets during the stady period were collected from their timeline and peofile. Duta source Multiple data siares were und for welecting wers which dimplws the latest tweets frots the specified (public) Twitter Gro-based datasets were waed as initial datasets because one of all avallale twerts durine the stady peried (two werks belore, two the study aims was to examine the relationship between levels weris aftrr for each protent event). In bital, we collected a total of immigrants. Howrver, sokely relying geo-tagend datasets posed risks to sampling bias, with only about 15 of tweets have geotagped information [2v]. To mitigate the tiskx we also collevted 5 Detecting Prejudice additional data that contains proteit rvent frlated hasta pa. The following wection deseriles details about geo-hased and hashuag- Oes of the majer ithallengess for studying the impact of a protest based datacts. oen celine user's prejaline is to driclop reliably measurement for The geo-tagged datiset was peovided by a rescatch eollohogatoe. suatifyine the level of prejudice. To aldress this challenge. we deTheie pro-based users were inchudod in the initid dutase becazer. velpp nipervised leaming technigees, which includes (1) immiprantwe were interested in the role of ceorposiare in macr's oeline pret. melased tweet classification to ideatify twerts relevant to the inand udice against iemmigrants. To achicve our goal one of the critical. 
gration bopirk and (z) perjuliced tweet clawification to identify explicitly expreis prejudiced iperch againat immigrante. To this meope tweets that are potentially televant to the immieration topica (section 52h and (ia) train and evaluate the supervised learning "immigra") la total, there were 135,759 uien induded in this stady 5.1 Scoping Potentially Relevant Tweets frem the geo tagend dataiet. Admiftedly, solely relying on geo-based data introdaced sum- In oeder to cerrectly ilentify plejudice apainat immierants, we beed pling brias because not all Twitter eact choose to duclowe thrie gre- to first ibentify fwevtu that age relivant to immigrants or imnigra: graphic locations, Thus, we cellented additional users who dhewed thon topiss. To mube this step nove eflicient, we leverage leryword. plicated asers, social bots, and onganiational wiers. Since users hlea of krywond expansion [41], where a set of initial keywords wete identified fram nultiple sourve, wx firnt temioved users whe was aned to bootitrap works similar to theie werds. appeared more than once in the data. Alter nrmoning these miens. In cor work, "immigrant" of "immigrants" were ased as initial 150,702 users remained in the data. Bnewede. To copand the keywords, we first trained a Word2Vee: Social bots aze accounts controlled by softwate that antonat- model wing the Cenuim package to canatruct the semantic vecically genetates cuateats [S2] Given the intereit of this study is twes of wats. We then comprited the conine similarity between humaa users, and thus bots were excluded prier to data analysic. the woed vectoes and iteratively retrieved the wogds having the To remove the social bots from these tuers. wr used the Eotometr highest similarity with the set of relevart keywords and added AIN [52]. a system that has been shown effective in bet detection them inte the kryword set. The iserative process ended when no (with an AUC of 94 s) to detect and remove bote. 
After excluding social bots, a total of 112,142 users remained.

Organizational user accounts were also removed from this study. A user is considered to have an organizational account if the account represents an institution, corporation, agency, news media, or

words were identified at the top by manual inspection. In total, there were 839,579 potentially relevant tweets from 71,$19 Twitter users. From these potentially relevant tweets, we further classified them as tweets about immigrants, and tweets prejudiced against immigrants.

5.3 Experiment Setup

Experiments were carried out to select machine learning models for classifying tweets about immigrants, and tweets with prejudice against immigrants. Both were binary classification tasks with the objective of classifying whether a tweet belonged to one category or the other.

Preprocessing. Prior to training classification models, text preprocessing was performed on both labeled and unlabeled tweets to remove noise and prepare the text for classification. In this process, we removed stop words, URLs, and mentions (@username). The labeled tweets were split into 60% as training, 20% as test, and 20% as development, a common practice in machine learning.

Feature. The mean vectors of the Word2Vec model were used as features to train the classification models. Specifically, each tweet consists of words. After training the Word2Vec model, each word is represented as a 300-dimensional vector. The mean vectorization of the embedding model for a given tweet is defined as taking the average of all the word vectors in the tweet.

Classifier. In the experiments, we tested the following supervised machine learning models: Naive Bayes, Adaptive Boosting, Support Vector Machines, Logistic Regression, and Extreme Gradient Boosting. These models were chosen because they have been shown to

Imbalanced data and over-sampling. As shown in Table 1, there

5.2 Establishing Ground Truth

As discussed in section 2, existing online hate speech detection techniques are not suitable for detecting prejudiced speech as the latter has a broader scope. To develop suitable prejudiced speech classifiers we need to establish proper ground truth, which is constructed based on the following human coding process.

5.2.1 Human coding process

In this study, a codebook was developed for human coders to classify whether a tweet was about immigrants, and whether it expresses prejudice. The codebook included a definition of immigrants and prejudice, and related examples and rationales for coding a tweet as about immigrants or prejudice. The indicators and examples were derived from coding a random sample of tweets. Rationales were brief descriptions of reasons for coding the tweets.

Coding. Two independent coders were recruited to assist the coding process, both being native English speakers of college-level education who are active social media users and check social media posts every day. Two training sessions were conducted before coders were asked to code the tweets independently. In the first training session, we provided a brief overview of the study, discussing coding tasks and overall work flow. Following the training session, coders were asked to code a random sample of 200 immigrant-related tweets that had already been coded by the author based on the codebook; this batch was used to facilitate training. In the second training session, we discussed the coding results with the coders, and we each explained our reasons for the answer codes in the batch. Through the discussion, we found that misclassifications were due primarily to the misinterpretation of the tweets.

sweep of Muslim Americans raises surveillance fears," to be about immigrants. The tweet is about Muslim Americans, not Muslim immigrants. After the discussion, we reviewed our coding.
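
The mean vectorization described under "Feature" can be illustrated with a minimal sketch. It assumes a toy 3-dimensional embedding table in place of the 300-dimensional Word2Vec model; the function name `mean_vector`, the toy vectors, and the handling of out-of-vocabulary words (skipped, with a zero vector when nothing matches) are illustrative assumptions, not details given in the paper.

```python
from typing import Dict, List, Sequence


def mean_vector(tokens: Sequence[str],
                embeddings: Dict[str, Sequence[float]],
                dim: int) -> List[float]:
    """Average the embedding vectors of all tokens found in `embeddings`."""
    # Assumption: words missing from the embedding table are skipped.
    vectors = [embeddings[t] for t in tokens if t in embeddings]
    if not vectors:
        # Assumption: a tweet with no known words maps to the zero vector.
        return [0.0] * dim
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


# Hypothetical toy embeddings (the paper uses 300 dimensions).
toy_embeddings = {
    "immigrants": [1.0, 0.0, 2.0],
    "welcome": [3.0, 2.0, 0.0],
}
tweet_tokens = ["immigrants", "welcome", "here"]  # "here" is out of vocabulary
features = mean_vector(tweet_tokens, toy_embeddings, dim=3)
print(features)
```

Each tweet then becomes one fixed-length feature row regardless of how many words it contains, which is what lets the classifiers listed under "Classifier" consume tweets of different lengths.
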
The final codes for this batch were based on majority rule, and this batch was then used as the gold standard for future coding. After training sessions, coders proceeded to code four batches of 200 randomly sampled tweets. Each batch was coded independently by the coders. The results of these batches were used to test codebook reliability.

Reliability Evaluation. Both coders had substantial agreement in terms of Cohen's kappa coefficient (κ > 0.61) with the author on the gold standard batch before they proceeded to test codebook reliability, which consisted of four batches. Coders had substantial agreement in coding each batch, which indicates that the codebook achieved high reliability.

Following the codebook reliability testing, coders and the author coded an additional 2000 tweets. The average pairwise Cohen's kappa coefficient was 0.87 for coding tweets about immigrants, and 0.63 for coding tweets that exhibited prejudice against immigrants.

was a major issue with imbalanced data where only about 16% were labeled prejudiced against immigrants. Previous research has shown that classification of data with imbalanced class distribution can suffer significant drawbacks in model performance because most standard classifier learning algorithms assume a relatively balanced class distribution and equal misclassification costs. This would lead classifiers to be more sensitive to detecting the majority class and less sensitive to the minority class [50]. To address the issue of imbalanced tweets that contained prejudiced speech against immigrants, we used the naive random over-sampling technique to generate tweets that were labeled as prejudiced speech (the minority class). This over-sampling technique generates new samples by randomly sampling with replacement from the currently available samples. The over-sampling was only applied to the training dataset. The random over-sampling was implemented using the imbalanced-learn Python package.
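
The naive random over-sampling step described in Section 5.3 can be sketched in plain Python. This is a stand-in written for illustration, not the imbalanced-learn implementation the authors used; the function name `random_oversample`, the seed, and the toy data are assumptions.

```python
import random
from collections import Counter


def random_oversample(samples, labels, seed=0):
    """Duplicate minority-class samples (drawn with replacement)
    until every class is as frequent as the largest class."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    out_samples, out_labels = list(samples), list(labels)
    for label, count in counts.items():
        # Pool of existing samples for this class.
        pool = [s for s, l in zip(samples, labels) if l == label]
        # Draw the missing number of samples with replacement.
        extra = [rng.choice(pool) for _ in range(target - count)]
        out_samples.extend(extra)
        out_labels.extend([label] * len(extra))
    return out_samples, out_labels


# Hypothetical training split: label 1 = prejudiced (minority class).
train_x = ["t1", "t2", "t3", "t4", "t5", "t6"]
train_y = [0, 0, 0, 0, 0, 1]
balanced_x, balanced_y = random_oversample(train_x, train_y)
print(Counter(balanced_y))
```

As in the paper, a step like this would be applied to the training split only, so that duplicated tweets never leak into the development or test data.
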
The majority rule was used to decide the final code for each tweet. In total, there were 3000 labeled tweets. Table 1 shows the distribution of labeled tweets. A total of 1717 tweets (about 57%) were labeled as about immigrants. A total of 471 (about 16%) were prejudiced against immigrants. This coding process generated a random sample of 3000 labeled tweets from all relevant tweets during the study period, which was used for training and evaluating the machine classifiers.

5.4 Classification Performance

Accuracy of the models was determined based on precision, recall, F1-score, and AUC. The models that performed the best were used to classify the remaining data.

Table 2 shows the accuracy of supervised learning models for (1) immigrant-related tweet classification and (2) prejudiced tweet classification. In the first task, both AdaBoost and SVM had good precision (above 80%), recall (above 80%), and F1-score (above 80%), suggesting that the selected features in combination with the models were able to retrieve most of the tweets that were about immigrants and had few false positives. In addition, both models also reached an AUC above 0.8, showing that they were reliable prediction models for classifying whether a tweet was about immigrants. When comparing SVM with AdaBoost, the overall performance of SVM was slightly better, with a 1.6% performance gain over AdaBoost for F1-score. Therefore, the performance of SVM was the best among all evaluated models. For the second task, the overall

Wei, Lin and Yan

Last, we also saw false negative cases where the machine classified the tweet as non-prejudiced when the tweet is prejudiced. For example, "Give me your poor, your tired, your disgusting masses..." As we know, a similar phrase was written on the Statue of Liberty in the US, "Give me your tired, your poor, your huddled masses yearning to breathe free, the wretched refuse of your teeming shore. Send these, the homeless, tempest-tost to me, I lift my lamp beside the golden door!" This original poem was expressing welcome to immigrants and refugees to this country.
However, this user changed its meaning in a negative way and linked immigration to "disgusting masses". While the machine classified this tweet as about immigrants but not prejudiced against immigrants, the historical context of this expression would tell us that this user still expressed prejudice but in a more implicit way.

performance of AdaBoost and XGBoost was better than the other models. Both of these models reached good precision (above 80%), recall (above 80%), F1-score (above 80%), and AUC (above 0.8), suggesting that these models were able to reliably classify whether a tweet was prejudiced speech against immigrants. When comparing XGBoost with AdaBoost, the overall performance of XGBoost was slightly better, with a 1.5% performance gain over AdaBoost for F1-score. Therefore, XGBoost performed the best among all evaluated models.

Table 2: Model performances for detecting relevant and prejudiced tweets

5.4.1 Error analysis

While the classifiers overall achieved good accuracy, there are still cases where the machine mis-classified the tweets. For example, the machine mis-classified relevant tweets as irrelevant, "This National Guard "rounding up immigrants" story is a smear. The MSM is also smearing our military. This is disgusting." In this tweet, the user was discussing his or her opinions about immigrants, indicated by the National Guard "rounding up immigrants" story. There were also cases where the machine classified the following tweet as about immigrants, but it is about Muslims, "Jikual (3 Muslims) got fired from Capital Hill for spying & sending data to external server". In this case, the machine seems to make similar mistakes as a human would make. As discussed in Section 5.2.2, one of the common mistakes human coders made during the training was to mis-classify minority groups such as Muslims as immigrants. This error could be because when we discuss Muslim immigrants, it is often the case that we would use Muslims to refer to this group.

5.4.2 Automatically label data

To automatically label the unlabeled data, we used the trained SVM to label whether a tweet is about immigrants and the trained XGBoost to label whether a tweet is prejudiced speech against immigrants. Among 839,579 immigrant-related tweets, 460,622 tweets (about 55%) were labeled as about immigrants, and 155,014 (about 18%) were labeled as prejudiced speech against immigrants.

6 Analysis Results

We organize our analysis results to answer RQ1 and RQ2 in section 6.1, RQ3 in section 6.2, and RQ4 in section 6.3. The unit of analysis is on the user level. For each user, we measure the user's interest in the immigration-related topics as "m_relevance" - the proportion of the user's tweets that are classified as relevant tweets among all of the tweets posted by the user within the study window. This quantity captures the user's relative interest in tweeting about immigrants or immigration-related topics, which serves as an indicator for his/her being aware of the topics. We measure the level of prejudice per user as "m_prejudice" - the proportion of the user's relevant tweets that are classified as prejudiced tweets within the study window. The two quantities are measured for each user in the dataset in the pre-protest window (within 14 days prior to the protest) and in the post-protest window (within 14 days after the onset of the protest). We further group users into the prejudiced group if the user posted any prejudiced tweets during the pre-protest window, and into the non-prejudiced group if no prejudiced tweet was observed from the user.

Given our interest in protest exposure, we also identify each user's level of exposure based on their geo-locations - a user is categorized as "high exposure" if the user is located in the cities where the protests happened, and "low exposure" otherwise. Table 3 shows a summary of users broken down by the two events, across two groups (prejudiced and non-prejudiced) and by the level of exposure (high and low), respectively.
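
The user-level quantities defined in Section 6 (relevance, prejudice, the prejudiced versus non-prejudiced grouping, and the exposure level) can be sketched as follows. This is a minimal illustration under stated assumptions: the `Tweet` record, the function names, the choice to return 0.0 for a user with no tweets or no relevant tweets, and the example city set are all hypothetical and not specified in the paper.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass
class Tweet:
    relevant: bool    # classified as about immigrants
    prejudiced: bool  # classified as prejudiced against immigrants


def relevance(tweets: List[Tweet]) -> float:
    """Share of a user's tweets in the window that are relevant."""
    # Assumption: a user with no tweets in the window scores 0.0.
    return sum(t.relevant for t in tweets) / len(tweets) if tweets else 0.0


def prejudice(tweets: List[Tweet]) -> float:
    """Share of a user's relevant tweets that are prejudiced."""
    relevant = [t for t in tweets if t.relevant]
    # Assumption: a user with no relevant tweets scores 0.0.
    return sum(t.prejudiced for t in relevant) / len(relevant) if relevant else 0.0


def prejudice_group(pre_window: List[Tweet]) -> str:
    """Prejudiced group if any prejudiced tweet appears before the protest."""
    has_prejudice = any(t.relevant and t.prejudiced for t in pre_window)
    return "prejudiced" if has_prejudice else "non-prejudiced"


def exposure(user_city: str, protest_cities: Set[str]) -> str:
    """High exposure if the user is located in a protest city."""
    return "high" if user_city in protest_cities else "low"


# Hypothetical user: 14-day windows before and after a protest.
pre = [Tweet(True, True), Tweet(True, False), Tweet(False, False), Tweet(False, False)]
post = [Tweet(True, False), Tweet(False, False), Tweet(False, False), Tweet(False, False)]
change_in_prejudice = prejudice(post) - prejudice(pre)
print(relevance(pre), prejudice(pre), change_in_prejudice)
print(prejudice_group(pre), exposure("Dallas", {"Dallas", "Washington"}))
```

Comparing the pre-window and post-window values per user yields the paired observations that a before-and-after comparison within each exposure group would operate on.
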
In addition to mis-classifying relevant tweets, the machine also mis-classified prejudiced tweets. For example, the machine falsely identified a tweet that was not prejudiced as prejudiced, "Not Criminals, Not Illegals, We Are International Workers, Fuck Donald Trump and His Pinche Wall." This error might be because the classifier tends to classify tweets that contain certain hostile words, such as "Illegals" and "Criminals", as prejudiced. This issue could be addressed by adding a negation feature when classifying prejudiced tweets.

Table 3: User categorization per event

Figure 1: Change of the measured awareness on immigration issues after protests.

Figure 2: Change of measured prejudice after protests

6.1 Changes After Protests

6.1.1 Change in Awareness

Figure 1 shows changes in users' percentage of tweets that are about immigrants following the "Day Without Immigrants" protest (Figure 1 (a)) and the "No Ban, No Wall" protest (Figure 1 (b)). For the "Day Without Immigrants" protest, a Wilcoxon Signed-ranks test indicated that among high-exposure users across both high exposure (Z=56,74,p
