Answered step by step

Verified Expert Solution

Question

1 Approved Answer

As we saw in lecture, hashing is very important for managing large data systems. For example, it is used to map from data/search keys

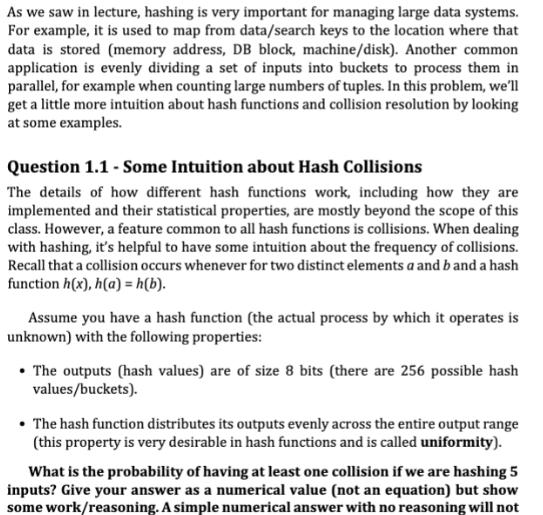

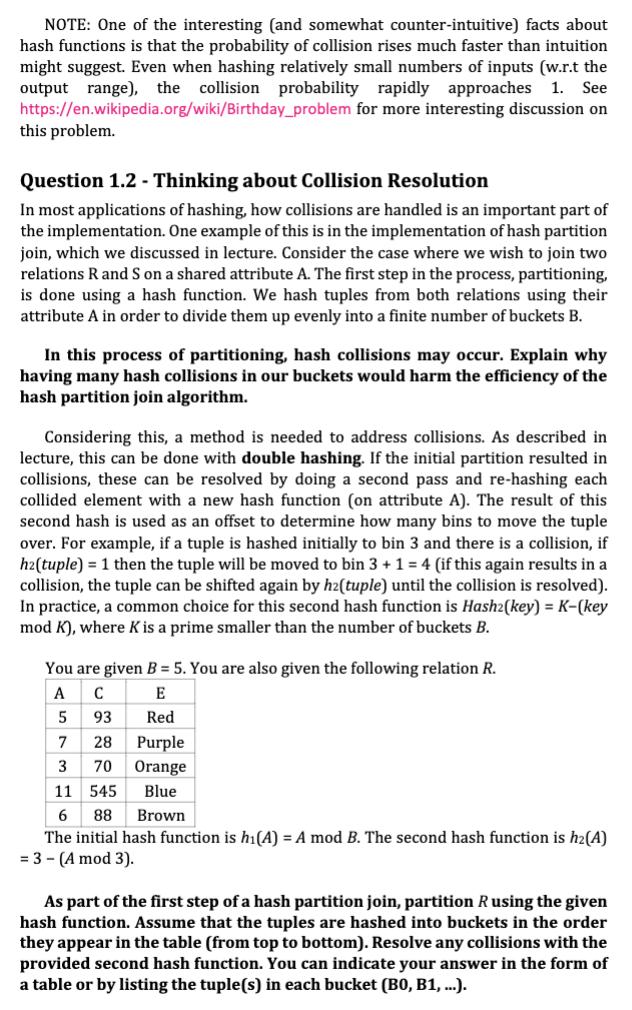

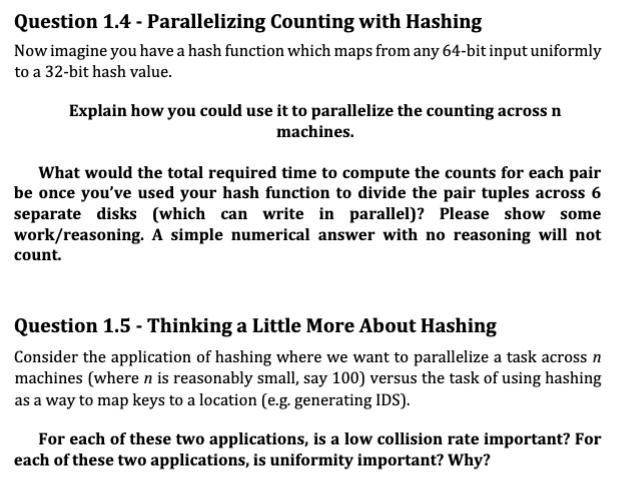

As we saw in lecture, hashing is very important for managing large data systems. For example, it is used to map from data/search keys to the location where that data is stored (memory address, DB block, machine/disk). Another common application is evenly dividing a set of inputs into buckets to process them in parallel, for example when counting large numbers of tuples. In this problem, we'll get a little more intuition about hash functions and collision resolution by looking at some examples. Question 1.1 - Some Intuition about Hash Collisions The details of how different hash functions work, including how they are implemented and their statistical properties, are mostly beyond the scope of this class. However, a feature common to all hash functions is collisions. When dealing with hashing, it's helpful to have some intuition about the frequency of collisions. Recall that a collision occurs whenever for two distinct elements a and b and a hash function h(x), h(a)=h(b). Assume you have a hash function (the actual process by which it operates is unknown) with the following properties: The outputs (hash values) are of size 8 bits (there are 256 possible hash values/buckets). The hash function distributes its outputs evenly across the entire output range (this property is very desirable in hash functions and is called uniformity). What is the probability of having at least one collision if we are hashing 5 inputs? Give your answer as a numerical value (not an equation) but show some work/reasoning. A simple numerical answer with no reasoning will not NOTE: One of the interesting (and somewhat counter-intuitive) facts about hash functions is that the probability of collision rises much faster than intuition might suggest. Even when hashing relatively small numbers of inputs (w.r.t the output range), the collision probability rapidly approaches 1. See https://en.wikipedia.org/wiki/Birthday_problem for more interesting discussion on this problem. Question 1.2 -Thinking about Collision Resolution In most applications of hashing, how collisions are handled is an important part of the implementation. One example of this is in the implementation of hash partition join, which we discussed in lecture. Consider the case where we wish to join two relations R and S on a shared attribute A. The first step in the process, partitioning, is done using a hash function. We hash tuples from both relations using their attribute A in order to divide them up evenly into a finite number of buckets B. In this process of partitioning, hash collisions may occur. Explain why having many hash collisions in our buckets would harm the efficiency of the hash partition join algorithm. Considering this, a method is needed to address collisions. As described in lecture, this can be done with double hashing. If the initial partition resulted in collisions, these can be resolved by doing a second pass and re-hashing each collided element with a new hash function (on attribute A). The result of this second hash is used as an offset to determine how many bins to move the tuple over. For example, if a tuple is hashed initially to bin 3 and there is a collision, if h2(tuple) = 1 then the tuple will be moved to bin 3 + 1 = 4 (if this again results in a collision, the tuple can be shifted again by h2(tuple) until the collision is resolved). In practice, a common choice for this second hash function is Hash2(key) = K-(key mod K), where K is a prime smaller than the number of buckets B. You are given B = 5. You are also given the following relation R. A C E 93 Red 28 Purple 70 Orange 11 545 Blue 6 88 Brown The initial hash function is h(A) = A mod B. The second hash function is h(A) = 3 - (A mod 3). 5 7 3 As part of the first step of a hash partition join, partition R using the given hash function. Assume that the tuples are hashed into buckets in the order they appear in the table (from top to bottom). Resolve any collisions with the provided second hash function. You can indicate your answer in the form of a table or by listing the tuple(s) in each bucket (B0, B1, ...). Bonus Note: There are many other collision resolution strategies beyond those mentioned here, each with different advantages and disadvantages which can be analyzed probabilistically. Question 1.3 - Computing Counts of Pairs As seen above, hash functions are useful whenever we need to (relatively) evenly distribute data. Another such application is dividing across multiple machines to process in parallel. Imagine you have created a music app. Users of your app can login, start a session, and then play songs. The database which forms the backend to your app contains a table with tuples (user_id, session id, song id) which represent every time a user has played a song. You wish to see which pairs of songs are most frequently listened to together in the same session. You are given the following information: There are 10 million users There are 1 thousand songs There are 4 billion sessions The user IDs are 3 bytes The song IDs are 2 bytes The session IDs are 4 bytes The avg. number of unique songs played per session is 5 Assume all your data is stored on hard disks and use the values for disk seek/scan times from the top of the assignment. Assume that data is stored sequentially on disk. Calculate the total time required to compute the counts for each distinct pair. Do not count pairs with the same song (i.e. Song A - Song A) and assume that when counting we do not count different orderings of the same songs as distinct pairs (i.e. if Song A and Song B are played in the same session, we only count the pair Song A - Song B and do not count the pair Song B - Song A). Assume that it takes 1 hr. to sort all pairs using the external merge sort algorithm. Please show some work/reasoning. A simple numerical answer with no reasoning will not count for full points. Question 1.4 - Parallelizing Counting with Hashing Now imagine you have a hash function which maps from any 64-bit input uniformly to a 32-bit hash value. Explain how you could use it to parallelize the counting across n machines. What would the total required time to compute the counts for each pair be once you've used your hash function to divide the pair tuples across 6 separate disks (which can write in parallel)? Please show some work/reasoning. A simple numerical answer with no reasoning will not count. Question 1.5 - Thinking a Little More About Hashing Consider the application of hashing where we want to parallelize a task across n machines (where n is reasonably small, say 100) versus the task of using hashing as a way to map keys to a location (e.g. generating IDS). For each of these two applications, is a low collision rate important? For each of these two applications, is uniformity important? Why?

Step by Step Solution

There are 3 Steps involved in it

Step: 1

To address your questions well need to tackle them one by one so lets start with the first question ...

Get Instant Access to Expert-Tailored Solutions

See step-by-step solutions with expert insights and AI powered tools for academic success

Step: 2

Step: 3

Ace Your Homework with AI

Get the answers you need in no time with our AI-driven, step-by-step assistance

Get Started