Question

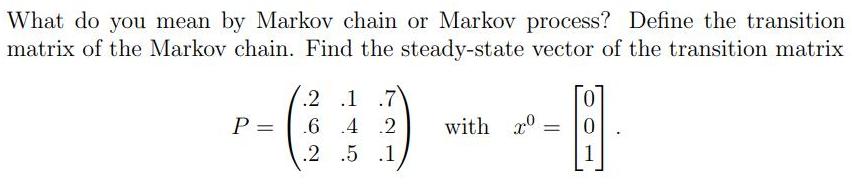

What do you mean by a Markov chain or Markov process? Define the transition matrix of a Markov chain. Find the steady-state vector of the transition matrix

$$P = \begin{bmatrix} .2 & .1 & .7 \\ .6 & .4 & .2 \\ .2 & .5 & .1 \end{bmatrix}$$

with $x =$
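One way to check a hand computation of the steady-state vector is sketched below. It assumes the matrix rows read (.2, .1, .7), (.6, .4, .2), (.2, .5, .1); the leading entry of the third row is garbled in the question and is taken as .2 because that makes every column sum to 1. Under that reading the matrix is column-stochastic, so the steady-state vector $q$ satisfies $Pq = q$ with entries summing to 1. The function name `steady_state` is a hypothetical helper, not something from the question.

```python
from fractions import Fraction

# Transition matrix as read from the question (assumption: column-stochastic,
# i.e. each column sums to 1, so the steady state q satisfies P q = q).
P = [
    [Fraction(2, 10), Fraction(1, 10), Fraction(7, 10)],
    [Fraction(6, 10), Fraction(4, 10), Fraction(2, 10)],
    [Fraction(2, 10), Fraction(5, 10), Fraction(1, 10)],
]


def steady_state(P):
    """Solve (P - I) q = 0 with sum(q) = 1 by exact Gauss-Jordan elimination."""
    n = len(P)
    # Augmented rows of (P - I | 0); the last row is replaced by the
    # normalization constraint q_1 + ... + q_n = 1.
    A = [[P[i][j] - (1 if i == j else 0) for j in range(n)] + [Fraction(0)]
         for i in range(n)]
    A[n - 1] = [Fraction(1)] * n + [Fraction(1)]
    for col in range(n):
        # Find a row with a nonzero pivot and swap it into position.
        pivot_row = next(r for r in range(col, n) if A[r][col] != 0)
        A[col], A[pivot_row] = A[pivot_row], A[col]
        # Scale the pivot row so the pivot equals 1.
        pivot = A[col][col]
        A[col] = [v / pivot for v in A[col]]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col and A[r][col] != 0:
                factor = A[r][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[i][n] for i in range(n)]


q = steady_state(P)
print(q)  # [Fraction(11, 36), Fraction(29, 72), Fraction(7, 24)]
```

Exact fractions are used instead of floating point so the result can be compared directly with a hand derivation: $q = (11/36,\ 29/72,\ 7/24) \approx (0.306,\ 0.403,\ 0.292)$.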


