Can anyone help with the solution?

Can anyone help with the solution?

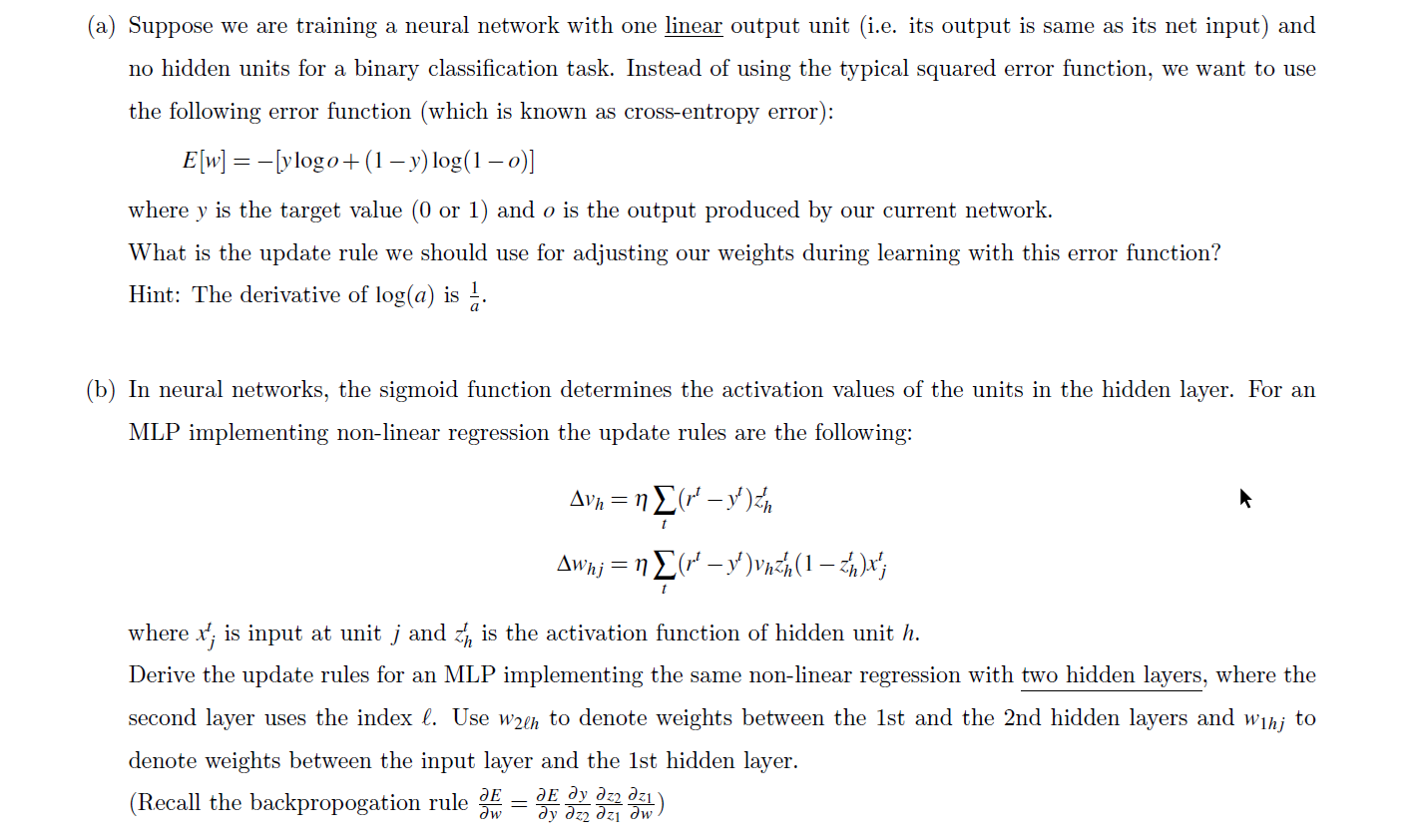

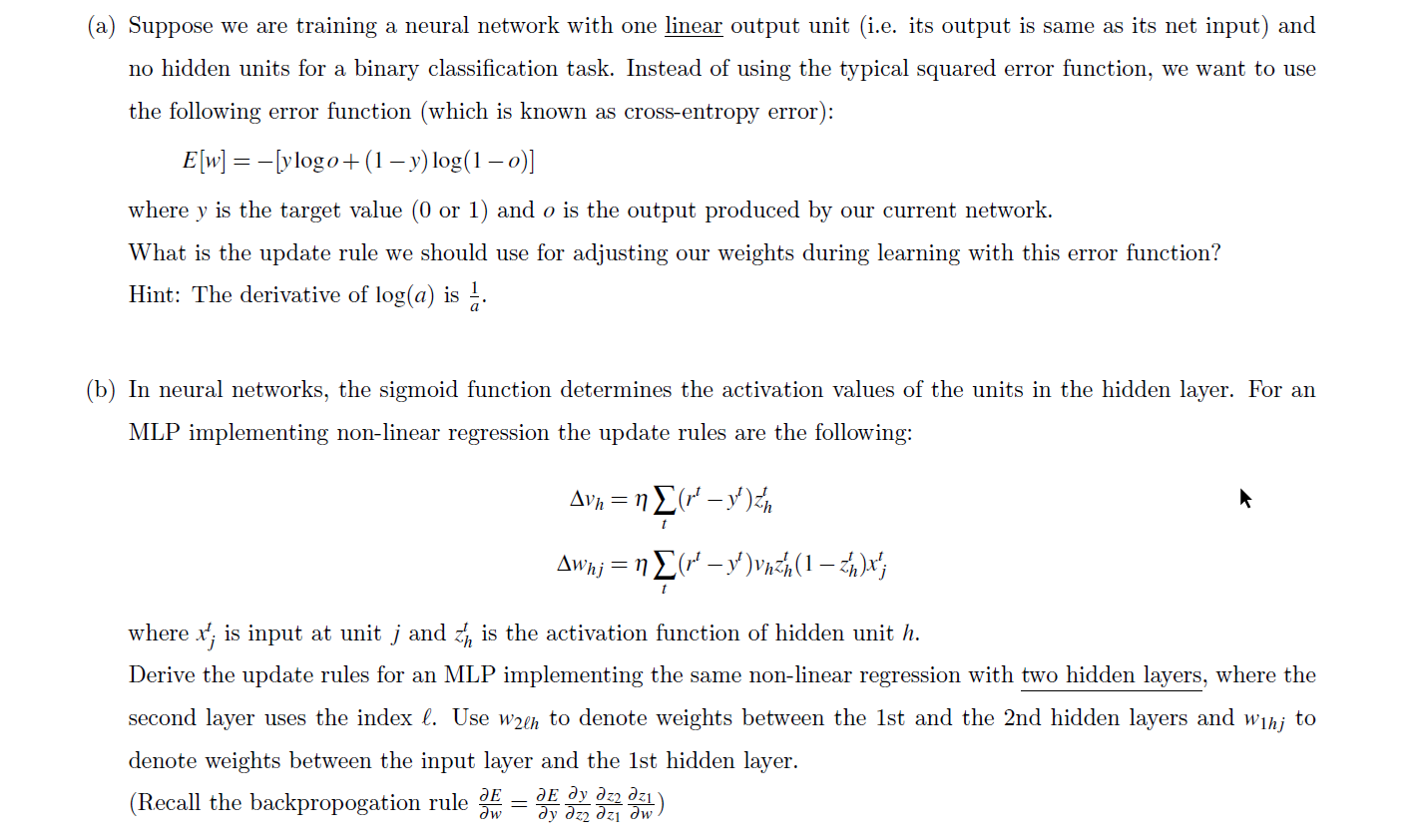

(a) Suppose we are training a neural network with one linear output unit (i.e. its output is same as its net input) and no hidden units for a binary classification task. Instead of using the typical squared error function, we want to use the following error function (which is known as cross-entropy error): E[w] = -[ylogo+(1 y) log(1 - 0)] where y is the target value (0 or 1) and o is the output produced by our current network. What is the update rule we should use for adjusting our weights during learning with this error function? Hint: The derivative of log(a) is a (b) In neural networks, the sigmoid function determines the activation values of the units in the hidden layer. For an MLP implementing non-linear regression the update rules are the following: Avn=nE(37 y)zh Awn;= n (M' y) vnzh(1 zh)x"; where x'; is input at unit j and Z, is the activation function of hidden unit h. Derive the update rules for an MLP implementing the same non-linear regression with two hidden layers, where the second layer uses the index l. Use waen to denote weights between the 1st and the 2nd hidden layers and winto denote weights between the input layer and the 1st hidden layer. (Recall the backpropogation rule E E z2 z1 z2 zi w. w (a) Suppose we are training a neural network with one linear output unit (i.e. its output is same as its net input) and no hidden units for a binary classification task. Instead of using the typical squared error function, we want to use the following error function (which is known as cross-entropy error): E[w] = -[ylogo+(1 y) log(1 - 0)] where y is the target value (0 or 1) and o is the output produced by our current network. What is the update rule we should use for adjusting our weights during learning with this error function? Hint: The derivative of log(a) is a (b) In neural networks, the sigmoid function determines the activation values of the units in the hidden layer. For an MLP implementing non-linear regression the update rules are the following: Avn=nE(37 y)zh Awn;= n (M' y) vnzh(1 zh)x"; where x'; is input at unit j and Z, is the activation function of hidden unit h. Derive the update rules for an MLP implementing the same non-linear regression with two hidden layers, where the second layer uses the index l. Use waen to denote weights between the 1st and the 2nd hidden layers and winto denote weights between the input layer and the 1st hidden layer. (Recall the backpropogation rule E E z2 z1 z2 zi w. w

Can anyone help with the solution?

Can anyone help with the solution?