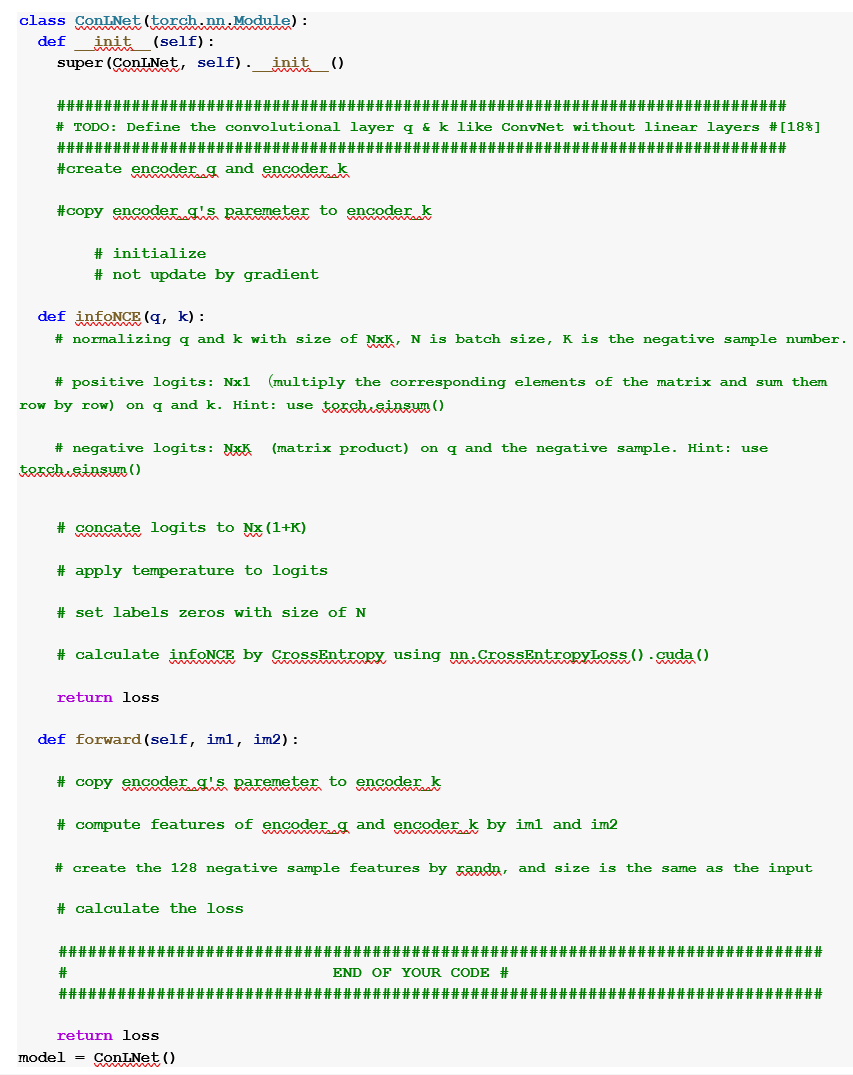

Question:

    class ConLNet(torch.nn.Module):
        def __init__(self):
            super(ConLNet, self).__init__()
            ######################################################################
            # TODO: Define the convolutional layers q & k like ConvNet,
            # without linear layers
            # [188]
            ######################################################################
            # create encoder q and encoder k
            # copy encoder q's parameters to encoder k  # initialize
            # not updated by gradient

        def infoNCE(q, k):
            # normalize q and k with size NxK; N is the batch size, K is the
            # number of negative samples
            # positive logits: Nx1 (multiply the corresponding elements of the
            # matrices and sum them row by row) on q and k. Hint: use torch.einsum()
            # negative logits: NxK (matrix product) on q and the negative samples.
            # Hint: use torch.einsum()
            # concatenate logits to Nx(1+K)
            # apply temperature to logits
            # set labels to zeros with size N
            # calculate infoNCE by cross entropy using nn.CrossEntropyLoss().cuda()
            return loss

        def forward(self, im1, im2):
            # copy encoder q's parameters to encoder k
            # compute features of encoder q and encoder k from im1 and im2
            # create the 128 negative sample features by randn, with the same
            # size as the input
            # calculate the loss
            ######################################################################
            #                          END OF YOUR CODE
            ######################################################################
            return loss

    model = ConLNet()
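One way the TODO comments above could be filled in is sketched below. It is not a reference solution. The `ConvNet` that the question refers to is not shown, so `make_encoder`, its layer sizes, the 3-channel input, and the `temperature=0.07` default are all hypothetical placeholders. The sketch also assumes that the "128 negative sample features" are drawn with `torch.randn` at the same feature width as `q`, passes them to the loss as an extra argument, and drops the `.cuda()` call so that it runs on a CPU.

```python
import copy

import torch
import torch.nn as nn
import torch.nn.functional as F


def info_nce(q, k, negatives, temperature=0.07):
    """InfoNCE loss for q, k of shape (N, C) and negatives of shape (K, C)."""
    q = F.normalize(q, dim=1)
    k = F.normalize(k, dim=1)
    negatives = F.normalize(negatives, dim=1)
    # Positive logits, shape (N, 1): row-wise dot product of q and k.
    l_pos = torch.einsum("nc,nc->n", q, k).unsqueeze(1)
    # Negative logits, shape (N, K): every q against every negative sample.
    l_neg = torch.einsum("nc,kc->nk", q, negatives)
    # Concatenate to (N, 1 + K) and apply the temperature.
    logits = torch.cat([l_pos, l_neg], dim=1) / temperature
    # The positive sits in column 0, so every target label is 0.
    labels = torch.zeros(logits.shape[0], dtype=torch.long, device=logits.device)
    return nn.CrossEntropyLoss()(logits, labels)


def make_encoder(in_channels=3, feat_dim=32):
    # Hypothetical stand-in for "ConvNet without linear layers".
    return nn.Sequential(
        nn.Conv2d(in_channels, 16, kernel_size=3, padding=1),
        nn.ReLU(),
        nn.MaxPool2d(2),
        nn.Conv2d(16, feat_dim, kernel_size=3, padding=1),
        nn.ReLU(),
        nn.AdaptiveAvgPool2d(1),
        nn.Flatten(),  # (N, feat_dim, 1, 1) -> (N, feat_dim)
    )


class ConLNet(nn.Module):
    def __init__(self, num_negatives=128):
        super().__init__()
        self.num_negatives = num_negatives
        self.encoder_q = make_encoder()
        # Encoder k starts as an exact copy of encoder q.
        self.encoder_k = copy.deepcopy(self.encoder_q)
        for p in self.encoder_k.parameters():
            p.requires_grad = False  # not updated by gradient

    def forward(self, im1, im2):
        # Copy encoder q's current parameters into encoder k.
        self.encoder_k.load_state_dict(self.encoder_q.state_dict())
        q = self.encoder_q(im1)
        with torch.no_grad():
            k = self.encoder_k(im2)
        # Assumption: random negatives with the same feature width as q.
        negatives = torch.randn(self.num_negatives, q.shape[1], device=q.device)
        return info_nce(q, k, negatives)
```

Calling `model = ConLNet()` and then `loss = model(im1, im2)` on two batches of augmented views returns a scalar loss. Calling `loss.backward()` then produces gradients for `encoder_q` only, since `encoder_k` is frozen and refreshed by copying on each forward pass.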

Step by Step Solution


It appears that you have shared an image containing Python code that is part of an implementation of a neural network module, specifically a ConvNet. The class ConvNet is a subclass of torch.nn.Module whi...
