Question

Complete the simulator and debug it

Create a cache class that can be configured for different sizes (up to at least 256 KiB), associativities (direct mapped to 8-way set associative), and block sizes (from one byte to at least 64 bytes). For set-associative caches, the replacement policy should be LRU.
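To make the configuration parameters concrete, here is a minimal sketch of how a byte address could be split into tag, set index, and block offset for any such configuration. The names `AddressParts`, `isPowerOfTwo`, and `splitAddress` are hypothetical and not part of the assignment; the sketch assumes the size, associativity, and block size are all powers of two.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper type for this sketch; not part of the required interface.
struct AddressParts {
    std::uint64_t tag;     // identifies which block currently occupies a way
    std::uint64_t set;     // selects the set the block maps to
    std::uint64_t offset;  // byte position inside the block
};

// Returns true if x is a nonzero power of two.
bool isPowerOfTwo(std::uint64_t x) { return x != 0 && (x & (x - 1)) == 0; }

// Splits a byte address for a cache of `size` bytes with `ways` blocks per set
// and `blockSize`-byte blocks. ASSUMPTION: all three are powers of two.
AddressParts splitAddress(std::uint64_t address, std::uint64_t size,
                          std::uint64_t ways, std::uint64_t blockSize) {
    assert(isPowerOfTwo(size) && isPowerOfTwo(ways) && isPowerOfTwo(blockSize));
    std::uint64_t numSets = size / (ways * blockSize);
    std::uint64_t blockNumber = address / blockSize;
    return AddressParts{blockNumber / numSets, blockNumber % numSets,
                        address % blockSize};
}
```

A direct-mapped cache is the `ways == 1` case, and a block size of one byte leaves the offset at zero for every address.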

The only member function that is required for the cache is Cache.access(). This function should take one parameter (a byte address) and should simulate cache behavior as if the address were accessed. This includes updating cache state and returning TRUE if the access hit the cache, or FALSE if it did not. When the cache object is first created, all blocks in the cache should be marked as invalid.

Test the cache object using the provided trace files. After the entire trace file is run through the cache, the hit rates should match those provided. If your outputs do not match, spend more time debugging before proceeding. You will need a working simulator before proceeding.
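A trace-driven check could be sketched as follows. The trace layout is an assumption (one hexadecimal byte address per line, with or without a `0x` prefix), since the format of the provided trace files is not shown here. `hitRate` and `ParityStubCache` are hypothetical names; the stub only exists so the sketch compiles on its own and should be replaced by the real cache object.

```cpp
#include <cassert>
#include <istream>
#include <sstream>
#include <string>

// Stand-in for the real cache so this sketch is self-contained:
// it reports a hit for every even address.
struct ParityStubCache {
    bool access(unsigned long address) { return address % 2 == 0; }
};

// Feeds every address in `trace` to `cache` and returns hits / accesses.
// ASSUMPTION: one hexadecimal address per line; blank lines are skipped.
// CacheT can be any type that provides `bool access(unsigned long)`.
template <class CacheT>
double hitRate(CacheT &cache, std::istream &trace) {
    unsigned long hits = 0;
    unsigned long accesses = 0;
    std::string line;
    while (std::getline(trace, line)) {
        if (line.empty()) continue;
        unsigned long address = std::stoul(line, nullptr, 16);
        ++accesses;
        if (cache.access(address)) ++hits;
    }
    assert(hits <= accesses);
    return accesses == 0 ? 0.0 : static_cast<double>(hits) / accesses;
}
```

Passing a `std::ifstream` opened on a trace file works the same way as the string stream used for testing, because both derive from `std::istream`.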

Steps III and IV - Gathering Data and Analysis

You will be instrumenting the Sieve of Eratosthenes algorithm by creating a cache object and "accessing it" using pointers to the actual data objects in the algorithm.

NOTE: Configure the cache for a total of 256 KiB, 8-way set associativity, and a 64-byte block size.
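For reference, this configuration works out to the following geometry, assuming byte addressing and power-of-two parameters. The remaining upper address bits form the tag.

```latex
\begin{align*}
\text{number of sets} &= \frac{256 \times 1024}{8 \times 64} = \frac{262144}{512} = 512 \\
\text{offset bits} &= \log_2 64 = 6 \\
\text{index bits} &= \log_2 512 = 9
\end{align*}
```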

Your code should look something like this (all other variables we assume the compiler can assign to registers):

    /* This code is based on the Sieve of Eratosthenes code at algolist.net:
       http://www.algolist.net/Algorithms/Number_theoretic/Sieve_of_Eratosthenes */
    unsigned long hits = 0;
    unsigned long accesses = 0;
    for (long m = 2; m

Here is the code:

    #include <vector>

    using namespace std;

    struct CacheBlock {
        bool valid = false;          // every block starts out invalid
        bool dirty = false;
        unsigned long tag = 0;
        unsigned long timestamp = 0; // tick of the most recent access, used for LRU
        vector<unsigned char> data;  // block contents; element type assumed, unused by the hit/miss simulation
    };

    class Cache {
    public:
        Cache(int size, int associativity, int block_size);
        bool access(unsigned long address);

    private:
        int size;
        int associativity;
        int block_size;
        int num_sets;
        unsigned long tick = 0;      // incremented on every access
        vector<vector<CacheBlock> > cache;
        void evictLRU(int set);
        int findLRU(int set);
    };

    Cache::Cache(int size, int associativity, int block_size) {
        this->size = size;
        this->associativity = associativity;
        this->block_size = block_size;

        // size, associativity, and block_size are assumed to be powers of two
        num_sets = size / (associativity * block_size);
        cache = vector<vector<CacheBlock> >(num_sets, vector<CacheBlock>(associativity));
    }

    bool Cache::access(unsigned long address) {
        int offset_bits = __builtin_ctz(block_size);
        int set_bits = __builtin_ctz(num_sets);

        unsigned long tag = address >> (set_bits + offset_bits);
        int set = (address >> offset_bits) & (num_sets - 1);
        ++tick;

        for (int i = 0; i < associativity; i++) {
            CacheBlock &block = cache[set][i];

            if (block.valid && block.tag == tag) {
                // Hit: mark the block as most recently used
                block.timestamp = tick;
                return true;
            }
        }

        // Miss: fill an invalid block if one exists, otherwise replace the LRU block
        int lru_index = findLRU(set);
        CacheBlock &lru_block = cache[set][lru_index];

        if (lru_block.valid && lru_block.dirty) {
            // Write back to memory
            // ...
        }

        // Read from memory
        // ...

        lru_block.valid = true;
        lru_block.dirty = false;
        lru_block.tag = tag;
        lru_block.timestamp = tick;

        return false;
    }

    void Cache::evictLRU(int set) {
        int lru_index = findLRU(set);
        CacheBlock &lru_block = cache[set][lru_index];

        if (lru_block.valid && lru_block.dirty) {
            // Write back to memory
            // ...
        }

        lru_block.valid = false;
        lru_block.dirty = false;
    }

    int Cache::findLRU(int set) {
        int lru_index = 0;
        unsigned long lru_timestamp = cache[set][0].timestamp;

        for (int i = 0; i < associativity; i++) {
            if (!cache[set][i].valid) {
                return i;  // an invalid block is always the preferred victim
            }
            if (cache[set][i].timestamp < lru_timestamp) {
                lru_index = i;
                lru_timestamp = cache[set][i].timestamp;
            }
        }

        return lru_index;
    }
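With a working cache, the instrumentation described in Steps III and IV could look roughly like the sketch below. It is not the assignment's own loop, which is truncated above. It assumes one byte per sieve flag and a single pass that both tests and marks entries. `SieveStats`, `instrumentedSieve`, and `AlwaysHitCache` are hypothetical names; the always-hit stub only keeps the sketch self-contained and makes the access count easy to check.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical result type for this sketch.
struct SieveStats {
    unsigned long hits = 0;
    unsigned long accesses = 0;
    long primes = 0;
};

// Stand-in cache that always hits; replace with the real Cache object.
struct AlwaysHitCache {
    bool access(unsigned long) { return true; }
};

// Runs the sieve up to upperBound (inclusive) and sends the address of every
// flag that is read or written through cache.access().
// CacheT can be any type that provides `bool access(unsigned long)`.
template <class CacheT>
SieveStats instrumentedSieve(long upperBound, CacheT &cache) {
    assert(upperBound >= 2);
    SieveStats stats;
    // ASSUMPTION: one byte per flag; the original data type is not shown.
    std::vector<unsigned char> isComposite(upperBound + 1, 0);

    auto touch = [&](long i) {
        std::uintptr_t raw = reinterpret_cast<std::uintptr_t>(&isComposite[i]);
        ++stats.accesses;
        if (cache.access(static_cast<unsigned long>(raw))) ++stats.hits;
    };

    for (long m = 2; m <= upperBound; ++m) {
        touch(m);  // read isComposite[m]
        if (isComposite[m]) continue;
        ++stats.primes;
        if (m > upperBound / m) continue;  // m * m is out of range; nothing to mark
        for (long k = m * m; k <= upperBound; k += m) {
            touch(k);  // write isComposite[k]
            isComposite[k] = 1;
        }
    }
    return stats;
}
```

Calling `instrumentedSieve(n, cache)` with a cache configured as in the NOTE gives the hit and access counts for a search range of `n`; the miss rate is `1 - hits / accesses`.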

Please help with the data gathering and analysis requested below.

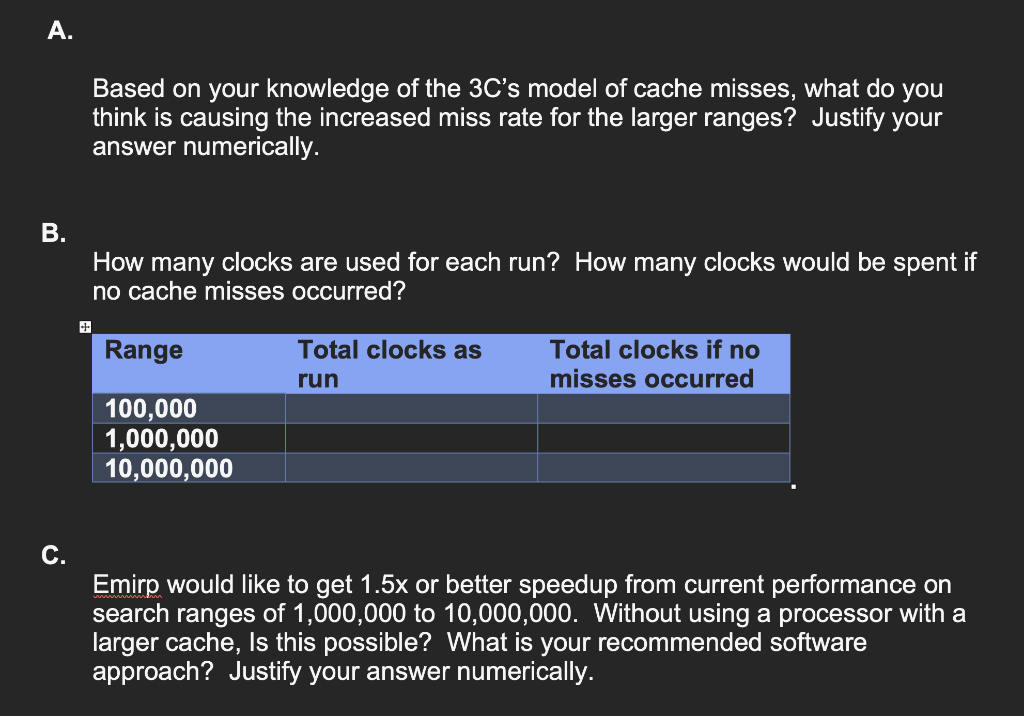

Based on your knowledge of the 3C's model of cache misses, what do you think is causing the increased miss rate for the larger ranges? Justify your answer numerically.

How many clocks are used for each run? How many clocks would be spent if no cache misses occurred?

Emirp would like to get 1.5x or better speedup from current performance on search ranges of 1,000,000 to 10,000,000. Without using a processor with a larger cache, is this possible? What is your recommended software approach? Justify your answer numerically.
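One way to frame the numerical justification is the simple cycle model below. The symbols are assumptions introduced for illustration: $A$ is the number of accesses and $M$ the number of misses reported by the simulator, $t_{\text{hit}}$ is the hit time in clocks, and $t_{\text{penalty}}$ is the extra clocks per miss. The actual timing values have to come from the assignment data.

```latex
\begin{align*}
T_{\text{current}} &= A \, t_{\text{hit}} + M \, t_{\text{penalty}} \\
T_{\text{no miss}} &= A \, t_{\text{hit}} \\
\text{speedup} &= \frac{T_{\text{current}}}{T_{\text{new}}} \ge 1.5
\end{align*}
```

Under this model, $T_{\text{current}} / T_{\text{no miss}}$ is an upper bound on the speedup that removing cache misses alone can deliver.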
