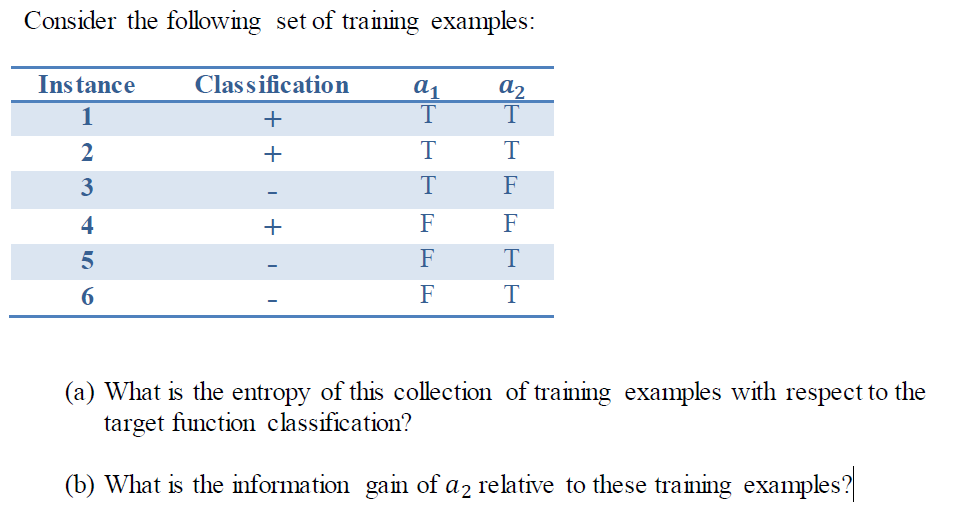

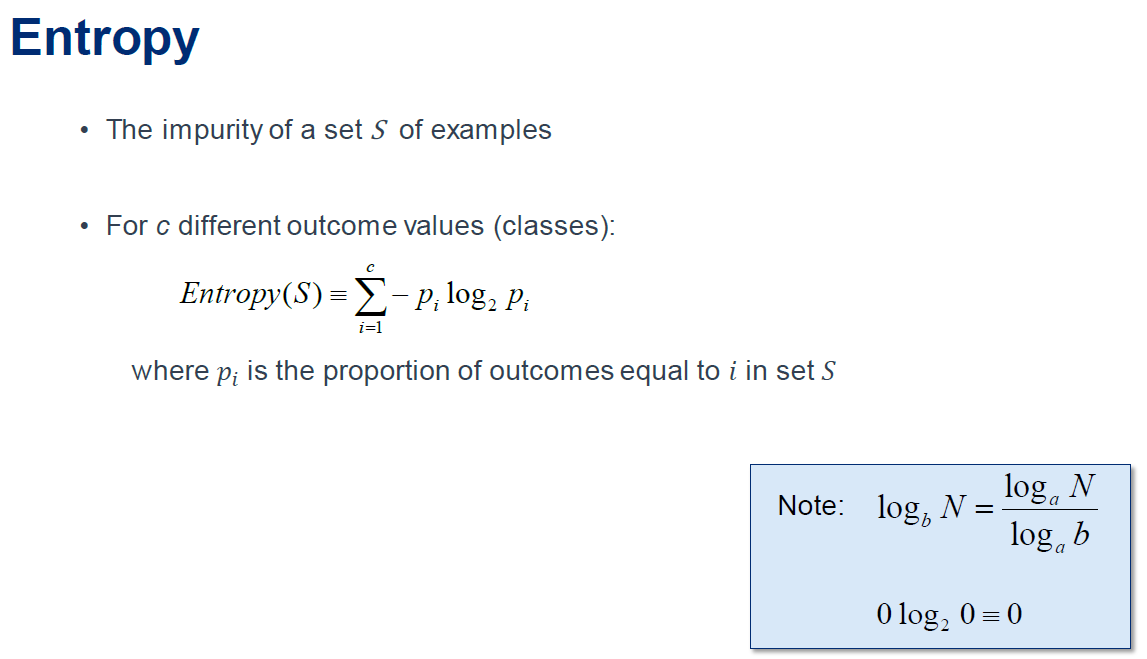

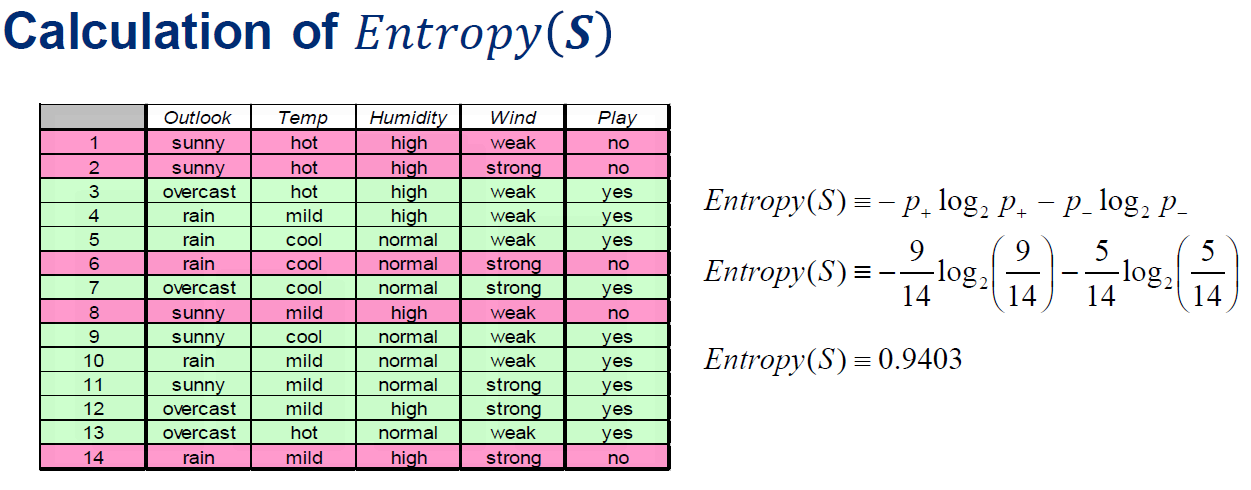

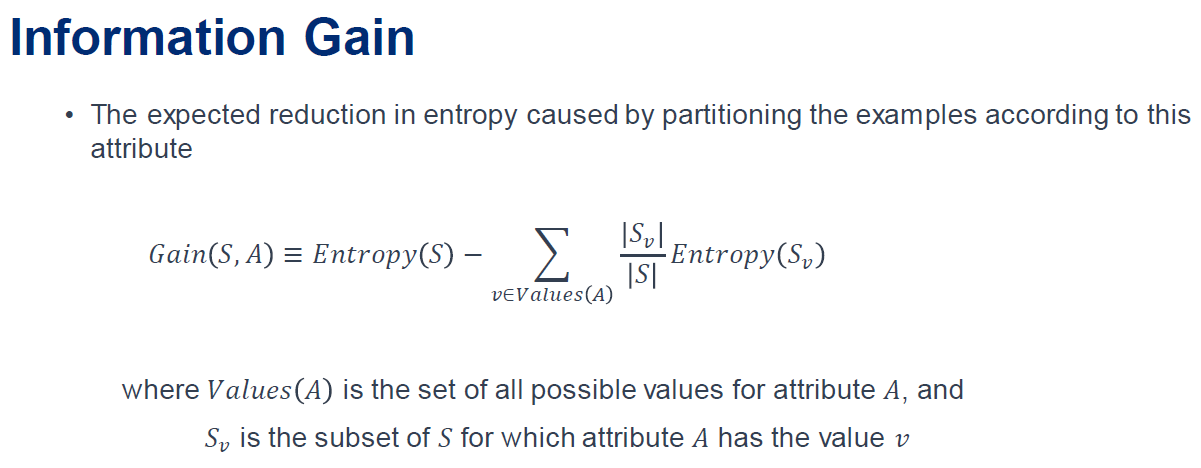

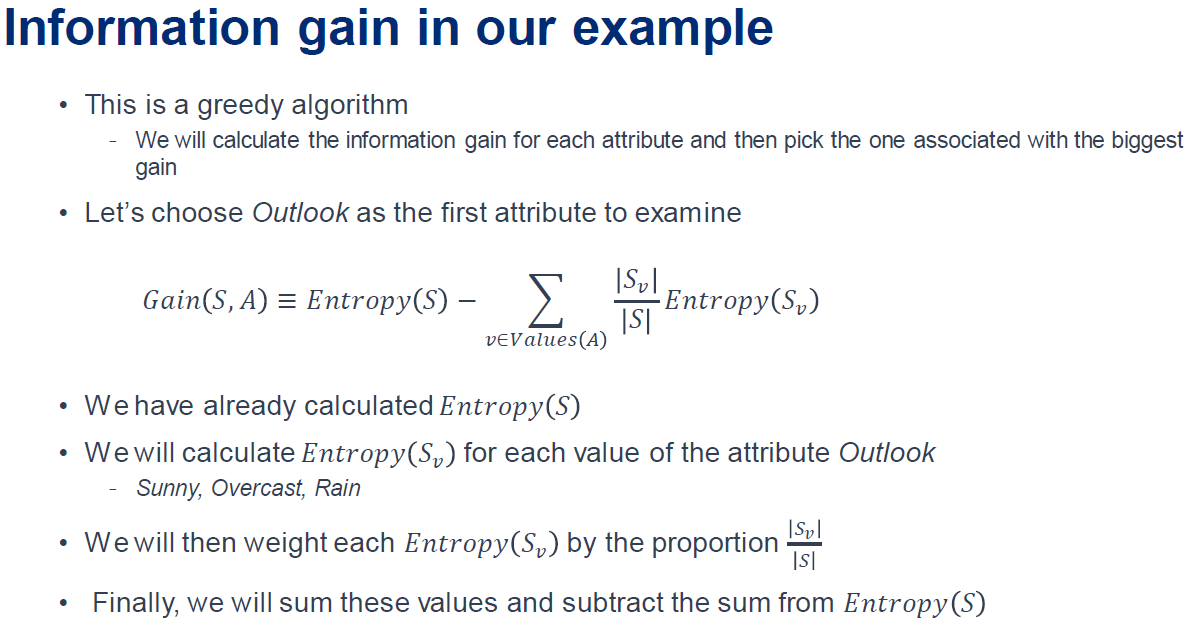

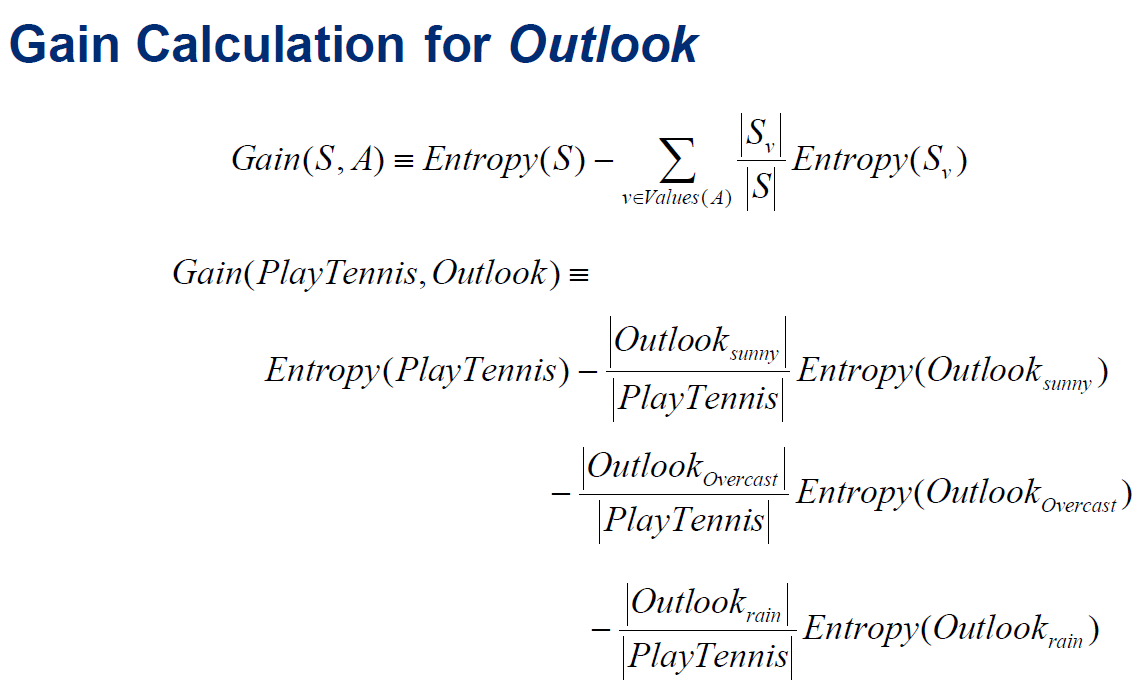

Consider the following set of training examples (columns: Instance, Classification, a1, a2). The rows survive only in part: `+ T T`, `+ T T`, `T F`, `+ F`, `- T T`.

(a) What is the entropy of this collection of training examples with respect to the target function classification?

(b) What is the information gain of a2 relative to these training examples?

**Entropy**

- The impurity of a set S of examples.
- For c different outcome values (classes):

$$\mathrm{Entropy}(S) = \sum_{i=1}^{c} -p_i \log_2 p_i$$

  where $p_i$ is the proportion of outcomes equal to $i$ in set S.
- Note: $\log_a N = \dfrac{\log_b N}{\log_b a}$ and $0 \log_2 0 = 0$.

**Calculation of Entropy(S)**

| # | Outlook | Temp | Humidity | Wind | Play |
|---|----------|------|----------|--------|------|
| 1 | sunny | hot | high | weak | no |
| 2 | sunny | hot | high | strong | no |
| 3 | overcast | hot | high | weak | yes |
| 4 | rain | mild | high | weak | yes |
| 5 | rain | cool | normal | weak | yes |
| 6 | rain | cool | normal | strong | no |
| 7 | overcast | cool | normal | strong | yes |
| 8 | sunny | mild | high | weak | no |
| 9 | sunny | cool | normal | weak | yes |
| 10 | rain | mild | normal | weak | yes |
| 11 | sunny | mild | normal | strong | yes |
| 12 | overcast | mild | high | strong | yes |
| 13 | overcast | hot | normal | weak | yes |
| 14 | rain | mild | high | strong | no |

$$\mathrm{Entropy}(S) = -p_{+} \log_2 p_{+} - p_{-} \log_2 p_{-}$$

$$\mathrm{Entropy}(S) = -\frac{9}{14} \log_2 \frac{9}{14} - \frac{5}{14} \log_2 \frac{5}{14}$$

$$\mathrm{Entropy}(S) = 0.9403$$

**Information Gain**

- The expected reduction in entropy caused by partitioning the examples according to this attribute:

$$\mathrm{Gain}(S, A) = \mathrm{Entropy}(S) - \sum_{v \in \mathrm{Values}(A)} \frac{|S_v|}{|S|}\, \mathrm{Entropy}(S_v)$$

  where Values(A) is the set of all possible values for attribute A, and $S_v$ is the subset of S for which attribute A has the value $v$.

**Information gain in our example**

- This is a greedy algorithm: we will calculate the information gain for each attribute and then pick the one associated with the biggest gain.
- Let's choose Outlook as the first attribute to examine.
- We have already calculated Entropy(S).
- We will calculate Entropy($S_v$) for each value of the attribute Outlook: Sunny, Overcast, Rain.
- We will then weight each Entropy($S_v$) by the proportion $|S_v| / |S|$.
- Finally, we will sum these values and subtract the sum from Entropy(S).

**Gain Calculation for Outlook**

$$\mathrm{Gain}(S, A) = \mathrm{Entropy}(S) - \sum_{v \in \mathrm{Values}(A)} \frac{|S_v|}{|S|}\, \mathrm{Entropy}(S_v)$$

$$
\begin{aligned}
\mathrm{Gain}(\mathit{PlayTennis}, \mathit{Outlook}) ={}& \mathrm{Entropy}(\mathit{PlayTennis}) \\
&- \frac{|\mathit{Outlook}_{\mathrm{sunny}}|}{|\mathit{PlayTennis}|}\, \mathrm{Entropy}(\mathit{Outlook}_{\mathrm{sunny}}) \\
&- \frac{|\mathit{Outlook}_{\mathrm{overcast}}|}{|\mathit{PlayTennis}|}\, \mathrm{Entropy}(\mathit{Outlook}_{\mathrm{overcast}}) \\
&- \frac{|\mathit{Outlook}_{\mathrm{rain}}|}{|\mathit{PlayTennis}|}\, \mathrm{Entropy}(\mathit{Outlook}_{\mathrm{rain}})
\end{aligned}
$$
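The entropy and gain calculations for the PlayTennis data can be checked with a short Python sketch. It is only an illustration: the names `DATA`, `ATTRIBUTES`, `entropy`, and `information_gain` are chosen here and do not come from the slides, and the rows are transcribed from the 14-example table.

```python
from collections import Counter
from math import log2

# PlayTennis rows as (Outlook, Temp, Humidity, Wind, Play),
# transcribed from the 14-example table in the slides.
DATA = [
    ("sunny", "hot", "high", "weak", "no"),
    ("sunny", "hot", "high", "strong", "no"),
    ("overcast", "hot", "high", "weak", "yes"),
    ("rain", "mild", "high", "weak", "yes"),
    ("rain", "cool", "normal", "weak", "yes"),
    ("rain", "cool", "normal", "strong", "no"),
    ("overcast", "cool", "normal", "strong", "yes"),
    ("sunny", "mild", "high", "weak", "no"),
    ("sunny", "cool", "normal", "weak", "yes"),
    ("rain", "mild", "normal", "weak", "yes"),
    ("sunny", "mild", "normal", "strong", "yes"),
    ("overcast", "mild", "high", "strong", "yes"),
    ("overcast", "hot", "normal", "weak", "yes"),
    ("rain", "mild", "high", "strong", "no"),
]

# Column names for the four attributes (illustrative naming).
ATTRIBUTES = ["Outlook", "Temp", "Humidity", "Wind"]


def entropy(labels):
    """Entropy(S) = sum over classes of -p_i * log2(p_i).

    Only classes that actually occur are counted, which matches
    the convention 0 * log2(0) = 0.
    """
    total = len(labels)
    return -sum((n / total) * log2(n / total) for n in Counter(labels).values())


def information_gain(rows, attr_index):
    """Gain(S, A) = Entropy(S) - sum_v |S_v|/|S| * Entropy(S_v)."""
    labels = [row[-1] for row in rows]
    # Group the class labels by the value of the chosen attribute.
    subsets = {}
    for row in rows:
        subsets.setdefault(row[attr_index], []).append(row[-1])
    weighted = sum(len(s) / len(rows) * entropy(s) for s in subsets.values())
    return entropy(labels) - weighted


if __name__ == "__main__":
    print(f"Entropy(S) = {entropy([row[-1] for row in DATA]):.4f}")
    for i, name in enumerate(ATTRIBUTES):
        print(f"Gain(S, {name}) = {information_gain(DATA, i):.4f}")
```

Running the script prints Entropy(S) and the gain for each of the four attributes, so the greedy choice of the first attribute can be read off by comparing the printed gains.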