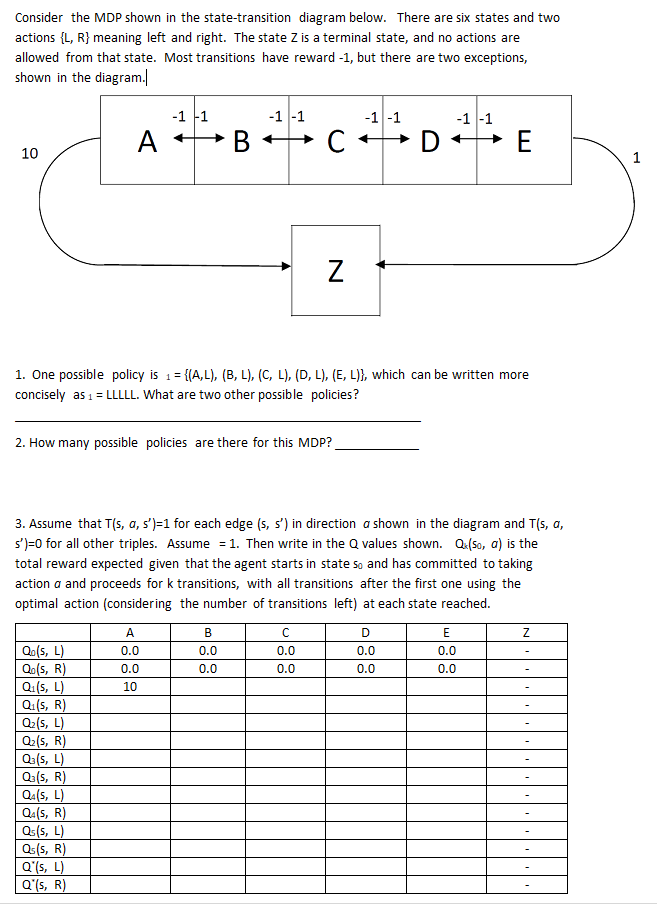

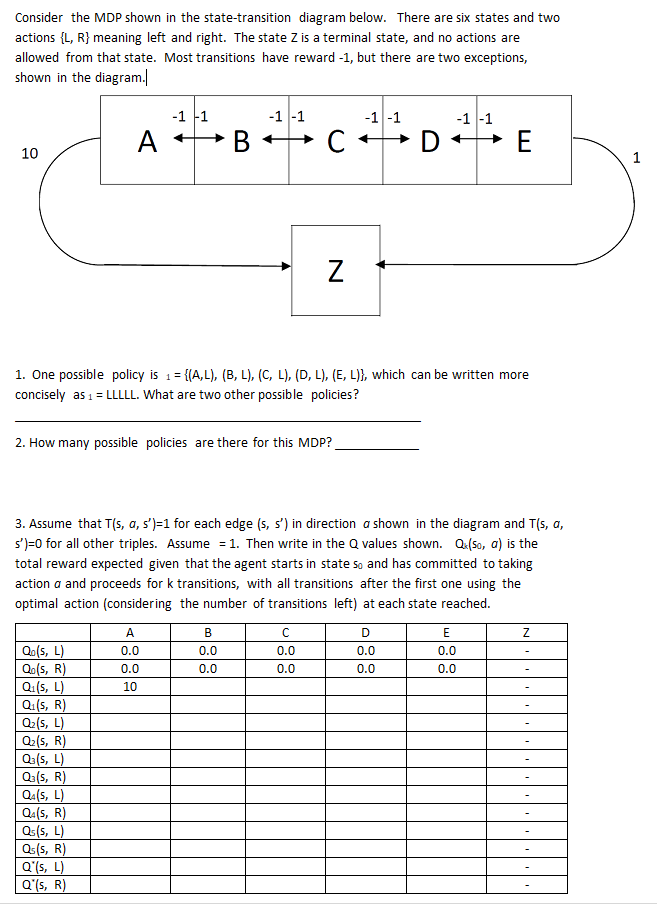

Consider the MDP shown in the state-transition diagram. There are six states and two actions, {L, R}, meaning left and right. The state Z is a terminal state, and no actions are allowed from that state. Most transitions have reward -1, but there are two exceptions, shown in the diagram.

*[Diagram: states A, B, C, D, E, and the terminal state Z; most edges carry reward -1, and the two exceptional edges are labeled 10 and 1.]*

1. One possible policy is $\pi_1 = \{(A, L), (B, L), (C, L), (D, L), (E, L)\}$, which can be written more concisely as $\pi_1 = LLLLL$. What are two other possible policies?
2. How many possible policies are there for this MDP?
3. Assume that $T(s, a, s') = 1$ for each edge $(s, s')$ in direction $a$ shown in the diagram, and $T(s, a, s') = 0$ for all other triples. Assume $\gamma = 1$. Then fill in the Q values in the table below. $Q_k(s_0, a)$ is the total expected reward given that the agent starts in state $s_0$, commits to taking action $a$, and proceeds for $k$ transitions, with every transition after the first using the optimal action (given the number of transitions left) at each state reached.

| State | $Q_0(s, L)$ | $Q_0(s, R)$ | $Q_1(s, L)$ | $Q_1(s, R)$ | $Q_2(s, L)$ | $Q_2(s, R)$ | $Q_3(s, L)$ | $Q_3(s, R)$ | $Q_4(s, L)$ | $Q_4(s, R)$ | $Q_5(s, L)$ | $Q_5(s, R)$ | $Q^*(s, L)$ | $Q^*(s, R)$ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| A | 0.0 | 0.0 |  |  |  |  |  |  |  |  |  |  |  |  |
| B | 0.0 | 0.0 |  |  |  |  |  |  |  |  |  |  |  |  |
| C | 0.0 | 0.0 |  |  |  |  |  |  |  |  |  |  |  |  |
| D | 0.0 | 0.0 |  |  |  |  |  |  |  |  |  |  |  |  |
| E | 0.0 | 0.0 |  |  |  |  |  |  |  |  |  |  |  |  |
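Questions 1 and 2 can be checked by enumeration. The minimal sketch below assumes that both actions are available in each of the five non-terminal states A through E, and that a policy is deterministic, assigning exactly one action to each state (the form used for $\pi_1$). Under those assumptions a policy is just a five-letter string over {L, R}, and `itertools.product` generates all of them. The names `STATES`, `ACTIONS`, and `all_policies` are illustrative, not part of the exercise.

```python
from itertools import product

# Non-terminal states; Z is terminal, so a policy assigns it no action.
STATES = ["A", "B", "C", "D", "E"]
ACTIONS = ["L", "R"]


def all_policies(states=STATES, actions=ACTIONS):
    """Return every deterministic policy as a string such as "LLLLL".

    Character i of each string is the action taken in states[i].
    Assumes every action is allowed in every non-terminal state.
    """
    return ["".join(choice) for choice in product(actions, repeat=len(states))]


policies = all_policies()
print(len(policies))  # 32 = 2 ** 5
print(policies[:3])   # ['LLLLL', 'LLLLR', 'LLLRL']
```

Any string in the output other than LLLLL, for example RRRRR or LRLRL, is another valid policy under these assumptions.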
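The $Q_k$ values in question 3 follow the finite-horizon recurrence $Q_0(s, a) = 0$ and $Q_k(s, a) = \sum_{s'} T(s, a, s')\,[R(s, a, s') + \gamma \max_{a'} Q_{k-1}(s', a')]$, where the max term is taken as 0 when $s'$ is the terminal state Z (no actions are allowed there). With a deterministic $T$ the sum has a single term. The Python sketch below implements this recurrence. The edge table `EDGES` is a hypothetical placeholder, not read from the diagram: it assumes A through E sit in a row, with L moving toward A and R toward E, and puts rewards of 10 and 1 on two edges into Z purely for illustration. Replace it with the diagram's actual edges and rewards before using the output to fill in the table.

```python
# HYPOTHETICAL edge table: (state, action) -> (next_state, reward).
# NOT read from the diagram; replace with the diagram's edges and rewards.
EDGES = {
    ("A", "L"): ("Z", 10), ("A", "R"): ("B", -1),
    ("B", "L"): ("A", -1), ("B", "R"): ("C", -1),
    ("C", "L"): ("B", -1), ("C", "R"): ("D", -1),
    ("D", "L"): ("C", -1), ("D", "R"): ("E", -1),
    ("E", "L"): ("D", -1), ("E", "R"): ("Z", 1),
}
TERMINAL = "Z"
STATES = ["A", "B", "C", "D", "E"]
ACTIONS = ["L", "R"]
GAMMA = 1.0


def q_tables(max_k, edges=EDGES, gamma=GAMMA):
    """Return [Q_0, Q_1, ..., Q_max_k], each a dict {(s, a): value}.

    Transitions are deterministic (T(s, a, s') = 1 for the listed edge,
    0 otherwise), so the sum over s' collapses to the single successor.
    """
    q = [{(s, a): 0.0 for s in STATES for a in ACTIONS}]  # Q_0 = 0 everywhere
    for _ in range(max_k):
        prev = q[-1]
        nxt = {}
        for (s, a), (s2, r) in edges.items():
            # Future value is 0 at the terminal state; otherwise it is the
            # best Q_{k-1} value available at the successor state.
            future = 0.0 if s2 == TERMINAL else max(prev[(s2, b)] for b in ACTIONS)
            nxt[(s, a)] = r + gamma * future
        q.append(nxt)
    return q


tables = q_tables(6)
for k, table in enumerate(tables):
    row = "  ".join(f"{s}:{table[(s, 'L')]:5.1f}/{table[(s, 'R')]:5.1f}" for s in STATES)
    print(f"Q_{k}  {row}")
```

If the table stops changing from one $k$ to the next, that fixed point is $Q^*$; in this hypothetical MDP, $Q_6$ and $Q_7$ already coincide.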