Question: Consider the symmetric simple random walk at two timepoints: n and n + m. Find $\rho(S_n, S_{n+m})$. What happens as $m \to \infty$ for fixed n and as $n \to \infty$ for fixed m? Explain intuitively.
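A candidate answer can be sanity-checked by brute force for small n and m. The sketch below assumes that the lost symbol denotes the correlation coefficient and that the walk starts at $S_0 = 0$; the function name `walk_correlation` is illustrative and not from the source. Because the increments are independent, $\mathrm{Cov}(S_n, S_{n+m}) = \mathrm{Var}(S_n) = n$, so the enumeration should agree with $\sqrt{n/(n+m)}$.

```python
from itertools import product
from math import sqrt, isclose

def walk_correlation(n, m):
    """Exact correlation of S_n and S_{n+m} for the symmetric simple random
    walk started at 0, by enumerating all 2**(n+m) equally likely paths."""
    count = 0
    sum_a = sum_b = sum_aa = sum_bb = sum_ab = 0
    for steps in product((-1, 1), repeat=n + m):
        a = sum(steps[:n])   # position at time n
        b = sum(steps)       # position at time n + m
        count += 1
        sum_a += a
        sum_b += b
        sum_aa += a * a
        sum_bb += b * b
        sum_ab += a * b
    mean_a = sum_a / count
    mean_b = sum_b / count
    cov = sum_ab / count - mean_a * mean_b
    var_a = sum_aa / count - mean_a ** 2
    var_b = sum_bb / count - mean_b ** 2
    return cov / sqrt(var_a * var_b)

# Compare the enumeration with sqrt(n / (n + m)) for a few small cases.
for n, m in [(1, 1), (2, 6), (6, 2)]:
    print(n, m, walk_correlation(n, m), sqrt(n / (n + m)))
```

Enumeration is only feasible for small n + m, but it is enough to see the two limiting behaviours the question asks about: holding n fixed and increasing m pushes the value down, while holding m fixed and increasing n pushes it up.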

23 Consider the simple random walk with $p \neq \frac{1}{2}$. Use the law of large numbers to argue that $S_n$ goes to $-\infty$ if $p < \frac{1}{2}$ and to $\infty$ if $p > \frac{1}{2}$.

24 Consider a symmetric simple random walk with reflecting barriers 0 and a, in the sense that $p_{0,0} = p_{0,1} = \frac{1}{2}$ and $p_{a,a-1} = p_{a,a} = \frac{1}{2}$. (a) Describe this as a Markov chain and find its stationary distribution. Is it the limit distribution? (b) If the walk starts in 0, what is the expected number of steps until it is back? (c) Suppose instead that reflection is immediate, so that $p_{0,1} = 1$ and $p_{a,a-1} = 1$, everything else being the same. Describe the Markov chain, find its stationary distribution $\pi$, and compare with (a). Explain the difference. Is $\pi$ the limit distribution?
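A candidate stationary distribution for exercise 24 can be checked mechanically against $\pi P = \pi$. The sketch below is one way to do that with exact rational arithmetic; the helper names `transition_matrix` and `is_stationary`, the choice a = 4, and the two candidate vectors are illustrative assumptions, not taken from the source.

```python
from fractions import Fraction

def transition_matrix(a, immediate):
    """Transition matrix on states 0..a for the symmetric walk with barriers.
    immediate=False: p00 = p01 = 1/2 and p_{a,a-1} = p_{a,a} = 1/2 (part (a)).
    immediate=True:  p01 = 1 and p_{a,a-1} = 1 (part (c))."""
    half = Fraction(1, 2)
    P = [[Fraction(0)] * (a + 1) for _ in range(a + 1)]
    for i in range(1, a):            # interior states step up or down
        P[i][i - 1] = half
        P[i][i + 1] = half
    if immediate:
        P[0][1] = Fraction(1)
        P[a][a - 1] = Fraction(1)
    else:
        P[0][0] = P[0][1] = half
        P[a][a - 1] = P[a][a] = half
    return P

def is_stationary(pi, P):
    """True if pi is a probability vector satisfying pi P = pi exactly."""
    size = len(P)
    return sum(pi) == 1 and all(
        sum(pi[i] * P[i][j] for i in range(size)) == pi[j] for j in range(size)
    )

a = 4
uniform = [Fraction(1, a + 1)] * (a + 1)
halved_ends = [Fraction(1, 2 * a)] + [Fraction(1, a)] * (a - 1) + [Fraction(1, 2 * a)]

print(is_stationary(uniform, transition_matrix(a, immediate=False)))
print(is_stationary(halved_ends, transition_matrix(a, immediate=True)))
print(is_stationary(uniform, transition_matrix(a, immediate=True)))
```

For part (b), recall that in an irreducible chain the mean return time to a state is the reciprocal of its stationary probability, so a verified stationary vector gives the expected return time to 0 directly. Whether the stationary distribution is also the limit distribution depends on periodicity, which this check does not address.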

25 Consider a variant of the simple random walk where the walk takes a step up with probability p, down with probability q, or stays where it is with probability r, where p + q + r = 1. Let the walk start in 0, and let $\tau_1$ be the time of the first visit to 1. Find $P_0(\tau_1 < \infty)$.

26 Consider the simple random walk starting in 0 and let $\tau_r$ be the time of the first visit to state r, where $r \geq 1$. Find the expected value of $\tau_r$ if $p > \frac{1}{2}$.

27 Consider the simple random walk with $p \neq \frac{1}{2}$, starting in 0, and let

$$\tau_0 = \min\{n \geq 1 : S_n = 0\}$$

be the time of the first return to 0. Use Corollary 8.3.2 to show that $P_0(\tau_0 < \infty) = 2 \min(p, 1 - p)$.
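The claimed return probability $2\min(p, 1 - p)$ can be checked numerically by a route different from Corollary 8.3.2. The sketch below sums the first-return probabilities $f_{2n} = \binom{2n}{n} \frac{(pq)^n}{2n - 1}$ with $q = 1 - p$; this formula is a standard result and is an assumption here, since it does not appear in the source, and the function name `return_probability` is illustrative.

```python
def return_probability(p, terms=2000):
    """Partial sum f_2 + f_4 + ... + f_{2*terms} of first-return probabilities
    for the simple random walk with up-probability p, where
    f_{2n} = C(2n, n) / (2n - 1) * (p * q)**n and q = 1 - p."""
    x = p * (1 - p)
    term = 2 * x                                # f_2: up-down or down-up
    total = 0.0
    for n in range(1, terms + 1):
        total += term
        term *= 2 * (2 * n - 1) * x / (n + 1)   # ratio f_{2n+2} / f_{2n}
    return total

# Compare the partial sum with 2 * min(p, 1 - p) for a few values of p.
for p in (0.2, 0.7, 0.9):
    print(p, return_probability(p), 2 * min(p, 1 - p))
```

The terms are updated through their ratio rather than through explicit binomial coefficients, which avoids very large intermediate integers. For p away from 1/2 the series converges geometrically, so the default number of terms is far more than needed.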

28 Consider the simple random walk with $p > \frac{1}{2}$ started in state 1. By Corollary 8.3.2 "reversed," the probability that the walk ever visits 0 is $(1 - p)/p$. Now let the initial state $S_0$ be random, chosen according to a distribution on {0, 1, ...} that has pgf G. (a) Show that the probability that 0 is ever visited (which could occur in step 0 if $S_0 = 0$) is $G((1 - p)/p)$. (b) Now instead consider the probability that 0 is ever visited at step 1 or later. Show that this equals $G((1 - p)/p) - 2p + 1$. (c) Let $p = \frac{2}{3}$ and $S_0 \sim \mathrm{Poi}(1)$. Compute the probabilities in (a) and (b) and also compare with the corresponding probability if $S_0 \equiv 1$.

29 Consider a three-dimensional random walk $S_n$ where in each step, one of the six neighbors along the axes is chosen with probability $\frac{1}{6}$ each. Let the walk start in the origin and show that

$$P(S_{2n} = (0, 0, 0)) = \left(\frac{1}{6}\right)^{2n} \sum_{i+j+k=n} \frac{(2n)!}{(i!\,j!\,k!)^2}$$

and use Stirling's formula to conclude that the walk is transient.
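Before attempting the proof in exercise 29, the stated formula can be compared with a direct path count for very small n. The sketch below is a check under that reading of the formula, not a derivation; the function names and the `STEPS` list are illustrative and not from the source.

```python
from fractions import Fraction
from itertools import product
from math import factorial

def return_prob_formula(n):
    """P(S_2n = origin) from the stated sum over i + j + k = n."""
    total = Fraction(0)
    for i in range(n + 1):
        for j in range(n + 1 - i):
            k = n - i - j
            denom = (factorial(i) * factorial(j) * factorial(k)) ** 2
            total += Fraction(factorial(2 * n), denom)
    return total / 6 ** (2 * n)

# The six unit steps along the coordinate axes.
STEPS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]

def return_prob_bruteforce(n):
    """Same probability by enumerating all 6**(2n) paths (tiny n only)."""
    hits = 0
    for path in product(STEPS, repeat=2 * n):
        if all(sum(step[axis] for step in path) == 0 for axis in range(3)):
            hits += 1
    return Fraction(hits, 6 ** (2 * n))

for n in (1, 2):
    print(n, return_prob_formula(n), return_prob_bruteforce(n))
```

The indices i, j, k count the steps taken in the positive direction along each axis, each of which must be matched by an equal number of negative steps for the walk to return. The brute-force count grows as $6^{2n}$, so it is only practical for n = 1 or 2.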

30 Consider a branching process with mean number of offspring $\mu$, letting $Y_n$ be the total number of individuals up to and including the nth generation and letting Y be the total number of individuals ever born. (a) For what values of $\mu$ is Y finite? (b) Express $Y_n$

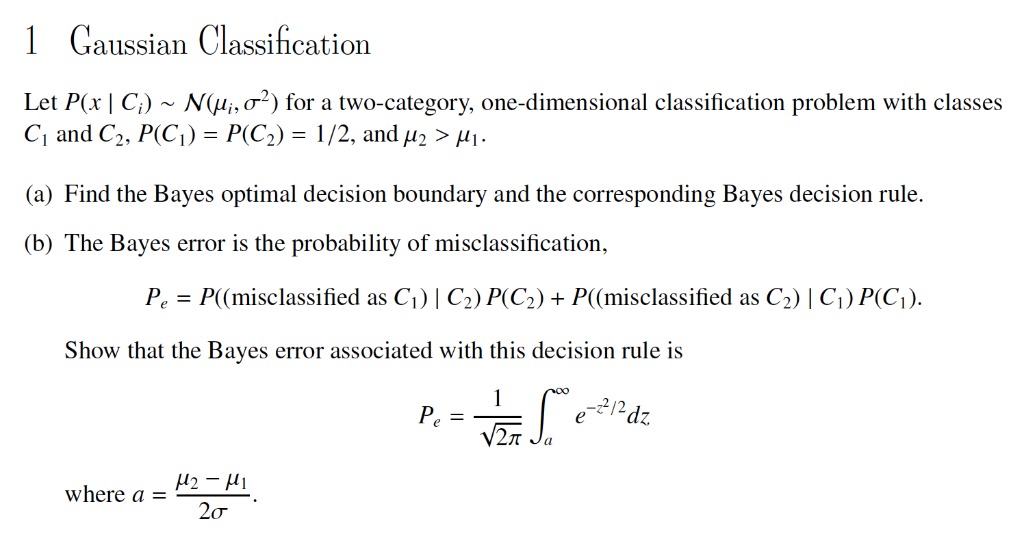

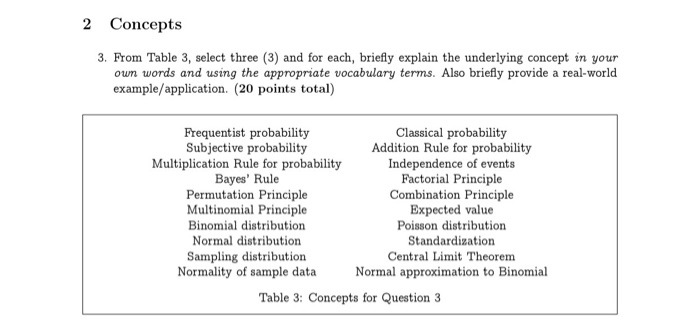

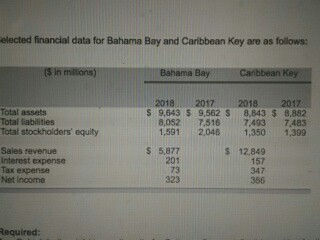

1 Gaussian Classification. Let $P(x \mid C_i) \sim N(\mu_i, \sigma^2)$ for a two-category, one-dimensional classification problem with classes $C_1$ and $C_2$, $P(C_1) = P(C_2) = 1/2$, and $\mu_2 > \mu_1$. (a) Find the Bayes optimal decision boundary and the corresponding Bayes decision rule. (b) The Bayes error is the probability of misclassification,

$$P_e = P(\text{misclassified as } C_1 \mid C_2)\,P(C_2) + P(\text{misclassified as } C_2 \mid C_1)\,P(C_1).$$

Show that the Bayes error associated with this decision rule is

$$P_e = \frac{1}{\sqrt{2\pi}} \int_a^{\infty} e^{-z^2/2}\,dz, \quad \text{where } a = \frac{\mu_2 - \mu_1}{2\sigma}.$$

Concepts 3. From Table 3, select three (3) and for each, briefly explain the underlying concept in your own words and using the appropriate vocabulary terms. Also briefly provide a real-world example/application. (20 points total)

Table 3: Concepts for Question 3. Frequentist probability; Classical probability; Subjective probability; Addition Rule for probability; Multiplication Rule for probability; Independence of events; Bayes' Rule; Factorial Principle; Permutation Principle; Combination Principle; Multinomial Principle; Expected value; Binomial distribution; Poisson distribution; Normal distribution; Standardization; Sampling distribution; Central Limit Theorem; Normality of sample data; Normal approximation to Binomial.

Selected financial data for Bahama Bay and Caribbean Key are as follows ($ in millions). Column headings: Bahama Bay 2018, 2017; Caribbean Key 2018, 2017. Figures are given as they appear in the source.

Total assets: $9,643; $0,562; $8,643; $8.082
Total liabilities: 8.052; 7.516; 7.493; 7:483
Total stockholders' equity: 1.591; 2016; 1.350; 1.390
Sales revenue: $5877; 12.849
Interest expense: 201; 157
Tax expense: 73; 347
Net income: 323; 356

Required:

Problem 1: (9 points) Bayes Classifiers and Naive Bayes. In this problem you will use Bayes Rule: $p(y \mid x) = p(x \mid y)\,p(y)/p(x)$ to perform classification. Suppose we observe some training data with two binary features $x_1$, $x_2$ and a binary class y. After learning the model, you are also given some validation data.

Table 1: Training Data T1 y 1 1 0 0 0

Table 2: Validation Data 0 y 1 0 0 1 0 0 1 0 1 0 1 1 0 0 0 0 0

In the case of any ties, we will prefer to predict class 0. (a) What is the classification validation error rate of the naive Bayes classifier on these data? (b) What is the classification validation error rate of the joint Bayes classifier on these data?
