Question: could you help giving me explanations on this problem ? Thank you . Question : Derive the total loss function in [184) using the square

could you help giving me explanations on this problem ? Thank you .

Question :

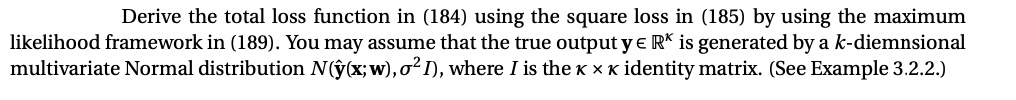

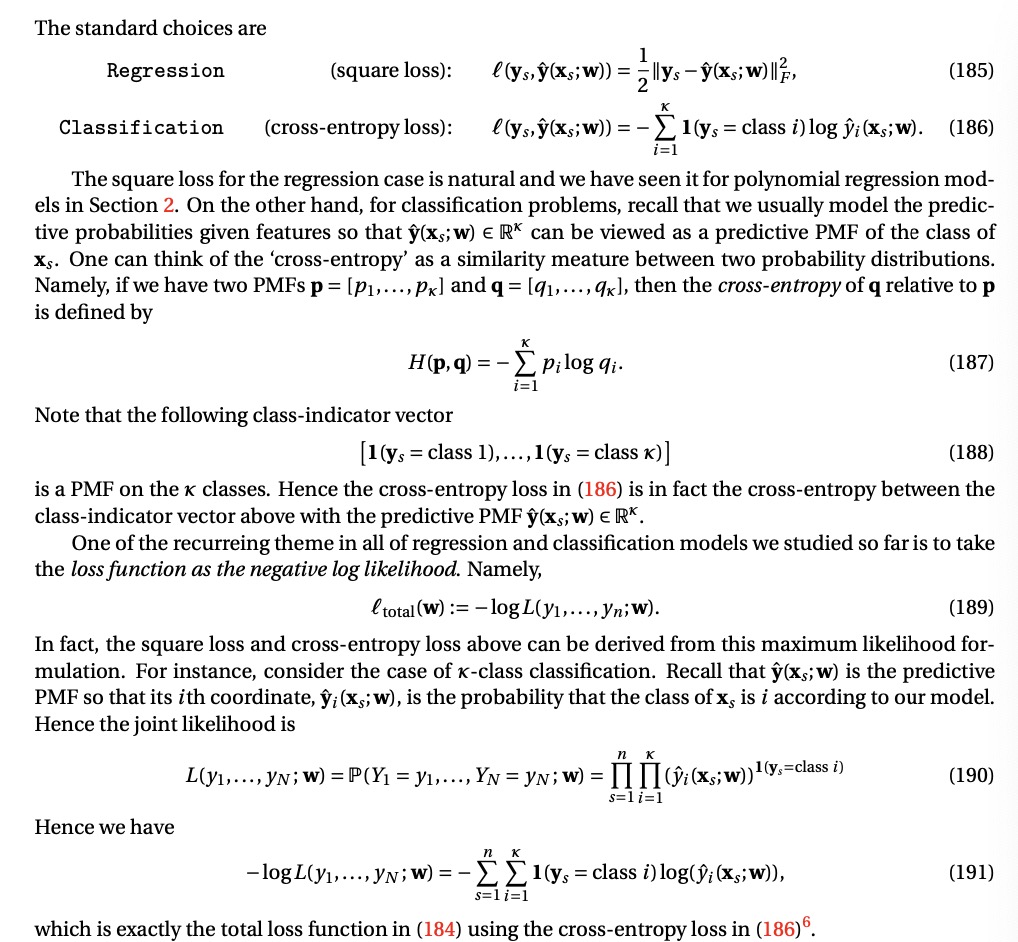

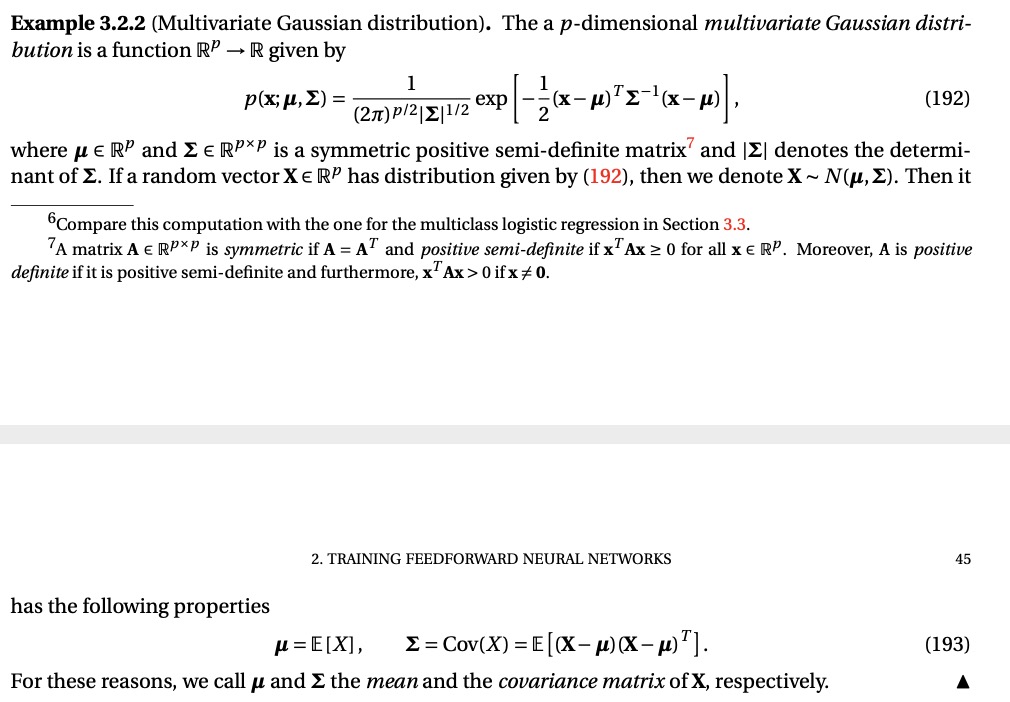

Derive the total loss function in [184) using the square loss in {185) by using the maximum likelihood framework in (189). You may assume that the true output y E IR" is generated by a k-diemnsional multivariate Normal distribution N (x; w), 02D, where I is the x x 'K identity matrix. (See Example 3.2.2.) The standard choices are Regression (square loss): ((ys, y(xs; W)) = 7 llys -y(xs; W)IIF. (185) Classification (cross-entropy loss): ((ys, y(xs;W)) = - _ l(ys = class i) log Vi(Xs; W). (186) 1= 1 The square loss for the regression case is natural and we have seen it for polynomial regression mod- els in Section 2. On the other hand, for classification problems, recall that we usually model the predic tive probabilities given features so that y(X,; w) ER* can be viewed as a predictive PMF of the class of Xs. One can think of the 'cross-entropy' as a similarity meature between two probability distributions. Namely, if we have two PMFs p = [p1,. ..,Px] and q = [q1,...,qx], then the cross-entropy of q relative to p is defined by H(p, q) = - E p; log qi. (187) 1=1 Note that the following class-indicator vector [1 (ys = class 1), ...,1(ys = class x) ] (188) is a PMF on the x classes. Hence the cross-entropy loss in (186) is in fact the cross-entropy between the class-indicator vector above with the predictive PMF y(Xs; W) ERK. One of the recurreing theme in all of regression and classification models we studied so far is to take the loss function as the negative log likelihood. Namely, total (w) := -log L(y1, ..., yn; W). (189) In fact, the square loss and cross-entropy loss above can be derived from this maximum likelihood for- mulation. For instance, consider the case of x-class classification. Recall that y(xs; w) is the predictive PMF so that its ith coordinate, yi(xs; W), is the probability that the class of X, is i according to our model. Hence the joint likelihood is L( )1,..., YN; W) = P(Y1 = y1,..., YN = YN; W) = IIII()i(xs;w)) 1(ys=class ?) (190) s=li=1 Hence we have -log L(y1,..., IN; W) = - _ _l(ys = class i) log(Vi(Xs; W)), (191) s=li=1 which is exactly the total loss function in (184) using the cross-entropy loss in (186)6Example 3.2.2 (Multivariate Gaussian distribution). The a p-dimensional multivariate Gaussian distri- bution is a function RP - R given by 1 p(x; u, Z) = (27) P/2| |1/2 exp (192) where u E RP and Z E RPXP is a symmetric positive semi-definite matrix' and |Z| denotes the determi nant of E. If a random vector X E RP has distribution given by (192), then we denote X ~ N(u, Z). Then it Compare this computation with the one for the multiclass logistic regression in Section 3.3. "A matrix A E RPXP is symmetric if A = A' and positive semi-definite if x Ax 2 0 for all x E RP. Moreover, A is positive definite if it is positive semi-definite and furthermore, x Ax > 0 ifx /0. 2. TRAINING FEEDFORWARD NEURAL NETWORKS 45 has the following properties " = [[X], E = Cov(X) = E [(X -() (X-M) ]]. (193) For these reasons, we call u and E the mean and the covariance matrix of X, respectively

Step by Step Solution

There are 3 Steps involved in it

Get step-by-step solutions from verified subject matter experts