Could you help me with explanations for this problem? Thank you.

For (i), just use the conclusion from Exercise 2.7.2.
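Spelling the hint out (a sketch, assuming, as in the proof of Prop. 2.7.1, that the first double sum in (173) is the only part of $\ell_{GNB}$ involving the $q_i$'s): the stationarity condition (146) gives $c_i/w_i = \lambda$ for every $i$. The constraint $\sum_i w_i = 1$ then fixes $\lambda = \sum_k c_k$. Taking $c_i = \sum_s \mathbf{1}(y_s = i)$, so that $\sum_i c_i = N$ because each label $y_s$ lies in exactly one class, turns (144) into the claimed formula for $\hat q_i$:

$$
\frac{c_i}{w_i} = \lambda \ \ (i = 1,\dots,M)
\;\Longrightarrow\; w_i = \frac{c_i}{\lambda}
\;\Longrightarrow\; 1 = \sum_{i=1}^{M} w_i = \frac{1}{\lambda}\sum_{k=1}^{M} c_k
\;\Longrightarrow\; \hat w_i = \frac{c_i}{\sum_{k=1}^{M} c_k},
$$
$$
c_i := \sum_{s=1}^{N} \mathbf{1}(y_s = i), \quad \sum_{i=1}^{\kappa} c_i = N
\;\Longrightarrow\; \hat q_i = \frac{1}{N}\sum_{s=1}^{N} \mathbf{1}(y_s = i).
$$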

Question:

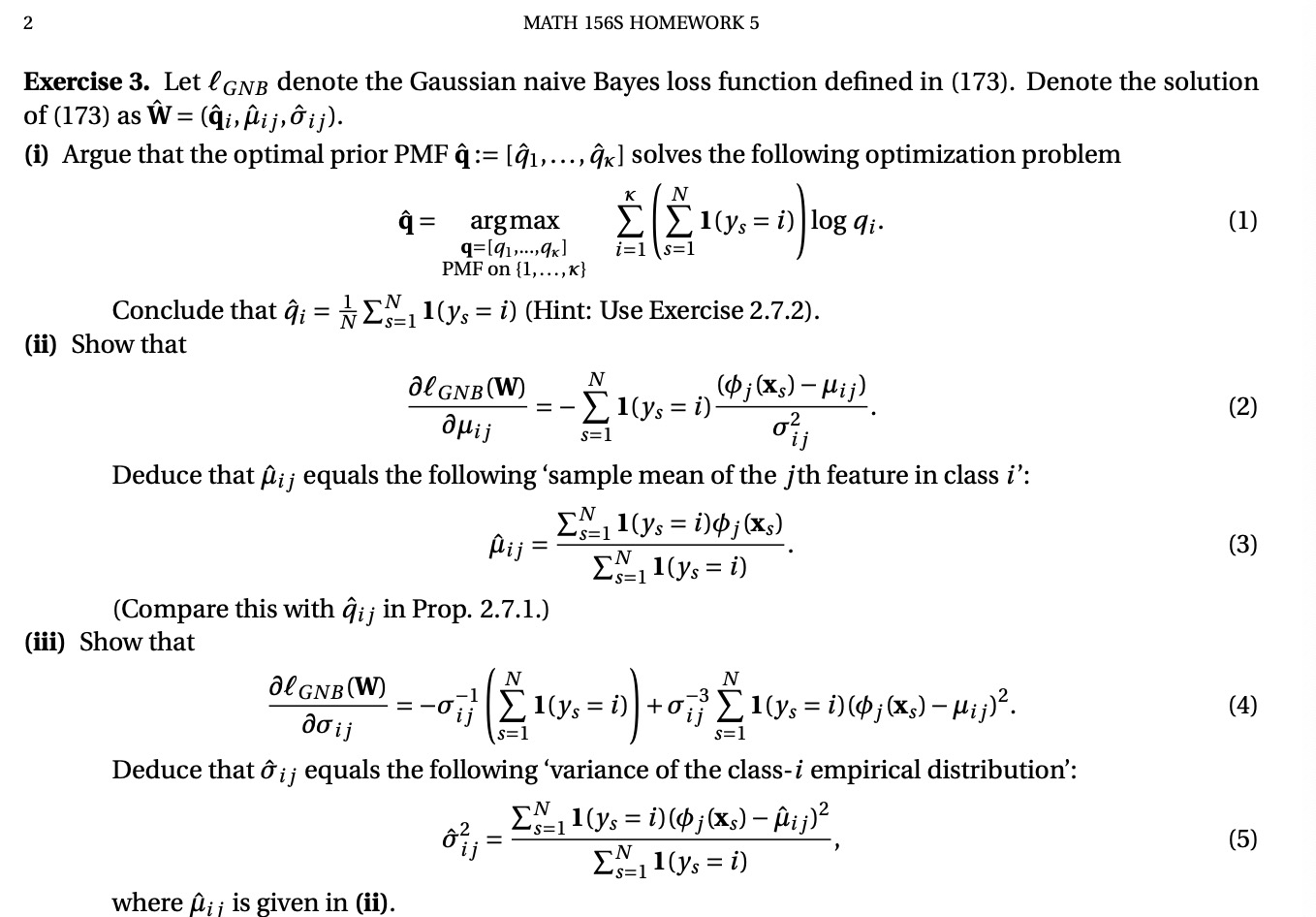

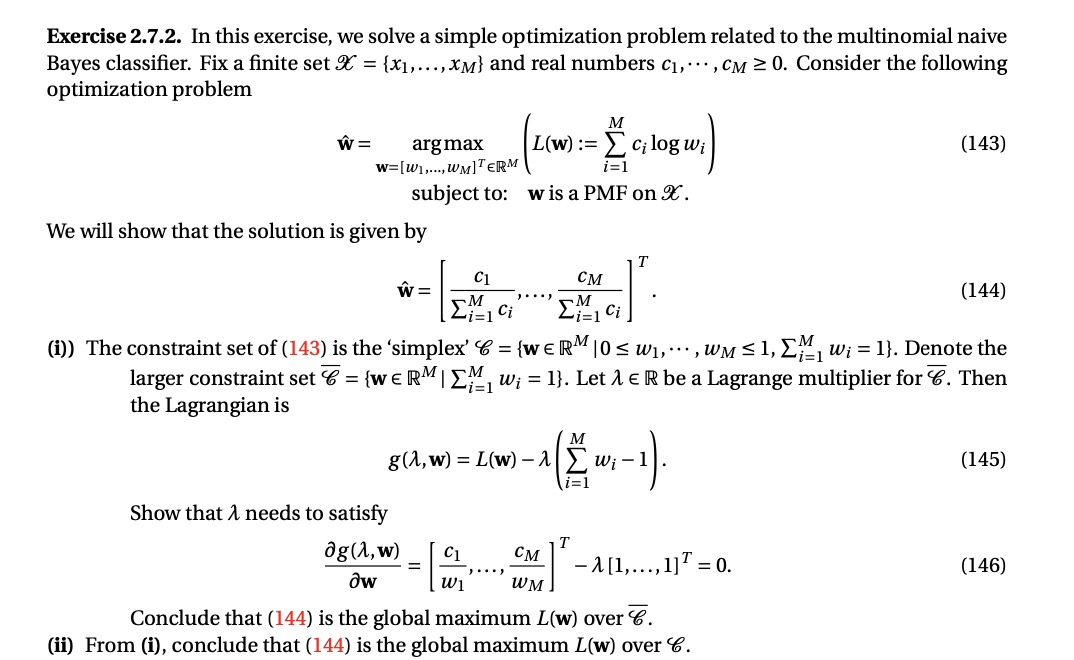

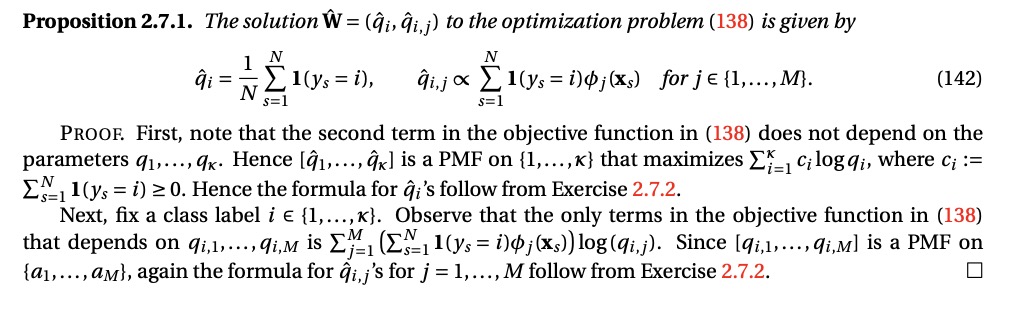

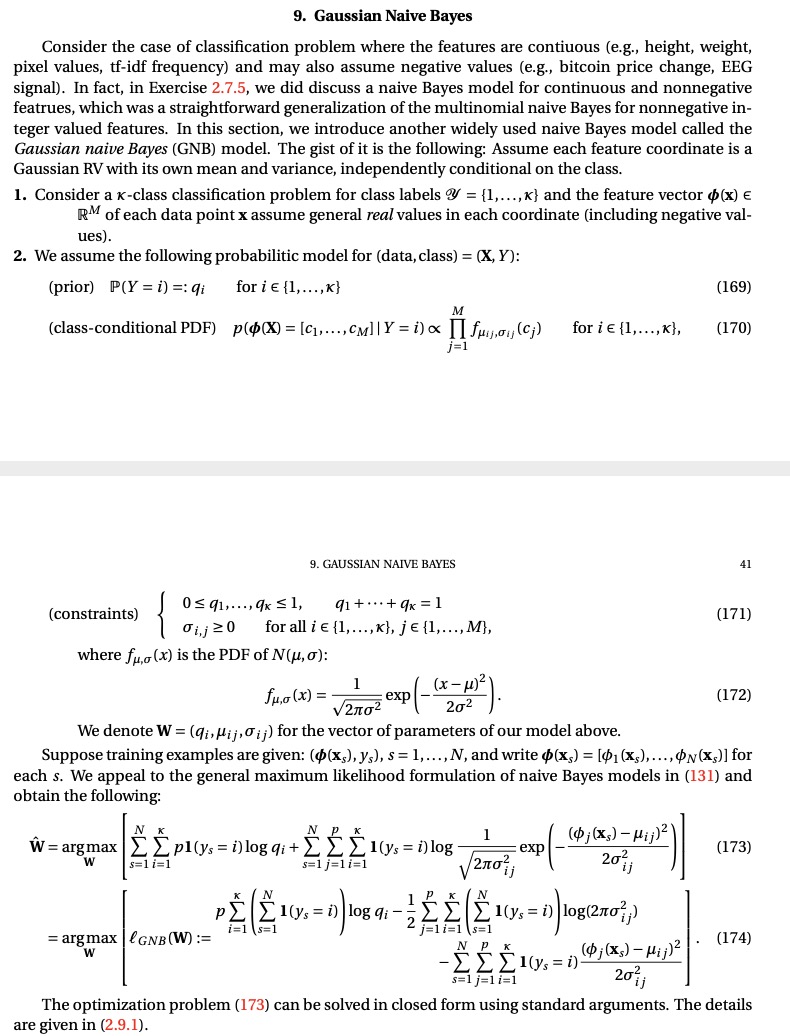

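MATH 156S HOMEWORK 5

Exercise 3. Let $\ell_{GNB}$ denote the Gaussian naive Bayes loss function defined in (173). Denote the solution of (173) as $\hat{W} = (\hat{q}_i, \hat{\mu}_{ij}, \hat{\sigma}_{ij})$.

(i) Argue that the optimal prior PMF $\hat{\mathbf{q}} := [\hat{q}_1, \dots, \hat{q}_\kappa]$ solves the following optimization problem:
$$
\hat{\mathbf{q}} = \operatorname*{arg\,max}_{\substack{\mathbf{q} = [q_1,\dots,q_\kappa] \\ \text{PMF on } \{1,\dots,\kappa\}}} \; \sum_{i=1}^{\kappa} \sum_{s=1}^{N} \mathbf{1}(y_s = i) \log q_i. \tag{1}
$$
Conclude that $\hat{q}_i = \frac{1}{N}\sum_{s=1}^{N} \mathbf{1}(y_s = i)$. (Hint: Use Exercise 2.7.2.)

(ii) Show that
$$
\frac{\partial \ell_{GNB}(W)}{\partial \mu_{ij}} = \sum_{s=1}^{N} \mathbf{1}(y_s = i)\, \frac{1}{\sigma_{ij}^2}\big(\varphi_j(x_s) - \mu_{ij}\big). \tag{2}
$$
Deduce that $\hat{\mu}_{ij}$ equals the following 'sample mean of the $j$th feature in class $i$':
$$
\hat{\mu}_{ij} = \frac{\sum_{s=1}^{N} \mathbf{1}(y_s = i)\, \varphi_j(x_s)}{\sum_{s=1}^{N} \mathbf{1}(y_s = i)}. \tag{3}
$$
(Compare this with $\hat{q}_{i,j}$ in Prop. 2.7.1.)

(iii) Show that
$$
\frac{\partial \ell_{GNB}(W)}{\partial \sigma_{ij}} = -\sum_{s=1}^{N} \mathbf{1}(y_s = i)\, \frac{1}{\sigma_{ij}} + \frac{1}{\sigma_{ij}^3} \sum_{s=1}^{N} \mathbf{1}(y_s = i)\big(\varphi_j(x_s) - \mu_{ij}\big)^2. \tag{4}
$$
Deduce that $\hat{\sigma}_{ij}^2$ equals the following 'variance of the class-$i$ empirical distribution':
$$
\hat{\sigma}_{ij}^2 = \frac{\sum_{s=1}^{N} \mathbf{1}(y_s = i)\big(\varphi_j(x_s) - \hat{\mu}_{ij}\big)^2}{\sum_{s=1}^{N} \mathbf{1}(y_s = i)}, \tag{5}
$$
where $\hat{\mu}_{ij}$ is given in (ii).

Exercise 2.7.2. In this exercise, we solve a simple optimization problem related to the multinomial naive Bayes classifier. Fix a finite set $\Omega = \{x_1, \dots, x_M\}$ and real numbers $c_1, \dots, c_M \ge 0$. Consider the following optimization problem:
$$
\hat{\mathbf{w}} = \operatorname*{arg\,max}_{\mathbf{w} = [w_1,\dots,w_M]^T \in \mathbb{R}^M} \; L(\mathbf{w}) := \sum_{i=1}^{M} c_i \log w_i \quad \text{subject to: } \mathbf{w} \text{ is a PMF on } \Omega. \tag{143}
$$
We will show that the solution is given by
$$
\hat{\mathbf{w}} = \left( \frac{c_1}{\sum_{i=1}^{M} c_i}, \dots, \frac{c_M}{\sum_{i=1}^{M} c_i} \right). \tag{144}
$$
(i) The constraint set of (143) is the 'simplex' $\Delta = \{\mathbf{w} \in \mathbb{R}^M \mid 0 \le w_1, \dots, w_M \le 1,\ \sum_{i=1}^{M} w_i = 1\}$. Denote the larger constraint set $\Delta' = \{\mathbf{w} \in \mathbb{R}^M \mid \sum_{i=1}^{M} w_i = 1\}$. Let $\lambda \in \mathbb{R}$ be a Lagrange multiplier for $\Delta'$. Then the Lagrangian is
$$
\mathcal{L}(\lambda, \mathbf{w}) = L(\mathbf{w}) - \lambda\left(\sum_{i=1}^{M} w_i - 1\right). \tag{145}
$$
Show that $\lambda$ needs to satisfy
$$
\frac{\partial \mathcal{L}(\lambda, \mathbf{w})}{\partial \mathbf{w}} = \left[\frac{c_1}{w_1}, \dots, \frac{c_M}{w_M}\right]^T - \lambda\,[1, \dots, 1]^T = \mathbf{0}. \tag{146}
$$
Conclude that (144) is the global maximum of $L(\mathbf{w})$ over $\Delta'$.

(ii) From (i), conclude that (144) is the global maximum of $L(\mathbf{w})$ over $\Delta$.

Proposition 2.7.1. The solution $\hat{W} = (\hat{q}_i, \hat{q}_{i,j})$ to the optimization problem (138) is given by
$$
\hat{q}_i = \frac{1}{N}\sum_{s=1}^{N} \mathbf{1}(y_s = i), \qquad \hat{q}_{i,j} \propto \sum_{s=1}^{N} \mathbf{1}(y_s = i)\, \varphi_j(x_s) \quad \text{for } j \in \{1, \dots, M\}. \tag{142}
$$
PROOF.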
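For (ii) and (iii), setting (2) and (4) to zero gives (3) and (5) directly. Here is a sketch, assuming every class occurs in the data ($n_i := \sum_s \mathbf{1}(y_s = i) > 0$) and $\sigma_{ij} > 0$, so that we may multiply (2) by $\sigma_{ij}^2$ and (4) by $\sigma_{ij}^3$:

$$
\begin{aligned}
\frac{\partial \ell_{GNB}}{\partial \mu_{ij}} = 0
&\iff \sum_{s=1}^{N} \mathbf{1}(y_s = i)\,\varphi_j(x_s) = \mu_{ij}\sum_{s=1}^{N} \mathbf{1}(y_s = i)
\iff \hat\mu_{ij} = \frac{\sum_{s=1}^{N} \mathbf{1}(y_s = i)\,\varphi_j(x_s)}{\sum_{s=1}^{N} \mathbf{1}(y_s = i)},\\
\frac{\partial \ell_{GNB}}{\partial \sigma_{ij}} = 0
&\iff \sigma_{ij}^2\sum_{s=1}^{N} \mathbf{1}(y_s = i) = \sum_{s=1}^{N} \mathbf{1}(y_s = i)\big(\varphi_j(x_s) - \mu_{ij}\big)^2
\iff \hat\sigma_{ij}^2 = \frac{\sum_{s=1}^{N} \mathbf{1}(y_s = i)\big(\varphi_j(x_s) - \hat\mu_{ij}\big)^2}{\sum_{s=1}^{N} \mathbf{1}(y_s = i)}.
\end{aligned}
$$

These critical points are maxima: for fixed $\sigma_{ij}$ the objective is a concave quadratic in $\mu_{ij}$ whose maximizer $\hat\mu_{ij}$ does not depend on $\sigma_{ij}$, and with $\mu_{ij} = \hat\mu_{ij}$ the $\sigma_{ij}$-dependent part $-n_i \log \sigma_{ij} - S_{ij}/(2\sigma_{ij}^2)$, where $S_{ij} := \sum_s \mathbf{1}(y_s = i)(\varphi_j(x_s) - \hat\mu_{ij})^2 > 0$, tends to $-\infty$ both as $\sigma_{ij} \to 0^+$ and as $\sigma_{ij} \to \infty$, so its unique critical point is the maximum.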
First, note that the second term in the objective function in (138) does not depend on the parameters $q_1, \dots, q_\kappa$. Hence $[\hat{q}_1, \dots, \hat{q}_\kappa]$ is a PMF on $\{1, \dots, \kappa\}$ that maximizes $\sum_{i=1}^{\kappa} c_i \log q_i$, where $c_i := \sum_{s=1}^{N} \mathbf{1}(y_s = i) \ge 0$. Hence the formula for the $\hat{q}_i$'s follows from Exercise 2.7.2. Next, fix a class label $i \in \{1, \dots, \kappa\}$. Observe that the only terms in the objective function in (138) that depend on $q_{i,1}, \dots, q_{i,M}$ are $\sum_{j=1}^{M} \left(\sum_{s=1}^{N} \mathbf{1}(y_s = i)\, \varphi_j(x_s)\right) \log q_{i,j}$. Since $[q_{i,1}, \dots, q_{i,M}]$ is a PMF on $\{a_1, \dots, a_M\}$, again the formula for the $\hat{q}_{i,j}$'s for $j = 1, \dots, M$ follows from Exercise 2.7.2. $\square$

2.9. Gaussian Naive Bayes

Consider a classification problem where the features are continuous (e.g., height, weight, pixel values, tf-idf frequency) and may also take negative values (e.g., bitcoin price change, EEG signal). In fact, in Exercise 2.7.5, we discussed a naive Bayes model for continuous and nonnegative features, which was a straightforward generalization of multinomial naive Bayes for nonnegative integer-valued features. In this section, we introduce another widely used naive Bayes model called the Gaussian naive Bayes (GNB) model. The gist of it is the following: assume each feature coordinate is a Gaussian RV with its own mean and variance, independently conditional on the class.

1. Consider a $\kappa$-class classification problem with class labels $\mathcal{Y} = \{1, \dots, \kappa\}$, where the feature vector $\varphi(x) \in \mathbb{R}^M$ of each data point $x$ may take general real values in each coordinate (including negative values).
2. We assume the following probabilistic model for (data, class) $= (X, Y)$:
$$
\text{(prior)} \quad P(Y = i) =: q_i \quad \text{for } i \in \{1, \dots, \kappa\} \tag{169}
$$
$$
\text{(class-conditional PDF)} \quad p\big(\varphi(X) = [c_1, \dots, c_M] \,\big|\, Y = i\big) = \prod_{j=1}^{M} \frac{1}{\sqrt{2\pi\sigma_{ij}^2}} \exp\left(-\frac{(c_j - \mu_{ij})^2}{2\sigma_{ij}^2}\right)
$$

…

$$
\hat{W} = \operatorname*{arg\,max}_{W} \; \ell_{GNB}(W) := \sum_{s=1}^{N}\sum_{i=1}^{\kappa} \mathbf{1}(y_s = i) \log q_i + \sum_{s=1}^{N}\sum_{i=1}^{\kappa} \mathbf{1}(y_s = i) \sum_{j=1}^{M} \log\left( \frac{1}{\sqrt{2\pi\sigma_{ij}^2}} \exp\left(-\frac{(\varphi_j(x_s) - \mu_{ij})^2}{2\sigma_{ij}^2}\right)\right) \tag{173}
$$

The optimization problem (173) can be solved in closed form using standard arguments. The details are given in (2.9.1).
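A minimal numerical sanity check of (2) to (5) in Python with NumPy (my own sketch, not from the course notes). It assumes the parameters are $q_i$, $\mu_{ij}$ and the standard deviations $\sigma_{ij}$ (not variances), that $\ell_{GNB}$ is the log-likelihood (173) with the Gaussian normalizing constant included, and that every class occurs in the data. The helper names `fit_gnb`, `ell_gnb`, `grads` and the synthetic data are hypothetical. Labels are 0-based here, whereas the text uses $\{1, \dots, \kappa\}$.

```python
import numpy as np


def fit_gnb(Phi, y, kappa):
    """Closed-form estimates from (1), (3), (5): priors, class means, class variances.

    Phi: (N, M) array whose row s is phi(x_s); y: integer labels in {0, ..., kappa-1}.
    Assumes every class occurs at least once.
    """
    N, M = Phi.shape
    q = np.zeros(kappa)
    mu = np.zeros((kappa, M))
    var = np.zeros((kappa, M))
    for i in range(kappa):
        P = Phi[y == i]                            # rows with 1(y_s = i) = 1
        q[i] = len(P) / N                          # empirical class frequency
        mu[i] = P.mean(axis=0)                     # sample mean, eq. (3)
        var[i] = ((P - mu[i]) ** 2).mean(axis=0)   # divides by n_i (not n_i - 1), eq. (5)
    return q, mu, var


def ell_gnb(Phi, y, q, mu, sigma):
    """Objective (173); sigma holds standard deviations."""
    diff = Phi - mu[y]                             # (N, M): phi_j(x_s) - mu_{y_s, j}
    s = sigma[y]                                   # (N, M): sigma_{y_s, j}
    log_pdf = -0.5 * np.log(2 * np.pi * s**2) - diff**2 / (2 * s**2)
    return np.log(q[y]).sum() + log_pdf.sum()


def grads(Phi, y, mu, sigma):
    """Partial derivatives (2) and (4) for every pair (i, j)."""
    g_mu = np.zeros_like(mu)
    g_sigma = np.zeros_like(sigma)
    for i in range(mu.shape[0]):
        P = Phi[y == i]
        g_mu[i] = ((P - mu[i]) / sigma[i] ** 2).sum(axis=0)           # eq. (2)
        g_sigma[i] = (-len(P) / sigma[i]
                      + ((P - mu[i]) ** 2).sum(axis=0) / sigma[i] ** 3)  # eq. (4)
    return g_mu, g_sigma


# Hypothetical synthetic data: class i shifts every feature's mean by 2*i.
rng = np.random.default_rng(0)
N, M, kappa = 200, 3, 2
y = rng.integers(0, kappa, size=N)
Phi = rng.normal(loc=2.0 * y[:, None], scale=1.0, size=(N, M))

q, mu, var = fit_gnb(Phi, y, kappa)
sigma = np.sqrt(var)
g_mu, g_sigma = grads(Phi, y, mu, sigma)
print(np.abs(g_mu).max(), np.abs(g_sigma).max())  # both at rounding-error level

best = ell_gnb(Phi, y, q, mu, sigma)
print(best > ell_gnb(Phi, y, q, mu + 0.1, sigma))  # True: moving the means lowers (173)
print(best > ell_gnb(Phi, y, q, mu, 1.1 * sigma))  # True: inflating sigma lowers (173)
```

Note that (5) divides by the class count $n_i$, not $n_i - 1$: the maximum likelihood variance is the biased one, which is exactly what "variance of the class-$i$ empirical distribution" means.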