Question

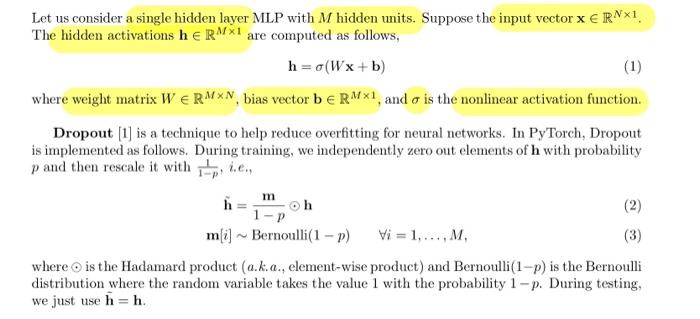

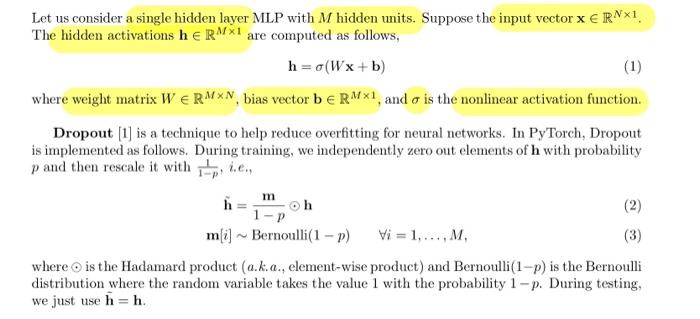

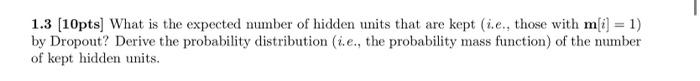

Let us consider a single-hidden-layer MLP with $M$ hidden units. Suppose the input vector is $x \in \mathbb{R}^{N \times 1}$. The hidden activations $h \in \mathbb{R}^{M \times 1}$ are computed as

$$h = \sigma(Wx + b),$$

where $W \in \mathbb{R}^{M \times N}$ is the weight matrix, $b \in \mathbb{R}^{M \times 1}$ is the bias vector, and $\sigma$ is the nonlinear activation function.

Dropout [1] is a technique that helps reduce overfitting in neural networks. In PyTorch, dropout is implemented as follows. During training, we independently zero out each element of $h$ with probability $p$ and then rescale the result by $\frac{1}{1-p}$, i.e.,

$$\tilde{h} = \frac{1}{1-p}\, m \odot h, \qquad m[i] \sim \mathrm{Bernoulli}(1-p), \quad i = 1, \dots, M,$$

where $\odot$ is the Hadamard product (a.k.a. element-wise product) and $\mathrm{Bernoulli}(1-p)$ is the Bernoulli distribution whose random variable takes the value 1 with probability $1-p$. During testing, we simply use $\tilde{h} = h$.

1.3 [10 pts] What is the expected number of hidden units that are kept (i.e., those with $m[i] = 1$) by dropout? Derive the probability distribution (i.e., the probability mass function) of the number of kept hidden units.
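
As a minimal sketch, assuming plain Python lists for vectors rather than PyTorch tensors, the training- and test-time rules above can be written out directly. The helper names `dropout_train`, `dropout_eval`, and `kept_count_pmf` are hypothetical and chosen only for illustration; this is not PyTorch's own `torch.nn.Dropout` code. `sum(mask)` is the number of kept hidden units, and `kept_count_pmf` evaluates the Binomial$(M, 1-p)$ PMF so the two can be compared empirically.

```python
import math
import random


def dropout_train(h, p, rng):
    """Training-time dropout on a list of activations h (illustrative sketch).

    Each mask entry m[i] is 1 with probability 1 - p, i.e. m[i] ~ Bernoulli(1 - p),
    and the masked activations are rescaled by 1 / (1 - p).
    Returns (h_tilde, mask).
    """
    if not 0.0 <= p < 1.0:
        raise ValueError("this sketch requires 0 <= p < 1")
    # rng.random() is uniform on [0, 1), so it falls below 1 - p with probability 1 - p.
    mask = [1 if rng.random() < 1.0 - p else 0 for _ in h]
    scale = 1.0 / (1.0 - p)
    h_tilde = [scale * m_i * h_i for m_i, h_i in zip(mask, h)]
    return h_tilde, mask


def dropout_eval(h):
    """Test-time dropout: the activations pass through unchanged (h_tilde = h)."""
    return list(h)


def kept_count_pmf(M, p):
    """Binomial(M, 1 - p) PMF: entry k is P(exactly k of the M units are kept)."""
    q = 1.0 - p
    return [math.comb(M, k) * q**k * p ** (M - k) for k in range(M + 1)]


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the run is reproducible
    M, p, trials = 10, 0.3, 20_000
    counts = [0] * (M + 1)
    for _ in range(trials):
        _, mask = dropout_train([1.0] * M, p, rng)
        counts[sum(mask)] += 1  # number of kept hidden units in this draw

    empirical_mean = sum(k * c for k, c in enumerate(counts)) / trials
    print(f"empirical mean kept: {empirical_mean:.3f}  vs  M(1-p) = {M * (1 - p):.3f}")
    pmf = kept_count_pmf(M, p)
    for k in range(M + 1):
        print(f"k={k:2d}  empirical={counts[k] / trials:.4f}  binomial={pmf[k]:.4f}")
```

Running the script draws 20,000 masks with $M = 10$ and $p = 0.3$; the empirical mean of the kept count should land close to $M(1-p) = 7$, and the empirical frequencies should track the binomial column.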

Step by Step Solution
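
A short derivation, using only the definition of $m$ given in the question, could go as follows. Here $K$ is a symbol introduced for convenience to denote the number of kept hidden units. The expectation follows from linearity:

$$
K = \sum_{i=1}^{M} m[i], \qquad \mathbb{E}\big[m[i]\big] = 1 \cdot (1-p) + 0 \cdot p = 1-p,
$$

$$
\mathbb{E}[K] = \sum_{i=1}^{M} \mathbb{E}\big[m[i]\big] = M(1-p).
$$

Because the $m[i]$ are independent $\mathrm{Bernoulli}(1-p)$ variables, any particular set of $k$ kept units (with the other $M-k$ dropped) occurs with probability $(1-p)^{k} p^{M-k}$, and there are $\binom{M}{k}$ such sets. Hence $K \sim \mathrm{Binomial}(M, 1-p)$:

$$
P(K = k) = \binom{M}{k} (1-p)^{k}\, p^{M-k}, \qquad k = 0, 1, \dots, M.
$$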
