Question

from __future__ import print_function import os, sys, time, datetime, json, random import numpy as np from keras.models import Sequential from keras.layers.core import Dense, Activation from

from __future__ import print_function import os, sys, time, datetime, json, random import numpy as np from keras.models import Sequential from keras.layers.core import Dense, Activation from keras.optimizers import SGD , Adam, RMSprop from keras.layers.advanced_activations import PReLU import matplotlib.pyplot as plt from TreasureMaze import TreasureMaze from GameExperience import GameExperience %matplotlib inline --------------------------------------------------------------------------- ModuleNotFoundError Traceback (most recent call last)in 7 from keras.layers.advanced_activations import PReLU 8 import matplotlib.pyplot as plt ----> 9 from TreasureMaze import TreasureMaze 10 from GameExperience import GameExperience 11 get_ipython().run_line_magic('matplotlib', 'inline') ModuleNotFoundError: No module named 'TreasureMaze' The following code block contains an 8x8 matrix that will be used as a maze object: maze = np.array([ [ 1., 0., 1., 1., 1., 1., 1., 1.], [ 1., 0., 1., 1., 1., 0., 1., 1.], [ 1., 1., 1., 1., 0., 1., 0., 1.], [ 1., 1., 1., 0., 1., 1., 1., 1.], [ 1., 1., 0., 1., 1., 1., 1., 1.], [ 1., 1., 1., 0., 1., 0., 0., 0.], [ 1., 1., 1., 0., 1., 1., 1., 1.], [ 1., 1., 1., 1., 0., 1., 1., 1.] ]) This helper function allows a visual representation of the maze object: def show(qmaze): plt.grid('on') nrows, ncols = qmaze.maze.shape ax = plt.gca() ax.set_xticks(np.arange(0.5, nrows, 1)) ax.set_yticks(np.arange(0.5, ncols, 1)) ax.set_xticklabels([]) ax.set_yticklabels([]) canvas = np.copy(qmaze.maze) for row,col in qmaze.visited: canvas[row,col] = 0.6 pirate_row, pirate_col, _ = qmaze.state canvas[pirate_row, pirate_col] = 0.3 # pirate cell canvas[nrows-1, ncols-1] = 0.9 # treasure cell img = plt.imshow(canvas, interpolation='none', cmap='gray') return img The pirate agent can move in four directions: left, right, up, and down. While the agent primarily learns by experience through exploitation, often, the agent can choose to explore the environment to find previously undiscovered paths. This is called "exploration" and is defined by epsilon. This value is typically a lower value such as 0.1, which means for every ten attempts, the agent will attempt to learn by experience nine times and will randomly explore a new path one time. You are encouraged to try various values for the exploration factor and see how the algorithm performs. LEFT = 0 UP = 1 RIGHT = 2 DOWN = 3 # Exploration factor epsilon = 0.1 # Actions dictionary actions_dict = { LEFT: 'left', UP: 'up', RIGHT: 'right', DOWN: 'down', } num_actions = len(actions_dict) The sample code block and output below show creating a maze object and performing one action (DOWN), which returns the reward. The resulting updated environment is visualized. qmaze = TreasureMaze(maze) canvas, reward, game_over = qmaze.act(DOWN) print("reward=", reward) show(qmaze) This function simulates a full game based on the provided trained model. The other parameters include the TreasureMaze object and the starting position of the pirate. def play_game(model, qmaze, pirate_cell): qmaze.reset(pirate_cell) envstate = qmaze.observe() while True: prev_envstate = envstate # get next action q = model.predict(prev_envstate) action = np.argmax(q[0]) # apply action, get rewards and new state envstate, reward, game_status = qmaze.act(action) if game_status == 'win': return True elif game_status == 'lose': return False This function helps you to determine whether the pirate can win any game at all. If your maze is not well designed, the pirate may not win any game at all. In this case, your training would not yield any result. The provided maze in this notebook ensures that there is a path to win and you can run this method to check. def completion_check(model, qmaze): for cell in qmaze.free_cells: if not qmaze.valid_actions(cell): return False if not play_game(model, qmaze, cell): return False return True The code you have been given in this block will build the neural network model. Review the code and note the number of layers, as well as the activation, optimizer, and loss functions that are used to train the model. def build_model(maze): model = Sequential() model.add(Dense(maze.size, input_shape=(maze.size,))) model.add(PReLU()) model.add(Dense(maze.size)) model.add(PReLU()) model.add(Dense(num_actions)) model.compile(optimizer='adam', loss='mse') return model #TODO: Complete the Q-Training Algorithm Code Block This is your deep Q-learning implementation. The goal of your deep Q-learning implementation is to find the best possible navigation sequence that results in reaching the treasure cell while maximizing the reward. In your implementation, you need to determine the optimal number of epochs to achieve a 100% win rate. You will need to complete the section starting with #pseudocode. The pseudocode has been included for you. def qtrain(model, maze, **opt): # exploration factor global epsilon # number of epochs n_epoch = opt.get('n_epoch', 15000) # maximum memory to store episodes max_memory = opt.get('max_memory', 1000) # maximum data size for training data_size = opt.get('data_size', 50) # start time start_time = datetime.datetime.now() # Construct environment/game from numpy array: maze (see above) qmaze = TreasureMaze(maze) # Initialize experience replay object experience = GameExperience(model, max_memory=max_memory) win_history = [] # history of win/lose game hsize = qmaze.maze.size//2 # history window size win_rate = 0.0 # pseudocode: # For each epoch for i in range(n_epoch): print('\n\n\n') print('This is the start of the next epoch...') print('\n') agent_cell = np.random.randint(0, high=7 size=2) #Reset the random statring postion qmaze.reset([0,0]) envstate = qmaze.observe() loss = 0 n_episodes = 0 # while game is not over while qmaze.game_status() == 'not_over':game with agent at the random starting postion previous_envstate = envstate valid_actions = qmaze.valid_actions() # Get next action if np.random.rand() < epsilon: action = random.choice(valid_actions) else: action = np.argmax(experience.predict(envstate)) # Take the action envstate, reward, game_status = qmaze.act(action) # Increase episode count because taking an action makes an episode n_episodes += 1 # Remember the episode episode = [previous_envstate, action, reward, envstate, game_status] experience.remember(episode) inputs,targets = experience.get_data() history = model.fit(inputs, targets, epochs=8, batch_size=24, verbose=0) loss = model.evaluate(inputs, targets) # if pirate agent won game if episode [4] == 'win': win_history.append(1) win_rate = sum(win_history) / len(win_history) break # if pirate agent lost the game elif episode[4] == 'lose': win_history.append(0) win_rate = sum(win_history) / len(win_history) break # else the game is not over if win_rate > epsilon: print('win rate is larger than epsilon!!!!') if completion_check(model, qmaze) == True: print('completion_check() passes!!') #Print the epoch, loss, episodes, win count, and win rate for each epoch dt = datetime.datetime.now() - start_time t = format_time(dt.total_seconds()) template = "Epoch: {:03d}/{:d} | Loss: {:.4f} | Episodes: {:d} | Win count: {:d} | Win rate: {:.3f} | time: {}" print(template.format(epoch, n_epoch-1, loss, n_episodes, sum(win_history), win_rate, t)) # We simply check if training has exhausted all free cells and if in all # cases the agent won. if win_rate > 0.9 : epsilon = 0.05 if sum(win_history[-hsize:]) == hsize and completion_check(model, qmaze): print("Reached 100%% win rate at epoch: %d" % (epoch,)) break # Determine the total time for training dt = datetime.datetime.now() - start_time seconds = dt.total_seconds() t = format_time(seconds) print("n_epoch: %d, max_mem: %d, data: %d, time: %s" % (epoch, max_memory, data_size, t)) return seconds # This is a small utility for printing readable time strings: def format_time(seconds): if seconds < 400: s = float(seconds) return "%.1f seconds" % (s,) elif seconds < 4000: m = seconds / 60.0 return "%.2f minutes" % (m,) else: h = seconds / 3600.0 return "%.2f hours" % (h,) File " ", line 30 print('\n\n\n') ^ IndentationError: unexpected indent Test Your Model Now we will start testing the deep Q-learning implementation. To begin, select Cell, then Run All from the menu bar. This will run your notebook. As it runs, you should see output begin to appear beneath the next few cells. The code below creates an instance of TreasureMaze. qmaze = TreasureMaze(maze) show(qmaze) In the next code block, you will build your model and train it using deep Q-learning. Note: This step takes several minutes to fully run. model = build_model(maze) qtrain(model, maze, epochs=1000, max_memory=8*maze.size, data_size=32) This cell will check to see if the model passes the completion check. Note: This could take several minutes. completion_check(model, qmaze) show(qmaze) This cell will test your model for one game. It will start the pirate at the top-left corner and run play_game. The agent should find a path from the starting position to the target (treasure). The treasure is located in the bottom-right corner. pirate_start = (0, 0) play_game(model, qmaze, pirate_start) show(qmaze)

Instructions for my part

Q-Training Algorithm You have been given a skeleton implementation in the TreasureHuntGame Jupyter Notebook. Your task is to implement deep-Q learning. The goal of your deep Q-learning implementation is to find the best possible navigation sequence that results in reaching the treasure cell while maximizing the reward. In your implementation, you need to determine the optimal number of epochs to achieve a 100% win rate.

You will need to complete the section starting with #pseudocode. The pseudocode has been included for you.

def qtrain(model, maze, **opt):

# exploration factor

global epsilon

# number of epochs

n_epoch = opt.get('n_epoch', 15000)

# maximum memory to store episodes

max_memory = opt.get('max_memory', 1000)

# maximum data size for training

data_size = opt.get('data_size', 50)

# start time

start_time = datetime.datetime.now()

# Construct environment/game from numpy array: maze (see above)

qmaze = TreasureMaze(maze)

# Initialize experience replay object

experience = GameExperience(model, max_memory=max_memory)

win_history = [] # history of win/lose game

hsize = qmaze.maze.size//2 # history window size

win_rate = 0.0

# pseudocode:

# For each epoch for i in range(n_epoch):

print('\n\n\n')

print('This is the start of the next epoch...')

print('\n')

agent_cell = np.random.randint(0, high=7 size=2)

#Reset the random statring postion

qmaze.reset([0,0])

envstate = qmaze.observe()

loss = 0

n_episodes = 0

# while game is not over

while qmaze.game_status() == 'not_over':game with agent at the random starting postion

previous_envstate = envstate

valid_actions = qmaze.valid_actions()

# Get next action

if np.random.rand() < epsilon:

action = random.choice(valid_actions)

else:

action = np.argmax(experience.predict(envstate))

# Take the action

envstate, reward, game_status = qmaze.act(action)

# Increase episode count because taking an action makes an episode

n_episodes += 1

# Remember the episode

episode = [previous_envstate, action, reward, envstate, game_status]

experience.remember(episode)

inputs,targets = experience.get_data()

history = model.fit(inputs, targets, epochs=8, batch_size=24, verbose=0)

loss = model.evaluate(inputs, targets)

# if pirate agent won game

if episode [4] == 'win':

win_history.append(1)

win_rate = sum(win_history) / len(win_history)

break

# if pirate agent lost the game

elif episode[4] == 'lose':

win_history.append(0)

win_rate = sum(win_history) / len(win_history)

break

# else the game is not over

if win_rate > epsilon:

print('win rate is larger than epsilon!!!!')

if completion_check(model, qmaze) == True:

print('completion_check() passes!!')

#Print the epoch, loss, episodes, win count, and win rate for each epoch

dt = datetime.datetime.now() - start_time

t = format_time(dt.total_seconds())

template = "Epoch: {:03d}/{:d} | Loss: {:.4f} | Episodes: {:d} | Win count: {:d} | Win rate: {:.3f} | time: {}"

print(template.format(epoch, n_epoch-1, loss, n_episodes, sum(win_history), win_rate, t))

# We simply check if training has exhausted all free cells and if in all

# cases the agent won.

if win_rate > 0.9 : epsilon = 0.05

if sum(win_history[-hsize:]) == hsize and completion_check(model, qmaze):

print("Reached 100%% win rate at epoch: %d" % (epoch,))

break

# Determine the total time for training

dt = datetime.datetime.now() - start_time

seconds = dt.total_seconds()

t = format_time(seconds)

print("n_epoch: %d, max_mem: %d, data: %d, time: %s" % (epoch, max_memory, data_size, t))

return seconds

# This is a small utility for printing readable time strings:

def format_time(seconds):

if seconds < 400:

s = float(seconds)

return "%.1f seconds" % (s,)

elif seconds < 4000:

m = seconds / 60.0

return "%.2f minutes" % (m,)

else:

h = seconds / 3600.0

return "%.2f hours" % (h,)

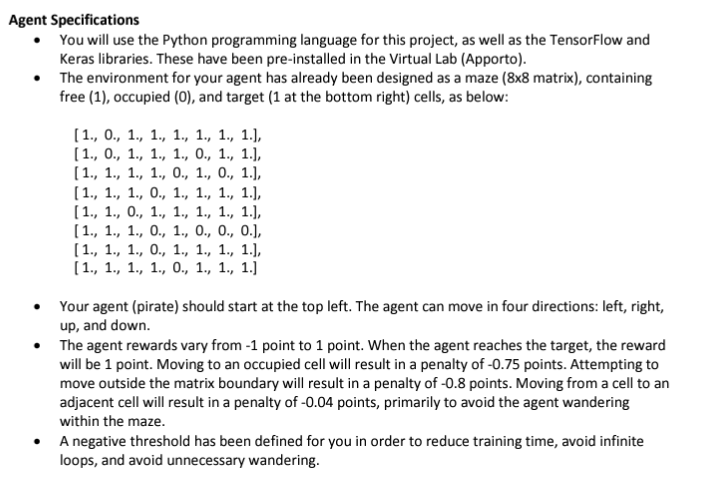

Agent Specifications You will use the Python programming language for this project, as well as the TensorFlow and Keras libraries. These have been pre-installed in the Virtual Lab (Apporto). The environment for your agent has already been designed as a maze (8x8 matrix), containing free (1), occupied (0), and target (1 at the bottom right) cells, as below: [1., 0., 1., 1., 1., 1., 1., 1.], [1., 0., 1., 1., 1., O., 1., 1.], [1., 1., 1., 1., O., 1., O., 1.], [1., 1., 1., 0., 1., 1., 1., 1.], [1., 1., 0., 1., 1., 1., 1., 1.], [1., 1., 1., 0., 1., O., O., 0.], [1., 1., 1., 0., 1., 1., 1., 1.], [1., 1., 1., 1., O., 1., 1., 1.] Your agent (pirate) should start at the top left. The agent can move in four directions: left, right, up, and down. The agent rewards vary from -1 point to 1 point. When the agent reaches the target, the reward will be 1 point. Moving to an occupied cell will result in a penalty of -0.75 points. Attempting to move outside the matrix boundary will result in a penalty of -0.8 points. Moving from a cell to an adjacent cell will result in a penalty of -0.04 points, primarily to avoid the agent wandering within the maze. A negative threshold has been defined for you in order to reduce training time, avoid infinite loops, and avoid unnecessary wandering.

Step by Step Solution

There are 3 Steps involved in it

Step: 1

Get Instant Access to Expert-Tailored Solutions

See step-by-step solutions with expert insights and AI powered tools for academic success

Step: 2

Step: 3

Ace Your Homework with AI

Get the answers you need in no time with our AI-driven, step-by-step assistance

Get Started