Question: How does the article "Fixing Facebook: Fake News, Privacy, and Platform Governance" relate to the TED Talk "What obligation do social media platforms have to the greater good?"

TED Talk video:

https://www.ted.com/talks/eli_pariser_what_obligation_do_social_media_platforms_have_to_the_greater_good?language=en#t-1012548

Fixing Facebook: Fake News, Privacy, and Platform Governance
David Yoffie and Daniel Fisher

"It's not enough to just build tools. We need to make sure they are used for good."
- Mark Zuckerberg, testifying before the U.S. Senate on April 10, 2018

During Facebook Inc.'s results conference call for the second quarter of 2019, Mark Zuckerberg, the company's CEO, chairman, and majority shareholder, announced that the number of people using at least one of Facebook's services each day had risen to 2.1 billion, more than a quarter of the global population. Zuckerberg also confirmed that Facebook had agreed to pay a record-setting $5 billion fine to the Federal Trade Commission (FTC) for violating consumers' privacy. The FTC settlement also required Facebook to become more accountable for how it handled users' data, which Zuckerberg said would help Facebook set "a new standard" for transparency in its industry. He also welcomed further regulation on election safety, harmful content, and data portability. "I don't believe it's sustainable for private companies to be making so many decisions on social issues," he said.

Zuckerberg's calls for regulation stood in stark contrast to his optimism in Facebook's early days. He had long espoused the belief that connecting people was a way to foster social harmony. Yet a growing number of critics accused Facebook of doing the exact opposite by taking advantage of users' privacy and allowing bad actors to use its platform to cause real-world harm. Facebook tried to mitigate these problems: it hired more content moderators and changed its privacy policy to give users greater clarity and control. But numerous critics remained skeptical.
In March 2019, Zuckerberg announced that the Facebook platform would be shifting from a "public square" model, which focused on sharing public posts across the social graph, to a "digital living room" model, which would focus on smaller, more intimate conversations. However, many questions remained. First, what changes could Facebook make to ease its users' privacy concerns and keep bad actors out of the public square? Second, should Facebook govern more (be more directly engaged in censorship and curation), govern less, or do something in between? And finally, how could Zuckerberg monetize the digital living room? Over the past three years, Facebook's public square model had earned over $120 billion in revenue and a net income of $47 billion, mostly from advertising. On an encrypted platform focused on private communications, could an advertising model work? Or were financial success and good governance mutually exclusive?

Professor David Yoffie and Research Associate Daniel Fisher prepared this case. This case was developed from published sources. Funding for the development of this case was provided by Harvard Business School and not by the company. HBS cases are developed solely as the basis for class discussion.

Facebook: A Brief Overview

Mark Zuckerberg was a sophomore at Harvard when he launched thefacebook.com ("Facebook" hereon) on February 4, 2004. Zuckerberg said that the idea was to make an online version of Harvard's class directory, or "face book." "I think it's kind of silly that it would take the University a couple of years to get around to it," Zuckerberg told the Harvard Crimson. "I can do it better than they can and I can do it in a week."

The original Facebook site was simple: users could create profiles with personal information like their course schedule, search for other profiles by looking up courses or social organizations, and link their profiles to their friends' profiles. Within 24 hours of its launch, more than 1,000 Harvard students registered to join the site. Despite its simplicity, or perhaps because of it, many early users found Facebook addictive and regularly checked the site to keep up with their friends. Having lots of friends on Facebook became something of a status symbol, and students began competing to add as many as possible. In the process, Facebook became, in Zuckerberg's words, a complex "social graph" that mirrored social connections in the real world.

Facebook quickly expanded to other universities, and by May 2005, the site had 2.8 million registered users and a $13 million investment from Accel Partners, a Palo Alto venture firm. In September 2006, Facebook made the site available to everyone over the age of 13, the age limit set by United States federal law for collecting personal information online. Over the next decade, Facebook's growth was roughly linear, adding around 59 million monthly active users per quarter.

As Facebook grew, it added new features to the site. Among the first big changes was the addition of the News Feed in 2006, a feature that aggregated content posted by users' friends. In 2007, it launched the Facebook Platform, a set of tools and services that enabled third parties to develop applications (apps) like games and personality quizzes for Facebook, as well as gather data from users who granted access to their profile information. Afterwards, Facebook added instant chat, video, livestreaming, classified ads, and the capability to interact with content by posting "likes" and "reactions."
Facebook received several acquisition offers, including a $75 million offer from Viacom in 2005 and a $1 billion offer from Yahoo in 2005, but Zuckerberg rejected all of them. By 2017, its number of monthly active users had surpassed two billion. For 2018, it reported $55.8 billion in revenue and net income of $22.1 billion (see Exhibit 1).

Facebook's Business Model

Facebook earned revenue primarily through targeted advertising. It first offered advertisements in 2004 through a service called Flyers, which allowed advertisers to send advertisements to particular college campuses; later versions allowed advertisers to target more specific audiences by specifying an age range or keywords to look for in user profiles. As technology evolved, Facebook enabled advertisers to target based on personal, location, and/or behavioral data, which made Facebook one of the most valuable resources for advertisers on the web (see Exhibit 8). As of 2019, Facebook had more than 7 million advertisers on its platform, and its average revenue per user (ARPU) in the U.S. had risen from less than $10 in 2015 to more than $33 (see Exhibit 7).

Over the years, Facebook acquired dozens of companies and integrated some of their products into its site. Facebook's three largest purchases were Instagram, a photo-sharing mobile application, for $1 billion in 2012; Oculus VR, a virtual reality (VR) hardware and software developer, for $2 billion in 2014; and WhatsApp, a mobile messaging application, for $19 billion in 2014. Facebook also acquired Onavo, a data security application, to track which applications were becoming popular with users. If Facebook felt a new application posed a threat, it would make an acquisition, as it did in the case of WhatsApp, or it would copy the application's core feature, as it did with Snapchat by adding 24-hour "stories" to Facebook and Instagram. For a time, "Don't be too proud to copy" was an informal motto at Facebook. Just before going public in 2012, Zuckerberg warned Facebook employees: "If we don't create the thing that kills Facebook, someone else will ... The internet is not a friendly place. Things that don't stay relevant don't even get the luxury of leaving ruins. They disappear."

Criticism of Facebook

From its earliest days, Facebook received criticism for its curation of content and for violating users' privacy. Critics assailed Facebook for removing and/or failing to remove certain content. In recent years, the critique focused on "fake news," a nebulous category of content commonly associated with political misinformation masquerading as legitimate news stories. In the case of privacy, Facebook received criticism for making it too easy for advertisers, third-party application developers, or other users to access users' personal information. Concern over these two issues was global: critics accused Facebook of being complicit in the spread of misinformation and hateful rumors that sparked deadly violence in several countries, including alleged acts of genocide committed by the Myanmar government. In addition, Facebook routinely faced lawsuits concerning privacy violations in several countries, as well as regulatory scrutiny from government agencies. These issues got everyone's attention following the 2016 U.S. presidential election.
Leading up to the election, Facebook pages that shared politicized misinformation became extremely popular, and journalists revealed that some of it was produced by the Russian government to support candidate Donald Trump. Russians were also buying targeted political ads. Then, in March 2018, the Guardian and the New York Times published articles about Cambridge Analytica, a small data analytics firm that had gained access to the data of tens of millions of Facebook users and was later hired by Donald Trump's presidential campaign. One month after the Cambridge Analytica stories broke, Zuckerberg appeared before the U.S. Senate. During his testimony, he publicly apologized:

"It's not enough to just give people control over their information. We need to make sure that the developers they share it with protect their information, too ... We didn't take a broad enough view of our responsibility, and that was a big mistake, and it was my mistake, and I'm sorry."

One year later, in May 2019, the New York Times published an op-ed by Chris Hughes, a Facebook cofounder, titled "It's Time to Break Up Facebook." According to Hughes, "Mark is a good, kind person. But I'm angry that his focus on growth led him to sacrifice security and civility for clicks ... An era of accountability for Facebook and other monopolies may be beginning."

The Issues: Curating Content

Like many other online platforms, Facebook curated the content that its users posted to make its platform more welcoming. According to its Community Standards, its goal was to "err on the side of giving people a voice while preventing real world harm and ensuring that people feel safe in our community." To meet this goal, Facebook banned content falling into nine categories: (1) Adult Nudity and Sexual Activity, (2) Bullying and Harassment, (3) Child Nudity and the Sexual Exploitation of Children,
(4) Fake Accounts, (5) Hate Speech, (6) the Sale of Drugs or Firearms, (7) Spam, (8) Terrorist Propaganda, and (9) Violence and Graphic Content (see Exhibit 4).

Originally, Facebook's guidelines for moderators (the people who screened posts for inappropriate content) were brief and vague. According to former Safety Manager Charlotte Willner, the instructions were essentially: "if it makes you feel bad in your gut, then go ahead and take it down." Facebook moved to fixed guidelines in 2009 for two reasons: it needed to be sure that the members of its growing moderation team were removing content in a consistent and predictable manner, and it needed to be able to explain its curation decisions in response to criticisms, which were becoming more frequent. But Facebook struggled to translate the subtleties of its norms into hard-and-fast rules. Occasional leaks to the press revealed that Facebook's internal guidelines were a patchwork of thousands of PDFs, PowerPoint slides, and Excel sheets, reflecting a long history of ad hoc responses.

Hate speech was among the most challenging categories, and Facebook's attempts to define the concept often produced bizarre results. For example, one content curation rule required moderators to remove hateful speech directed at "white men," but not at "female drivers" or "black children," because the latter two groups were too specific. The inherent ambiguity of categories like hate speech also impeded the development of algorithms for detecting violations. Algorithms required large, consistently labeled datasets to "learn" how to identify different kinds of content. Categories like hate speech presented two challenges. First, examples of hate speech were numerous, diverse, and highly context-dependent. This meant that the amount of data required to train algorithms was enormous.
The second challenge was that people often disagreed about what constituted hate speech, making it difficult to label data consistently. Algorithms for detecting hate speech were often inaccurate, which meant most decisions about hate speech fell to human moderators (see Exhibit 3). Although algorithms struggled less with other categories of violations, moderators often made the final call on whether to remove content. By 2019, Facebook employed around 30,000 moderators worldwide, and the average moderator viewed hundreds of pieces of content every day. Because much of the content was shocking and violent, many moderators were reportedly traumatized, leading to high turnover.

Misinformation and Fake News

Zuckerberg's philosophy had been to refrain from making content decisions based upon "the truth." Because Facebook was meant to be a platform where people could share opinions, it could not be "in the business of ... deciding what is true and what is not true," as Zuckerberg put it in 2018. This first became an issue in 2009, when Jewish groups criticized Facebook for refusing to remove content promoting Holocaust denial. Facebook's response was that it could not determine whether the posts were anti-Semitic or merely ignorant, and it needed to err on the side of free expression.

However, Facebook was never able to avoid such decisions completely, particularly after misinformation on the site caused real-world violence. Starting in 2011, rumors of child abductors spread on Facebook and WhatsApp sparked mob violence in countries like Mexico and India, claiming dozens of lives. In Myanmar, the government used Facebook to spread rumors about the Rohingya Muslim minority. After more than 650,000 Rohingya Muslims fled the country, the chairman of the UN Independent International Fact-Finding Mission on Myanmar stated in early 2018 that Facebook had played a "determining role" in the crisis.
The issue that prompted the most concern was political misinformation. Pages sharing sensational and misleading political content became extremely popular during the 2016 U.S. presidential election. Some of these political pages were run by Macedonian teenagers, and some were run by organizations funded by Yevgeny Prigozhin, a Russian businessman with strong ties to the Russian government.

Trump's victory in 2016 was a turning point for Facebook. Critics argued that Facebook enabled foreign powers to unfairly influence the election by spreading pro-Trump propaganda. Zuckerberg's first response was to call the theory a "pretty crazy idea," citing internal investigations that had concluded a "very small volume" of the content on Facebook was fake news. Facebook also found that almost every Facebook user in the U.S. had one or more friends who were members of a different political party, which cut against the popular theory that social media platforms like Facebook produced an "echo chamber" by isolating users from opposing viewpoints and reinforcing their biases. Instead, Zuckerberg claimed the problem was users, who were ultimately responsible for clicking on harmful content. "I don't know what to do about that," he said.

However, following the 2016 election, Facebook instituted new measures to limit the spread of misinformation on its platforms. On Facebook, it partnered with fact-checkers, who affixed warnings to dubious content, and its News Feed algorithms deprioritized content Facebook had identified as inaccurate. On WhatsApp, where data was encrypted, Facebook could not observe or moderate the content.
Instead, Facebook instituted a hard limit on the number of groups a message could be forwarded to, hoping to reduce the spread of misinformation indirectly.

Facebook never banned misinformation outright on any of its platforms, and it would remove instances of misinformation only for reasons other than their factual inaccuracy. For example, in August 2018, it removed hundreds of pages, groups, and accounts originating from Russia and Iran for "coordinated inauthentic behavior," which it defined as "working together to mislead others about who they are or what they are doing."

One especially challenging form of misinformation was "deepfakes": videos altered by machine learning tools to make the people in them do and say things they had not. One deepfake that went viral on Instagram was a video of Zuckerberg himself, in which he appears to lay bare his plan for total global domination. Facebook decided to keep the video up, but Zuckerberg said that it was "currently evaluating what the policy [on deepfakes] needs to be," and noted that there was a "very good case" for treating them differently from other kinds of misinformation.

Another challenge was misinformation in political advertisements. Facebook received criticism in September 2019 for refusing to take down an ad run by Trump's reelection campaign that made false claims about Joe Biden. Following the controversy, Twitter banned political advertisements on its own platform, and Google limited the forms of targeting that political advertisers could use. Under pressure, Facebook hinted at the possibility of changing its approach to political ads, but Zuckerberg remained steadfast. "What I believe is that in a democracy, it's really important that people can see for themselves what politicians are saying so they can make judgments.
And, you know, I don't think that a private company should be censoring politicians or news," he said.

Legal Concerns: Fake News Laws, Free Speech, and Section 230

The 2016 U.S. presidential election was not the only election affected by misinformation: elections in Mexico, Brazil, Indonesia, France, and elsewhere suffered from similar fake news attacks. Consequently, many countries passed "anti-fake news" laws that allowed government agencies to demand that platforms, including Facebook, remove misinformation, although free speech activists generally panned these laws as thinly veiled attempts to quash dissent.

Within the U.S., conservative politicians regularly criticized Facebook, Twitter, and others for failing to fulfill the ideal of free expression enshrined in the First Amendment of the United States Constitution. Republican Senators Ted Cruz and Josh Hawley were among the most outspoken on this issue. According to them, Facebook actively censored content to impose an anti-conservative bias on its users. "What makes the threat of political censorship so problematic is the lack of transparency, the invisibility, the ability for a handful of giant tech companies to decide if a particular speaker is disfavored," said Cruz.

To address the issue, several Republicans advocated revising Section 230 of the Communications Decency Act of 1996 (CDA), which protected user-generated content platforms from the legal responsibilities of publishers, including liability for potentially tortious content (see Exhibit 5). The section was a response to a New York Supreme Court decision that found an online forum liable for libelous posts because it exercised "editorial control" by enforcing comment guidelines.
Fearing that legal threats could stifle the growth of the internet, lawmakers added Section 230 to specify that online service providers could not be classified as publishers, even if they moderated content. According to Ron Wyden, a Democratic Senator from Oregon who wrote portions of Section 230:

"I wanted to make sure that internet companies could moderate their websites without getting clobbered by lawsuits. I think everybody can agree that's a better scenario than the alternative, which means websites hiding their heads in the sand out of fear of being weighed down with liability."

In June 2019, Hawley introduced legislation to revise Section 230. To enjoy legal protection in the future, Hawley wanted tech companies to provide "clear and convincing" evidence that they did not moderate content in a "politically biased manner." Democratic lawmakers, including Wyden and House Speaker Nancy Pelosi, also called for revisions of Section 230. Wyden argued that companies like Facebook were not doing a good enough job keeping "slime" off their platforms. "[If] you don't use the sword, there are going to be people coming for your shield," he warned.

The Content Curation Paradox

Common wisdom was that platforms needed to enforce community guidelines; otherwise, they ran the risk of shocking, graphic, or risqué content driving the majority of users away. (In the words of Micah Schaffer, the technology advisor who wrote YouTube's first community guidelines, "Bikinis and Nazism have a chilling effect.") But Facebook also found the opposite. According to Zuckerberg: "our research suggests that no matter where we draw the lines ... [as] content gets close to that line, people will engage with it more on average, even if they tell us afterwards they don't like the content ... [This pattern] applies ...
to almost every category of content."

According to the results of a study released in 2018, content that contained false information spread farther and faster than accurate news stories, often by an order of magnitude. In an analysis of partisan news pages that were popular in 2016, BuzzFeed News similarly found that false information received the most engagement from users. These observations cut against the optimistic "self-cleaning oven" theory of social media, which held that users would act as a check on one another, keeping each other civil and calling out one another's ignorance and biases.

Amendment I of the United States Constitution stated that "Congress shall make no law ... abridging the freedom of speech, or of the press." The Supreme Court of the United States held that the Amendment prohibited only governmental, not private, abridgement of speech, unless the private entity exercised "powers traditionally exclusively reserved to the state" (see Jackson v. Metropolitan Edison Co.).

The most shocking example of this conundrum occurred on March 15, 2019, when a gunman livestreamed to Facebook the first of two deadly terrorist attacks on mosques in Christchurch, New Zealand. In an attempt to get as much attention as possible, the gunman brandished weapons painted with the names of white supremacists, and he made joking references to various online communities, including fans of the popular YouTuber PewDiePie. The livestream concluded without being removed because not a single user reported it. In the 24 hours following the attack, users re-uploaded the video over 1.5 million times.

The Issues: Privacy

In 2006, Facebook made the site available to everyone over the age of 13. According to its privacy policy at the time,
the site collected two types of information: personal information that users knowingly submitted (a name, for example) and aggregated browsing data like browser types and IP addresses. Facebook said that it would share users' personal information only with groups they had specifically identified in their privacy settings, but it also reserved the right to share personal information with business partners. In addition, Facebook allowed third-party advertisers to download "cookies" to users' computers to track their browsing behavior.

Although Facebook was no longer the exclusive, insular community it once was, Zuckerberg wanted to preserve the same sense of security that motivated users to share personal information. He said, "The problem Facebook is solving is this one paradox. People want access to all the information around them, but they also want complete control over their own information."

Facebook faced its first controversy over privacy in 2006, when it launched the News Feed. Within 24 hours, hundreds of thousands of Facebook users joined anti-News Feed groups on Facebook to protest the new feature, which they felt robbed them of autonomy over how they shared information about themselves. Zuckerberg responded to the controversy in a blog post titled "Calm down. Breathe. We hear you." "[We] agree, stalking isn't cool," he said, "but being able to know what's going on in your friends' lives is." The controversy died out soon after, and user growth remained strong.

Facebook continued to receive criticism for its management of users' privacy.
Typically, the criticism was that it robbed users of agency by failing to ask for meaningful consent to collect and share information, and by providing confusing or incomplete information about how their information was being shared under specific privacy settings. Zuckerberg was comfortable subverting users' privacy expectations because he believed that users did not care as much about privacy as critics thought, and that they would care even less about privacy in the future. "We view it as our role in the system to constantly be innovating and be updating what our system is to reflect what the current social norms are," he said. However, these updates did not reflect many countries' privacy regulations: between 2009 and 2019, regulators in Canada, the U.S., and several countries in Europe concluded that Facebook had violated its users' right to privacy.

One of Facebook's most consequential run-ins with regulators was its settlement with the FTC in 2011. The FTC began its investigation after organizations like the Electronic Frontier Foundation (EFF) submitted complaints that Facebook was tricking users into sharing more personal information than they otherwise would have by presenting them with misleading privacy settings controls. After investigating, the FTC accused Facebook of making misleading statements about users' data privacy. In Facebook's 2011 settlement with the FTC, the company was barred from making further "misrepresentations" about users' privacy. In a blog post, Zuckerberg said, "We will continue to improve the service, build new ways for you to share and offer new ways to protect you [...]"

[...] over Cambridge Analytica led U.S. government officials to question Facebook's compliance with its privacy commitments.
Cambridge Analytica

In 2014, a University of Cambridge lecturer named Alexander Kogan paid around 270,000 Facebook users through Amazon Mechanical Turk to take a personality quiz on a Facebook application he had developed. To access the application, the users first needed to agree to share their personal data, along with the personal data of their friends. In this way, Kogan gained access to the profile data of roughly 87 million Facebook users. He shared a large portion of that data with the political consulting group Cambridge Analytica, a violation of Facebook's terms of service for third-party app developers.

In 2015, a reporter for the Guardian obtained documents revealing that Cambridge had data from millions of Facebook profiles and was working with Ted Cruz's presidential campaign to target advertisements. When asked to comment, a Facebook spokesman said that the company was "carefully investigating the situation." Eventually, Facebook discovered that Kogan had also shared data with research colleagues and with Eunoia, a data analytics company run by former Cambridge employee Chris Wylie. Facebook demanded that both companies delete the data, and both certified that they did. "[L]iterally all I had to do was tick a box and sign it and send it back, and that was it," said Wylie. "Facebook made zero effort to get the data back." After Cruz dropped out of the race, Cambridge began working for the Trump campaign. Both Cambridge and the Trump campaign denied ever using Kogan's Facebook data to target ads.

Following Trump's election, several publications reported that Cambridge had played a decisive role in Trump's victorious 2016 campaign. Then, in March 2018, the New York Times and the Guardian both ran stories featuring Wylie, who called the company a "psychological warfare mindf**k tool." The New York Times also reported that Cambridge Analytica retained hundreds of gigabytes of Facebook data on its servers.
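As a rough illustration of how the friend-data permission multiplied Kogan's reach, dividing the two approximate figures above gives the average number of profiles exposed per quiz taker. This back-of-the-envelope ratio is not a figure reported in the case; it treats both numbers as exact and assumes the 87 million total includes the quiz takers themselves.

```latex
\frac{87{,}000{,}000\ \text{profiles}}{270{,}000\ \text{quiz takers}} \approx 322\ \text{profiles per quiz taker}
```

On these assumptions, each person who consented to the quiz exposed roughly 320 other profiles on average.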
A few days later, Zuckerberg announced that Facebook would perform a "thorough audit" of all apps that might have access to large amounts of user data. At the end of March, it closed down a feature called Partner Categories, which used data from third-party data brokers to target advertisements. And in April, it began blocking developers from accessing the data of users who had not used their apps for more than 90 days. Facebook also introduced new privacy tools for users. For example, in July 2019, Facebook launched a tool that explained to users why particular advertisements were targeted at them and what third-party data brokers were involved. Users could also opt out of particular ad campaigns.

It was unclear what effect the news of Cambridge's actions had on users. According to a survey conducted by The Atlantic, 42% of users changed their behavior on Facebook after hearing about Cambridge Analytica, and 25% of those who changed their behavior became more careful about what they posted. However, after conducting their own survey, Piper Jaffray analysts found that most Facebook and Instagram users had been "unfazed by the negative news flow."

At the time, Facebook's API for third-party apps allowed users to volunteer their friends' profile data along with [...]

The response from legislators and regulators in the U.S. was less ambiguous: many state legislatures introduced data privacy bills, and the FTC began another investigation into Facebook. In July 2019, the FTC announced that it had reached a settlement with Facebook, which would pay a fine of $5 billion, the largest privacy or data security penalty ever imposed worldwide, for "deceiving users about their ability to control the privacy of their personal information."
Legal Concerns: The General Data Protection Regulation (GDPR) and Beyond

Perhaps Facebook's most important legal challenge related to privacy was the General Data Protection Regulation (GDPR), an expansive regulation passed by the European Union (EU) in April 2016 and implemented in May 2018. The regulation granted EU citizens the right to be forgotten and the right to download all of their data, as well as other privacy guarantees. EU regulators could issue substantial fines to companies that violated these policies. In the years leading up to the GDPR's implementation, Facebook made numerous efforts to prepare. For example, it sent out a notice to inform users of their privacy settings and how to opt out of various features. Nevertheless, on the day the GDPR became enforceable, privacy advocates filed complaints that sought to fine Facebook up to 3.9 billion euros. If a company was found in violation of the GDPR, the EU could levy a maximum fine of 4% of the company's global revenue for the previous year ($2.23 billion in Facebook's case).

Facebook also faced a number of lawsuits in the U.S. One of the largest was filed in 2015 by residents of Illinois, who accused Facebook of violating a state privacy law by allowing its "Tag Suggestions" feature to use facial recognition tools on photos of them without asking for explicit consent. In August 2019, a federal appeals court upheld a district judge's decision to deny Facebook's motion to dismiss, exposing the company to damages that could total more than $7 billion.

Competitors and Digital Governance

Facebook was not the only social network that faced challenges in governing its platform. Twitter, YouTube, WeChat, LinkedIn, and others also struggled with governance issues.
Twitter

First launched in 2007, Twitter was a "microblogging" social media platform that allowed registered users to post and share text posts, or "tweets," which were originally limited to 140 characters (see Exhibit 2).93 In 2017, Twitter expanded the limit to 280 characters.94 Twitter's original purpose was to allow users to share small updates about their lives. As it grew more popular, however, journalists began using the service to give real-time news updates, and political candidates used it to campaign. By 2019, Twitter had around 330 million monthly active users.95

Twitter first unveiled the "Twitter Rules" in 2009.96 The Rules outlined 10 violations, including impersonation, publishing private information without permission, threats, spam, and pornographic profile pictures.97 Over the years, it added rules and eventually sorted them into three categories: Safety, Privacy, and Authenticity. Twitter's Safety rules banned threats of violence, the promotion of terrorism, harassment, and other forms of harmful content. Its Privacy rules banned posting private information and intimate photographs without permission. Its Authenticity rules banned spam, impersonating other users, and spreading misinformation to influence elections.98

From the very beginning, Twitter was criticized for failing to enforce its rules against abuse and harassment. Twitter's founders were strongly committed to freedom of expression. As a result, they were hesitant to actively curate tweets, and they set a high bar for removing tweets and users.99 Many of Twitter's critics were women subjected to misogynistic harassment campaigns on the site. After one woman published an article in 2015 about being harassed on Twitter, CEO Dick Costolo admitted that Twitter "suck[ed] at dealing with abuse and trolls on the platform and we've sucked at it for years."100 But Twitter struggled to improve.
According to a 2018 Amnesty International study, the average female journalist or politician on Twitter received an abusive tweet every 30 seconds, and Black women were disproportionately targeted.101 Twitter was also criticized for failing to remove conspiracy theorists and white supremacists from its platform.102 In June 2019, Twitter announced that it would not remove tweets that violated its rules if they were of "public interest," such as tweets by Trump.103 Instead, such tweets were hidden under warning labels explaining that they were abusive.

YouTube

Google's YouTube was a video-sharing website that launched in 2005. After explicit content began flooding the site in 2006, YouTube put together a small team to screen videos.104 A decade later, YouTube's moderation team included thousands of Google employees, and with the help of algorithms that flagged inappropriate content, it removed millions of videos every year.105 YouTube first created rules for users in 2007, banning pornography, criminal acts, violence, threats, spam, and hate speech.106 It later added rules banning impersonation, sharing private information about someone without their consent, and encouraging harmful or dangerous acts.107

Like Facebook and Twitter, YouTube was regularly criticized for how it curated content, particularly for failing to remove hateful content. YouTube was also criticized for spreading misinformation. Some theorized that its recommendation algorithms, which drove 79% of viewing time on the site, were inadvertently indoctrinating viewers in far-right conspiracy theories by regularly recommending videos espousing them.108 In response to the criticism, YouTube changed its algorithms to stop recommending conspiracy theory videos, and it began adding links to relevant Wikipedia pages under videos about hot-button issues like climate change and school shootings.109

One somewhat unique challenge that YouTube faced was how to properly curate content for young children.
Although YouTube insisted that it had "never been for kids under 13," 12 of the 20 most-watched videos on YouTube were aimed primarily at children.110 Seeing the opportunity, many content creators specifically targeted children, producing videos featuring nursery rhymes, bright colors, and popular characters like Spider-Man. The vast majority of these videos were of extremely poor quality, with little educational value, and many featured violence and sexual imagery.111 To address the problem, YouTube introduced YouTube Kids, an app featuring content screened by algorithms to remove material that was not child-friendly. However, inappropriate videos continued to slip through the cracks.112 In April 2018, YouTube introduced a new option that allowed parents to limit YouTube Kids to showing only videos that had been approved by human moderators.113

Microsoft

Famous for its computer operating systems, software tools, and internet browsers, Microsoft was the world's largest software company.114 Over its decades-long history, critics repeatedly attacked Microsoft for violating users' privacy. For example, the Irish Data Protection Commissioner and the [...] emails and sentences checked by its spelling software.115 In each case, Microsoft responded quickly to the concerns raised, and it never experienced a privacy scandal on the scale of Cambridge Analytica.116

Microsoft also received little criticism for how it governed LinkedIn, a professional social network it bought in 2016.117 With almost 700 million users, LinkedIn had community guidelines that were largely similar to Facebook's, and it employed filters and "machine-assisted" detection systems to identify inappropriate content.118 According to LinkedIn's Editor-in-Chief Dan Roth, "You talk on LinkedIn the same way you talk in the office. There are certain boundaries around what is acceptable . . .
This is something that your boss sees, your future boss, people you want to work with in the future."119

WeChat

WeChat was a multipurpose social media application used primarily in China. It could be used to message friends, make phone calls, hold videoconferences, stream live video, and pay for goods and services. In 2019, it had more than one billion users, who spent an average of one-third of their day interacting with the service.120 Unlike Facebook, it did not rely on advertising. Instead, it generated revenue primarily through commissions paid by services using its mobile payments system.121

But like Facebook, WeChat actively screened and removed content. Chinese law dictated that all digital platforms remove "sensitive" content.122 For posts to WeChat Moments, the WeChat equivalent of the Facebook News Feed, it implemented real-time image filtering to scan for sensitive text and to compare images to a blacklist. Because the process was computationally expensive, it could take up to several seconds for content to be censored. For person-to-person chats, WeChat used a hash index, which stored shortened "fingerprints" of images, to screen and censor content more quickly. According to one study conducted by Citizen Lab, a research group at the University of Toronto, WeChat's method of curating content often resulted in "over-censorship."123 For example, in certain cases it would remove not only negative references to specific government policies but also neutral references, including screenshots of official announcements from government websites.

Facebook's Future

In 2019, Facebook announced two significant changes. First, it was shifting to a "digital living room" model that would prioritize privacy. Second, it would establish an independent board to make decisions about how Facebook curated content.
The Digital Living Room

During Facebook's earnings call for the second quarter of 2018, Zuckerberg introduced a new "family-wide" metric that counted individual users of at least one of Facebook's apps (Facebook, WhatsApp, Instagram, and Messenger). As of June 2018, that number stood at 2.5 billion. According to Facebook CFO David Wehner, this family-wide number better reflected the size of the Facebook community than Facebook's daily active user count. That count had grown by only 1.54% during the quarter, less than half its usual quarterly rate of growth.124 Investors were unmoved by this argument. On the day Facebook released its report for the second quarter of 2018, its stock fell by 19%, and its market capitalization shrank by $119 billion. It was the largest single-day drop in market value in U.S. stock market history.125

The introduction of the family-wide metric was a harbinger of greater changes to come. In March 2019, Zuckerberg announced that Facebook planned to integrate Instagram, WhatsApp, and Messenger to create a combined platform for private, encrypted messaging. Zuckerberg explained that Facebook was responding to users' shift away from sharing and communicating openly in the "town square" toward more personal, impermanent "living room" discussions:

Today we already see that private messaging, ephemeral stories, and small groups are by far the fastest growing areas of online communication . . . Many people prefer the intimacy of communicating one-on-one or with just a few friends. . . . In a few years, I expect future versions of Messenger and WhatsApp to become the main ways people communicate on the Facebook network.126

One month later, during its annual developer conference, Facebook unveiled its redesign of the site, which heavily featured groups and private messaging.
Almost all of the presenters at the conference repeated the phrase "the future is private."127

Facebook's new living room model presented two significant challenges. The first was that it was unclear how advertising would work, because the News Feed was both the venue for targeted advertisements and an important source of data for them. Zuckerberg admitted that having access to less information would make targeted advertisements "somewhat less effective." However, he was confident that Facebook would be able to work out a system for targeting advertisements using a fraction of the data.128 As for the advertisements themselves, Facebook was exploring a number of options, including inbox ads and sponsored messages for Messenger. It was also possible that Facebook would go the way of WeChat and begin making revenue from transactions on the platform. This possibility became more plausible after Facebook proposed its own cryptocurrency, Libra, in June 2019.129

The second challenge the digital living room presented was content curation. If users increasingly shifted to encrypted messaging, Facebook would need new tools to stop the spread of false and harmful content. In an interview, Zuckerberg said he was "much more worried" about the tradeoffs between privacy and safety than about anything else, but he provided no specifics when asked how Facebook would address them.130 Facebook was already addressing the problem on WhatsApp, for example by limiting the number of times a message could be forwarded, but it was unclear how successful its efforts had been. According to an internal study, the forwarding limit had reduced the total number of forwarded messages on WhatsApp by 25%,
and another study found that it delayed the spread of content by up to two orders of magnitude. However, the second study also found that content designed to be viral (e.g., alarming conspiracy theories) was largely unaffected by the forwarding limit. The authors recommended that WhatsApp develop a "quarantine approach" that directly limited the forwarding of specific messages or accounts.131

There was also reason to be concerned about Instagram. According to one study published in December 2018, Russian efforts to influence the 2016 U.S. election had actually been more successful on Instagram than on Facebook. Although Russian posts reached fewer people on Instagram, Instagram users interacted with these posts almost 2.5 times more than Facebook users did.132 Ahead of the 2020 election, Facebook began making changes to Instagram to limit the spread of false information. These included giving users the ability to flag false information, implementing image-detection algorithms to catch previously debunked content, rolling out more fact-checkers in the U.S., and removing debunked content from its "Explore" tab and search results.133

The Oversight Board

During an interview in April 2018, Vox founder Ezra Klein asked Zuckerberg whether Facebook had "just become too big and too vast and too consequential for normal corporate governance structures."134 In response, Zuckerberg said that he wanted "to create a governance structure around the content and [...] shareholders might want." He added that a key component of that governance structure would be a system for appealing content decisions, possibly with an independent board "almost like a Supreme Court" giving the final say.135 Later, in November, Zuckerberg announced that Facebook was planning to create an independent board for reviewing its content decisions. In January 2019, Facebook released a draft charter for the board to be reviewed by academics,
activists, and Facebook users.136 In September 2019, Facebook released the final draft of the board's charter and announced that it planned to have an appeals system up and running by 2020.137 According to its charter, the Oversight Board would be a body of no fewer than 11 members chosen by an independent trust to serve a maximum of three three-year terms.138 The Board would have the authority to review Facebook's content decisions and to instruct Facebook to allow, remove, or take other actions on content. It would also have the responsibility to explain its decisions in plain language by interpreting Facebook's Community Standards in light of Facebook's principles (see Exhibit 6) and international human rights norms. According to Zuckerberg, "The board will be an advocate for our community, supporting people's rights to free expression and making sure we fulfill our responsibility to keep people safe."139

Experts and advocates were cautiously optimistic. According to legal scholar Kate Klonick:

[I]t just seems that making sure this Oversight Board actually works is deeply in Facebook's best interests . . . And it might not work! [I describe it] as trying to retro-fit a skeletal system for a jellyfish. A private transnational company voluntarily creating an independent body and process to oversee a fundamental human right. It's really a very daunting idea that no one has ever tackled before.140

Facebook's Choices

At the highest level, Facebook's options ranged from governing less to governing more. Governing less meant becoming more like WhatsApp. As Facebook told its WhatsApp users:

Your messages are yours. . . . We've built privacy, end-to-end encryption, and other security features into WhatsApp. We don't store your messages once they've been delivered. When they are end-to-end encrypted, we and third parties can't read them.141

In an encrypted world, the platform would have no way of knowing whether a user was sending harmful content to others.
Governance options would be technically limited. The platform could do little more than receive reports from users and/or monitor publicly available information, like users' profile photos, for known examples of harmful content.142 And even if Facebook only moderated content reported by users, it would still need to make tough decisions. Historically, the vast majority of content that users reported on Facebook did not violate Facebook's Community Standards. Instead, users reported content to show disagreement or disapproval.143

At the other extreme, Facebook could monitor the digital living room and curate all content, like WeChat. It could ramp up investment in AI and machine learning, and dramatically expand (perhaps by 5 to 10 times) the number of human moderators around the world. Such an approach would raise questions about privacy, free speech, and censorship. Despite Zuckerberg's consternation over Facebook becoming an arbiter of the truth, this strategy would require third-party fact-checkers to monitor and mark posts to quell the spread of misinformation, especially during elections.

In between these two options, there were probably hundreds of small and large steps Facebook [...] to curate content, while others called for a wholesale reinvention of the company. In May 2018, for example, Facebook chartered an independent Data Transparency Advisory Group (DTAG) to assess and provide recommendations for how Facebook measured and reported its effectiveness in enforcing its Community Standards. The DTAG report emphasized the importance of "procedural justice":

Over the past four decades, a large volume of social psychological research has shown that people are more likely to respect authorities and rules, and to follow those rules and cooperate with those authorities, if they perceive them as being legitimate. . . . Perhaps counterintuitively, this research also shows that people's judgments about legitimacy do not depend primarily on whether authorities give them favorable outcomes. . . . Rather, judgments about legitimacy are more strongly swayed by the processes and procedures by which authorities use their authority.144

To address the spread of misinformation, some experts recommended that social media companies introduce more "friction" into their platforms. Examples included putting a 10-minute gap between when a user submitted content and when it appeared online, or prioritizing local content.145 Such friction would give Facebook more time to catch problematic content and give users the chance to think twice before posting. As one analyst commented, it would be better to "put some cognitive space between stimulus and response when you are in a hot hedonic state, or, as everyone's mom used to put it, 'When you're mad, count to ten before you answer.'"146

At the same time, several critics argued that nothing short of a breakup would solve Facebook's troubles. When Facebook co-founder Chris Hughes made this argument in the New York Times on May 9, 2019, he created a stir. Hughes wrote: "Mark may never have a boss, but he needs to have some check on his power. The American government needs to do two things: break up Facebook's monopoly and regulate the company to make it more accountable to the American people."147

Zuckerberg's Choice?

Mark Zuckerberg controlled a majority of the voting stock at Facebook, which meant that he was responsible for making the choice. On July 24, 2019, Zuckerberg posted a message to his Facebook page:

Our top priority has been addressing the important social issues facing the internet and our company. With our privacy-focused vision for building the digital living room and with today's FTC settlement, delivering world-class privacy protections will be even more central to our vision of the future.
But I also believe we have a responsibility to keep innovating and building qualitatively new experiences for people to come together in new ways. So I've been focused on making sure we can keep executing our proactive roadmap while we work hard to address important social issues . . . Our mission to bring the world closer together is difficult but important, and I'm grateful for the role every one of you plays to help make this happen.148

Ultimately, Zuckerberg had to decide what kind of company he was building. Since the economics of the current business were spectacular, should he only tinker around the edges and avoid threatening the "golden goose"? Should he make a dramatic move toward a privacy-driven, encrypted platform? Should he consider breaking up the company, as his co-founder proposed? Or should he invest heavily in building a deeply curated platform? The future of his company, and perhaps of large swathes of the world, was at stake.

Fixing Facebook: Fake News, Privacy, and Platform Governance 720-400

Exhibit 5 Excerpts from Section 230 of Title V of the Telecommunications Act of 1996 (Communications Decency Act)

(a) Findings
The Congress finds the following:
(1) The rapidly developing array of Internet and other interactive computer services available to individual Americans represent an extraordinary advance in the availability of educational and informational resources to our citizens.
(2) These services offer users a great degree of control over the information that they receive, as well as the potential for even greater control in the future as technology develops.
(3) The Internet and other interactive computer services offer a forum for a true diversity of political discourse, unique opportunities for cultural development, and myriad avenues for intellectual activity ...
(b) Policy
It is the policy of the United States -
(1) to promote the continued development of the Internet and other interactive computer services and other interactive media;
(2) to preserve the vibrant and competitive free market that presently exists for the Internet and other interactive computer services, unfettered by Federal or State regulation...
(c) Protection for "Good Samaritan" Blocking and Screening of Offensive Material
(1) Treatment of Publisher or Speaker
No provider or user of an interactive computer service shall be treated as the publisher or speaker of any information provided by another information content provider.
(2) Civil Liability
No provider or user of an interactive computer service shall be held liable on account of -
(A) any action voluntarily taken in good faith to restrict access to or availability of material that the provider or user considers to be obscene, lewd, lascivious, filthy, excessively violent, harassing, or otherwise objectionable, whether or not such material is constitutionally protected; or
(B) any action taken to enable or make available to information content providers or others the technical means to restrict access to material described in paragraph (1)...

Source: "47 U.S. Code § 230. Protection for private blocking and screening of offensive material," Cornell Law School Legal Information Institute, https://www.law.cornell.edu/uscode/text/47/230#fn002008, accessed August 23, 2019.

Exhibit 6 Facebook's Updated Community Values

The goal of our Community Standards is to create a place for expression and give people voice. Building community and bringing the world closer together depends on people's ability to share diverse views, experiences, ideas and information.
We want people to be able to talk openly about the issues that matter to them, even if some may disagree or find them objectionable. In some cases, we allow content which would otherwise go against our Community Standards - if it is newsworthy and in the public interest. We do this only after weighing the public interest value against the risk of harm, and we look to international human rights standards to make these judgments.

A commitment to expression is paramount, but we recognize the internet creates new and increased opportunities for abuse. For these reasons, when we limit expression we do it in service of one or more of the following values:

Authenticity: We want to make sure the content people are seeing on Facebook is authentic. We believe that authenticity creates a better environment for sharing, and that's why we don't want people using Facebook to misrepresent who they are or what they're doing.

Safety: We are committed to making Facebook a safe place. Expression that threatens people has the potential to intimidate, exclude or silence others and isn't allowed on Facebook.

Privacy: We are committed to protecting personal privacy and information. Privacy gives people the freedom to be themselves, and to choose how and when to share on Facebook and to connect more easily.

Dignity: We believe that all people are equal in dignity and rights. We expect that people will respect the dignity of others and not harass or degrade others.

Our Community Standards apply to everyone around the world, and to all types of content. They're designed to be comprehensive - for example, content that might not be considered hateful may still be removed for violating a different policy. We recognize that words mean different things or affect people differently depending on their local community, language or background. We work hard to account for these nuances while also applying our policies consistently and fairly to people and their expression.
Source: Monika Bickert, "Updating the Values That Inform Our Community Standards," September 12, 2019, https://newsroom.fb.com/news/2019/09/updating-the-values-that-inform-our-community-standards/, accessed September 24, 2019.

Step by Step Solution

There are 3 Steps involved in it

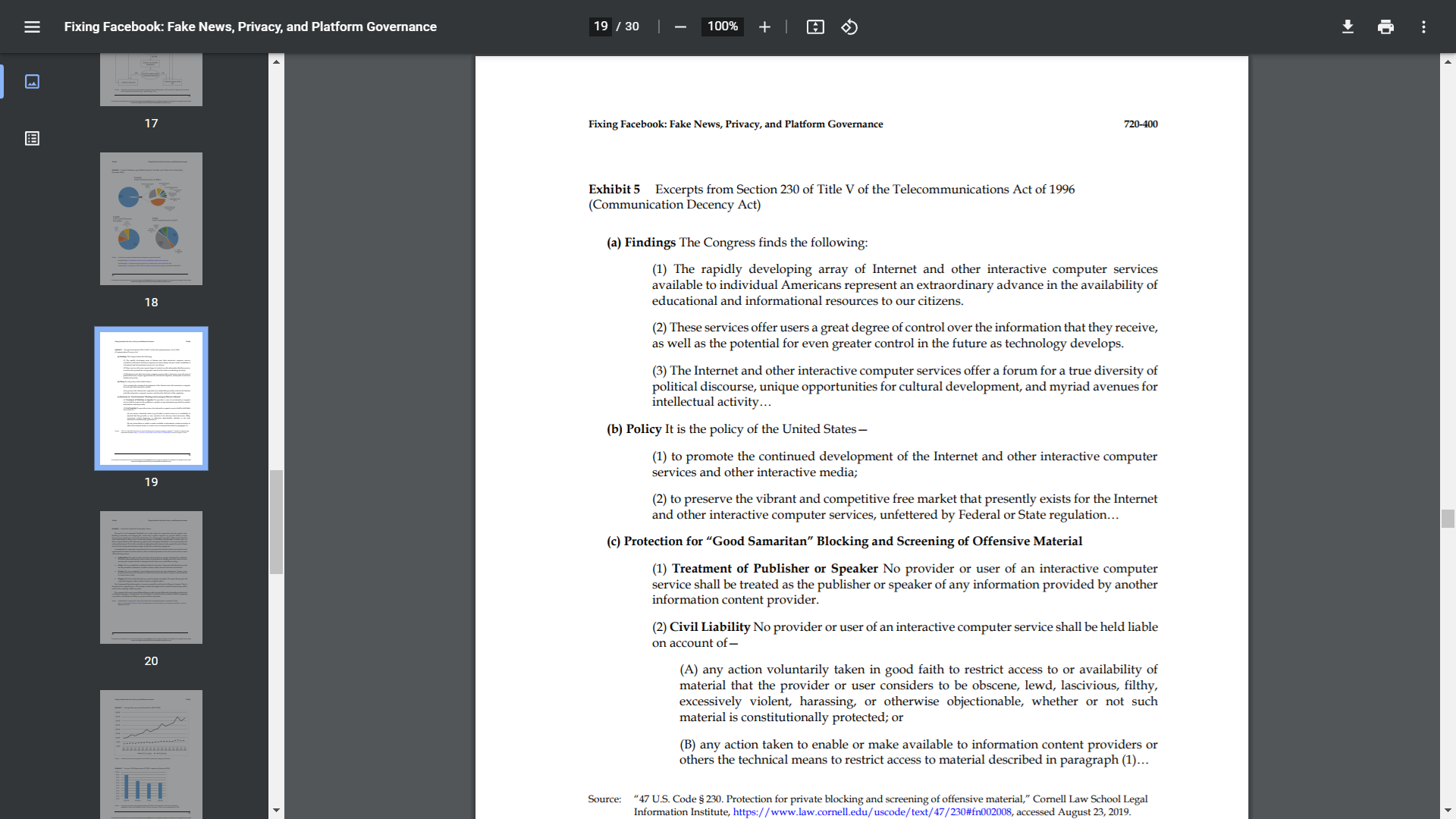

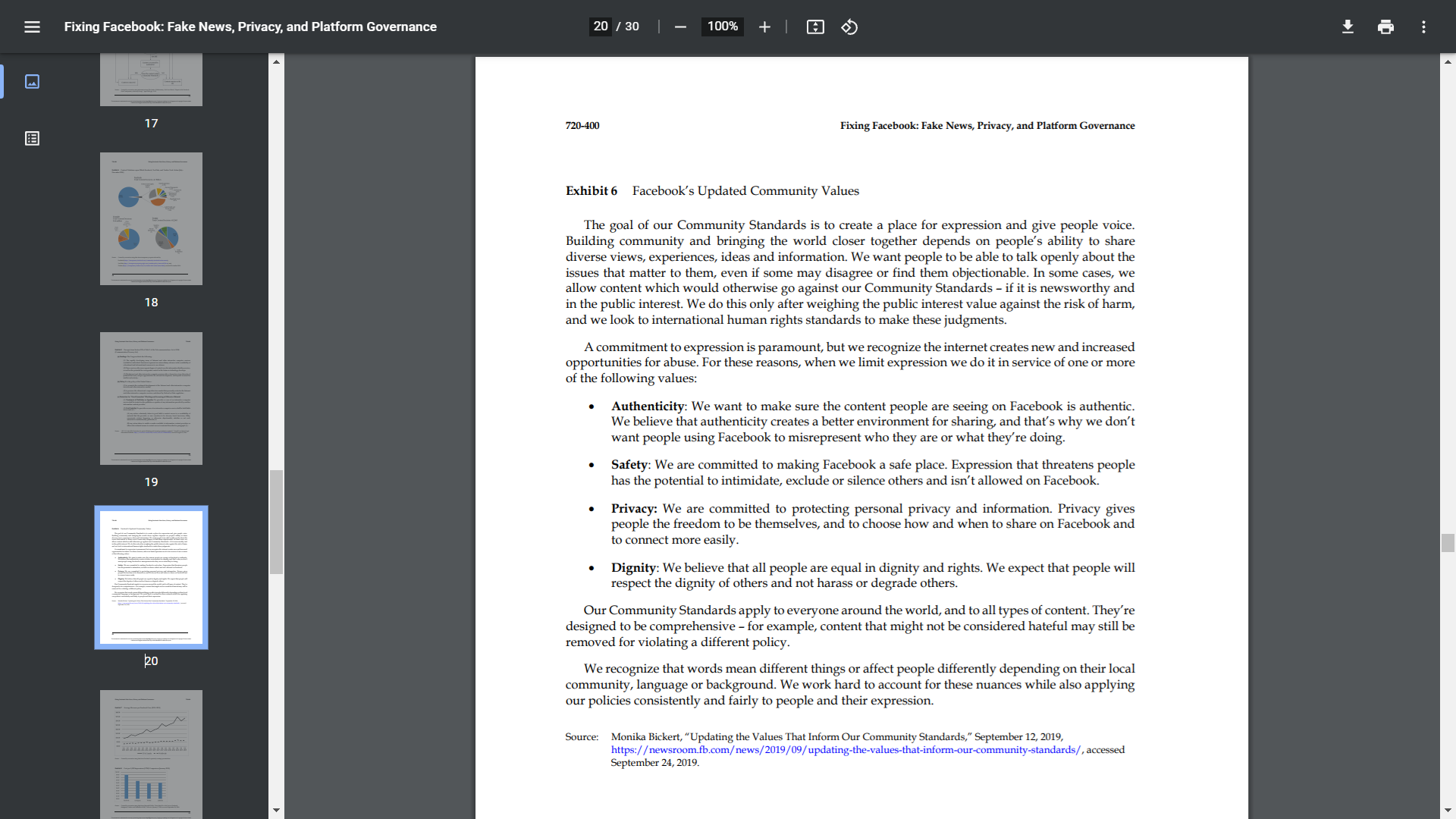

Get step-by-step solutions from verified subject matter experts