Question

I need help with my source code. I am writing Scala code for Spark in a MacBook terminal, and one line gives me this error: "value toDF is not a member of org.apache.spark.rdd.RDD[Array[AnyVal]]". The error comes from the line "val dfWithSchema = transformedRdd.toDF(schema:_*).withColumn("booleanField", col("booleanField").cast("boolean"))". How do I fix this? My code is below. I will leave a thumbs up for a correct answer.

Here's the Scala code to load the block_1.csv file

// Import SparkSession and functions for working with data types
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.functions._
import org.apache.spark.sql.types.{IntegerType, DoubleType}

// Create a SparkSession
val spark = SparkSession.builder().appName("CSV Processing").getOrCreate()

// Load the block_1.csv file as a DataFrame
val df = spark.read.option("header", "true").csv("desktop/scala/linkage/block_1.csv")

// Convert the DataFrame to RDD and remove the heading
val rdd = df.rdd.mapPartitionsWithIndex((index, iterator) => if (index == 0) iterator.drop(1) else iterator)

// Convert the first two fields to integers and other fields except the last one to doubles
val transformedRdd = rdd.map(line => {
  val fields = line.mkString(",").split(",")
  val firstTwo = fields.slice(0, 2).map(_.toInt)
  val middleFields = fields.slice(2, fields.length - 1).map(field => if (field == "?") Double.NaN else field.toDouble)
  val lastField = fields.last.toLowerCase() match {
    case "true" => true
    case "false" => false
    case _ => throw new Exception("Invalid value for boolean field")
  }
  firstTwo ++ middleFields ++ Array(lastField)
})

// Convert the RDD back to DataFrame and apply the schema
val schema = List("field1", "field2") ++ (1 to 8).map(i => s"field$i").toList ++ List("booleanField")
val dfWithSchema = transformedRdd.toDF(schema:_*).withColumn("booleanField", col("booleanField").cast("boolean"))

// Group the fields of type Double by the last field and output an array of statistics
val groupByLastField = dfWithSchema.groupBy("booleanField").agg(
  mean("field3").alias("mean_field3"),
  stddev("field3").alias("stddev_field3"),
  mean("field4").alias("mean_field4"),
  stddev("field4").alias("stddev_field4"),
  mean("field5").alias("mean_field5"),
  stddev("field5").alias("stddev_field5"),
  mean("field6").alias("mean_field6"),
  stddev("field6").alias("stddev_field6"),
  mean("field7").alias("mean_field7"),
  stddev("field7").alias("stddev_field7"),
  mean("field8").alias("mean_field8"),
  stddev("field8").alias("stddev_field8")
).collect()

// Print the output
groupByLastField.foreach(println)
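A possible explanation of the toDF error, offered as a sketch rather than a definitive answer: toDF on an RDD comes from the implicit conversions in spark.implicits._, and those only apply when Spark can find an Encoder for the element type (for example tuples, case classes, or primitives). There is no Encoder for Array[AnyVal], so the compiler reports that toDF is not a member of the RDD. One way around it is to turn each array into a Row and pass an explicit StructType to spark.createDataFrame. In the sketch below, the sample rows, the column names (id1, id2, score1, ...) and the number of double columns are assumptions for illustration and are not taken from block_1.csv. It is meant to be pasted into spark-shell, where the master is already configured. Separately, the schema list in the code above produces field1 and field2 twice, and column names should be unique.

```scala
import org.apache.spark.sql.{Row, SparkSession}
import org.apache.spark.sql.types._

val spark = SparkSession.builder().appName("CSV Processing").getOrCreate()

// Hypothetical stand-in for transformedRdd: two ints, two doubles, one boolean per record
val transformedRdd = spark.sparkContext.parallelize(Seq(
  Array[Any](1, 2, 0.5, Double.NaN, true),
  Array[Any](3, 4, 1.0, 0.25, false)
))

// Assumed number of double columns; adjust it to match the real file
val numDoubles = 2

// Explicit schema with unique column names and the intended types
val schema = StructType(
  Seq(StructField("id1", IntegerType), StructField("id2", IntegerType)) ++
    (1 to numDoubles).map(i => StructField(s"score$i", DoubleType)) ++
    Seq(StructField("booleanField", BooleanType))
)

// Row.fromSeq wraps the mixed-type values; createDataFrame applies the schema
val rowRdd = transformedRdd.map(values => Row.fromSeq(values.toSeq))
val dfWithSchema = spark.createDataFrame(rowRdd, schema)

dfWithSchema.printSchema()
dfWithSchema.show()
```

Applied to the question's code, the same transformedRdd.map(values => Row.fromSeq(values.toSeq)) step should work on the RDD[Array[AnyVal]], and the cast to "boolean" is no longer needed because BooleanType is already part of the schema. Note that Spark's mean and stddev treat NaN as an ordinary value, so a single NaN in a column makes that column's result NaN.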

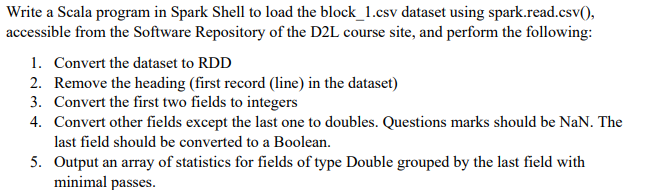

Write a Scala program in Spark Shell to load the block_1.csv dataset using spark.read.csv(), accessible from the Software Repository of the D2L course site, and perform the following:

1. Convert the dataset to RDD.
2. Remove the heading (the first record (line) in the dataset).
3. Convert the first two fields to integers.
4. Convert other fields except the last one to doubles. Question marks should be NaN. The last field should be converted to a Boolean.
5. Output an array of statistics for fields of type Double grouped by the last field with minimal passes.
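Steps 3 to 5 of the assignment can be sketched in plain Scala, independent of Spark, which makes the parsing and the NaN handling easy to check on their own. This is a minimal sketch under assumptions: Record, toDoubleOrNaN, parse and NaNStats are hypothetical names, and the sample lines are made up rather than taken from block_1.csv. The statistics skip NaN values and are built up in a single traversal per group.

```scala
// Hypothetical record type: two integer ids, the double fields, and the boolean flag
case class Record(id1: Int, id2: Int, scores: Array[Double], matched: Boolean)

// "?" marks a missing value and becomes NaN
def toDoubleOrNaN(s: String): Double = if (s == "?") Double.NaN else s.toDouble

def parse(line: String): Record = {
  val fields = line.split(',')
  Record(
    fields(0).toInt,
    fields(1).toInt,
    fields.slice(2, fields.length - 1).map(toDoubleOrNaN),
    fields.last.toBoolean // accepts "true"/"false" in any case, throws otherwise
  )
}

// Running count, mean and variance that skip NaN; add and merge allow partial results to be combined
case class NaNStats(count: Long = 0L, mean: Double = 0.0, m2: Double = 0.0, missing: Long = 0L) {
  def add(x: Double): NaNStats =
    if (x.isNaN) copy(missing = missing + 1)
    else {
      val n = count + 1
      val delta = x - mean
      val newMean = mean + delta / n
      copy(count = n, mean = newMean, m2 = m2 + delta * (x - newMean))
    }

  def merge(other: NaNStats): NaNStats =
    if (other.count == 0) copy(missing = missing + other.missing)
    else if (count == 0) other.copy(missing = missing + other.missing)
    else {
      val n = count + other.count
      val delta = other.mean - mean
      NaNStats(n, mean + delta * other.count / n,
        m2 + other.m2 + delta * delta * count * other.count / n,
        missing + other.missing)
    }

  // Sample standard deviation of the non-NaN values
  def sampleStddev: Double = if (count > 1) math.sqrt(m2 / (count - 1)) else Double.NaN
}

// Made-up sample lines, for illustration only
val lines = Seq("1,2,1.0,?,TRUE", "3,4,3.0,0.5,TRUE", "5,6,?,0.25,FALSE")

// One traversal: fold every record into its group's array of statistics
val statsByGroup: Map[Boolean, Array[NaNStats]] =
  lines.map(parse).foldLeft(Map.empty[Boolean, Array[NaNStats]]) { (acc, r) =>
    val current = acc.getOrElse(r.matched, Array.fill(r.scores.length)(NaNStats()))
    acc.updated(r.matched, current.zip(r.scores).map { case (s, x) => s.add(x) })
  }
```

On an RDD of (matched, scores) pairs, the same add and merge logic could be passed to aggregateByKey as the per-partition and cross-partition functions, so each group's statistics would come out of a single pass over the data.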
