Implement this in C++ please! I'll appreciate it very much!!

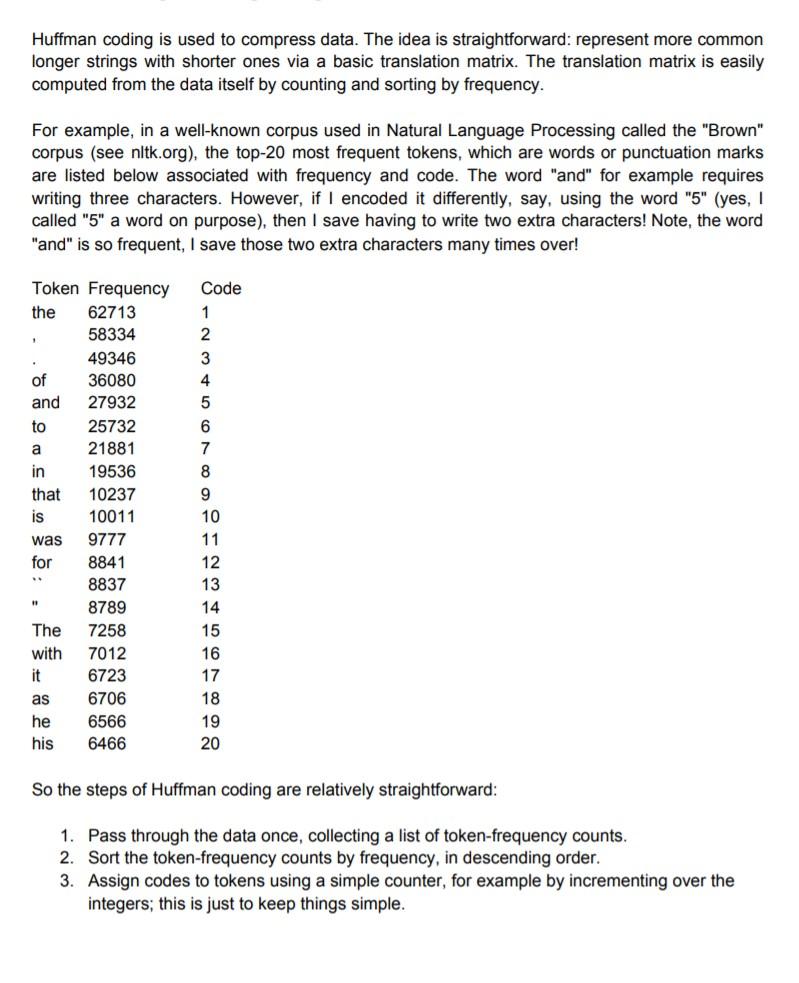

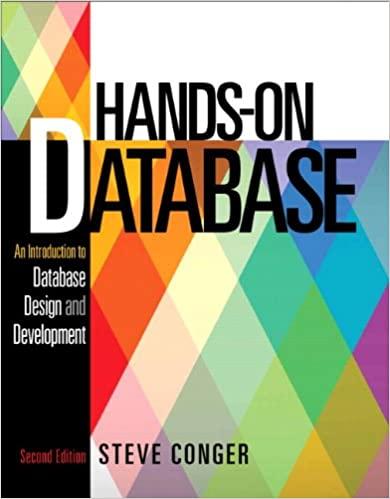

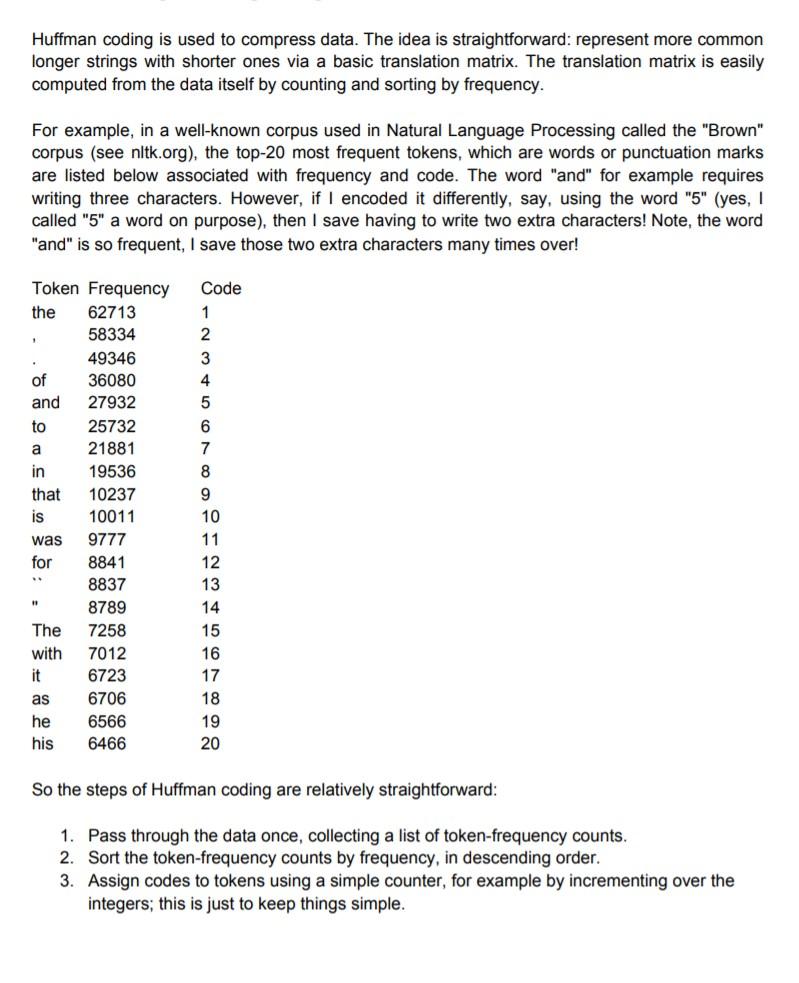

Huffman coding is used to compress data. The idea is straightforward: represent the more common, longer strings with shorter ones via a basic translation matrix. The translation matrix is easily computed from the data itself by counting tokens and sorting them by frequency.

For example, in a well-known corpus used in Natural Language Processing called the "Brown" corpus (see nltk.org), the top-20 most frequent tokens, which are words or punctuation marks, are listed below with their frequencies and codes. The word "and", for example, requires writing three characters. However, if I encoded it differently, say, using the word "5" (yes, I called "5" a word on purpose), then I save having to write two extra characters! Note that the word "and" is so frequent that I save those two extra characters many times over!

| Code | Token | Frequency |
|---|---|---|
| 1 | the | 62713 |
| 2 | (punctuation mark) | 58334 |
| 3 | (punctuation mark) | 49346 |
| 4 | of | 36080 |
| 5 | and | 27932 |
| 6 | to | 25732 |
| 7 | a | 21881 |
| 8 | in | 19536 |
| 9 | that | 10237 |
| 10 | is | 10011 |
| 11 | was | 9777 |
| 12 | for | 8841 |
| 13 | (punctuation mark) | 8837 |
| 14 | (punctuation mark) | 8789 |
| 15 | The | 7258 |
| 16 | with | 7012 |
| 17 | it | 6723 |
| 18 | as | 6706 |
| 19 | he | 6566 |
| 20 | his | 6466 |

So the steps of Huffman coding are relatively straightforward:

1. Pass through the data once, collecting a list of token-frequency counts.
2. Sort the token-frequency counts by frequency, in descending order.
3. Assign codes to tokens using a simple counter, for example by incrementing over the integers; this is just to keep things simple.
4. Store the new mapping (token -> code) in a hashtable called "encoder".
5. Store the reverse mapping (code -> token) in a hashtable called "decoder".
6. Pass through the data a second time. This time, replace all tokens with their codes.

Now, be amazed at how much you've shrunk your data!

Delivery Notes:

(1) Implement your own hashtable from scratch; you are not allowed to use existing hash table libraries.

(2) To be useful, your output should include the coded data as well as the decoder (code -> token) mapping file.
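
The six steps above can be sketched in C++ roughly as follows. This is a minimal sketch under several assumptions, not a definitive solution. It implements the scheme exactly as the assignment describes it (integer codes assigned by frequency rank), which is simpler than a textbook Huffman tree with prefix-free bit codes. The names `HashTable`, `Codec`, `tokenize`, `build_codec`, `translate`, and `write_decoder` are hypothetical, tokens are assumed to be separated by whitespace, ties in frequency are broken alphabetically so the output is deterministic, the hash table uses a fixed number of buckets with no resizing, and the tab-separated decoder file layout is an assumption as well.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <ostream>
#include <sstream>
#include <string>
#include <utility>
#include <vector>

// Hand-written separate-chaining hash table keyed by std::string.
// Simplification: the bucket count is fixed (no rehashing on growth).
template <typename V>
class HashTable {
public:
    typedef std::pair<std::string, V> Entry;

    explicit HashTable(std::size_t buckets = 1024) : buckets_(buckets) {
        assert(buckets > 0);
    }
    // Returns the value stored for key, inserting a default V() if absent.
    V& operator[](const std::string& key) {
        std::vector<Entry>& chain = buckets_[hash(key) % buckets_.size()];
        for (Entry& e : chain)
            if (e.first == key) return e.second;
        chain.push_back(Entry(key, V()));
        return chain.back().second;
    }
    // Returns a pointer to the stored value, or nullptr if key is absent.
    const V* find(const std::string& key) const {
        const std::vector<Entry>& chain = buckets_[hash(key) % buckets_.size()];
        for (const Entry& e : chain)
            if (e.first == key) return &e.second;
        return nullptr;
    }
    // Copies out every (key, value) pair, in no particular order.
    std::vector<Entry> items() const {
        std::vector<Entry> out;
        for (const std::vector<Entry>& chain : buckets_)
            out.insert(out.end(), chain.begin(), chain.end());
        return out;
    }

private:
    // 64-bit FNV-1a string hash.
    static std::size_t hash(const std::string& s) {
        unsigned long long h = 14695981039346656037ULL;
        for (unsigned char c : s) { h ^= c; h *= 1099511628211ULL; }
        return static_cast<std::size_t>(h);
    }
    std::vector<std::vector<Entry> > buckets_;
};

struct Codec {
    HashTable<std::string> encoder;  // token -> code (step 4)
    HashTable<std::string> decoder;  // code -> token (step 5)
};

// Splits text on whitespace (assumption: the data is already tokenized).
std::vector<std::string> tokenize(const std::string& text) {
    std::istringstream in(text);
    std::vector<std::string> tokens;
    for (std::string t; in >> t;) tokens.push_back(t);
    return tokens;
}

static bool by_frequency_desc(const std::pair<std::string, long>& a,
                              const std::pair<std::string, long>& b) {
    if (a.second != b.second) return a.second > b.second;
    return a.first < b.first;  // alphabetical tie-break (assumption)
}

Codec build_codec(const std::vector<std::string>& tokens) {
    HashTable<long> counts;
    for (const std::string& t : tokens) ++counts[t];          // step 1
    std::vector<std::pair<std::string, long> > freq = counts.items();
    std::sort(freq.begin(), freq.end(), by_frequency_desc);   // step 2
    Codec codec;
    long next = 1;
    for (const std::pair<std::string, long>& f : freq) {      // steps 3-5
        const std::string code = std::to_string(next++);
        codec.encoder[f.first] = code;
        codec.decoder[code] = f.first;
    }
    return codec;
}

// Step 6 (and its inverse): replaces each word by its mapping in table.
// Words missing from the table are passed through unchanged (assumption).
std::string translate(const std::vector<std::string>& words,
                      const HashTable<std::string>& table) {
    std::string out;
    for (const std::string& w : words) {
        const std::string* mapped = table.find(w);
        if (!out.empty()) out += ' ';
        out += mapped ? *mapped : w;
    }
    return out;
}

// Writes one "code<TAB>token" line per entry, in no particular order.
void write_decoder(const Codec& codec, std::ostream& out) {
    for (const std::pair<std::string, std::string>& e : codec.decoder.items())
        out << e.first << '\t' << e.second << '\n';
}
```

As a usage example, calling `build_codec(tokenize("the cat and the dog and the bird"))` and then `translate(tokens, codec.encoder)` yields `1 4 2 1 5 2 1 3`, and passing `tokenize` of that string through `translate` with `codec.decoder` restores the original sentence. To satisfy delivery note (2), the coded string and the output of `write_decoder` could each be written to a `std::ofstream`. A fixed bucket count keeps the table short here; for a corpus the size of Brown, a larger bucket count or rehashing on growth would likely be worth adding.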