Question

import numpy as np
import os
import torch
import torch.nn as nn
import torch.nn.functional as F
import matplotlib.pyplot as plt
import imageio.v2 as imageio
import time
import gdown

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
print(device)

url = "https://drive.google.com/file/d/1rD1aaxN8aSynZ8OPA7EI3G936IF0vcUt/view?usp=sharing"
gdown.download(url=url, output='starry_night.jpg', quiet=False, fuzzy=True)

# Load painting image
painting = imageio.imread("starry_night.jpg")
painting = torch.from_numpy(np.array(painting, dtype=np.float32)/255.).to(device)
height_painting, width_painting = painting.shape[:2]

def positional_encoding(x, num_frequencies=6, incl_input=True):
    """
    Apply positional encoding to the input.

    Args:
        x (torch.Tensor): Input tensor to be positionally encoded.
            The dimension of x is [N, D], where N is the number of input coordinates,
            and D is the dimension of the input coordinate.
        num_frequencies (optional, int): The number of frequencies used in
            the positional encoding (default: 6).
        incl_input (optional, bool): If True, concatenate the input with the
            computed positional encoding (default: True).

    Returns:
        (torch.Tensor): Positional encoding of the input tensor.
    """

    results = []
    if incl_input:
        results.append(x)
    ############################# TODO 1(a) BEGIN ############################
    # encode input tensor and append the encoded tensor to the list of results.

    ############################# TODO 1(a) END ##############################
    return torch.cat(results, dim=-1)

class model_2d(nn.Module):
    """
    Define a 2D model comprising of three fully connected layers,
    two relu activations and one sigmoid activation.
    """

    def __init__(self, filter_size=128, num_frequencies=6):
        super().__init__()
        ############################# TODO 1(b) BEGIN ############################
        # for autograder compliance, please follow the given naming for your layers
        self.layer_in = nn.Linear(...)
        self.layer = ...
        self.layer_out = ...

        ############################# TODO 1(b) END ##############################

    def forward(self, x):
        ############################# TODO 1(b) BEGIN ############################
        # example of forward through a layer: y = self.layer_in(x)

        ############################# TODO 1(b) END ##############################
        return x

def normalize_coord(height, width, num_frequencies=6):
    """
    Creates the 2D normalized coordinates, and applies positional encoding to them

    Args:
        height (int): Height of the image
        width (int): Width of the image
        num_frequencies (optional, int): The number of frequencies used in
            the positional encoding (default: 6).

    Returns:
        (torch.Tensor): Returns the 2D normalized coordinates after applying
            positional encoding to them.
    """

    ############################# TODO 1(c) BEGIN ############################
    # Create the 2D normalized coordinates, and apply positional encoding to them

    ############################# TODO 1(c) END ############################

    return embedded_coordinates

def train_2d_model(test_img, num_frequencies, device, model=model_2d, positional_encoding=positional_encoding, show=True):

    # Optimizer parameters
    lr = 5e-4
    iterations = 10000
    height, width = test_img.shape[:2]

    # Number of iters after which stats are displayed
    display = 2000

    # Define the model and initialize its weights.
    model2d = model(num_frequencies=num_frequencies)
    model2d.to(device)

    def weights_init(m):
        if isinstance(m, nn.Linear):
            torch.nn.init.xavier_uniform_(m.weight)

    model2d.apply(weights_init)

    ############################# TODO 1(c) BEGIN ############################
    # Define the optimizer

    ############################# TODO 1(c) END ############################

    # Seed RNG, for repeatability
    seed = 5670
    torch.manual_seed(seed)
    np.random.seed(seed)

    # Lists to log metrics etc.
    psnrs = []
    iternums = []

    t = time.time()
    t0 = time.time()

    ############################# TODO 1(c) BEGIN ############################
    # Create the 2D normalized coordinates, and apply positional encoding to them

    ############################# TODO 1(c) END ############################

    for i in range(iterations+1):
        optimizer.zero_grad()
        ############################# TODO 1(c) BEGIN ############################
        # Run one iteration

        # Compute mean-squared error between the predicted and target images. Backprop!

        ############################# TODO 1(c) END ############################

        # Display images/plots/stats
        if i % display == 0 and show:
            ############################# TODO 1(c) BEGIN ############################
            # Calculate psnr

            ############################# TODO 1(c) END ############################

            print("Iteration %d " % i, "Loss: %.4f " % loss.item(), "PSNR: %.2f" % psnr.item(), \
                  "Time: %.2f secs per iter" % ((time.time() - t) / display), "%.2f secs in total" % (time.time() - t0))
            t = time.time()

            psnrs.append(psnr.item())
            iternums.append(i)

            plt.figure(figsize=(13, 4))
            plt.subplot(131)
            plt.imshow(pred.detach().cpu().numpy())
            plt.title(f"Iteration {i}")
            plt.subplot(132)
            plt.imshow(test_img.cpu().numpy())
            plt.title("Target image")
            plt.subplot(133)
            plt.plot(iternums, psnrs)
            plt.title("PSNR")
            plt.show()

    print('Done!')
    torch.save(model2d.state_dict(),'model_2d_' + str(num_frequencies) + 'freq.pt')
    plt.imsave('van_gogh_' + str(num_frequencies) + 'freq.png',pred.detach().cpu().numpy())
    return pred.detach().cpu()

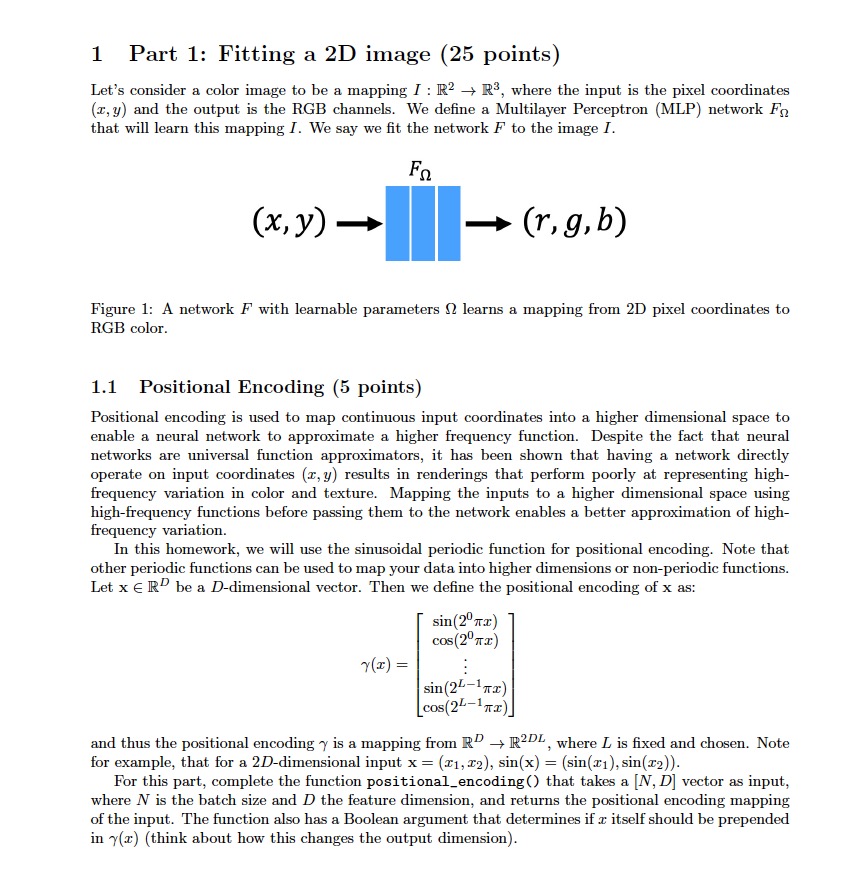

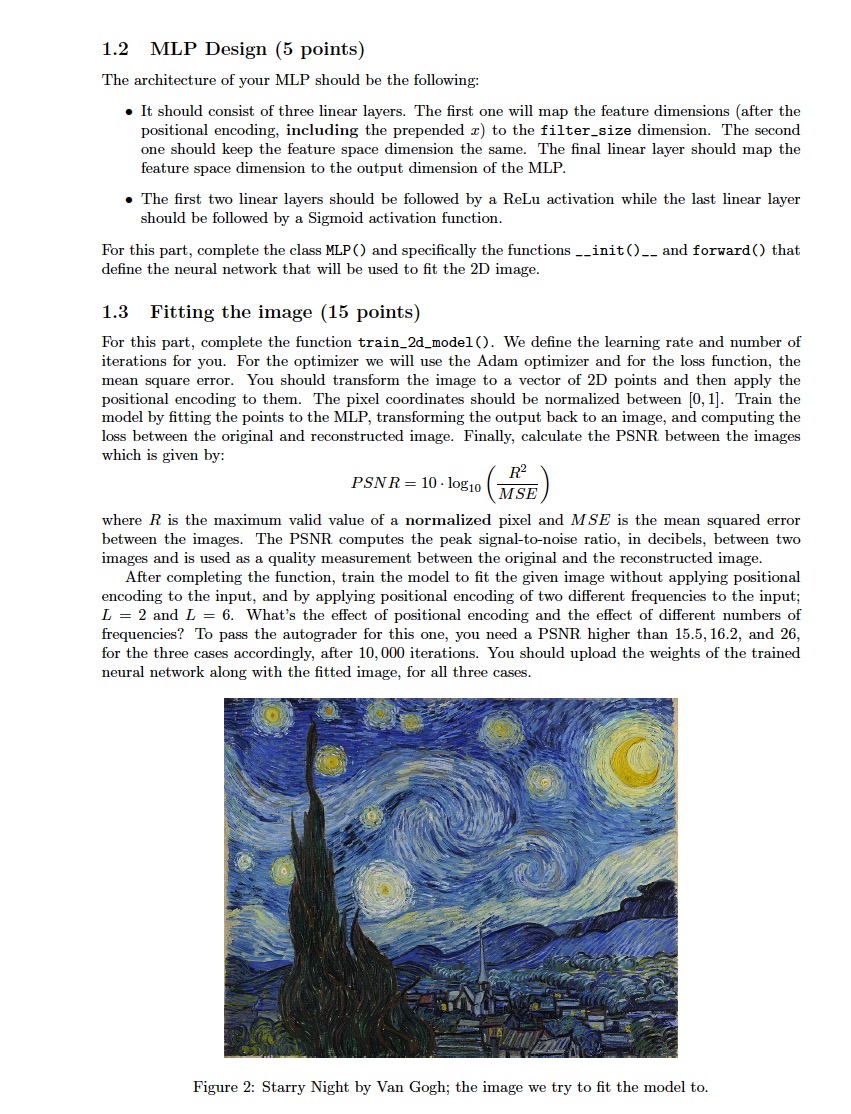

1 Part 1: Fitting a 2D image (25 points)

Let's consider a color image to be a mapping $I: \mathbb{R}^2 \to \mathbb{R}^3$, where the input is the pixel coordinates $(x, y)$ and the output is the RGB channels. We define a Multilayer Perceptron (MLP) network $F$ that will learn this mapping $I$. We say we fit the network $F$ to the image $I$.

Figure 1: A network $F$ with learnable parameters learns a mapping from 2D pixel coordinates to RGB color.

1.1 Positional Encoding (5 points)

Positional encoding is used to map continuous input coordinates into a higher-dimensional space to enable a neural network to approximate a higher-frequency function. Despite the fact that neural networks are universal function approximators, it has been shown that having a network operate directly on input coordinates $(x, y)$ results in renderings that perform poorly at representing high-frequency variation in color and texture. Mapping the inputs to a higher-dimensional space using high-frequency functions before passing them to the network enables a better approximation of high-frequency variation.

In this homework, we will use the sinusoidal periodic function for positional encoding. Note that other periodic functions, or even non-periodic functions, can also be used to map your data into higher dimensions.

Let $x \in \mathbb{R}^D$ be a $D$-dimensional vector. Then we define the positional encoding of $x$ as:

$$\gamma(x) = \left[\sin(2^0 \pi x), \cos(2^0 \pi x), \ldots, \sin(2^{L-1} \pi x), \cos(2^{L-1} \pi x)\right]$$

and thus the positional encoding is a mapping $\gamma: \mathbb{R}^D \to \mathbb{R}^{2DL}$, where $L$ is fixed and chosen. Note, for example, that for a two-dimensional input $x = (x_1, x_2)$, $\sin(x) = (\sin(x_1), \sin(x_2))$.

For this part, complete the function positional_encoding() that takes an [N, D] tensor as input, where N is the batch size and D the feature dimension, and returns the positional encoding of the input. The function also has a Boolean argument that determines whether $x$ itself should be prepended to $\gamma(x)$ (think about how this changes the output dimension).
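As a rough illustration of the encoding described in Section 1.1, the sketch below builds the sine and cosine features one frequency at a time. The function name positional_encoding_sketch is hypothetical, and the factor of pi in each frequency is an assumption that follows the common NeRF convention; drop it if your course notes define the frequencies without it.

```python
import math
import torch


def positional_encoding_sketch(x, num_frequencies=6, incl_input=True):
    """Sinusoidal encoding of an [N, D] tensor (hypothetical helper, not the graded one)."""
    # Optionally keep the raw coordinates as the first D output columns.
    results = [x] if incl_input else []
    for k in range(num_frequencies):
        # Assumption: frequency 2^k * pi, as in the usual NeRF formulation.
        freq = (2.0 ** k) * math.pi
        results.append(torch.sin(freq * x))  # D columns
        results.append(torch.cos(freq * x))  # D columns
    return torch.cat(results, dim=-1)


coords = torch.rand(5, 2)
# With the input prepended: D + 2*D*L = 2 + 2*2*6 = 26 columns.
print(positional_encoding_sketch(coords, num_frequencies=6).shape)
# Without the input: 2*D*L = 24 columns.
print(positional_encoding_sketch(coords, 6, incl_input=False).shape)
```

With incl_input=True and num_frequencies=0 the function returns the raw coordinates unchanged, which is one way to run the "no positional encoding" case through the same code path.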
1.2 MLP Design (5 points)

The architecture of your MLP should be the following:

- It should consist of three linear layers. The first one maps the feature dimension (after the positional encoding, including the prepended $x$) to the filter_size dimension. The second one keeps the feature dimension the same. The final linear layer maps the feature dimension to the output dimension of the MLP.
- The first two linear layers should each be followed by a ReLU activation, while the last linear layer should be followed by a Sigmoid activation.

For this part, complete the MLP class (named model_2d in the starter code), specifically the functions __init__() and forward(), which define the neural network that will be used to fit the 2D image.

1.3 Fitting the image (15 points)

For this part, complete the function train_2d_model(). We define the learning rate and number of iterations for you. For the optimizer we will use Adam, and for the loss function the mean squared error. You should transform the image into a vector of 2D points and then apply the positional encoding to them. The pixel coordinates should be normalized to the range [0, 1]. Train the model by passing the points through the MLP, reshaping the output back into an image, and computing the loss between the original and reconstructed image. Finally, calculate the PSNR between the images, which is given by:

$$\mathrm{PSNR} = 10 \log_{10}\left(\frac{R^2}{\mathrm{MSE}}\right)$$

where $R$ is the maximum valid value of a normalized pixel and MSE is the mean squared error between the images. The PSNR is the peak signal-to-noise ratio, in decibels, between two images and is used as a quality measurement between the original and the reconstructed image.

After completing the function, train the model to fit the given image without applying positional encoding to the input, and with positional encoding at two different numbers of frequencies, $L = 2$ and $L = 6$. What is the effect of positional encoding, and what is the effect of different numbers of frequencies?
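The three-layer architecture described in Section 1.2 might look roughly like the sketch below. The class name MLPSketch and the in_dim and out_dim parameters are hypothetical; the layer names layer_in, layer, and layer_out are taken from the starter code. The input width assumes the raw coordinates are prepended to the encoding.

```python
import torch
import torch.nn as nn


class MLPSketch(nn.Module):
    """Three linear layers: ReLU after the first two, Sigmoid after the last."""

    def __init__(self, filter_size=128, num_frequencies=6, in_dim=2, out_dim=3):
        super().__init__()
        # Assumption: raw coordinates are prepended, so the encoded width is
        # in_dim (raw) + 2 * in_dim * num_frequencies (one sin and one cos each).
        encoded_dim = in_dim + 2 * in_dim * num_frequencies
        self.layer_in = nn.Linear(encoded_dim, filter_size)
        self.layer = nn.Linear(filter_size, filter_size)
        self.layer_out = nn.Linear(filter_size, out_dim)

    def forward(self, x):
        x = torch.relu(self.layer_in(x))
        x = torch.relu(self.layer(x))
        # Sigmoid keeps the predicted RGB values inside [0, 1].
        return torch.sigmoid(self.layer_out(x))


model = MLPSketch(num_frequencies=6)
rgb = model(torch.rand(4, 26))  # 26 = 2 + 2*2*6 encoded features per point
print(rgb.shape)
```

The Sigmoid output matches the image preprocessing in the starter code, where pixel values are divided by 255 and therefore lie in [0, 1].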
To pass the autograder for this part, you need a PSNR higher than 15.5, 16.2, and 26 for the three cases respectively, after 10,000 iterations. You should upload the weights of the trained neural network along with the fitted image, for all three cases.

Figure 2: Starry Night by Van Gogh; the image we try to fit the model to.
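To make the fitting procedure of Section 1.3 more concrete, here is a small sketch of the normalized coordinate grid, the PSNR formula, and a few Adam steps on a random stand-in image. The helper names normalized_pixel_grid and psnr are hypothetical, the endpoint-inclusive linspace normalization is one assumption among several valid ways to map pixels to [0, 1], and the tiny two-layer network is only a placeholder for the real model, used here without positional encoding.

```python
import torch


def normalized_pixel_grid(height, width):
    """Return an [H*W, 2] tensor of (x, y) coordinates scaled to [0, 1]."""
    ys = torch.linspace(0.0, 1.0, height)
    xs = torch.linspace(0.0, 1.0, width)
    grid_y, grid_x = torch.meshgrid(ys, xs, indexing="ij")
    # Row-major flattening, so reshape(height, width, ...) restores the image layout.
    return torch.stack([grid_x, grid_y], dim=-1).reshape(-1, 2)


def psnr(pred, target, max_val=1.0):
    """PSNR in decibels: 10 * log10(R^2 / MSE), with R = max_val."""
    mse = torch.mean((pred - target) ** 2)
    return 10.0 * torch.log10(max_val ** 2 / mse)


torch.manual_seed(0)
target = torch.rand(8, 8, 3)           # stand-in image, not the painting
coords = normalized_pixel_grid(8, 8)   # raw coordinates, no encoding in this toy run
net = torch.nn.Sequential(             # placeholder network, not the graded model
    torch.nn.Linear(2, 32), torch.nn.ReLU(),
    torch.nn.Linear(32, 3), torch.nn.Sigmoid(),
)
optimizer = torch.optim.Adam(net.parameters(), lr=5e-4)

for step in range(200):
    optimizer.zero_grad()
    pred = net(coords).reshape(8, 8, 3)  # points back to image layout
    loss = torch.nn.functional.mse_loss(pred, target)
    loss.backward()
    optimizer.step()

print(f"PSNR after 200 steps: {psnr(pred.detach(), target).item():.2f} dB")
```

Because the pixels are normalized to [0, 1], R is 1 and the PSNR reduces to -10 * log10(MSE), so a lower loss translates directly into a higher PSNR.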
